Pick and Place Machine Handles Irregular Objects using Novel Vision-guided Gripper

Combining a sophisticated gripping mechanism with machine vision allows packing systems to handle disparate objects

Rainer Bruns, Björn Cleves, and Lutz Kreutzer

Pick-and-place robots have been used for a number of years in diverse applications such as PCB manufacturing and food packaging. In most of these systems, the size, shape, and surface characteristics of the objects to be handled are well known. Consequently, system developers can choose from a range of robotic grippers specifically designed to handle individual products.

In many other applications, however, the irregular nature of objects such as light bulbs, bottles, glassware, linen, and books requires the development of specialized handling equipment. To avoid the development costs these requirements incur, the Helmut-Schmidt-University (Hamburg, Germany; www.hsu-hh.de) has developed a flexible gripper that can accommodate many different objects. By combining this gripper with off-the-shelf machine-vision cameras and software, an automated packaging system can pick and place disparate objects.

Today, many automation setups use 3-D laser sensors to measure objects. In these systems, the measured geometry of each object is compared with a 3-D CAD model so that the pick-and-place system can accurately determine its position and orientation. Flexible packages such as bags or sacks, however, have no fixed shape that can be compared with a CAD model. Handling them requires more specialized picking systems that couple sophisticated handling mechanisms with robotic vision.

In the flexible gripper, a double-walled hose is filled with a fluid such as water or air. This hose is glued into a cylindrical ferrule that forms the outer part of the gripper. To pick an object, the underside of the hose is placed over the object so that it partially covers the object's surface. Raising the plunger embedded in the cap of the ferrule then draws the hose, and with it the object, into the gripper. The object is released by lowering the plunger (see Fig. 1).

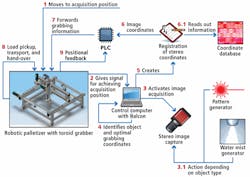

Before the flexible gripper can pick an object, the object's position and orientation must be measured. To do so, the system developed at the Helmut-Schmidt-University uses noncontact stereo measurement, first to create an elevation profile of the objects and then to segment individual objects.

Elevation profiles

To create an elevation profile, five G-Cam5 16C GigE Vision color cameras from MaxxVision (Stuttgart, Germany; www.maxxvision.com) were mounted in parallel over a robotic palletizer from Berger Lahr (Lahr, Germany; www.schneider-electric-motion.com). To illuminate objects in the cameras’ field of view, two LED line lights from Lumitronix (Hechingen, Germany; www.leds.de)—each with 60 surface-mount LEDs and a luminous flux of 1200 lm—were mounted on the palletizer (see Fig. 2).

FIGURE 2. To locate objects, five GigE Vision cameras are mounted in parallel over a robotic palletizer. By computing an elevation profile and using image segmentation techniques, the height, shape, and location of objects in the robot’s field of view can be computed.

With the cameras mounted 200 mm apart, the images of adjacent cameras overlap, allowing the height of an object to be calculated by a binocular stereo operator included in the Halcon image-processing library from MVTec Software. To calibrate the cameras and the four stereo pairs they form, a calibration plate was imaged in 21 different positions and the images were evaluated with Halcon's camera calibration algorithms.
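Whether adjacent cameras overlap depends on how their spacing compares with the width of the scene each one sees. The Python sketch below estimates that overlap, assuming an ideal pinhole camera looking straight down. Only the five cameras and the 200 mm spacing come from the article; the 1000 mm mounting distance and 40° field of view are hypothetical values chosen for illustration.

```python
import math


def footprint_width(distance_mm: float, fov_deg: float) -> float:
    """Width of the base-plane strip one downward-looking pinhole camera sees."""
    return 2.0 * distance_mm * math.tan(math.radians(fov_deg) / 2.0)


def overlap_fraction(baseline_mm: float, distance_mm: float, fov_deg: float) -> float:
    """Fraction of one camera's footprint that its neighbor also sees (0 if none)."""
    width = footprint_width(distance_mm, fov_deg)
    return max(0.0, (width - baseline_mm) / width)


def stereo_pairs(num_cameras: int) -> list[tuple[int, int]]:
    """Adjacent cameras in a single row form the stereo pairs."""
    return [(i, i + 1) for i in range(num_cameras - 1)]


# From the article: five cameras, 200 mm apart.
# Hypothetical: mounting distance above the base plane and field of view.
BASELINE_MM = 200.0
DISTANCE_MM = 1000.0
FOV_DEG = 40.0

print(stereo_pairs(5))
print(f"{footprint_width(DISTANCE_MM, FOV_DEG):.0f} mm wide, "
      f"{overlap_fraction(BASELINE_MM, DISTANCE_MM, FOV_DEG):.0%} overlap")
```

With these assumed values, the script prints the four pairs `[(0, 1), (1, 2), (2, 3), (3, 4)]` and `728 mm wide, 73% overlap`. Objects standing on the pallet are closer to the cameras, so the overlap at their top surfaces is somewhat smaller than this base-plane estimate.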

To calculate the height, a gray-value correlation analysis is performed for each of the four stereo pairs to find corresponding points in the two images. Because the pixel offset between corresponding points, the calibrated camera geometry, and the distance between the cameras are known, the elevation profile can then be calculated geometrically as a matrix whose gray values represent height.
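Halcon's binocular stereo operator performs both steps internally. As an illustration only, and not Halcon's implementation, the pure-Python sketch below shows the principle on one row of a rectified image pair. Normalized gray-value correlation finds the pixel offset (disparity) of a window, and the standard triangulation relation Z = f·B/d converts that disparity into depth and then into height above the base plane. The focal length in pixels, the base-plane distance, and the window size are hypothetical.

```python
import math


def ncc(a: list[float], b: list[float]) -> float:
    """Normalized cross-correlation of two equal-length gray-value windows."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # textureless window: correlation undefined, treat as no match
    return sum(x * y for x, y in zip(da, db)) / denom


def best_disparity(left_row, right_row, x, half_win, max_disp):
    """Search the same row of the right image for the window that best matches left_row at x."""
    ref = left_row[x - half_win : x + half_win + 1]
    best_d, best_score = 0, -2.0
    for d in range(max_disp + 1):
        xr = x - d  # a point at x in the left image appears at x - d in the right image
        if xr - half_win < 0:
            break
        score = ncc(ref, right_row[xr - half_win : xr + half_win + 1])
        if score > best_score:
            best_d, best_score = d, score
    return best_d, best_score


def height_above_base(disparity_px, focal_px, baseline_mm, base_distance_mm):
    """Triangulate depth Z = f * B / d, then convert it to height above the base plane."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    depth_mm = focal_px * baseline_mm / disparity_px
    return base_distance_mm - depth_mm


# Toy rectified rows: the right row is the left row shifted by 4 pixels.
left = [10.0] * 10 + [80.0, 20.0, 60.0] + [10.0] * 7
right = left[4:] + [10.0] * 4

d, score = best_disparity(left, right, x=11, half_win=2, max_disp=8)
print(d)  # 4
# Hypothetical optics: f = 1200 px, 200 mm baseline, base plane 1000 mm away.
print(height_above_base(300, 1200.0, 200.0, 1000.0))  # 200.0
```

In a real system the search runs for every pixel of both rectified images, which is why the calibration of all four stereo pairs matters: rectification is what allows the search to stay on a single row.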

Objects with low-contrast surfaces are particularly challenging because they lack the distinctive points that the correlation analysis needs. To overcome this, a Flexpoint laser pattern generator module from Laser Components (Olching, Germany; www.lasercomponents.com) projects a laser dot matrix with a dot spacing of approximately 5 mm onto the surface.
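Correlation can only match a window whose gray values vary. The plain-Python sketch below checks this with the variance of a window and estimates how wide a correlation window must be to span at least one full period of the projected dot pattern. The 5 mm dot spacing comes from the article; the image resolution of 0.5 mm per pixel and the variance threshold are hypothetical.

```python
import math
from statistics import pvariance


def has_texture(window: list[float], min_variance: float = 1.0) -> bool:
    """A window with (near) zero gray-value variance gives correlation nothing to match."""
    return pvariance(window) >= min_variance


def min_window_px(dot_spacing_mm: float, mm_per_px: float) -> int:
    """Smallest odd window width (pixels) that spans at least one full dot period."""
    period_px = dot_spacing_mm / mm_per_px
    width = math.ceil(period_px)
    return width if width % 2 == 1 else width + 1


# A plain, uniformly lit carton face versus the same face with one projected dot.
plain = [120.0] * 11
dotted = [120.0] * 11
dotted[5] = 250.0  # one bright laser dot inside the window

print(has_texture(plain), has_texture(dotted))  # False True
print(min_window_px(5.0, 0.5))  # 11
```

An odd width keeps the window centered on the pixel being measured; coarser image resolution shrinks the dot period in pixels and therefore the required window.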

To avoid reflections and to detect transparent packing materials, an ultrasonic nebulizer from Hirtz & Co (Cologne, Germany; www.hico.de) sprays the package with a thin, nondestructive water mist.

Image segmentation

To perform image segmentation, objects with known surfaces are matched against template models using correspondence analysis. Unknown objects are segmented with edge-based and region-based methods. Edge-based methods detect edges using gray-value correlation within the elevation profile.
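A simple way to find object boundaries in an elevation profile is to look for height jumps between neighboring cells. The sketch below does this by thresholding height steps between 4-neighbors. It is a simplified stand-in, not the correlation-based edge detection used in the Helmut-Schmidt-University system, and the 10 mm step threshold and the example profile are hypothetical.

```python
def height_edges(profile: list[list[float]], min_step_mm: float) -> set[tuple[int, int]]:
    """Mark both cells of every 4-neighbor pair whose heights differ by more than min_step_mm."""
    rows, cols = len(profile), len(profile[0])
    edges: set[tuple[int, int]] = set()
    for r in range(rows):
        for c in range(cols):
            # Checking only the right and lower neighbors visits each pair once.
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < rows and cc < cols and abs(profile[r][c] - profile[rr][cc]) > min_step_mm:
                    edges.add((r, c))
                    edges.add((rr, cc))
    return edges


# Hypothetical 4 x 5 elevation profile (mm): one 50 mm box on a flat pallet.
profile = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 50.0, 50.0, 0.0],
    [0.0, 0.0, 50.0, 50.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
edges = height_edges(profile, min_step_mm=10.0)
print(sorted(edges))
```

The result marks the box cells and the floor cells next to them. On noisy data, a fixed threshold like this is what tends to leave gaps in the boundary.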

Edge-based methods are fast but often produce incomplete edges. More computationally intensive region-based methods subdivide the elevation profile into homogeneous areas of the same height or the same surface gradient.
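Region-based segmentation can be sketched as region growing. Starting from an unlabeled cell, each neighbor is added to the same region if its height differs from the current cell by no more than a tolerance. The Python sketch below uses a hypothetical 5 mm tolerance and treats cells at height 0 as the pallet. It implements only the same-height criterion; because the test is applied between neighboring cells, gently sloping surfaces also form one region. A surface-gradient criterion would compare local slopes instead.

```python
from collections import deque


def grow_regions(profile: list[list[float]], max_step_mm: float, base_height_mm: float = 0.0):
    """Label 4-connected regions whose neighboring heights differ by at most max_step_mm.

    Cells at or below base_height_mm are treated as the pallet and keep label 0.
    Returns the label grid and the number of regions found.
    """
    rows, cols = len(profile), len(profile[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = 1
    for r0 in range(rows):
        for c0 in range(cols):
            if labels[r0][c0] or profile[r0][c0] <= base_height_mm:
                continue
            labels[r0][c0] = next_label
            queue = deque([(r0, c0)])
            while queue:  # breadth-first growth from the seed cell
                r, c = queue.popleft()
                for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    if (0 <= rr < rows and 0 <= cc < cols
                            and not labels[rr][cc]
                            and profile[rr][cc] > base_height_mm
                            and abs(profile[rr][cc] - profile[r][c]) <= max_step_mm):
                        labels[rr][cc] = next_label
                        queue.append((rr, cc))
            next_label += 1
    return labels, next_label - 1


# Hypothetical profile (mm): two touching boxes, 50 mm and 120 mm high.
profile = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 50.0, 50.0, 120.0, 120.0, 0.0],
    [0.0, 50.0, 51.0, 120.0, 119.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
]
labels, count = grow_regions(profile, max_step_mm=5.0)
print(count)  # 2
for row in labels:
    print(row)
```

Unlike the edge test, region growing always yields closed regions, which is why the two touching boxes come out as separate objects even though they share a boundary.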

Together, these segmentation techniques allow both known and unknown objects to be identified. If a segmented region matches a known template, for example, the object is identified directly.
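Matching a segmented region against known objects can be illustrated with a much simpler comparison than Halcon's template matching: the measured footprint and height are compared with a catalog of known dimensions. The catalog entries, the 5 mm tolerance, and the names `Template` and `match_template` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Template:
    name: str
    length_mm: float
    width_mm: float
    height_mm: float


def match_template(length_mm: float, width_mm: float, height_mm: float,
                   templates: list[Template], tol_mm: float = 5.0) -> Optional[str]:
    """Return the closest template within tol_mm on every dimension, or None if unknown."""
    # Sort the footprint sides so an object rotated by 90 degrees still matches.
    meas = (max(length_mm, width_mm), min(length_mm, width_mm), height_mm)
    best, best_err = None, float("inf")
    for t in templates:
        ref = (max(t.length_mm, t.width_mm), min(t.length_mm, t.width_mm), t.height_mm)
        err = max(abs(m - r) for m, r in zip(meas, ref))
        if err <= tol_mm and err < best_err:
            best, best_err = t.name, err
    return best


CATALOG = [
    Template("shoe box", 330.0, 200.0, 120.0),
    Template("book", 240.0, 170.0, 30.0),
]
print(match_template(198.0, 331.0, 119.0, CATALOG))  # shoe box
print(match_template(400.0, 300.0, 80.0, CATALOG))   # None
```

A `None` result is the case the article hands on to the neural-network classification of unknown objects.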

To identify unknown objects, their geometric parameters and color information are used. These features are fed to the input layer of a neural network, also implemented in Halcon, which assigns the object to an object class. The network is retrained with every new object, so its classification quality improves over time.
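The article does not specify the network architecture. As a hedged illustration of incremental retraining, the sketch below uses the simplest possible neural network, a single softmax layer, and performs one gradient step on the cross-entropy loss each time a labeled object is added. It is not Halcon's classifier; the feature scaling (height, footprint area, and mean hue normalized to roughly 0 to 1) and the example classes are hypothetical.

```python
import math


class OnlineSoftmaxClassifier:
    """Single-layer neural network (softmax regression) updated one object at a time."""

    def __init__(self, num_features: int, classes: list[str], learning_rate: float = 0.5):
        self.classes = list(classes)
        self.lr = learning_rate
        # One weight vector per class; the last weight of each vector is the bias.
        self.w = [[0.0] * (num_features + 1) for _ in self.classes]

    def probabilities(self, x: list[float]) -> list[float]:
        xb = list(x) + [1.0]
        scores = [sum(wi * xi for wi, xi in zip(w, xb)) for w in self.w]
        m = max(scores)  # subtract the maximum for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        return [e / total for e in exps]

    def predict(self, x: list[float]) -> str:
        p = self.probabilities(x)
        return self.classes[p.index(max(p))]

    def update(self, x: list[float], label: str) -> None:
        """One stochastic gradient step on the cross-entropy loss for a labeled object."""
        p = self.probabilities(x)
        xb = list(x) + [1.0]
        target = self.classes.index(label)
        for k, w in enumerate(self.w):
            err = p[k] - (1.0 if k == target else 0.0)
            for j in range(len(w)):
                w[j] -= self.lr * err * xb[j]


# Hypothetical features: [height / 500 mm, footprint area / 1e5 mm^2, mean hue / 360 deg]
examples = [
    ([0.60, 0.05, 0.33], "bottle"),
    ([0.06, 0.40, 0.10], "book"),
    ([0.20, 0.90, 0.60], "bag"),
]

clf = OnlineSoftmaxClassifier(num_features=3, classes=["bottle", "book", "bag"])
for _ in range(200):  # replay the identified objects repeatedly
    for features, label in examples:
        clf.update(features, label)

for features, label in examples:
    print(label, "->", clf.predict(features))
```

Because each update uses only the newest object, the classifier can keep learning while the system runs; a deeper network with a hidden layer would follow the same update pattern with backpropagation.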

After identification, the measured height and position of each object are transferred to the robotic palletizer for picking. With the flexible gripper mounted on the palletizer's x-y-z stage, the vision-guided robotic system can pick and place many different objects.
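Before the palletizer can pick an object, the segmented region has to be converted into a target position. The sketch below takes the region's centroid in the elevation-profile grid as the x-y target and its highest point as the z value. It assumes, hypothetically, that the grid is axis-aligned with the palletizer and has a known cell size and origin; a real system would take this transformation from the camera calibration.

```python
from dataclasses import dataclass


@dataclass
class PickPoint:
    x_mm: float
    y_mm: float
    z_mm: float  # top-surface height above the base plane


def pick_point(labels, profile, label, mm_per_cell, origin_mm=(0.0, 0.0)):
    """Centroid of one labeled region in palletizer x-y, plus its highest point as z.

    Hypothetical calibration: the grid is axis-aligned with the palletizer,
    cell (0, 0) lies at origin_mm, columns run along x and rows along y.
    """
    cells = [(r, c) for r, row in enumerate(labels)
             for c, v in enumerate(row) if v == label]
    if not cells:
        raise ValueError(f"no cells carry label {label}")
    mean_r = sum(r for r, _ in cells) / len(cells)
    mean_c = sum(c for _, c in cells) / len(cells)
    return PickPoint(
        x_mm=origin_mm[0] + mean_c * mm_per_cell,
        y_mm=origin_mm[1] + mean_r * mm_per_cell,
        z_mm=max(profile[r][c] for r, c in cells),
    )


labels = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
profile = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 50.0, 52.0, 0.0],
    [0.0, 50.0, 51.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
print(pick_point(labels, profile, label=1, mm_per_cell=2.0, origin_mm=(100.0, 200.0)))
# PickPoint(x_mm=103.0, y_mm=203.0, z_mm=52.0)
```

Using the highest cell as z lets the hose be lowered onto the top of the object without colliding with it; how far the gripper then descends over the object is a separate, application-specific choice.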


Prof. Dr.-Ing. Rainer Bruns is head of the department of mechanical engineering and Björn Cleves is a member of the department of mechanical engineering at Helmut-Schmidt-University (Hamburg, Germany); Lutz Kreutzer is manager—PR and marketing at MVTec Software (Munich, Germany; www.mvtec.com).