Sophisticated Surveillance

Coupling Gigabit Ethernet cameras, GPS, and INS equipment to an onboard computer allows high-resolution airborne surveillance images to be captured and analyzed

Ross McNutt and John Egri

Many of today’s wide-area persistent surveillance systems produce images with low resolution and narrow fields of view. For airborne patrols and surveillance of large areas, current aerial surveillance sensors such as gimbal-mounted cameras must often be manually positioned to obtain optimal image quality.

To provide both wide-area coverage and the ability to extract fine detail, such as the movements of individual targets within specific areas, Persistent Surveillance Systems has developed an airborne vision system with onboard image-processing functions that include image stabilization, geo-rectification, image stitching, and compression for transmitting live images to a base station.

With the system, ground-based personnel can zoom into portions of the images in real time, making it useful for security surveillance, traffic management, and law enforcement.

Multiple cameras

The Persistent Surveillance HawkEye consists of an airborne image-acquisition system and a ground-based user system for image recording, display, and post-processing. It acquires image data using an array of eight Imperx Gigabit Ethernet cameras, either 11-Mpixel monochrome or 16-Mpixel models. In the gimbal-mounted array on the aircraft, the cameras’ individual fields of view are arranged to collectively cover a square area of ground (see Fig. 1).

While the system acquires images at 1 frame/s, the aircraft circles at an altitude of 4000 to 12,000 ft, yielding a composite field of view that can cover a ground area as large as 4 × 4 miles with minimal overlap between the individual camera images. The result is a maximum effective resolution of 16,384 × 16,384 pixels, at which an individual pixel covers a square of ground about 1.25 ft on a side (see Fig. 2).
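As a rough check on these figures, the ground distance covered by one pixel is simply the side of the covered square divided by the number of pixels across it. The short Python sketch below does that arithmetic for the quoted 4-mile side and 16,384-pixel width. The function name is hypothetical, and the calculation ignores terrain relief and viewing-angle effects.

```python
FEET_PER_MILE = 5280


def ground_sample_distance_ft(side_miles: float, pixels_across: int) -> float:
    """Ground distance spanned by one pixel, in feet, for a square footprint."""
    if pixels_across <= 0:
        raise ValueError("pixels_across must be positive")
    return side_miles * FEET_PER_MILE / pixels_across


gsd = ground_sample_distance_ft(4, 16_384)
area_sq_miles = 4 * 4
print(f"{gsd:.2f} ft per pixel over {area_sq_miles} square miles")
# 1.29 ft per pixel over 16 square miles
```

The result, about 1.29 ft, is in line with the approximate 1.25-ft figure quoted above.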

To preprocess images, the cameras provide automatic gain control (AGC) and lookup-table (LUT)-based contrast enhancement. An onboard image processor then provides frame-to-frame image stabilization for each individual camera feed. To create the composite image, an image-stitching algorithm is used in conjunction with geo-rectification to correct for image distortions arising from variations in terrain height and camera viewing angle.
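The article does not describe HawkEye’s enhancement or stabilization algorithms. As a hedged illustration only, the Python sketch below shows two textbook techniques of the kind mentioned: a lookup table that applies a linear contrast stretch, and a brute-force estimate of the integer translation between consecutive frames from one camera, which a stabilizer would then undo. All names and parameters are hypothetical, and a real system working on multi-megapixel frames would use much faster methods, such as phase correlation.

```python
import random


def build_stretch_lut(low: int, high: int, max_value: int = 255) -> list[int]:
    """Linear contrast stretch: map [low, high] onto [0, max_value], clipping outside."""
    if not 0 <= low < high <= max_value:
        raise ValueError("require 0 <= low < high <= max_value")
    lut = []
    for v in range(max_value + 1):
        if v <= low:
            lut.append(0)
        elif v >= high:
            lut.append(max_value)
        else:
            lut.append(round((v - low) * max_value / (high - low)))
    return lut


def apply_lut(frame, lut):
    """Replace every pixel with its table entry (one lookup per pixel)."""
    return [[lut[p] for p in row] for row in frame]


def estimate_shift(ref, cur, max_shift: int = 3):
    """Return the integer (dx, dy) such that cur[y + dy][x + dx] best matches ref[y][x].

    Exhaustive search over shifts up to max_shift, minimizing the mean absolute
    difference over the overlapping region.
    """
    h, w = len(ref), len(ref[0])
    best_cost, best_dx, best_dy = float("inf"), 0, 0
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total = count = 0
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    total += abs(ref[y][x] - cur[y + dy][x + dx])
                    count += 1
            if count and total / count < best_cost:
                best_cost, best_dx, best_dy = total / count, dx, dy
    return best_dx, best_dy


rng = random.Random(0)
ref = [[rng.randrange(256) for _ in range(12)] for _ in range(12)]
# Simulated platform jitter: scene content moves 2 px right and 1 px down.
cur = [[ref[y - 1][x - 2] if y >= 1 and x >= 2 else 0 for x in range(12)] for y in range(12)]
dx, dy = estimate_shift(ref, cur)
print(dx, dy)
```

Shifting the current frame back by the estimated (dx, dy) registers it to the previous one; the contrast table is built once and then costs a single lookup per pixel.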

Geo-rectification begins with the airborne system collecting navigation data from both a global positioning system (GPS) and an inertial navigation system (INS) onboard the aircraft. Using this positioning and navigation information, HawkEye draws from an onboard mapping database to match the image to the map with an accuracy of 20 to 30 m. The system then extracts the terrain elevations needed to calculate the corrections. HawkEye also transmits the navigation data to the ground system to let operators identify landmarks by name or street reference.
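To make the role of terrain elevation concrete, the Python sketch below projects one pixel onto the ground from the aircraft position and attitude, then iterates against a terrain-height function, which stands in for the onboard mapping database. It assumes an ideal pinhole camera with no lens distortion, a local east-north-up frame in meters, a camera that looks straight down (image top toward north) at zero attitude, and yaw-pitch-roll rotation order. These conventions and all names are assumptions for illustration, not HawkEye’s method.

```python
import math


def _rotation(roll, pitch, yaw):
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians."""
    c_r, s_r = math.cos(roll), math.sin(roll)
    c_p, s_p = math.cos(pitch), math.sin(pitch)
    c_y, s_y = math.cos(yaw), math.sin(yaw)
    return [
        [c_y * c_p, c_y * s_p * s_r - s_y * c_r, c_y * s_p * c_r + s_y * s_r],
        [s_y * c_p, s_y * s_p * s_r + c_y * c_r, s_y * s_p * c_r - c_y * s_r],
        [-s_p, c_p * s_r, c_p * c_r],
    ]


def pixel_to_ground(u, v, cam_e, cam_n, cam_alt, roll, pitch, yaw,
                    cx, cy, focal_px, terrain, iterations=10, tol=0.01):
    """Intersect the ray through pixel (u, v) with the terrain; returns (east, north, elev)."""
    ray_cam = (u - cx, -(v - cy), -focal_px)  # image x -> east, image y (down) -> south
    r = _rotation(roll, pitch, yaw)
    d = [sum(r[i][j] * ray_cam[j] for j in range(3)) for i in range(3)]
    if d[2] >= 0:
        raise ValueError("pixel ray does not point toward the ground")
    h = terrain(cam_e, cam_n)  # first guess: elevation directly below the aircraft
    for _ in range(max(1, iterations)):
        t = (h - cam_alt) / d[2]  # ray parameter where it reaches elevation h
        e, n = cam_e + t * d[0], cam_n + t * d[1]
        new_h = terrain(e, n)  # look up the elevation actually found there
        if abs(new_h - h) < tol:
            break
        h = new_h
    return e, n, new_h


flat = lambda e, n: 0.0
plateau = lambda e, n: 200.0
print(pixel_to_ground(612, 512, 0, 0, 1000, 0, 0, 0, 512, 512, 1000, flat))
print(pixel_to_ground(612, 512, 0, 0, 1000, 0, 0, 0, 512, 512, 1000, plateau))
```

With the camera 1000 m up, the same pixel lands 100 m east over flat ground but only 80 m east over a 200-m plateau. That relief displacement is the error geo-rectification corrects using terrain elevations.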

Image stitching

Handling image stitching and geo-rectification for such a large composite image requires considerable CPU time. On the processors used in the airborne system, the total CPU time needed to process a single image is 3 to 5 s, including the compression needed to reduce the data rate to 300 Mbits/s for transmitting live video to a ground receiving station. Because a new frame arrives every second while each frame needs 3 to 5 s of processing, several frames (up to five) must be in process at the same time. To sustain the 1-frame/s capture rate and provide operators with live video feeds, the airborne computer system is therefore designed as a multiprocessor in a pipeline configuration.
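The need for a pipeline follows from the numbers: if one frame takes up to 5 s of processing and a new frame arrives every second, the work must be divided so that no single step exceeds one frame period, which implies at least five stages. The Python sketch below shows that arithmetic and a minimal staged pipeline, with one thread per stage connected by bounded queues so frames leave in the order they arrived. The stage functions are hypothetical placeholders. The article does not describe HawkEye’s actual stage split or processor count, and a real system would run stages on separate processors rather than Python threads.

```python
import math
import queue
import threading

_DONE = object()  # sentinel marking the end of the frame stream


def min_pipeline_stages(seconds_per_frame: float, frame_period_s: float) -> int:
    """Fewest equal stages such that no stage takes longer than one frame period."""
    return math.ceil(seconds_per_frame / frame_period_s)


def _stage(func, inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Apply one processing step to each frame, preserving arrival order."""
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)
            return
        outbox.put(func(item))


def run_pipeline(frames, stage_funcs, depth: int = 2):
    """Push frames through stage_funcs, one thread per stage, via bounded queues."""
    queues = [queue.Queue(maxsize=depth) for _ in range(len(stage_funcs) + 1)]
    workers = [
        threading.Thread(target=_stage, args=(f, queues[i], queues[i + 1]), daemon=True)
        for i, f in enumerate(stage_funcs)
    ]

    def feed():
        for frame in frames:
            queues[0].put(frame)  # blocks when the first stage falls behind
        queues[0].put(_DONE)

    feeder = threading.Thread(target=feed, daemon=True)
    for t in [feeder, *workers]:
        t.start()
    results = []
    while (item := queues[-1].get()) is not _DONE:
        results.append(item)
    for t in [feeder, *workers]:
        t.join()
    return results


# Placeholder stages for stabilization, stitching/geo-rectification, and compression.
stages = [lambda f: f + ["stabilized"], lambda f: f + ["stitched"], lambda f: f + ["compressed"]]
out = run_pipeline([[f"frame{i}"] for i in range(3)], stages)
print(min_pipeline_stages(5.0, 1.0), out[0])
# 5 ['frame0', 'stabilized', 'stitched', 'compressed']
```

Bounded queues keep a slow stage from letting frames pile up without limit, and a separate feeder thread avoids deadlock while the main thread drains the output.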

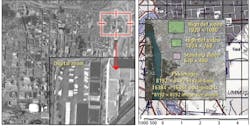

The ground system decompresses the live video stream and acts as a server to a bank of user stations (see Fig. 3). Each station offers operators a live image that can be panned and zoomed. The ground system also digitally records the video at full resolution, and user stations can access the recorded imagery to provide a video rewind function that augments live viewing. Post-processing available at the ground station includes image analysis, such as motion detection and object tracking, as well as standard image-enhancement functions such as gamma correction.
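Gamma correction of the kind listed above is usually implemented as a lookup table, so each pixel costs a single lookup. The Python sketch below assumes 8-bit pixels and the common form out = max · (in / max)^exponent. The names are hypothetical, and the article does not specify the ground station’s implementation.

```python
def gamma_lut(exponent: float, max_value: int = 255) -> list[int]:
    """Gamma table: out = max_value * (in / max_value) ** exponent.

    Exponents below 1 brighten mid-tones; exponents above 1 darken them.
    """
    if exponent <= 0:
        raise ValueError("exponent must be positive")
    return [round(max_value * (v / max_value) ** exponent) for v in range(max_value + 1)]


def apply_gamma(frame, exponent: float):
    """Apply gamma correction to a frame given as rows of 8-bit pixel values."""
    lut = gamma_lut(exponent)
    return [[lut[p] for p in row] for row in frame]


brightened = apply_gamma([[0, 64, 255]], 0.5)
print(brightened)  # [[0, 128, 255]]
```

Black and white stay fixed, while a dark mid-tone (64) is lifted to 128, which helps operators see detail in shadows on recorded imagery.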

FIGURE 3. The ground system acts as a digital video recorder and multiuser video server, and provides a post-processing capability for extracting additional information from recorded data.

The system’s scrolling and zoom control differs from the standard pan and zoom of traditional surveillance camera applications. In those applications, the operator must steer the camera toward the area of interest. In the HawkEye system, the entire area is always captured, and the operator simply selects the area and level of detail to display. This capability supports HawkEye’s primary application, wide-area surveillance.
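Because the whole scene is always recorded, “pan and zoom” becomes a question of which pixels to fetch. One common way to serve a 16,384 × 16,384 image to many viewers is a tile pyramid. The Python sketch below assumes a hypothetical layout of 512-pixel tiles, with level 0 at full resolution and each higher level halving the image, and lists the tiles a viewport needs. The article does not say whether HawkEye uses tiling.

```python
def tiles_for_viewport(x, y, width, height, level, tile_px=512, full_px=16_384):
    """List (level, row, col) tiles covering a viewport given in full-resolution pixels.

    Level 0 is full resolution; each higher level halves the image in both axes.
    """
    if width <= 0 or height <= 0:
        return []
    scale = 2 ** level
    level_px = max(1, full_px // scale)
    # Map the viewport onto this level's pixel grid (ceiling on the far edge), clamped to the image.
    x0, y0 = max(0, x // scale), max(0, y // scale)
    x1 = min(level_px, -(-(x + width) // scale))
    y1 = min(level_px, -(-(y + height) // scale))
    if x1 <= x0 or y1 <= y0:
        return []
    cols = range(x0 // tile_px, (x1 - 1) // tile_px + 1)
    rows = range(y0 // tile_px, (y1 - 1) // tile_px + 1)
    return [(level, r, c) for r in rows for c in cols]


# A 1024 x 1024 window at full resolution needs four tiles...
print(tiles_for_viewport(0, 0, 1024, 1024, level=0))
# [(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1)]
# ...while the entire 16,384-pixel scene fits in a single tile at level 5.
print(tiles_for_viewport(0, 0, 16_384, 16_384, level=5))
# [(5, 0, 0)]
```

Zooming out simply moves to a higher level, so the number of tiles per screen stays roughly constant regardless of how much ground is shown.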

Law enforcement

The HawkEye system has supported law enforcement in major cities, security at large events, and large-scale emergency response. It has recorded more than 30 murders and many other major crimes, from kidnappings to carjackings to house robberies. Captured images allow the criminals to be tracked forward and backward in time, providing valuable information to investigators.

HawkEye has also been used to survey vast disaster areas, such as those affected by the 2008 Iowa floods. There, its large image format allowed up to 450 square miles to be surveyed per hour at resolutions sufficient to identify people, vehicles, and wildlife in trouble, while its motion-detection capability cued observers to critical areas.
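A simple way to use motion detection as a cue is frame differencing: compare two registered frames and flag image blocks where enough pixels changed. The Python sketch below illustrates that idea with hypothetical thresholds and block size. It is not HawkEye’s detector, which the article does not describe, and it assumes the frames have already been stabilized and geo-rectified so that aircraft motion does not register as scene motion.

```python
def motion_cues(prev, cur, diff_threshold=30, block=4, min_changed=2):
    """Flag blocks where at least min_changed pixels changed by more than diff_threshold.

    Returns (block_row, block_col, changed_pixel_count) for each flagged block.
    """
    h, w = len(prev), len(prev[0])
    cues = []
    for by in range(0, h, block):
        for bx in range(0, w, block):
            changed = sum(
                1
                for y in range(by, min(by + block, h))
                for x in range(bx, min(bx + block, w))
                if abs(cur[y][x] - prev[y][x]) > diff_threshold
            )
            if changed >= min_changed:
                cues.append((by // block, bx // block, changed))
    return cues


prev = [[10] * 8 for _ in range(8)]
cur = [row[:] for row in prev]
for y in (4, 5):
    for x in (4, 5):
        cur[y][x] = 200  # a small bright object appears
print(motion_cues(prev, cur))  # [(1, 1, 4)]
```

Reporting blocks rather than individual pixels keeps the cue list short, so an observer can be pointed to a handful of areas instead of scattered pixels.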

Engineering the HawkEye system required development of proprietary image-processing algorithms, but the real challenge lay in system integration. The system had to coordinate the operation of eight cameras, interface to two different navigation systems, handle image processing and compression, send the data to the ground station via radio, and provide intuitive user software to support image analysis and investigations.
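One integration detail behind coordinating cameras with navigation systems is time alignment: a frame’s exposure time generally falls between navigation samples, so the aircraft pose must be interpolated to that instant before the frame can be geo-rectified. The article does not say how HawkEye handles this. The Python sketch below assumes a hypothetical record format of (time, east, north, altitude, yaw) samples and linear interpolation, taking the short way around the compass for heading.

```python
import bisect


def interpolate_pose(samples, t):
    """Linearly interpolate (east_m, north_m, alt_m, yaw_deg) at time t.

    samples: list of (time_s, east_m, north_m, alt_m, yaw_deg), strictly increasing in time.
    """
    times = [s[0] for s in samples]
    if not samples or t < times[0] or t > times[-1]:
        raise ValueError("frame timestamp is outside the navigation record")
    i = bisect.bisect_right(times, t)
    if i == len(samples):  # t equals the last sample time
        return tuple(samples[-1][1:])
    t0, e0, n0, a0, yaw0 = samples[i - 1]
    t1, e1, n1, a1, yaw1 = samples[i]
    f = (t - t0) / (t1 - t0)
    # Shortest signed heading change, so 350 deg -> 10 deg passes through 0, not 180.
    dyaw = (yaw1 - yaw0 + 180.0) % 360.0 - 180.0
    return (
        e0 + f * (e1 - e0),
        n0 + f * (n1 - n0),
        a0 + f * (a1 - a0),
        (yaw0 + f * dyaw) % 360.0,
    )


nav = [(0.0, 0.0, 0.0, 1200.0, 350.0), (1.0, 60.0, 0.0, 1200.0, 10.0)]
print(interpolate_pose(nav, 0.5))  # (30.0, 0.0, 1200.0, 0.0)
```

Naively averaging 350° and 10° would give 180°, pointing the aircraft backward, which is why the heading difference is wrapped before interpolating.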

Currently, the HawkEye system operates on manned aircraft but does not require pilot interaction. The system can also be installed on unmanned aerial vehicles, and Persistent Surveillance Systems plans further development in this area.

Ross McNutt is president of Persistent Surveillance Systems (Dayton, OH, USA; www.persistentsurveillance.com) and John Egri is director of engineering at Imperx (Boca Raton, FL, USA; www.imperx.com).
