High-speed camera system studies dragonflies for biomechanical research

By studying biological systems, researchers at Harvard University (Cambridge, MA, USA) aim to gain an appreciation for the mechanisms associated with insect locomotion. The researchers expect to build an understanding of how certain species have evolved to develop skills such as predation and how such biologically inspired motion may be used in manmade robotic systems. Harvard assistant professor Stacey Combes is currently studying the predatory motion of dragonflies to provide a better understanding of these mechanisms.

Because dragonflies are predators that live in marshy areas such as ponds and eat small insects such as fruit flies, Combes and her colleagues have built a 12 × 12 × 12-ft space that simulates this environment. Dragonflies catch their prey while flying from perches such as reeds or stems, clasping the prey with their legs before returning to the stem to eat. “As such tasks are generally accomplished in just a few seconds, it is important to capture as many images as possible of the insect in flight at the highest speeds possible,” says Combes.

In the past, high-speed standalone cameras were used for this purpose. While these cameras proved useful, they can capture only a limited number of image sequences before the data must be transferred off-line to a host computer. “Because predatory events are unpredictable,” says Combes, “recording longer image sequences ensures that these events can be captured in a foreseeable fashion.”

Combes enlisted Systematic Vision (Ashland, MA, USA) to develop a multicamera system to perform the image-capture task. To capture a three-dimensional view of the predatory event, four Photonfocus (Lachen, Switzerland) DS1-D1312-160-CL-10 CMOS cameras are placed on a T-Slot Modular frame from 80/20 (Columbia City, IN, USA) at different heights and distances. Another four cameras are placed on a similar frame located perpendicular to the first. “These eight synchronized cameras allow 1312 × 1082 × 8-bit images to be transferred at a rate of 108 frames/s,” says Bill Carson, president of Systematic Vision.

Image data from all eight cameras are then transferred over each camera's Camera Link interface to two four-port Karbon frame grabbers from BitFlow (Woburn, MA, USA) that are housed in a custom multicore Xeon computer from MicroDISC (Yardley, PA, USA). As image data are streamed at 1.3 Gbytes/s from the cameras, the data are stored using an array of eight 512-Gbyte SATA RAIDs. This storage allows image sequences of up to 30 min to be recorded from each camera.
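The quoted figures can be checked with simple arithmetic. The Python sketch below is only a back-of-the-envelope estimate, not part of the system described here: it assumes one byte per 8-bit pixel, no protocol or file-format overhead, decimal gigabytes, and fully usable disk capacity. All names are illustrative.

```python
# Back-of-the-envelope data-rate estimate from the figures quoted in the article.
# Assumptions (not from the article): 1 byte per pixel, no overhead,
# decimal gigabytes, and all disk capacity available for image data.

WIDTH_PX = 1312
HEIGHT_PX = 1082
BYTES_PER_PIXEL = 1          # 8-bit pixels
FRAMES_PER_S = 108
NUM_CAMERAS = 8
DISK_BYTES = 8 * 512e9       # eight 512-Gbyte volumes


def per_camera_rate():
    """Raw pixel data produced by one camera, in bytes per second."""
    return WIDTH_PX * HEIGHT_PX * BYTES_PER_PIXEL * FRAMES_PER_S


def aggregate_rate():
    """Raw pixel data produced by all cameras together, in bytes per second."""
    return NUM_CAMERAS * per_camera_rate()


def max_recording_minutes():
    """Minutes of recording that fit on disk at the aggregate raw rate."""
    return DISK_BYTES / aggregate_rate() / 60


if __name__ == "__main__":
    print(f"per camera: {per_camera_rate() / 1e6:.1f} Mbytes/s")
    print(f"aggregate:  {aggregate_rate() / 1e9:.2f} Gbytes/s")
    print(f"capacity:   {max_recording_minutes():.0f} min")
```

Under these assumptions the raw aggregate rate comes to roughly 1.2 Gbytes/s, in line with the quoted 1.3 Gbytes/s, and the raw capacity would hold somewhat more than the quoted 30 min. The article does not say how the RAIDs are configured, so the gap may reflect redundancy, file overhead, or headroom.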

To visualize, synchronize, capture, and store images, the system uses StreamPix 5 digital video-recording software from NorPix (Montreal, QC, Canada). When a camera's aperture needs manual adjustment, the effect can be viewed live on an SVGA monitor using StreamPix 5.

Before any images can be captured, the system must be calibrated. Researchers move a custom-developed wand in the 3-D field of view of the system. Because the size of the wand is known, captured image data can then be used to calibrate the position of any object in 3-D space.
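The article does not describe the calibration algorithm itself. As a minimal sketch of one ingredient of wand-based calibration, the Python code below shows how a known wand length can fix the metric scale of a 3-D reconstruction. It assumes the two wand endpoints have already been reconstructed in arbitrary units for several frames. The function names and the example values are hypothetical.

```python
import math


def wand_scale_factor(endpoint_pairs, wand_length):
    """Return the factor that converts reconstruction units to real units.

    endpoint_pairs: list of (point_a, point_b) tuples, each point an
        (x, y, z) tuple in arbitrary reconstruction units.
    wand_length: the known physical distance between the wand endpoints.
    """
    lengths = [math.dist(a, b) for a, b in endpoint_pairs]
    mean_length = sum(lengths) / len(lengths)
    return wand_length / mean_length


def apply_scale(point, scale):
    """Scale a reconstructed (x, y, z) point into real-world units."""
    return tuple(scale * c for c in point)


if __name__ == "__main__":
    # Hypothetical wand observations in arbitrary units; the wand is 0.5 units long.
    pairs = [((0, 0, 0), (2, 0, 0)), ((1, 1, 1), (1, 3, 1))]
    scale = wand_scale_factor(pairs, wand_length=0.5)
    print(scale, apply_scale((4, 8, 12), scale))
```

A full calibration would typically also estimate each camera's position and orientation; averaging over many wand positions, as above, only reduces the effect of measurement noise on the scale estimate.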

Once the system is calibrated, dragonflies are placed on a perch within the environment, fruit flies are released, and the image-capture sequence is initiated.

“To reduce the overhead of storing captured images,” says Carson, “images are first captured in NorPix’s proprietary SEQ file format. To analyze the sequence of events requires that these image sequences are first reformatted as a series of AVI images. After reformatting the 400 frames that typically comprise 2–3 s, the image sequences must be analyzed. This is accomplished by manually tagging the thorax of the dragonfly and fruit fly within each image.”

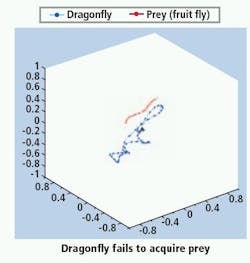

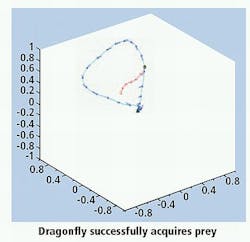

Three-dimensional (x, y, z) positional information along with temporal information for each image sequence is then exported into an Excel spreadsheet. To interpret these image data, Combes and her colleagues have developed a program in The MathWorks’ (Natick, MA, USA) MATLAB environment that visualizes the motion of the dragonfly in 3-D space (see figure).

“Excel data are also used to compute the velocity, acceleration, and turning speed of the insect and prey. By doing so, the peak acceleration and turning rate can be analyzed over a number of different image sequences. This allows the typical and sometimes exceptional motion of the insects to be more fully understood,” Combes says.
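The researchers' own analysis code is not shown. As a rough illustration of how such quantities can be derived from exported (x, y, z, t) samples, the Python sketch below uses simple finite differences. Turning rate is taken here as the angle between successive velocity vectors divided by the elapsed time, which is an assumed definition. All function names and sample values are hypothetical.

```python
import math


def norm(v):
    """Euclidean length of a 3-D vector."""
    return math.sqrt(sum(c * c for c in v))


def midpoints(times):
    """Time stamps halfway between successive samples."""
    return [(t0 + t1) / 2 for t0, t1 in zip(times, times[1:])]


def finite_difference(samples, times):
    """Forward differences of 3-D vectors: one rate vector per interval."""
    rates = []
    for s0, s1, t0, t1 in zip(samples, samples[1:], times, times[1:]):
        dt = t1 - t0
        rates.append(tuple((b - a) / dt for a, b in zip(s0, s1)))
    return rates


def turning_rates(velocities, times):
    """Angle between successive velocity vectors per unit time (rad/s).

    Assumes no velocity vector has zero length.
    """
    rates = []
    for v0, v1, t0, t1 in zip(velocities, velocities[1:], times, times[1:]):
        cos_angle = sum(a * b for a, b in zip(v0, v1)) / (norm(v0) * norm(v1))
        cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
        rates.append(math.acos(cos_angle) / (t1 - t0))
    return rates


def kinematics(positions, times):
    """Speed, acceleration magnitude, and turning rate from a 3-D track."""
    v_times = midpoints(times)
    velocities = finite_difference(positions, times)
    accelerations = finite_difference(velocities, v_times)
    return {
        "speed": [norm(v) for v in velocities],
        "acceleration": [norm(a) for a in accelerations],
        "turning_rate": turning_rates(velocities, v_times),
    }


if __name__ == "__main__":
    # Hypothetical track: straight flight along x, then a 90-degree turn toward y.
    track = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (2, 1, 0)]
    result = kinematics(track, [0.0, 1.0, 2.0, 3.0])
    print(max(result["acceleration"]), max(result["turning_rate"]))
```

Taking the maximum of each list gives the peak values for one sequence, which can then be compared across sequences. Manually tagged positions are noisy, so in practice the track would probably be smoothed before differencing.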

-- By Andy Wilson, Editor-in-Chief, Vision Systems Design