Kinect system helps doctors review records

Doctors may soon use a system in the operating room that recognizes hand gestures as commands, letting a surgeon browse and display a patient's medical images during surgery without touching the computer.

Surgeons routinely need to review medical images and records during surgery, but stepping away from the operating table and touching a keyboard and mouse can delay the procedure and increase the risk of spreading infection-causing bacteria, says Juan Pablo Wachs, an assistant professor of industrial engineering at Purdue University (West Lafayette, IN, USA).

"One of the most ubiquitous pieces of equipment in US surgical units is the computer workstation, which allows access to medical images before and during surgery," he says. "However, computers and their peripherals are difficult to sterilize, and keyboards and mice have been found to be a source of contamination. Also, when nurses or assistants operate the keyboard for the surgeon, the process of conveying information accurately has proven cumbersome and inefficient since spoken dialogue can be time-consuming and leads to frustration and delays in the surgery."

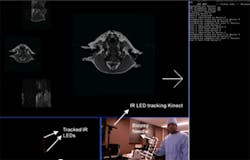

To address these issues, the Purdue researchers are developing a system that combines a Microsoft Kinect camera with specialized algorithms to recognize hand gestures as commands for manipulating MRI images on a large display.

To validate the system, the researchers worked with veterinary surgeons to collect a set of gestures that feel natural to clinicians and surgeons. The surgeons were asked to specify the functions they perform with MRI images in typical surgeries and to suggest gestures for those commands. Ten gestures were chosen: rotate clockwise and counterclockwise; browse left, right, up and down; increase and decrease brightness; and zoom in and out.

Critical to the system's accuracy is its use of "contextual information" in the operating room: cameras observe the surgeon's torso and head to determine, and continuously monitor, what the surgeon wants to do.

"A major challenge is to endow computers with the ability to understand the context in which gestures are made and to discriminate between intended gestures versus unintended gestures," Wachs says.

"Surgeons will make many gestures during the course of a surgery to communicate with other doctors and nurses. The main challenge is to create algorithms capable of understanding the difference between these gestures and those specifically intended as commands to browse the image-viewing system. We can determine context by looking at the position of the torso and the orientation of the surgeon's gaze. Based on the direction of the gaze and the torso position we can assess whether the surgeon wants to access medical images," he adds.
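The article does not say how the Purdue algorithms combine gesture recognition with context. As a rough illustration only, the following sketch assumes a gesture classifier has already produced a label, and that torso and gaze orientations are available as yaw angles relative to the image display. The command names, angle thresholds and function names are hypothetical and are not taken from the Purdue work; the point is simply that a gesture is accepted as a command only when the surgeon appears to be facing the display.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical names for the ten commands listed in the article.
COMMANDS = {
    "rotate_cw", "rotate_ccw",
    "browse_left", "browse_right", "browse_up", "browse_down",
    "brightness_up", "brightness_down",
    "zoom_in", "zoom_out",
}

# Assumed tolerances in degrees; the article gives no values.
TORSO_TOLERANCE_DEG = 30.0
GAZE_TOLERANCE_DEG = 20.0


@dataclass
class Context:
    """Surgeon's pose relative to the image display (0 degrees = facing it)."""
    torso_yaw_deg: float
    gaze_yaw_deg: float


def facing_display(ctx: Context) -> bool:
    """True when both torso and gaze point roughly toward the display."""
    return (abs(ctx.torso_yaw_deg) <= TORSO_TOLERANCE_DEG
            and abs(ctx.gaze_yaw_deg) <= GAZE_TOLERANCE_DEG)


def gate_gesture(label: Optional[str], ctx: Context) -> Optional[str]:
    """Return the gesture as a command only if it is known and context suggests intent.

    Gestures made while the surgeon faces colleagues rather than the display
    are discarded, illustrating how context can reduce false positives.
    """
    if label not in COMMANDS:
        return None
    return label if facing_display(ctx) else None


if __name__ == "__main__":
    print(gate_gesture("zoom_in", Context(torso_yaw_deg=5.0, gaze_yaw_deg=-3.0)))   # zoom_in
    print(gate_gesture("zoom_in", Context(torso_yaw_deg=70.0, gaze_yaw_deg=60.0)))  # None
```

A fixed angular cutoff is the simplest possible gate; a practical system might instead weigh torso and gaze cues probabilistically alongside the gesture classifier's confidence.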

The findings showed that integrating context allows the algorithms to accurately distinguish image-browsing commands from unrelated gestures, reducing the false-positive rate from 20.8 percent to 2.3 percent. The system has also been shown to have a mean accuracy of about 93 percent in translating gestures into specific commands, such as rotating and browsing images.

Related articles on the Kinect that you might also be interested in reading:

1. Microsoft’s Kinect helps keep surgery sterile

Engineers based at Microsoft Research (Cambridge, UK) have developed a system based on the Microsoft Kinect that allows surgeons to manipulate images in the operating theater.

2. Kinect software assists physical therapists

The West Health Institute (La Jolla, CA, USA) has developed a software application for Microsoft's Kinect for Windows platform that can help physical therapists ensure that patients perform exercises correctly.

3. Kinect helps stroke victims recover manual agility

Researchers at the University of Southampton (Southampton, UK) and Roke Manor Research (Romsey, UK) have developed a biomechanics system using Microsoft's Kinect that can help stroke patients recover manual agility.

-- Dave Wilson, Senior Editor, Vision Systems Design