MIT: 3D nano-camera operates at the speed of light

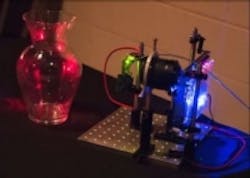

Researchers from the MIT Media Lab have developed a 3D camera based on time-of-flight technology that, the team claims, operates at the speed of light and can generate 3D models of translucent or near-transparent objects.

The camera, which the team has dubbed the "nano-camera," costs only $500 and works similarly to the newly released second-generation Microsoft Kinect: it calculates the location of objects from the time it takes a light signal to reflect off a surface and return to the sensor. Unlike the Kinect, however, MIT's new device is not fooled by rain, fog, or even translucent objects, according to co-author Achuta Kadambi, a graduate student at MIT.
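The basic time-of-flight relationship described above fits in a few lines of Python. This is a minimal illustration, not code from MIT or Microsoft, and the function names are hypothetical. Because the light travels to the surface and back, the distance is half of the round-trip path: d = c·t/2.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by the SI definition of the metre


def distance_from_round_trip(t_round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of a light signal."""
    # Light goes out to the surface and comes back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * t_round_trip_s / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Round-trip time for a surface at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


print(distance_from_round_trip(10e-9))  # distance for a 10 ns round trip
print(round_trip_from_distance(0.001))  # timing difference that separates 1 mm of depth
```

A 10 ns round trip corresponds to about 1.5 m, while separating two surfaces 1 mm apart means resolving round-trip differences of roughly 6.7 picoseconds, which is why fine time resolution is so demanding.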

"Using the current state of the art, such as the new Kinect, you cannot capture translucent objects in 3D," Kadambi said in an MIT press release. "That is because the light that bounces off the transparent object and the background smear into one pixel on the camera. Using our technique you can generate 3D models of translucent or near-transparent objects."
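To see why two returns "smear into one pixel," consider a simplified continuous-wave time-of-flight model, assumed here purely for illustration: each return adds a complex phasor whose phase encodes its distance, and the pixel records only their sum. The 30 MHz modulation frequency, the distances, and the function names below are hypothetical and are not taken from the Kinect or the MIT camera.

```python
import cmath
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def return_phasor(amplitude: float, distance_m: float, f_mod_hz: float) -> complex:
    # A return from distance d is delayed by 2d/c, which shifts the
    # modulation phase by 4*pi*f*d/c.
    phase = 4.0 * math.pi * f_mod_hz * distance_m / SPEED_OF_LIGHT_M_PER_S
    return amplitude * cmath.exp(1j * phase)


def depth_from_phasor(z: complex, f_mod_hz: float) -> float:
    # Invert the phase shift; only valid within the unambiguous range c / (2f).
    phase = cmath.phase(z) % (2.0 * math.pi)
    return SPEED_OF_LIGHT_M_PER_S * phase / (4.0 * math.pi * f_mod_hz)


F_MOD_HZ = 30e6  # hypothetical modulation frequency

glass = return_phasor(0.5, 1.0, F_MOD_HZ)  # translucent foreground at 1 m
wall = return_phasor(0.5, 3.0, F_MOD_HZ)   # background seen through it at 3 m

single = depth_from_phasor(glass, F_MOD_HZ)        # one return on its own
mixed = depth_from_phasor(glass + wall, F_MOD_HZ)  # both returns in one pixel
print(f"single return: {single:.3f} m, mixed pixel: {mixed:.3f} m")
```

With equal amplitudes the mixed pixel reports a depth of about 2.0 m, halfway between the glass and the wall, so it matches neither surface.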

The new device uses an encoding technique to calculate the distance a signal has travelled, which can cancel out the errors that changing environmental conditions, semitransparent surfaces, edges, or motion would otherwise introduce into the time-of-flight measurement.

MIT Media Lab graduate student and team member Ayush Bhandari says the technique resembles existing methods for removing blur from photographs: by placing some assumptions on the model, the image can be unsmeared to produce a sharper picture.
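One common way to formalize this kind of "unsmearing" is sparse deconvolution: assume each pixel's time profile contains only a few returns, and search for the few delays that explain the measurement. The Python sketch below is a toy built on stated assumptions, not MIT's implementation. It correlates a hypothetical 31-chip maximal-length binary code with itself, models a pixel that sees both a translucent surface and the background, and recovers both returns with orthogonal matching pursuit, a standard greedy sparse solver chosen here for simplicity. All names, bin positions, and amplitudes are illustrative.

```python
def m_sequence_31():
    # Hypothetical code: maximal-length sequence from a[n+5] = a[n+3] XOR a[n]
    # (primitive polynomial x^5 + x^3 + 1), mapped from {0, 1} to {-1, +1}.
    a = [1, 0, 0, 0, 0]
    while len(a) < 31:
        a.append(a[-2] ^ a[-5])
    return [1 if bit else -1 for bit in a]


def circular_autocorrelation(code):
    n = len(code)
    return [sum(code[i] * code[(i + lag) % n] for i in range(n)) for lag in range(n)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve_linear(G, b):
    # Gauss-Jordan elimination with partial pivoting for a small square system.
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(G, b)]
    for i in range(n):
        pivot = max(range(i, n), key=lambda r: abs(M[r][i]))
        M[i], M[pivot] = M[pivot], M[i]
        for r in range(n):
            if r != i:
                factor = M[r][i] / M[i][i]
                M[r] = [x - factor * p for x, p in zip(M[r], M[i])]
    return [M[i][n] / M[i][i] for i in range(n)]


def omp(columns, y, n_paths):
    # Orthogonal matching pursuit: pick the delay whose waveform best matches
    # the residual, refit all picked delays by least squares, and repeat.
    # All columns share the same norm here, so no normalization is needed.
    support, coeffs, residual = [], [], list(y)
    for _ in range(n_paths):
        scores = [abs(dot(col, residual)) if t not in support else -1.0
                  for t, col in enumerate(columns)]
        support.append(max(range(len(scores)), key=scores.__getitem__))
        chosen = [columns[t] for t in support]
        gram = [[dot(u, v) for v in chosen] for u in chosen]
        coeffs = solve_linear(gram, [dot(u, y) for u in chosen])
        residual = [y[k] - sum(c * col[k] for c, col in zip(coeffs, chosen))
                    for k in range(len(y))]
    return dict(zip(support, coeffs))


code = m_sequence_31()
xi = circular_autocorrelation(code)
n = len(code)
# Column t: the correlation waveform a single return delayed by t bins would produce.
columns = [[xi[(k - t) % n] for k in range(n)] for t in range(n)]

# Hypothetical pixel: translucent surface at bin 5, background at bin 12.
true_paths = {5: 0.4, 12: 0.6}
y = [sum(a * columns[t][k] for t, a in true_paths.items()) for k in range(n)]

recovered = omp(columns, y, n_paths=2)
print(sorted(recovered.items()))
```

The assumption that only a couple of returns are present is what makes the problem solvable. Shifted copies of a single-frequency sinusoid all collapse into one phasor and cannot be separated this way, which is plausibly one motivation for using a broadband binary code instead.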

In 2011, the MIT Media Lab team, which is led by associate professor Ramesh Raskar, created a trillion-frame-per-second camera that the team said could capture a single pulse of light as it travelled through a scene. That camera probed the scene with a femtosecond impulse of light and used fast but expensive laboratory-grade optical equipment to capture an image each time, and it cost around $500,000 to build. The nano-camera, by contrast, probes the scene with a continuous-wave signal that oscillates with nanosecond periods, which lets the team use inexpensive hardware and reach a time resolution within one order of magnitude of the $500,000 camera while costing just $500 to build.
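For a continuous-wave camera, distance is read from the phase shift of the modulation rather than from a directly timed pulse. A widely used textbook approach, assumed here and not necessarily what the nano-camera does, samples the correlation at four reference phase offsets and recovers the phase with `atan2`. The 50 MHz frequency (a 20 ns period), the simulated signal, and the function names are illustrative.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def unambiguous_range(f_mod_hz: float) -> float:
    # The phase wraps after a round trip of one full modulation period.
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * f_mod_hz)


def four_bucket_samples(distance_m, f_mod_hz, amplitude=1.0, offset=2.0):
    # Simulated correlation samples at reference shifts of 0, 90, 180, 270 degrees.
    phi = 4.0 * math.pi * f_mod_hz * distance_m / SPEED_OF_LIGHT_M_PER_S
    return [offset + amplitude * math.cos(phi + k * math.pi / 2) for k in range(4)]


def depth_from_buckets(samples, f_mod_hz):
    c0, c1, c2, c3 = samples
    # c3 - c1 = 2A*sin(phi) and c0 - c2 = 2A*cos(phi); the offset cancels out.
    phi = math.atan2(c3 - c1, c0 - c2) % (2.0 * math.pi)
    return SPEED_OF_LIGHT_M_PER_S * phi / (4.0 * math.pi * f_mod_hz)


F_MOD_HZ = 50e6  # hypothetical: 20 ns modulation period
print(unambiguous_range(F_MOD_HZ))
print(depth_from_buckets(four_bucket_samples(1.2, F_MOD_HZ), F_MOD_HZ))
print(depth_from_buckets(four_bucket_samples(3.5, F_MOD_HZ), F_MOD_HZ))
```

At 50 MHz the phase wraps every 2.998 m, so a surface at 3.5 m is reported at about 0.5 m; many continuous-wave cameras combine several modulation frequencies to extend this range.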

"By solving the multipath problem, essentially just by changing the code, we are able to unmix the light paths and therefore visualize light moving across the scene," Kadambi says. "So we are able to get similar results to the $500,000 camera, albeit of slightly lower quality, for just $500."

Nano-cameras could be used in medical imaging, collision-avoidance detectors for cars, and interactive gaming, according to MIT.

Share your vision-related news by contacting James Carroll, Senior Web Editor, Vision Systems Design

About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.