GigE cameras to adopt AIA standard

Camera vendors are readying products to support the latest AIA GigE standard.

By Andrew Wilson, Editor

Many people in the machine-vision industry approach the subject of GigE cameras from a communications-protocol perspective, citing the benefits of a large installed base of networked CPUs and PLCs and the ease of transferring data between components using standards-based protocols. Until now, however, Ethernet-based systems have not been seen as a means to transfer image data between cameras and host computers.

Camera vendors, too, have different perspectives on how to implement Gigabit Ethernet interfaces into their products. Some currently available smart cameras, for example, perform high-speed image-processing functions on-board, producing pass/fail decisions that are transmitted using the well-defined TCP/IP to the host computer. While these companies may tout their cameras as Gigabit Ethernet-compliant, the function of the communications protocol is simply to transmit pass/fail data, rather than images, over the network. Other smart-camera vendors may use TCP/IP to transmit image data between camera and host computer using off-the-shelf network interface devices.

Using these standard devices, camera vendors can take advantage of numerous off-the-shelf software-development packages to rapidly develop client/server (camera/computer) programs. These can then be integrated with third-party image-processing packages to allow OEMs to develop machine-vision systems.

GigE targets vision

Despite the success of these systems, the Ethernet and Gigabit Ethernet protocols were not originally developed for machine-vision applications. The most common Ethernet implementation, with TCP/IP at the transport layer, provides a reliable message-delivery system and ensures data are not lost. This eliminates the need to build safeguards into software to handle communications. However, the method used by TCP/IP to validate data transfer reduces performance when image data are transferred at high speed.

Further, at the PC, traditional Ethernet systems use a commercial network interface card/chip (NIC) driver and general-purpose software, such as the Windows or Linux IP stack, to receive and send data over TCP/IP connections. According to George Chamberlain, president of Pleora Technologies, these drivers and stack software are designed to deal with a host of different applications, resulting in too much latency and unpredictability for the real-time operation required by vision systems.

To overcome these problems, the GigE Vision Standard Committee of the Automated Imaging Association (AIA) was formed in June 2003 to define an open transport platform based on Gigabit Ethernet. The standard, which uses the User Datagram Protocol (UDP) at the transport layer instead of TCP, is due out later this year. It will have three main elements: a GigE Vision Control Protocol (GVCP), which defines how to control cameras and send image data to the host; a GigE Vision Stream Protocol (GVSP), which defines data types and describes how images are transmitted; and a Device Discovery (DD) mechanism, which defines how cameras obtain IP addresses and interoperate with third-party software. As part of the DD mechanism, camera vendors must provide customers with an XML file that describes camera-specific parameters in the format defined by the emerging GenICam standard.

“Although TCP is a good general-purpose protocol, it cannot meet the data rate and latency requirements of machine vision. GVSP would make a terrible general-purpose protocol but is an excellent protocol for machine-vision data,” says Marty Furse, CEO of Prosilica. The GVCP runs on top of UDP. Because UDP does not guarantee data delivery, GVCP defines mechanisms to guarantee reliable packet transmission and ensure minimal flow control.
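The acknowledge-and-retry idea behind running a control protocol over UDP can be sketched in a few lines of Python. This is an illustration only, not the GVCP wire format: the 4-byte header (a request ID and a payload length), the function names, and the simulated lossy link are all assumptions made for the example.

```python
import struct

HEADER = struct.Struct("!HH")  # hypothetical header: request id, payload length


def build_command(req_id: int, payload: bytes) -> bytes:
    """Prefix the payload with the hypothetical request-id/length header."""
    return HEADER.pack(req_id, len(payload)) + payload


def parse_ack(datagram: bytes) -> int:
    """Return the request id echoed in an acknowledge datagram."""
    req_id, _length = HEADER.unpack_from(datagram)
    return req_id


def send_command(link, req_id: int, payload: bytes, retries: int = 3) -> int:
    """Send a command and wait for a matching acknowledge.

    `link` is any object with send(bytes) and recv(), where recv() returns
    None to signal a receive timeout. Returns the number of attempts used.
    """
    command = build_command(req_id, payload)
    for attempt in range(1, retries + 1):
        link.send(command)
        reply = link.recv()
        if reply is not None and parse_ack(reply) == req_id:
            return attempt
    raise TimeoutError(f"no acknowledge for request {req_id}")


class LossyLink:
    """Simulated link that drops the first `drops` commands (illustration only)."""

    def __init__(self, drops: int):
        self.drops = drops
        self.pending = None

    def send(self, datagram: bytes) -> None:
        if self.drops > 0:
            self.drops -= 1
            self.pending = None  # datagram lost in transit
        else:
            req_id, _ = HEADER.unpack_from(datagram)
            self.pending = HEADER.pack(req_id, 0)  # device echoes the id

    def recv(self):
        reply, self.pending = self.pending, None
        return reply


attempts = send_command(LossyLink(drops=2), req_id=7, payload=b"\x01")
print("acknowledged after", attempts, "attempts")
```

Matching the echoed request ID against the one sent lets the sender tell a fresh acknowledge from a stale one, which is the minimum needed to retry safely over an unreliable transport.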

Implementing the standard

To implement the GigE standard, camera vendors must format image and control data into IP packets for transmission over Gigabit Ethernet. At present, camera vendors are taking three approaches. The first is to use Pleora’s iPORT IP engine in an embedded FPGA or as an off-the-shelf product. The iPORT engine works with either a Gigabit controller chip, such as Intel’s 82540EM, which integrates both Media Access Control (MAC) and Physical Layer (PHY) functions, or with a Gigabit Ethernet PHY chip. Other camera vendors offload these functions using an FPGA in conjunction with a general-purpose processor and a Gigabit Ethernet controller chip.
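Formatting a frame into packets amounts to slicing it into payloads that fit the link's maximum transmission unit and tagging each one with enough information to rebuild the image. The sketch below assumes a hypothetical 8-byte header (frame ID, packet ID, payload length) rather than the layout the standard will define, along with a 1500-byte Ethernet MTU and 28 bytes of IPv4 and UDP headers.

```python
import struct

HEADER = struct.Struct("!HIH")  # hypothetical: frame id, packet id, payload length
MTU = 1500                      # standard Ethernet payload size in bytes
IP_UDP_OVERHEAD = 20 + 8        # IPv4 header (no options) + UDP header
MAX_PAYLOAD = MTU - IP_UDP_OVERHEAD - HEADER.size


def packetize(frame_id: int, image: bytes, max_payload: int = MAX_PAYLOAD):
    """Yield UDP-sized datagrams that together carry one image frame."""
    for packet_id, start in enumerate(range(0, len(image), max_payload)):
        chunk = image[start:start + max_payload]
        yield HEADER.pack(frame_id, packet_id, len(chunk)) + chunk


# A 640 x 480, 8-bit monochrome frame filled with zeros
frame = bytes(640 * 480)
packets = list(packetize(1, frame))
print(len(packets), "packets, largest", max(len(p) for p in packets), "bytes")
```

In a camera this slicing would be done in FPGA logic or by an embedded processor, as described above; the Python version only shows the bookkeeping involved.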

Both Imperx and Mikrotron have implemented Pleora’s iPORT engine into their cameras (see Fig. 1). “Our Lynx GigE camera incorporates Pleora’s iPORT GigE interface,” says Petko Dinev, president of Imperx. “Because this uses UDP/IP as the transport layer, latency is minimized, and CPU requirements in the host PC are reduced.” The company’s Lynx Configurator is a GUI-based camera-configuration tool that allows developers to control the operating parameters of the camera and configure the IP engine’s ‘frame-grabber’ settings. The Lynx Terminal tool is an off-line tool used to download firmware, software, and look-up tables into the camera.

“Both tools offer PC-based camera-control software built from the iPORT camera interface application and the iPORT C++ SDK,” says Dinev. “The software allows users to control, configure, and retrieve image data from cameras via a remote, GigE-connected PC. The GigE link between the PC and the cameras it controls can be either point-to-point or network-connected over a LAN.”

Pleora’s GigE interface is also used in Mikrotron’s MotionBLITZ Cube camera. “Although the GigE Vision standard does not include any guarantee that datagrams sent over the UDP/IP protocol will be received in the same order by the receiving socket, the iPORT solution identifies any out-of-order packets when they arrive at the host/PC and inserts them in the correct place in the data stream, independent of whether the protocol being used is iPORT or GigE Vision,” says Burkhard Habbe, manager of sales and marketing. “We chose Pleora because its product was easy to integrate with our camera head, was more cost-effective than an internal development program, and helped us speed time to market. This allows us to offer a GigE camera, a driver for the PC, and Pleora’s already-developed SDK.”
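The reordering Habbe describes can be illustrated with a short Python sketch. It assumes each packet carries a sequential packet ID and that the total packet count for the frame is known; the tuple layout and function name are hypothetical and do not reflect how iPORT or GigE Vision actually represent packets.

```python
def reassemble(packets, expected_count):
    """Rebuild a frame from (packet_id, payload) pairs that may arrive out of order.

    Returns (frame_bytes, missing_ids). Missing packets contribute no bytes,
    so the caller can decide whether to request a resend or discard the frame.
    """
    slots = [None] * expected_count
    for packet_id, payload in packets:
        if 0 <= packet_id < expected_count and slots[packet_id] is None:
            slots[packet_id] = payload  # place by id; ignore duplicates
    missing = [i for i, payload in enumerate(slots) if payload is None]
    frame = b"".join(payload for payload in slots if payload is not None)
    return frame, missing


# Packets 1 and 2 arrive swapped
arrived = [(0, b"AA"), (2, b"CC"), (1, b"BB"), (3, b"DD")]
frame, missing = reassemble(arrived, expected_count=4)
print(frame, missing)
```

Placing each payload by its ID, rather than in arrival order, is what makes the result independent of how the network delivered the datagrams.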

Custom designs

Rather than use third-party products such as Pleora’s iPORT, both Prosilica and Tattile have developed their own GVSP offload engines in hardware using an FPGA and provide customers with custom drivers and SDKs. According to John Merva, president of Tattile USA, the third-party software packages currently supported are Halcon from MVTec and Streampix from Norpix, the only companies willing to move ahead before the final AIA standard is issued.

In the design of its forthcoming GigE camera, Alacron uses an off-the-shelf processor, the S5610 chip from Stretch, which has a built-in DMA-enabled, four-channel GigE controller, together with Internet protocol software from Treck optimized to take advantage of the S5610 hardware. According to Joseph Sgro, Alacron president, this will allow the camera to support both TCP/IP and UDP protocols. The camera’s embedded S5610 processor supports fully bidirectional TCP/IP communications with the remote host, including the ability to download a precompiled application developed using the Stretch interactive development environment.

“In all GigE-interface camera implementations, a Gigabit Ethernet controller or a Gigabit Ethernet PHY chip manages the low-level functions of the GigE link, such as the physical interface and the Ethernet layer,” says Pleora’s Chamberlain. “In addition, circuitry to convert the video to IP packets can be implemented in an FPGA (such as the iPORT engine), or it can be built from an IP software stack running on an embedded processor.

“To achieve real-time performance with low and consistent latency at high data rates, a powerful embedded processor is required. One way to reduce power consumption of the embedded processor is to use a coprocessor FPGA for some of the vision processing tasks. This results in a three-chip solution consisting of a GigE controller, embedded processor, and coprocessor FPGA. This is not as efficient as a two-chip solution such as the iPORT. It drives up costs, increases the design footprint, and requires more power, although not as much as a GigE controller and embedded processor approach,” says Chamberlain.

Common APIs

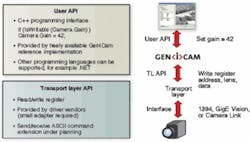

To allow interoperability between cameras and third-party software, the GigE Vision standard is using the GenICam standard being developed by the European Machine Vision Association (EMVA). The goal of GenICam is to provide a single camera-control interface to all types of cameras, whether they use the GigE Vision, Camera Link, or 1394 DCAM standards (see Fig. 2). The first GenICam release, due out in April 2006, will publish an XML schema that defines how camera-specific parameters should be presented in an XML description file. To comply with GigE Vision, camera vendors must provide customers with an XML file that conforms to this schema.

The first GenICam release will also include the GenApi library, a reference implementation of a dynamic application programming interface (API) that demonstrates how to parse the XML file to present camera parameters to end users or developers. The use of GenApi will not be mandatory for compliance with the first release of GigE Vision, but camera manufacturers will likely incorporate at least some of it in their SDKs.
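The idea of parsing a description file to present camera parameters can be sketched in Python with the standard library's XML parser. The file contents below are hypothetical: the element names, attribute names, and register addresses are invented for the example and are not taken from the GenICam schema or the GenApi library.

```python
import xml.etree.ElementTree as ET

# Hypothetical description file; the element and attribute names are
# invented for this sketch and are not taken from the GenICam schema.
CAMERA_XML = """
<Camera model="ExampleCam">
  <Feature name="ExposureTime" type="int" address="0x1000" min="10" max="100000" unit="us"/>
  <Feature name="Gain" type="int" address="0x1004" min="0" max="24" unit="dB"/>
  <Feature name="TriggerMode" type="enum" address="0x1008" values="Off,On"/>
</Camera>
"""


def load_features(xml_text):
    """Return {feature name: description dict} parsed from the XML text."""
    root = ET.fromstring(xml_text.strip())
    features = {}
    for node in root.iter("Feature"):
        info = dict(node.attrib)
        info["address"] = int(info["address"], 16)
        if info["type"] == "int":
            info["min"], info["max"] = int(info["min"]), int(info["max"])
        elif info["type"] == "enum":
            info["values"] = info["values"].split(",")
        features[info.pop("name")] = info
    return features


features = load_features(CAMERA_XML)
for name, info in features.items():
    print(name, info)
```

Because the application learns the parameter names, ranges, and addresses from the file at run time, the same software can in principle drive cameras from different vendors without camera-specific code.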

The GigE Vision and GenICam committees are also working together to produce a list of mandatory, optional, and recommended feature names and descriptions. This list will initially support GigE Vision, then will likely be used as a starting point to build lists for Camera Link and 1394 features.

Future versions of GenICam will see the addition of GenTL, an open architecture and reference tools for transport-layer management. Some groups involved in GigE Vision are working on reference transport layers, but these are not yet available. In the meantime, GigE Vision applications will need to rely on proprietary transport-layer architectures.

For its GigE Vision GE-Series Gigabit Ethernet cameras, for example, Prosilica includes its SDK and driver free of charge. According to Marty Furse, several third-party software vendors including National Instruments (NI), Matrox, Stemmer Imaging, and Unibrain are working with Prosilica to complete the development of their GigE Vision drivers. According to Furse, NI has already demonstrated Prosilica’s GE650 camera with its native driver. In addition to third-party support, Prosilica also offers its GigE Vision SDK modeled after the company’s DCAM SDK.

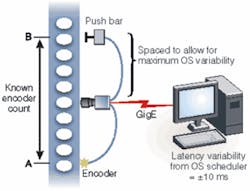

While the AIA and EMVA still have work to complete before GigE cameras can move to the forefront of machine vision, system latency can also be a problem because of the multitasking nature of the Windows and Linux IP communications stacks. “In some vision systems, in-camera I/O activity must be complemented by commands from PC-based applications software,” says Pleora’s Chamberlain. “For instance, if, after analyzing the image of a product, the software detects a fault, it may have to activate a push arm to remove the product from a conveyor belt (see Fig. 3). In the past, frame grabbers handled this trigger. With GigE cameras, such triggers can be problematic because vision application software usually runs on Windows or Linux. Trigger requests can thus be stalled in OS queues, interrupting real-time signal flow.”

GigE cameras with I/O capabilities can bypass this limitation to a certain extent. By time-stamping each image and correlating it to an encoder count, the I/O system has the location of the product on the conveyor belt. “A push bar located far enough away from the camera can accommodate the maximum possible variability from the OS scheduler and any other network equipment,” says Chamberlain. “The PC-based application then sends the in-camera I/O system the time stamp of the exact moment the defective product will pass the push bar, and it is triggered accordingly.”
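The arithmetic behind this scheme is simple enough to sketch in Python. The function names and all of the numbers are illustrative assumptions; the sketch also assumes a constant encoder resolution along the belt.

```python
def eject_count(capture_count, distance_mm, counts_per_mm):
    """Encoder count at which a part imaged at `capture_count` reaches the push bar."""
    return capture_count + round(distance_mm * counts_per_mm)


def max_tolerable_delay_s(distance_mm, belt_speed_mm_s):
    """Longest time the host may take to report a defect before the part passes the bar."""
    return distance_mm / belt_speed_mm_s


# Illustrative numbers only: push bar 600 mm downstream, 10 counts per mm,
# belt moving at 500 mm/s, defective part imaged at encoder count 120000.
target = eject_count(120_000, distance_mm=600, counts_per_mm=10)
budget = max_tolerable_delay_s(600, 500)
print(target, budget)
```

The second function shows why the push bar must sit far enough downstream: the travel time from camera to bar is the budget that has to cover image transfer, analysis, and any stall in the OS scheduler or network.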

Company Info

| Company | Location | Website |
| --- | --- | --- |
| A&B Software | New London, CT, USA | www.ab-soft.com |
| Alacron | Nashua, NH, USA | www.alacron.com |
| Automated Imaging Association | Ann Arbor, MI, USA | www.machinevisiononline.org |
| Basler Vision Technology | Ahrensburg, Germany | www.baslerweb.com |
| European Machine Vision Association | Frankfurt, Germany | www.emva.org |
| Imperx | Boca Raton, FL, USA | www.imperx.com |
| Matrox | Dorval, QC, Canada | www.matrox.com/imaging |
| Mikrotron | Unterschleissheim, Germany | www.mikrotron.de |
| MVTec Software | Munich, Germany | www.mvtec.com |
| National Instruments | Austin, TX, USA | www.ni.com |
| Norpix | Montreal, QC, Canada | www.norpix.com |
| Pleora Technologies | Kanata, ON, Canada | www.pleora.com |
| Prosilica | Burnaby, BC, Canada | www.prosilica.com |
| Sony Electronics | Park Ridge, NJ, USA | www.sony.com/videocameras |
| Stemmer Imaging | Puchheim, Germany | www.stemmer-imaging.de |
| Stretch | Mountain View, CA, USA | www.stretchinc.com |
| Tattile | Brescia, Italy | www.tattile.com |
| Treck | Cincinnati, OH, USA | www.treck.com |
| Unibrain | San Ramon, CA, USA | www.unibrain.com |