Introduction to neural net machine vision

Neural networks offer improved classification capabilities for machine-vision systems.

By Fred D. Turek

Electronic neural networks accomplish a particular type of learning and decision-making process similar to that of the human brain. Indeed, the human brain routinely does certain things no computer has ever come close to doing. Fundamentally, these tasks rely on the ability to learn and make decisions in multivariable processes where, strictly speaking, the information is incomplete and the governing equation is both complex and unknown. While many define the word “decision” as determining a specific course of action, another type of decision of more interest in machine vision is classification.

For example, “Who is that person sitting on my sofa?” and “Is that tomato good enough to put into my grocery cart?” are classification-type decisions. In neural-net terminology, each possible answer is a class. In the first case, there might be a thousand classes (the people you might recognize); the second case has only two classes (“good enough” and “not good enough”), which is called a binary classification. Another defining attribute of such decisions is that they involve using and weighing multiple criteria, subconsciously learning and assigning different decision-making weights or priorities to each criterion.

To place machine-vision neural nets in the framework of general neural-net terminology: they are “supervised” and usually use a single-layer or multilayer perceptron architecture. “Supervised” means that during training, a person provides the correct answers and the network trains itself (adjusts the weights it assigns to its inputs) to best reproduce those answers.
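To make “the network trains itself” concrete, here is a minimal sketch of the classic single-layer perceptron learning rule. It is a generic textbook illustration, not the algorithm used by any particular vision package; the function names, the learning rate, and the two-feature sample data are hypothetical.

```python
def predict(weights, bias, x):
    """Return 1 (e.g. "good enough") if the weighted sum of inputs is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, labels, epochs=1000, lr=1.0):
    """Classic perceptron rule: after each wrong answer, move the weights
    toward the answer the supervisor gave."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every supervised answer is now reproduced
            break
    return weights, bias


# Hypothetical inputs (two normalized features per object) and the
# supervisor's answers (1 = good enough, 0 = not good enough).
samples = [(0.9, 0.8), (0.8, 0.9), (0.2, 0.3), (0.3, 0.1), (0.9, 0.2), (0.2, 0.9)]
labels = [1, 1, 0, 0, 0, 0]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])
```

Because these sample classes are linearly separable, the perceptron convergence theorem guarantees the loop stops with every training answer reproduced; a multilayer network is needed when they are not.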

MACHINE VISION USES

Within a machine-vision program, neural networks can be used as one tool in the chain of events leading to a final decision, or to make the final decision itself. Most machine-vision applications require functionality that is best accomplished by non-neural-net tools. A good example is an application with explicitly defined, single-variable pass/fail criteria, where the task is to check 20 dimensions on a part and fail the part if any one of them is out of tolerance. In such applications, although neural-net functionality may be useful earlier in the solution, a neural net is neither required nor beneficial for the final decision.
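For contrast, the dimension-checking example needs no learning at all: each criterion is an explicit single-variable rule. A minimal sketch, assuming hypothetical names (`Tolerance`, `inspect_part`) and made-up nominal values:

```python
from dataclasses import dataclass


@dataclass
class Tolerance:
    nominal: float
    minus: float  # allowed deviation below nominal
    plus: float   # allowed deviation above nominal

    def accepts(self, measured: float) -> bool:
        return self.nominal - self.minus <= measured <= self.nominal + self.plus


def inspect_part(measurements, tolerances):
    """Explicit single-variable rules: the part fails if ANY dimension is out of tolerance."""
    failed = [name for name, tol in tolerances.items()
              if not tol.accepts(measurements[name])]
    return not failed, failed


# Hypothetical part with 20 dimensions, all nominally 10.00 mm +/- 0.05 mm.
tolerances = {f"dim_{i:02d}": Tolerance(10.00, 0.05, 0.05) for i in range(20)}
measurements = {name: 10.00 for name in tolerances}
measurements["dim_07"] = 10.08  # one dimension out of tolerance
print(inspect_part(measurements, tolerances))  # (False, ['dim_07'])
```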

Uses of neural networks can be characterized by the neural net’s role in the solution. The first category is where the neural net is the only analysis and decision-making tool in the system. The goal of such systems is minimizing the work and expertise required to accomplish machine vision. Such systems are often marketed with unrealistic claims.

About eight years ago, FSI Technologies evaluated a unit that was advertised with the words “just show it good and bad parts, tell it which are which, and it starts working. All other vision tools and expertise are not required.” This proved to be true only in special cases, and indeed it was only usable in special cases (“no expertise required”) within special cases (“no other tools required”) because the advertising claim was used as a design philosophy. “No expertise” actually meant that there were no provisions to apply expertise to tell the system what was most relevant (or useful) for differentiation and no way to “lift the hood” to understand why the system was not working (including to view the inputs to the neural net). And, “no other tools required” merely meant that no other machine-vision tools were included in the unit.

Later, FSI Technologies opted to use (and become a partner for) neural-network software from NeuroCheck, in which the inputs to the neural net are more controllable and the neural net is just one of many tools in a very large toolbox. More commonly, neural nets are used on portions of applications where the required decision depends on interactions among multiple variables, and/or where a thorough or explicit definition of the decision criteria is not available.

CLASSIFICATION AND SORTING

In identification tasks, it is advantageous or necessary to classify or sort objects into a set of results or categories using a range of features and attributes. This sorting (including the important subset of two-choice decisions) is arguably the most prevalent use of neural networks. A few of the many examples include species identification, color analysis that handles complex real-world variations, and automated cell analysis.

Another major class of examples is inspection for defects whose severity is a subjective combination of multiple variables. A specific example in this category is scratch analysis in surface inspection, where multiple characteristics of a defect must be combined to determine its severity, and where examples (but not an explicit definition) are available to define the defect. A long, thin scratch or a short, wide scratch may be acceptable, but a long, wide scratch is not.
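The scratch example also shows why independent single-variable limits are not enough. In the sketch below (hypothetical, normalized length and width values), no pair of separate length and width limits accepts both the long, thin and the short, wide scratch while rejecting the long, wide one, whereas a weighted combination of the two features can. The weights and threshold are illustrative assumptions; in a neural net they would be learned from examples rather than set by hand.

```python
# Hypothetical normalized scratch measurements (0 = tiny, 1 = large).
SCRATCHES = {
    "long/thin": (1.0, 0.2),
    "short/wide": (0.2, 1.0),
    "long/wide": (1.0, 1.0),
}
EXPECTED_REJECT = {"long/thin": False, "short/wide": False, "long/wide": True}


def reject_independent(length, width, max_length, max_width):
    """Separate single-variable limits: reject if either one is exceeded."""
    return length > max_length or width > max_width


def reject_combined(length, width, w_length=1.0, w_width=1.0, threshold=1.5):
    """Weighted combination of both features (weights are illustrative, not learned)."""
    return w_length * length + w_width * width > threshold


def independent_limits_can_match(steps=13):
    """Search a grid of (max_length, max_width) pairs for one that reproduces
    the expected decision on all three scratches."""
    grid = [i / 10 for i in range(steps)]  # 0.0 .. 1.2
    for max_l in grid:
        for max_w in grid:
            if all(reject_independent(length, width, max_l, max_w) == EXPECTED_REJECT[name]
                   for name, (length, width) in SCRATCHES.items()):
                return True
    return False


print(independent_limits_can_match())  # False
print({name: reject_combined(length, width) for name, (length, width) in SCRATCHES.items()})
```

The second line prints `{'long/thin': False, 'short/wide': False, 'long/wide': True}`, matching the desired decisions.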

Another example is character recognition, where the neural net recognizes graphical objects from inputs derived from their various graphical attributes. This provides the ability to learn and classify objects that are not characters, to deal with degraded images, and to do so at very high speed.

We developed a fruit classification system for tomatoes that illustrates attributes and advantages of a neural net machine vision system. Fruit classification is very demanding because stores and customers are very finicky about the tomatoes that are shipped to them. Although a veteran inspector may know what a particular customer wants, when asked to describe exactly what the inspection criteria are, the inspector may not be able to adequately do so. In reality, human inspectors are subconsciously looking at and weighting many different attributes of each tomato when they make their decisions.

To automate the classification process, a neural network can be used with other machine-vision tools and methods. Our classification system was based on an FSI FyrEye-3000 Inspection System, including an FSI CVS-700 machine-vision system running NeuroCheck 5.1 software.

A critically important part of developing a neural-net machine-vision system is the creation of a useful image. This includes a range of tasks, especially the design of the lighting and of the geometric relationship between the lighting, the target, the optics, and the camera, so that the desired attributes can be successfully handled by the next stage of the process. Undirected comparison of whole images is sometimes, but seldom, a good way to do automatic inspection. This rule applies both to standard algorithms and to systems based on neural networks. For classification problems, it usually means concentrating on a list of attributes that are likely to be useful.

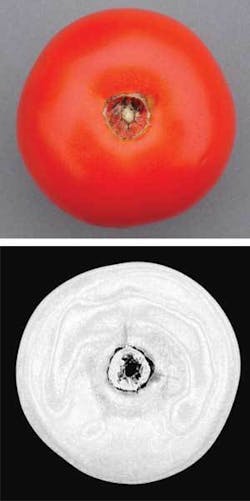

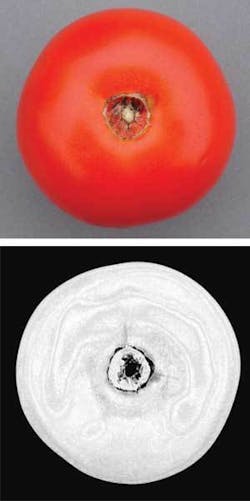

In our case, an individual tomato is first selected and defined (by its perimeter) as an object to be inspected. This perimeter definition (an ROI object) is available for later use, separately from the original image. Conversations with the inspector made it obvious that color is important. In a color analysis tool, a color class called “PerfectRed” was created: the inspector highlights various examples of ideal color, which the tool then integrates and stores as the “PerfectRed” definition. Then, in the “run” phase, the color tool transforms each pixel of the image into a gray-scale value indicating its degree of match to “PerfectRed.”
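The “PerfectRed” step can be sketched as follows. This illustrates the idea only and is not how NeuroCheck’s color tool works internally: it assumes the color class is summarized by the circular mean and spread of hue values taken from the inspector’s highlighted examples, and that the degree of match falls off linearly with hue distance. All names and the `falloff` parameter are hypothetical.

```python
import math


def hue_distance(a, b):
    """Shortest angular distance between two hues in degrees (hue wraps at 360)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def train_color_class(sample_hues):
    """Summarize highlighted example pixels by their circular mean hue and spread."""
    sx = sum(math.cos(math.radians(h)) for h in sample_hues)
    sy = sum(math.sin(math.radians(h)) for h in sample_hues)
    center = math.degrees(math.atan2(sy, sx)) % 360.0
    spread = max(hue_distance(h, center) for h in sample_hues)
    return center, max(spread, 1.0)  # avoid a zero-width class


def match_image(hue_image, color_class, falloff=3.0):
    """Gray level 255 = perfect match, falling linearly to 0 at falloff * spread."""
    center, spread = color_class
    return [[round(255 * max(0.0, 1.0 - hue_distance(h, center) / (falloff * spread)))
             for h in row]
            for row in hue_image]


# Red hues wrap around 0/360, hence the circular mean.
perfect_red = train_color_class([358.0, 0.0, 2.0])
print(match_image([[0.0, 12.0]], perfect_red))  # [[255, 0]]
```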

The lighting design was suboptimal and caused some areas to look falsely bright (glare) to the camera, but in this case the next step, the color transformation, still distinguished the underlying tomato color variations. For the transformation, RGB images were converted to HSI (hue, saturation, intensity) color space, and a “Hue only” transformation was used to retain the underlying tomato color variations while largely eliminating those caused by imperfect lighting. In the resulting image, the gray level of each pixel encodes its degree of match to the trained “PerfectRed” (see Fig. 1). The previously created ROI object was then combined with this special image. Objects consisting of the perimeter plus the section of the special image within it are now available for the neural net to learn from and then inspect.
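Why a “Hue only” transformation suppresses lighting effects can be checked numerically. The sketch below uses the standard textbook RGB-to-HSI hue formula; the pixel values are hypothetical. Scaling all three channels (a brighter surface) or adding the same amount to each (an idealized white glare) leaves the hue unchanged. Real glare that saturates a channel at 255 breaks this ideal, which is why lighting effects are only largely eliminated.

```python
import math


def hsi_hue(r, g, b):
    """Hue in degrees from the standard RGB -> HSI conversion (None for gray pixels)."""
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        return None  # R == G == B: hue is undefined
    ratio = max(-1.0, min(1.0, num / den))  # guard acos against rounding
    theta = math.degrees(math.acos(ratio))
    return theta if b <= g else 360.0 - theta


tomato = hsi_hue(180, 40, 30)     # hypothetical red tomato pixel
brighter = hsi_hue(234, 52, 39)   # same surface, 1.3x brighter
glare = hsi_hue(240, 100, 90)     # same surface plus 60 units of white
print(round(tomato, 2), round(brighter, 2), round(glare, 2))
```

All three printed hues agree, even though the pixels’ intensities differ considerably.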

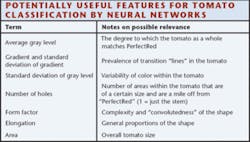

Next, the training data file is created by developing a list of features that might be relevant or useful for classification. These are then selected as inputs to the neural net (see table on p. 20). Two classes, “GoodEnough” and “NotGoodEnough,” were then created. In the first half of the training stage, the inspector was asked to classify a number of tomatoes. Each chosen class was recorded and linked to its object/image, and the two were stored as a pair in the training data file.
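A minimal sketch of turning an ROI object into a row of the training data file. The three features, the `defect_level` cut-off, and the CSV layout are hypothetical stand-ins for the article’s actual feature list; the input is assumed to be the gray-scale match image plus a same-sized ROI mask (1 inside the tomato’s perimeter).

```python
import csv
import io


def object_features(match_image, roi_mask, defect_level=128):
    """Hypothetical features computed from the match-to-PerfectRed gray levels
    of the pixels that lie inside the ROI (mask value 1)."""
    values = [v for row_v, row_m in zip(match_image, roi_mask)
              for v, inside in zip(row_v, row_m) if inside]
    area = len(values)
    return {
        "area": area,                                   # pixels inside the perimeter
        "mean_match": sum(values) / area,               # average closeness to PerfectRed
        "poor_fraction": sum(v < defect_level for v in values) / area,  # off-color share
    }


def write_training_file(records, stream):
    """Store each (features, class) pair as one CSV row."""
    writer = csv.writer(stream)
    writer.writerow(["area", "mean_match", "poor_fraction", "class"])
    for feats, label in records:
        writer.writerow([feats["area"], f"{feats['mean_match']:.2f}",
                         f"{feats['poor_fraction']:.3f}", label])


# Tiny hypothetical example: a 3x3 match image and ROI mask.
match = [[0, 250, 240], [0, 230, 60], [0, 0, 0]]
roi = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
feats = object_features(match, roi)
buf = io.StringIO()
write_training_file([(feats, "NotGoodEnough")], buf)  # class chosen by the inspector
print(buf.getvalue())
```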

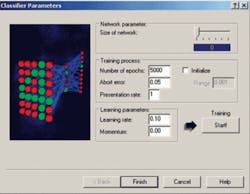

After this task was accomplished, a classifier was created. This involved selecting the training data and, optionally, changing various defaults such as the size of the neural net and the “abort error,” a measure of how accurate the net needs to be before the training process is allowed to terminate. During classifier creation, the weights of the links to the nodes in the hidden (“invisible”) layer of the neural net are adjusted iteratively until the error value falls below the target value (see Fig. 2).
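The classifier-creation loop can be illustrated with a tiny one-hidden-layer network trained by backpropagation, stopping once the mean squared error drops below an abort error. This is a generic sketch, not NeuroCheck’s training procedure; the class name, hidden-layer size, learning rate, and sample data are assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """One hidden layer of sigmoid units and one sigmoid output (1 = GoodEnough)."""

    def __init__(self, n_inputs, n_hidden=3, seed=1):
        rng = random.Random(seed)
        # Each weight list ends with a bias weight applied to a constant input of 1.
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs + 1)]
                         for _ in range(n_hidden)]
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]

    def forward(self, x):
        xb = list(x) + [1.0]
        hidden = [sigmoid(sum(w * v for w, v in zip(ws, xb))) for ws in self.w_hidden]
        out = sigmoid(sum(w * v for w, v in zip(self.w_out, hidden + [1.0])))
        return hidden, out

    def mse(self, samples, targets):
        return sum((self.forward(x)[1] - t) ** 2
                   for x, t in zip(samples, targets)) / len(samples)

    def train(self, samples, targets, lr=0.5, abort_error=0.02, max_epochs=50000):
        """Online backpropagation; stop as soon as the MSE is below abort_error."""
        for epoch in range(1, max_epochs + 1):
            for x, t in zip(samples, targets):
                xb = list(x) + [1.0]
                hidden, out = self.forward(x)
                # Output delta for squared error with a sigmoid output unit.
                d_out = (out - t) * out * (1.0 - out)
                # Hidden deltas use the output weights before they are updated.
                d_hidden = [d_out * self.w_out[j] * h * (1.0 - h)
                            for j, h in enumerate(hidden)]
                self.w_out = [w - lr * d_out * v
                              for w, v in zip(self.w_out, hidden + [1.0])]
                for j, d in enumerate(d_hidden):
                    self.w_hidden[j] = [w - lr * d * v
                                        for w, v in zip(self.w_hidden[j], xb)]
            error = self.mse(samples, targets)
            if error < abort_error:
                return epoch, error
        return max_epochs, error


# Hypothetical normalized features for four tomatoes and the inspector's classes.
samples = [(0.9, 0.1), (0.8, 0.2), (0.3, 0.7), (0.4, 0.9)]
targets = [1, 1, 0, 0]
net = TinyMLP(n_inputs=2)
epochs, mse = net.train(samples, targets)
print(f"stopped after {epochs} epochs, MSE = {mse:.4f}")
```

A smaller abort error demands a more accurate fit and therefore more iterations; `max_epochs` simply guards against training that never reaches the target.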

Next, a final pass/fail (“evaluate classes”) tool was added, which sets the required class and the confidence factor needed for a tomato to pass. Common practice is to set this threshold stringently enough that a bad tomato is never passed, which usually results in rejecting a few borderline good tomatoes.
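The final pass/fail step and the trade-off it makes can be sketched as a function of the required class and a minimum confidence. The function names, the thresholds, and the four hypothetical results (inspector’s class, predicted class, confidence) are illustrative only.

```python
def evaluate(predicted_class, confidence, required_class="GoodEnough", min_confidence=0.9):
    """Pass only if the net chose the required class with enough confidence."""
    return predicted_class == required_class and confidence >= min_confidence


def tally(results, min_confidence):
    """results: (inspector_class, predicted_class, confidence) triples.
    Returns (bad tomatoes passed, good tomatoes rejected)."""
    bad_passed = sum(1 for truth, pred, conf in results
                     if truth == "NotGoodEnough"
                     and evaluate(pred, conf, min_confidence=min_confidence))
    good_rejected = sum(1 for truth, pred, conf in results
                        if truth == "GoodEnough"
                        and not evaluate(pred, conf, min_confidence=min_confidence))
    return bad_passed, good_rejected


results = [
    ("GoodEnough", "GoodEnough", 0.97),
    ("GoodEnough", "GoodEnough", 0.72),     # borderline good tomato
    ("NotGoodEnough", "GoodEnough", 0.61),  # borderline bad tomato, misclassified
    ("NotGoodEnough", "NotGoodEnough", 0.95),
]
for threshold in (0.5, 0.9):
    print(threshold, tally(results, threshold))
# 0.5 (1, 0)
# 0.9 (0, 1)
```

Raising the threshold stops the misclassified bad tomato from passing at the cost of rejecting one borderline good tomato.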

TESTING AND VERIFICATION

Testing and verification in “run” mode allows changes to be made if required. Different tomatoes were classified manually and then by the system. The output shows the classifications plus the “confidence factor,” which is, in essence, a figure of merit for the whole classification process. A simple and extreme illustration: suppose the same tomato were run twice during training and the inspector erroneously placed it into two different classes. If that tomato (or one like it) is presented in run mode, the classification confidence factor will be very low. In essence, the neural net is reporting that, because it was told that tomatoes with the same attributes belong in two different classes, it cannot confidently classify them with the available information.
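The low-confidence effect of contradictory training labels can be reproduced with a single sigmoid unit trained by gradient descent. The sketch assumes, hypothetically, that the confidence factor behaves like |2p - 1| for an output probability p; the real tool’s definition is not given here, and all names and feature values are illustrative. When the same feature vector is labeled once in each class, the gradients cancel exactly and the output stays at p = 0.5, i.e., zero confidence; with consistent labels the confidence approaches 1.

```python
import math


def prob(w, b, x):
    """Output of a single sigmoid unit: estimated probability of class 1."""
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))


def train_logistic(samples, labels, lr=0.5, epochs=2000):
    """Batch gradient descent on the cross-entropy loss, starting from zero weights."""
    n = len(samples)
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            err = prob(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def confidence(p):
    """Hypothetical confidence factor: 0 at p = 0.5, 1 at p = 0 or 1."""
    return abs(2.0 * p - 1.0)


tomato = (0.8, 0.3)  # hypothetical feature vector
other = (0.2, 0.9)

# The same tomato labeled twice, once in each class:
w_conflict, b_conflict = train_logistic([tomato, tomato], [1, 0])
print(confidence(prob(w_conflict, b_conflict, tomato)))  # 0.0

# Consistent labels for two different tomatoes:
w_ok, b_ok = train_logistic([tomato, other], [1, 0])
print(round(confidence(prob(w_ok, b_ok, tomato)), 2))
```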

The test achieved a 100% match to the inspector’s classifications; this perfection is unusual and is not required for the system to perform properly. A few discrepancies on borderline tomatoes would be the norm, and the final pass/fail tool would then prevent the marginal ones from passing. Final verification would involve running hundreds of tomatoes (or more) without ever passing a bad one. If the system fails this verification, the various steps are modified until it passes. For the neural net, this may include changing any parameter, including the instances and classifications in the training data and the list of potentially relevant features provided to the neural net.
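Bookkeeping for the final verification run is simple; the key figure is the number of bad tomatoes passed, which must be zero. A sketch with a hypothetical record format (inspector’s class, whether the system passed the tomato) and made-up counts:

```python
def verification_report(run):
    """run: iterable of (inspector_class, system_passed) pairs from a verification batch."""
    total = bad_passed = good_rejected = 0
    for truth, passed in run:
        total += 1
        if truth == "NotGoodEnough" and passed:
            bad_passed += 1
        elif truth == "GoodEnough" and not passed:
            good_rejected += 1
    return {
        "total": total,
        "bad_passed": bad_passed,        # must be zero to pass verification
        "good_rejected": good_rejected,  # borderline good tomatoes sacrificed
        "verified": total > 0 and bad_passed == 0,
    }


# Hypothetical batch of 300 tomatoes.
run = ([("GoodEnough", True)] * 200 + [("GoodEnough", False)] * 5
       + [("NotGoodEnough", False)] * 95)
print(verification_report(run))
# {'total': 300, 'bad_passed': 0, 'good_rejected': 5, 'verified': True}
```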

While neural net training takes time, the software makes experimentation faster and easier. The entire final inspection program (including image acquisition) consists of just nine lines of code and the neural net accomplishes its classification in less than 1/1000 s (see Fig. 3).

FRED D. TUREK is COO of FSI Technologies, Lombard, IL, USA; www.fsinet.com.
