Multispectral imaging offers new tools

The characterization of nonhomogeneous materials is one of many applications for this emerging technology.

By Jens Michael Carstensen

For the past several decades, imaging and machine vision have been an obvious choice for the characterization of nonhomogeneous materials. Geometric measurements such as counting and assessing size and size distribution are now implemented in inexpensive off-the-shelf systems. The same goes for detecting highly standardized patterns such as machine-printed characters, barcodes, and Data Matrix codes. Many systems can also measure shape and, to some degree, color. However, obtaining proper radiometric measurements, including color measurements, with vision systems requires effective handling of a number of critical issues that arise from the inherent properties of such systems.

The pixel values are typically a composite of many different optical effects, including diffuse reflectance, specular reflectance, topography, fluorescence, illumination geometry, and spectral sensitivity. The precise composition will typically depend on pixel position. Vision systems must also deal with heterogeneous materials, so simplifying assumptions about these effects, such as spatial smoothness, are less likely to hold. The combination of geometry and radiometry in every measurement thus adds a great deal of complexity, but it also offers a very large measurement potential.

An effective way of dealing with these issues uses a twofold strategy:

- Carefully design the vision system with respect to the task at hand; optimize illumination geometry; and focus on reproducibility and traceability of the measurements.

- Provide the necessary redundancy in the imaging system to enable meaningful statistical analysis of the image data.

There are two powerful means of obtaining effective redundancy: using multiple wavelengths or spectral sensitivity curves, and using multiple illumination geometries. The first approach is called multispectral vision and the second multiray vision. While multispectral techniques mainly focus on surface chemistry and color in a general sense, multiray techniques are more oriented toward physical surface properties such as shape, topography, and gloss. Photometric stereo, a well-known technique for estimating shape from shading, is a special case of multiray vision. Multispectral vision and multiray vision can obviously be combined to further enhance redundancy.
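To illustrate how multiple illumination geometries provide redundancy, here is a minimal sketch of classic Lambertian photometric stereo. It assumes a matte (Lambertian) surface, distant light sources with known unit directions, and no shadows or specular highlights. It is the textbook formulation, not the author's multiray implementation, which the article does not describe. With three or more images, each pixel gives an overdetermined linear system whose least-squares solution yields albedo times surface normal.

```python
import numpy as np

def photometric_stereo(images, light_dirs):
    """Estimate per-pixel albedo and unit surface normals.

    images: array of shape (k, h, w), one gray-level image per illumination.
    light_dirs: array of shape (k, 3), unit light direction for each image.
    Assumes a Lambertian surface, distant point sources, and no shadows.
    """
    k, h, w = images.shape
    intensities = images.reshape(k, -1)  # one column of k intensities per pixel
    # Solve light_dirs @ g = intensities in the least-squares sense for all
    # pixels at once; each column of g is albedo * normal for one pixel.
    g, *_ = np.linalg.lstsq(light_dirs, intensities, rcond=None)
    albedo = np.linalg.norm(g, axis=0)
    normals = g / np.maximum(albedo, 1e-12)  # avoid division by zero
    return albedo.reshape(h, w), normals.reshape(3, h, w)
```

With more than three light directions the extra equations act as redundancy, so the least-squares residual can flag pixels that violate the Lambertian assumption, such as gloss or shadows.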

MULTISPECTRAL APPROACH

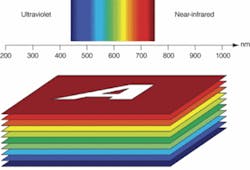

A multispectral image is a stack of images, or bands, each representing the intensity image at a given wavelength (see Fig. 1). A standard gray-level image may be seen as a one-band image and an RGB image as a three-band image. Some multispectral images have several hundred bands; these are typically referred to as hyperspectral images. In the present context we acquire images with up to 20 spectral bands. With 2 Mpixels/band this gives 40 Mpixels, and with 16-bit sampling a full representation of the image will require 80 Mbytes of storage.
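The storage figure follows directly from bands × pixels per band × bytes per sample. The sketch below assumes decimal units (1 Mbyte = 10^6 bytes) and uncompressed storage, which matches the article's arithmetic.

```python
def cube_storage_bytes(bands, pixels_per_band, bits_per_sample):
    """Raw, uncompressed storage for a multispectral image cube, in bytes."""
    return bands * pixels_per_band * bits_per_sample // 8

total = cube_storage_bytes(bands=20, pixels_per_band=2_000_000, bits_per_sample=16)
print(total / 1e6, "Mbytes")  # 80.0 Mbytes
```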

From a spectroscopic viewpoint, a multispectral image provides a spectrum in each pixel. Under the right circumstances, this pixel spectrum may reveal much more than an accurate color measurement. Even with a standard CCD camera, it may reveal specific features such as the surface chemistry in each pixel. These facts are well known in fields such as spectroscopy, chemometrics, and statistics, but there they are mostly applied to homogeneous samples. Remote sensing has been the main area for multispectral image analysis for many years, but recently surface chemistry mapping through multispectral imaging has gained momentum in vision technology as well.
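Treating each pixel as a spectrum means that a (bands, height, width) cube can be reshaped into one spectrum per pixel and compared against a reference. The sketch below uses the spectral angle mapper, a standard remote-sensing similarity measure. It is offered as an illustration, not as the method used in the system described here. The reference spectrum is a hypothetical input, for example the mean spectrum of a known material. Because the angle ignores vector length, it is insensitive to overall brightness changes.

```python
import numpy as np

def spectral_angle_map(cube, reference):
    """Angle (radians) between each pixel spectrum and a reference spectrum.

    cube: (bands, h, w) multispectral image; reference: (bands,) spectrum.
    Small angles mean a similar spectral shape regardless of overall brightness.
    """
    bands, h, w = cube.shape
    spectra = cube.reshape(bands, -1).astype(float)  # one column per pixel
    ref = np.asarray(reference, dtype=float)
    dots = ref @ spectra
    norms = np.linalg.norm(spectra, axis=0) * np.linalg.norm(ref)
    cos = np.clip(dots / np.maximum(norms, 1e-12), -1.0, 1.0)
    return np.arccos(cos).reshape(h, w)
```

Thresholding the resulting angle image gives a simple per-pixel map of where a material resembling the reference is present.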

AN IMPLEMENTATION

We have implemented multispectral imaging in our VideometerLab system, which provides high-resolution multispectral images at wavelengths from 230 to 1050 nm. The multispectral measurements are easily acquired with a standard black-and-white CCD camera (1280 × 960 pixels) by strobing light consecutively from LEDs with different spectral characteristics into an integrating sphere (see Fig. 2). The light is reflected internally by the diffuse white inner surface of the sphere and illuminates the object under investigation with very diffuse and homogeneous light.
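The acquisition principle, with one LED strobe per monochrome frame stacked into a cube, can be sketched as follows. `strobe_and_capture` is a hypothetical callback standing in for the camera and strobe-controller interface, which the article does not specify. The LED wavelengths in the usage example are likewise made up.

```python
import numpy as np

def acquire_cube(led_channels, strobe_and_capture):
    """Build a (bands, h, w) cube by strobing one LED channel per frame.

    led_channels: sequence of channel identifiers (here, nominal wavelengths).
    strobe_and_capture: hypothetical callable that fires one LED channel into
    the sphere and returns the monochrome camera frame as a 2-D array.
    """
    frames = [np.asarray(strobe_and_capture(ch), dtype=np.uint16)
              for ch in led_channels]
    return np.stack(frames, axis=0)

def fake_capture(wavelength_nm):
    # Stand-in for real hardware: a flat 1280 x 960 frame whose level
    # simply equals the nominal wavelength, so the result is easy to check.
    return np.full((960, 1280), wavelength_nm, dtype=np.uint16)

cube = acquire_cube([405, 470, 525, 630, 850, 940], fake_capture)
print(cube.shape)  # (6, 960, 1280)
```

Because a single camera captures every band through the same optics, the bands are inherently pixel-registered. No image alignment step is needed before per-pixel spectral analysis.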

Light sources other than LEDs can be used, and the setup can be combined with backlight strobes such as diffuse multispectral (another sphere below the sample), collimated, darkfield, or brightfield backlight. The strobing is performed by a 20-channel strobe controller that provides precisely specified, stable strobes of light. A multispectral image with high radiometric accuracy and high resolution can be acquired in a few seconds.
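High radiometric accuracy usually also requires converting raw counts to reflectance. The article does not say how VideometerLab calibrates. The sketch below shows a common dark/white reference correction as an assumption. It uses a dark frame captured with the strobes off and a frame of a white target of known reflectance, both taken in the same setup. The pixel-wise division also removes residual illumination nonuniformity in each band.

```python
import numpy as np

def calibrate_reflectance(raw, dark, white, white_reflectance=1.0):
    """Convert a raw cube to relative reflectance, band by band, pixel by pixel.

    raw, dark, white: (bands, h, w) arrays from the same setup.
    white_reflectance: assumed scalar reflectance of the white reference target.
    """
    raw = raw.astype(float)
    dark = dark.astype(float)
    white = white.astype(float)
    denom = np.maximum(white - dark, 1e-9)  # guard against dead or saturated pixels
    return white_reflectance * (raw - dark) / denom
```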

For many applications, measuring up to 20 different bands, including the near-infrared (near-IR), will provide much more specific information about the sample than a trichromatic (RGB) image. The relative presence of some wavelengths and absence of others is a very specific signature of many material properties, expressed most prominently through reflection, transmission, fluorescence, and scattering. In most practical situations, multispectral imaging with 20 carefully selected spectral bands will perform as well as hyperspectral imaging of the same spatial quality.

Multispectral imaging can be applied to many different situations, including characterization of materials according to surface chemistry and characterization of component distribution in composite materials. It can also be used to remove irrelevant material from the analysis and to find defects and foreign matter. For example, a near-IR band can make it easy to isolate a coffee spot on a textile fabric, so that the textile structure and the spot are easily separated. This enables precise characterization of the spot, the textile, or a combination of the two. Even if the colors are visually similar or the product is transparent, multispectral imaging will often reveal the difference (see Vision Systems Design, October 2006, p. 69).
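Isolating a spot with a single near-IR band and then characterizing each region separately might look like the sketch below. The band index, the threshold, and the assumption that the spot appears darker than the fabric in that band are all hypothetical. A real application would choose them from measured spectra. If the spot were brighter instead, the comparison would flip.

```python
import numpy as np

def split_spot_and_fabric(cube, nir_band, threshold):
    """Segment a spot using one near-IR band, then summarize each region.

    Assumes (hypothetically) that the spot is darker than the fabric in band
    `nir_band`. Returns the spot mask and the mean spectrum of spot and fabric.
    """
    mask = cube[nir_band] < threshold               # True where the spot is
    spot_spectrum = cube[:, mask].mean(axis=1)      # (bands,) mean over spot pixels
    fabric_spectrum = cube[:, ~mask].mean(axis=1)   # (bands,) mean over fabric pixels
    return mask, spot_spectrum, fabric_spectrum
```

Once the mask is available, the full spectrum of every band can be analyzed for the spot and the fabric independently, even though only one band was used for segmentation.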

Likewise, a transparent liquid such as water can be made clearly visible through multispectral vision, for example in a pharmaceutical cartridge containing a water solution (see Fig. 3). In the cartridge, water absorption is directly visible, as is the air in the capsule. Such images are typically obtained with indium gallium arsenide (InGaAs) cameras that are sensitive to wavelengths in the 1000-2000-nm range (see “Dual-band camera registers visible and near-IR images,” p. x). The difference here is that these images can also be made with a standard silicon-based camera.
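One simple way to make water visible with a silicon sensor is a normalized difference between a band where water absorbs and a nearby reference band. Water has a weak absorption feature near 970 nm, within the range of silicon sensors. A reference band around 900 nm is an illustrative assumption. The band indices below are hypothetical, and the article does not state which bands or algorithm produced Fig. 3.

```python
import numpy as np

def water_index(cube, ref_band, water_band):
    """Normalized difference between a reference band and a water-absorbing band.

    Values near 0 mean no extra absorption in the water band; larger values
    mean the water band is darker than the reference, as expected where water is.
    """
    ref = cube[ref_band].astype(float)
    wat = cube[water_band].astype(float)
    return (ref - wat) / np.maximum(ref + wat, 1e-9)
```

Normalizing by the sum of the two bands makes the index largely independent of overall illumination level, so only the spectral contrast remains.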

In addition, material properties can be assessed from reflectance spectra or scattering effects. For example, the fat content of minced meat can be assessed while disregarding its significant topographical variation. Likewise, textile fabric can be assessed using multispectral imaging (see Fig. 4). Many other applications could benefit from low-cost multispectral imaging, including inspection of food quality and safety; monitoring of pharmaceuticals and medical devices; checking of materials such as fur, leather, seeds, textiles, metal, paper, and pulp; imaging of printed matter; and forensics.
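As an illustration of assessing a composition while ignoring topography, the sketch below estimates a fat area fraction with a nearest-reference classifier. This is a swapped-in technique, not the author's published method. It assumes that shading from surface relief scales all bands of a pixel roughly equally. Under that assumption, dividing each spectrum by its sum removes most of the topographic effect before classification. The fat and lean reference spectra are hypothetical inputs.

```python
import numpy as np

def fat_fraction(cube, fat_ref, lean_ref):
    """Area fraction of pixels whose normalized spectrum is closer to fat than lean.

    cube: (bands, h, w); fat_ref, lean_ref: (bands,) hypothetical reference spectra.
    """
    bands = cube.shape[0]
    spectra = cube.reshape(bands, -1).astype(float).T  # (pixels, bands)
    # Divide out overall brightness so shading from surface relief (assumed to
    # scale all bands equally) does not drive the decision.
    spectra /= np.maximum(spectra.sum(axis=1, keepdims=True), 1e-12)
    fat = np.asarray(fat_ref, dtype=float) / np.sum(fat_ref)
    lean = np.asarray(lean_ref, dtype=float) / np.sum(lean_ref)
    d_fat = np.linalg.norm(spectra - fat, axis=1)
    d_lean = np.linalg.norm(spectra - lean, axis=1)
    return float(np.mean(d_fat < d_lean))
```

Area fraction is only a proxy for fat content. A production method would typically be calibrated against reference chemistry.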

Multispectral imaging technology has the potential to become an important tool for future measurements of nonhomogeneous samples. The technology is certainly not constrained to the wavelength range between 230 and 1050 nm, although going beyond this range will mean more specialized and often more expensive sensors with lower resolution and image quality. High-performing, relatively inexpensive systems are already available or will soon become available, providing accurate results in a broad range of applications along with the reproducibility needed for useful database generation and data mining.

Jens Michael Carstensen is chief technology officer at Videometer, Hørsholm, Denmark; www.videometer.com.

Dual-band camera registers visible and near-IR images

High-speed inspection systems for monitoring moving objects often struggle to obtain sufficient contrast between desirable and undesirable product attributes. To address this challenge, we have combined visible and near-infrared (near-IR) imaging in a single, dual-band camera that enables accurate sorting algorithms. Rather than trying to coregister the output of separate visible and IR cameras, this dual-band camera registers data from the same resolved spot at the same time and across a 60° field of view in both the 400- to 900-nm wavelength band and the 1100- to 1700-nm band. To ensure spatial registration, the two spectral bands must propagate with high efficiency through one lens system and be detected within an integrated package that includes a beamsplitter, an InGaAs array, and a CCD sensor.

The camera produces B/W images from the CCD and InGaAs arrays. These two images can be merged into a single false-color-coded composite picture. The InGaAs picture shows contrast not available in the visible spectrum. The composite merges the CCD data (coded in shades of blue) with the InGaAs data (coded in shades of red). This merged image allows a user to rapidly visualize and process those portions of an image having different contrast in the two spectral bands.
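The false-color merge described above can be sketched as follows. The sidebar does not say how intensities are scaled. An independent min-max stretch per image is assumed here. The sketch also assumes the two images are already on the same pixel grid; with a lower-resolution InGaAs array, that means the near-IR image has first been resampled to the CCD geometry.

```python
import numpy as np

def dual_band_composite(ccd, ingaas):
    """Merge two registered monochrome images into one RGB false-color image.

    ccd (visible) -> blue channel, ingaas (near-IR) -> red channel, green = 0.
    Each input is stretched independently to 0..255 (an assumed choice).
    """
    if ccd.shape != ingaas.shape:
        raise ValueError("images must be registered to the same pixel grid")

    def to_uint8(img):
        img = img.astype(float)
        lo, hi = img.min(), img.max()
        scaled = (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)
        return (scaled * 255).round().astype(np.uint8)

    rgb = np.zeros(ccd.shape + (3,), dtype=np.uint8)
    rgb[..., 0] = to_uint8(ingaas)  # red: near-IR intensity
    rgb[..., 2] = to_uint8(ccd)     # blue: visible intensity
    return rgb
```

Regions bright in both bands appear magenta, while pure red or pure blue regions mark contrast present in only one band. That makes band-specific differences easy to spot.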

Potential applications span several industries. The high moisture sensitivity of near-IR images enables the monitoring of wet-paper-processing steps and pharmaceutical products, as well as the separation of blood from underlying tissue during surgical procedures. Visually dark fabrics and metals such as copper and gold appear brighter in the near-IR, enabling defect detection and sorting applications. The near-IR also offers more contrast between meals and plastic trays, so that automated food-placement lines can be monitored to minimize spillage (see figure).

G. E. CARVER, principal scientist

S. RANGWALA, vice president, product development

Princeton Lightwave, Cranbury, NJ, USA

www.princetonlightwave.com.