FOOD & BEVERAGE: Laser triangulation system measures food products

Producers of packaged meat products must ensure that the meat they pack is accurately portioned and cut. Deviating from the specified weight by more than half an ounce on a 16-oz steak, for example, can be expensive. Similarly, cutting the meat into undersized portions will result in unhappy customers, especially if the meat is to be offered to some of the world’s most famous restaurants.

To accomplish economical product portioning, manufacturers of food slicing and packaging equipment are incorporating volumetric scanning systems into their products. These systems generate a 3-D model of the meat, from which preprogrammed parameters such as weight and thickness can be used to evaluate various slicing configurations and select the highest-yield cuts.

“In the design of these systems,” says Ignazio Piacentini, CEO of ImagingLab (Lodi, Italy; www.imaginglab.it), “a three-dimensional model of the food must be generated before the slicing process can begin.” Piacentini and his colleagues teamed up with Aqsense (Girona, Spain; www.aqsense.com) to build a prototype 3-D scanner that was shown at VISION 2009, held in Stuttgart, Germany, in November (see Fig. 1).

To create a 3-D model, structured light is used to illuminate the product as it traverses the scanner’s conveyor belt. Three ZM-18 laser line emitters from Z-Laser Optoelektronik (Freiburg, Germany; www.z-laser.com), positioned at 120° intervals around the gantry, illuminate the complete 360° surface of the meat. The light reflected from the surface of the meat is captured by three MV-D1024E-3D01-160-CL Camera Link cameras from Photonfocus (Lachen, Switzerland; www.photonfocus.com) that are also positioned at 120° intervals around the gantry. Digital output from these three cameras is then transferred to a host PC using three NI-PCIe-1427 Camera Link frame grabbers from National Instruments (Austin, TX, USA; www.ni.com).

To generate a 3-D image, ImagingLab adopted the SAL3D library from Aqsense, which provides a set of tools for laser peak detection, calibration, merging of multiple images, Z-mapping, and geometric computation. Running the Aqsense peak detection algorithm within the FPGA of each Photonfocus camera speeds up the estimation of the point of maximum intensity of the reflected laser line, whose intensity profile is approximately Gaussian (see “Reading the Shapes,” Vision Systems Design, March 2008).
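The idea behind sub-pixel laser peak detection can be illustrated with a center-of-gravity estimate over a single image column. This is a minimal sketch of a common generic technique, not the SAL3D algorithm, whose internals are not described here; the function name `peak_center_of_gravity` and the `threshold` parameter are hypothetical.

```python
def peak_center_of_gravity(column, threshold=0.0):
    """Estimate the sub-pixel row of the laser line in one image column.

    `column` is a sequence of pixel intensities, one per sensor row.
    Pixels at or below `threshold` are ignored (assumed background).
    Returns None when no pixel exceeds the threshold.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for row, intensity in enumerate(column):
        if intensity > threshold:
            weight = intensity - threshold  # background-subtracted weight
            weighted_sum += row * weight
            total_weight += weight
    if total_weight == 0.0:
        return None  # no laser line visible in this column
    return weighted_sum / total_weight
```

Applied to every column of a frame, an estimator of this kind reduces each image to one profile of peak positions, which is far less data to move to the host than the full image.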

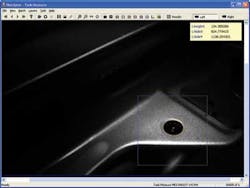

After this peak is computed in all three cameras, the data are transferred to the PC and a 3-D image is generated by merging multiple point cloud images. To build the user interface for the system, Aqsense and ImagingLab integrated the SAL3D library within National Instruments’ LabVIEW software (see Fig. 2). “Because the SAL3D library can now be used within LabVIEW,” says Piacentini, “it can be used with NI’s ImagingLab robotics library by other systems integrators wishing, for example, to build 3-D robotic vision systems.”
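Merging the three views amounts to expressing each camera's points in one shared coordinate frame. The sketch below assumes, purely for illustration, that each camera reports points in its own local frame and that the frames differ only by a rotation about the conveyor's travel axis (taken here as y). A real system would use the full transform obtained from calibration, and the function names are hypothetical rather than part of SAL3D.

```python
import math


def rotate_about_travel_axis(point, angle_deg):
    """Rotate an (x, y, z) point about the y axis (assumed travel direction)."""
    x, y, z = point
    a = math.radians(angle_deg)
    return (x * math.cos(a) + z * math.sin(a),
            y,
            -x * math.sin(a) + z * math.cos(a))


def merge_profiles(profiles_by_angle):
    """Merge per-camera point lists into one cloud in a shared frame.

    `profiles_by_angle` maps a camera mounting angle in degrees
    (for example 0, 120, 240) to a list of (x, y, z) points
    expressed in that camera's local frame.
    """
    merged = []
    for angle_deg, points in profiles_by_angle.items():
        merged.extend(rotate_about_travel_axis(p, angle_deg) for p in points)
    return merged
```

For example, `merge_profiles({0: [(0, 5, 1)], 120: [(0, 5, 1)], 240: [(0, 5, 1)]})` places three points evenly around a circle of radius 1 at belt position y = 5.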

Before the 3-D scanner can be used for food processing, however, the system must be calibrated. To achieve this, Aqsense developed a 3-D metal calibration object, accurate to 50 μm, that is first imaged by the structured laser light and camera system.
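One simple way to picture what such a calibration produces is a mapping from the detected laser position in the image to a physical height. The sketch below fits a straight line by least squares to measurements of known heights on a reference object. It is an illustrative assumption, not the SAL3D procedure: real triangulation geometry is generally nonlinear, and the function name and sample values are hypothetical.

```python
def fit_linear_calibration(pixel_rows, known_heights_mm):
    """Least-squares fit of height_mm = slope * pixel_row + intercept.

    `pixel_rows` are detected laser peak positions on the sensor and
    `known_heights_mm` are the matching reference heights of the
    calibration object. Returns (slope, intercept).
    """
    n = len(pixel_rows)
    if n < 2 or n != len(known_heights_mm):
        raise ValueError("need at least two matched measurements")
    mean_row = sum(pixel_rows) / n
    mean_height = sum(known_heights_mm) / n
    sxx = sum((r - mean_row) ** 2 for r in pixel_rows)
    if sxx == 0:
        raise ValueError("pixel rows must not all be identical")
    sxy = sum((r - mean_row) * (h - mean_height)
              for r, h in zip(pixel_rows, known_heights_mm))
    slope = sxy / sxx
    intercept = mean_height - slope * mean_row
    return slope, intercept


# Hypothetical measurements: three steps of a reference object.
slope, intercept = fit_linear_calibration([100, 200, 300], [0.0, 10.0, 20.0])
height_at_row_250 = slope * 250 + intercept
```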

“Imaging and analyzing such a precisely formed object,” says Ramon Palli, general manager of Aqsense, “allows the tools within SAL3D to accurately calibrate the system.” After this calibration process, the system can then compute the volume of food products to within 0.1%. These data are then used by the slicing system to cut portions of the food at a constant volume and, assuming uniform density, constant weight.
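Constant-volume portioning can be sketched as follows: compute the cross-sectional area at each scan position, integrate along the belt, and place a cut each time the accumulated volume reaches the target. This is a minimal illustration under stated assumptions, not the method used by the slicing system: contours are taken as ordered (x, z) vertices, area is assumed to vary linearly between scans, and the function names are hypothetical.

```python
def polygon_area(contour):
    """Area of a closed cross-section from ordered (x, z) vertices (shoelace formula)."""
    twice_area = 0.0
    n = len(contour)
    for i in range(n):
        x0, z0 = contour[i]
        x1, z1 = contour[(i + 1) % n]
        twice_area += x0 * z1 - x1 * z0
    return abs(twice_area) / 2.0


def cut_positions(positions, areas, target_volume):
    """Belt positions at which each successive portion reaches target_volume.

    `positions` are scan locations along the belt and `areas` the
    cross-sectional areas measured there. Slab volumes use the
    trapezoidal rule; a cut inside a slab is placed by linear
    interpolation of volume, which is exact only for constant area.
    """
    if target_volume <= 0:
        raise ValueError("target_volume must be positive")
    cuts = []
    carried = 0.0  # volume accumulated since the last cut
    for i in range(1, len(positions)):
        y0, y1 = positions[i - 1], positions[i]
        slab = 0.5 * (areas[i - 1] + areas[i]) * (y1 - y0)
        cut_at = y0
        remaining = slab
        while slab > 0 and carried + remaining >= target_volume:
            needed = target_volume - carried
            cut_at += (needed / slab) * (y1 - y0)
            cuts.append(cut_at)
            remaining -= needed
            carried = 0.0
        carried += remaining
    return cuts


# A uniform 2 x 2 bar scanned every unit of length from 0 to 10.
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
scan_positions = list(range(11))
scan_areas = [polygon_area(square)] * 11
portion_cuts = cut_positions(scan_positions, scan_areas, target_volume=10.0)
```

If the product's density is known and roughly uniform, a target weight converts to a target volume by dividing by that density.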

According to Piacentini, prospective customers in the food industry will benefit from this development because of the increased accuracy and speed it brings to constant-volume and constant-weight portioning. “Minimizing the waste in these automated portioning machines,” he says, “dramatically increases the yield.”