SERVICE ROBOTS - Vision-guided robot heads for space

To support the work of astronauts on the International Space Station (ISS), NASA (Washington, DC, USA; www.nasa.gov), General Motors (Detroit, MI, USA; www.gm.com), and Oceaneering Space Systems (Houston, TX, USA; www.oceaneering.com) are developing a humanoid robot known as R2. Although the robot has the appearance and proportions of an astronaut, R2 has no lower torso; its body is fixed to a stationary rack. Designed to assist astronauts during extravehicular activities (EVAs), the robot combines tactile, force, position, range-finding, and vision sensors that allow it to perform functions such as object recognition and manipulation.

“Of the many planned ISS tasks for R2, sensing and manipulation of soft-goods materials used to hold a set of tools is among the most challenging,” says Brian Hargrave, Robonaut ISS applications lead at the Robotics and Automation group of Oceaneering Space Systems. “To remove a tool, the box must be identified, opened, the tool removed, and the box closed. Despite the base being secured, the fabric lid tends to float in zero-gravity conditions and can fold in on itself in unpredictable ways. To perform this task, therefore, lid-state estimation and grasp-planning techniques must be coordinated using a combination of computer vision and motion-planning methods.”

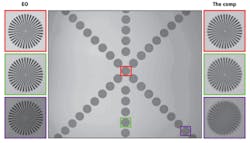

In the design of the R2 robot, a 3-D time-of-flight (TOF) imager will be used in conjunction with a stereo camera pair to provide depth information and visible stereo images to the system. A SwissRanger SR4000 TOF camera from MESA Imaging (Zurich, Switzerland; www.mesa-imaging.ch) will generate the 3-D positional information, while two Prosilica GC2450 GigE Vision cameras from Allied Vision Technologies (Stadtroda, Germany; www.alliedvisiontec.com) will capture color stereo images. Halcon 9.0 image-processing software from MVTec Software (Munich, Germany; www.mvtec.com) will be used to integrate the various sensor data types in a single development environment.

To achieve robust, automatic recognition and pose estimation of objects, complex patterns from the stereo and TOF sensors will be analyzed. The TOF sensor separates the background from the objects in front of the robot, so that the background pixels in the camera images can be ignored. Processing power is limited, however, because a single laptop computer connected to the robot must both control the robot’s custom-built tactile, force, and position sensors and perform object recognition.
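The background-suppression step described above can be illustrated with a minimal Python sketch. It is not the Halcon implementation used on R2. It assumes the TOF depth map has already been registered to the camera image so that both share one pixel grid, and that a reading of 0.0 marks an invalid pixel. The function names and the 1.5 m range limit are hypothetical, chosen only for illustration.

```python
from typing import List

Grid = List[List[float]]


def foreground_mask(depth_m: Grid, max_range_m: float) -> List[List[bool]]:
    """Return True where a valid depth reading lies within max_range_m.

    Assumption: a depth of 0.0 (or less) marks an invalid TOF pixel.
    """
    return [[0.0 < d <= max_range_m for d in row] for row in depth_m]


def suppress_background(image: Grid, mask: List[List[bool]],
                        fill: float = 0.0) -> Grid:
    """Keep image pixels where the mask is True; replace the rest with fill."""
    return [[pixel if keep else fill for pixel, keep in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


if __name__ == "__main__":
    # Toy 2x3 depth map in meters and a matching grayscale image.
    depth = [[0.6, 0.7, 3.2],
             [0.0, 0.8, 3.1]]
    gray = [[10, 20, 30],
            [40, 50, 60]]
    mask = foreground_mask(depth, max_range_m=1.5)  # hypothetical limit
    print(suppress_background(gray, mask))
```

In a real system the depth map and the color images come from different viewpoints and resolutions, so the registration assumed here would itself require calibration between the TOF imager and the stereo cameras.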

Consequently, regions of interest (ROIs) within the images will first be segmented based on color, pixel intensity, or texture, and pattern-recognition techniques will then be applied within them. “Searching for a pattern in a small ROI is much faster than searching for a pattern in a large image. Simple pattern recognition will be used to find the ROI, while complex pattern recognition will be used inside the ROI,” says Hargrave.
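The two-stage search Hargrave describes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the method used on R2: a plain intensity threshold stands in for the color, intensity, or texture segmentation, and an exhaustive sum-of-absolute-differences (SAD) template search stands in for the more complex pattern recognition. All function names are hypothetical.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), end-exclusive


def bright_roi(image: Image, threshold: int) -> Optional[Box]:
    """Cheap first pass: bounding box of all pixels at or above threshold."""
    hits = [(r, c) for r, row in enumerate(image)
            for c, value in enumerate(row) if value >= threshold]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows) + 1, max(cols) + 1


def best_match(image: Image, template: Image,
               box: Box) -> Tuple[Optional[Tuple[int, int]], int]:
    """Costlier second pass: exhaustive SAD template search inside box.

    Returns the best (row, col) position, or None if the template does not
    fit in the box, together with the number of positions tested.
    """
    top, left, bottom, right = box
    th, tw = len(template), len(template[0])
    best_pos, best_cost, tested = None, None, 0
    for r in range(top, bottom - th + 1):
        for c in range(left, right - tw + 1):
            cost = sum(abs(image[r + i][c + j] - template[i][j])
                       for i in range(th) for j in range(tw))
            tested += 1
            if best_cost is None or cost < best_cost:
                best_pos, best_cost = (r, c), cost
    return best_pos, tested


if __name__ == "__main__":
    # 8x8 dark image with one 3x3 bright patch whose center pixel is dimmer.
    img = [[0] * 8 for _ in range(8)]
    for r in range(4, 7):
        for c in range(3, 6):
            img[r][c] = 9
    img[5][4] = 5
    tmpl = [[5, 9],
            [9, 9]]
    roi = bright_roi(img, threshold=5)
    print("ROI search:       ", best_match(img, tmpl, roi))
    print("Full-image search:", best_match(img, tmpl, (0, 0, 8, 8)))
```

On this toy image both searches find the same position, but the ROI search evaluates 4 candidate positions where the full-image search evaluates 49, which is the saving the quotation refers to.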

To locate the soft-goods box, Hargrave and his colleagues will use texture segmentation functions within Halcon 9.0. Because the box lid fasteners are composed of latches and grommets, these elements will be identified in the stereo images by using shape-based matching techniques. These same techniques will be used to search for fasteners in any orientation once the lid is opened and floating in zero gravity.

To compute the pose of these lid fasteners, the location of the fastener components in each stereo image must be identified. Halcon’s built-in classification techniques will be used with stereo-pair calibration and TOF positional information to perform pattern-recognition functions. In this way, the system will generate a feasible trajectory for the robot to fold the lid to open the box.

Contributed by Lutz Kreutzer, MVTec Software, Munich, Germany
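To illustrate why a fastener must be located in both stereo images, the following Python sketch recovers a 3-D point from one matched pixel pair using the standard pinhole relation for a rectified stereo rig (depth = focal length × baseline / disparity). It is not Halcon's calibration API. The function names, the camera parameters in the example, and the simple tolerance check against a TOF reading are all assumptions made for illustration.

```python
from typing import Tuple


def triangulate_rectified(u_left: float, u_right: float, v: float,
                          focal_px: float, baseline_m: float,
                          cx: float, cy: float) -> Tuple[float, float, float]:
    """Return (x, y, z) in meters in the left-camera frame.

    Assumes a calibrated, rectified pair: the same scene point appears on
    the same image row v in both views, and both cameras share focal_px
    (focal length in pixels) and principal point (cx, cy).
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = focal_px * baseline_m / disparity
    x = (u_left - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z


def agrees_with_tof(stereo_z_m: float, tof_z_m: float, tol_m: float) -> bool:
    """Hypothetical sanity check: stereo depth within tol_m of the TOF depth."""
    return abs(stereo_z_m - tof_z_m) <= tol_m


if __name__ == "__main__":
    # Illustrative numbers only; they are not the R2 camera parameters.
    point = triangulate_rectified(u_left=700.0, u_right=600.0, v=480.0,
                                  focal_px=1000.0, baseline_m=0.1,
                                  cx=640.0, cy=480.0)
    print(point)
    print(agrees_with_tof(point[2], tof_z_m=1.02, tol_m=0.05))
```

Triangulating three or more non-collinear fastener points in this way gives enough information to estimate the orientation of the lid as well as its position, which is what a motion planner would need as input.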
