IMAGE PROCESSING: FPGAs enable real-time atmospheric compensation

In theory, the ability of an optical telescope to resolve distant objects is limited by the diameter of its primary mirror; in practice, the random nature of atmospheric turbulence plays a more important role. To compensate for this atmospheric disturbance, American astronomer David Fried developed a technique known as speckle imaging to increase the resolution of images captured by ground-based telescopes.
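
The gap between the two limits can be put into numbers. The Python sketch below compares the Rayleigh diffraction limit, 1.22λ/D, with the approximate turbulence-limited ("seeing") resolution, roughly λ/r0, where r0 is the Fried parameter, the coherence diameter of the turbulent atmosphere. The wavelength (550 nm), mirror diameter (1 m), and r0 (10 cm) are illustrative assumptions, not figures from the article.

```python
import math

ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0


def diffraction_limit_rad(wavelength_m: float, aperture_m: float) -> float:
    """Rayleigh criterion: smallest resolvable angle for a circular aperture."""
    return 1.22 * wavelength_m / aperture_m


def seeing_limit_rad(wavelength_m: float, r0_m: float) -> float:
    """Approximate long-exposure resolution imposed by turbulence (about lambda / r0)."""
    return wavelength_m / r0_m


if __name__ == "__main__":
    # Assumed, illustrative values only.
    wavelength = 550e-9  # green light, metres
    aperture = 1.0       # primary mirror diameter, metres
    r0 = 0.10            # Fried parameter, metres
    d = diffraction_limit_rad(wavelength, aperture) * ARCSEC_PER_RAD
    s = seeing_limit_rad(wavelength, r0) * ARCSEC_PER_RAD
    print(f"diffraction limit: {d:.3f} arcsec")
    print(f"seeing limit:      {s:.3f} arcsec")
```

With these assumed values the turbulence blur is about eight times the diffraction limit (the ratio is D / (1.22 r0)), which is the gap that speckle imaging attempts to close.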

Recently, Carmen Carrano, a leader in Atmospherics at Lawrence Livermore National Laboratory (Livermore, CA, USA; www.llnl.gov), adapted the technique for horizontal and slant-path applications. In her paper “Speckle imaging over horizontal paths” [Proc. SPIE, Vol. 4825, 109 (2002)], Carrano describes a bi-spectrum speckle imaging technique that obtains a diffraction-limited estimate of an object from a time series of short-exposure images.

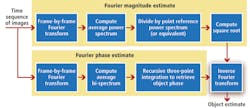

To accomplish this, the Fourier transform of each short-exposure frame is used to compute its power spectrum and bi-spectrum, which carry information about the amplitude and phase of the signal, respectively. Averaging the amplitude and phase information over all frames, recombining them, and computing an inverse FFT then yields a corrected image (see Fig. 1).
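
These steps can be illustrated with a deliberately simplified, one-dimensional Python sketch. It is not Carrano's or EM Photonics' code: it uses a naive DFT in place of an FFT, models turbulence only as random circular shifts of a hypothetical test signal, keeps just the B(1, k) slice of the bi-spectrum, and omits the amplitude calibration a real system needs. The averaged power spectrum supplies the Fourier amplitudes, and the phases are rebuilt recursively from the bi-spectrum phase using arg B(1, k) = φ(1) + φ(k) - φ(k + 1), so each phase depends on the one before it.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) discrete Fourier transform (a stand-in for an FFT)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def average_spectra(frames):
    """Mean power spectrum |F(k)|^2 and mean bi-spectrum slice B(1, k) over all frames."""
    n, m = len(frames[0]), len(frames)
    power = [0.0] * n
    bisp = [0j] * n
    for frame in frames:
        F = dft(frame)
        for k in range(n):
            power[k] += abs(F[k]) ** 2 / m
            # B(1, k) = F(1) F(k) F*(k + 1): any shift of the frame cancels out here.
            bisp[k] += F[1] * F[k] * F[(k + 1) % n].conjugate() / m
    return power, bisp


def recover_phase(bisp):
    """Recursively rebuild Fourier phases from the bi-spectrum phase."""
    n = len(bisp)
    assert n % 2 == 1, "this sketch assumes an odd number of samples"
    # phase[0] = 0 (positive mean); phase[1] = 0 fixes the arbitrary object position.
    phase = [0.0] * n
    for k in range(1, n // 2):
        # arg B(1, k) = phi(1) + phi(k) - phi(k + 1), solved for phi(k + 1).
        phase[k + 1] = phase[1] + phase[k] - cmath.phase(bisp[k])
    for k in range(1, n // 2 + 1):
        phase[n - k] = -phase[k]  # Hermitian symmetry of a real-valued object
    return phase


def speckle_reconstruct(frames):
    """Combine averaged amplitudes with recovered phases, then inverse-transform."""
    power, bisp = average_spectra(frames)
    phase = recover_phase(bisp)
    estimate = [math.sqrt(p) * cmath.exp(1j * ph) for p, ph in zip(power, phase)]
    return [v.real for v in idft(estimate)]


if __name__ == "__main__":
    obj = [0, 0, 1, 3, 2, 0, 0, 0, 0, 5, 1, 0, 0, 0, 0]  # hypothetical 1-D object
    # Toy "turbulence": each frame is the object circularly shifted by some amount.
    frames = [obj[-s:] + obj[:-s] for s in (0, 3, 7, 11)]
    print([round(v, 2) for v in speckle_reconstruct(frames)])
```

Because shifts cancel in the bi-spectrum, the recovered phases match the object's true phases up to a linear term, so the output is the object up to a translation (possibly by a fraction of a sample); simply averaging the shifted frames would smear it instead.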

However, when the algorithm is applied to earth-bound imaging, the point-spread function varies across the field of view. To accommodate this, overlapping subregions of the image are processed separately and then reassembled to form the final image. The result is a single corrected image with quality near the diffraction limit (see Fig. 2).
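
The subregion approach can be sketched as follows. The article does not specify tile size, overlap, or how tiles are blended, so the triangular weighting, the 8-pixel tiles with 4-pixel overlap in the example, and the per-tile function `fn` (a hypothetical placeholder for a per-tile speckle reconstruction) are all assumptions. Each output pixel is the weighted average of every tile that covers it, which softens seams between tiles.

```python
def tile_starts(length, tile, step):
    """Start offsets of tiles of size `tile` that cover [0, length) with the given step."""
    assert length >= tile >= step > 0
    starts = list(range(0, length - tile + 1, step))
    if starts[-1] + tile < length:
        starts.append(length - tile)  # last tile flush with the image edge
    return starts


def process_in_tiles(image, tile, overlap, fn):
    """Run fn on overlapping square tiles and blend the results with triangular weights."""
    rows, cols = len(image), len(image[0])
    step = tile - overlap
    # Hypothetical weighting: peaks at the tile centre, stays positive at the edges.
    w = [min(i + 1, tile - i) for i in range(tile)]
    acc = [[0.0] * cols for _ in range(rows)]
    norm = [[0.0] * cols for _ in range(rows)]
    for top in tile_starts(rows, tile, step):
        for left in tile_starts(cols, tile, step):
            patch = [row[left:left + tile] for row in image[top:top + tile]]
            out = fn(patch)  # placeholder for a per-tile reconstruction
            for i in range(tile):
                for j in range(tile):
                    weight = w[i] * w[j]
                    acc[top + i][left + j] += weight * out[i][j]
                    norm[top + i][left + j] += weight
    # Every pixel is covered by at least one tile, so norm is never zero.
    return [[a / n for a, n in zip(a_row, n_row)] for a_row, n_row in zip(acc, norm)]


if __name__ == "__main__":
    img = [[(r * 7 + c * 3) % 11 for c in range(20)] for r in range(13)]
    out = process_in_tiles(img, tile=8, overlap=4, fn=lambda p: p)
    print(max(abs(a - b) for ra, rb in zip(out, img) for a, b in zip(ra, rb)))
```

Passing an identity function returns the input unchanged, a quick check that the blending weights are normalized correctly.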

“Implementing this technique on a personal computer,” says Michael Bodnar, senior engineer at EM Photonics (Newark, DE, USA; www.emphotonics.com), “requires several seconds to analyze a single frame.” For this reason, although EM Photonics offers its atmospheric compensation software (ATCOM) in an entry-level version for PCs, it also offers an accelerated version based on an FPGA module from Alpha Data (San Jose, CA, USA; www.alpha-data.com).

While the software-only version processes 720p HD images at 1 frame/s, running the algorithm on an Nvidia-based system increases processing throughput tenfold. The FPGA implementation raises this further, to 60 frames/s.
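
These throughput figures translate directly into per-frame time budgets. The short calculation below assumes that 720p means 1280 × 720 pixels and takes the Nvidia-based rate as 10 frames/s (tenfold the software rate); the function names are illustrative.

```python
def frame_budget_ms(frames_per_s: float) -> float:
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / frames_per_s


def pixel_rate(width: int, height: int, frames_per_s: int) -> int:
    """Number of pixels that must be processed per second."""
    return width * height * frames_per_s


if __name__ == "__main__":
    WIDTH, HEIGHT = 1280, 720  # assumed 720p frame size
    for label, fps in [("software only", 1), ("Nvidia-based", 10), ("FPGA", 60)]:
        print(f"{label:14s} {fps:3d} frames/s: "
              f"{frame_budget_ms(fps):7.1f} ms/frame, "
              f"{pixel_rate(WIDTH, HEIGHT, fps):,} pixels/s")
```

At 60 frames/s the system has roughly 16.7 ms per frame and must handle about 55 million pixels per second.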

“The complexity of speckle processing can be attributed to both the volume of floating-point computations required to perform the FFT and bi-spectral analysis and the large memory bandwidth requirement needed,” says Bodnar. Indeed, profiling the software algorithm showed that the bi-spectrum calculation accounted for 80% of overall serial execution time in a microprocessor-based implementation. “This bottleneck was mainly due to the recursive dataflow inherent to the bi-spectrum calculation and the tens of millions of floating-point values generated,” explains Bodnar.
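
The 80% figure also bounds what accelerating the bi-spectrum calculation alone could achieve. Amdahl's law gives the overall speedup S = 1 / ((1 - p) + p / s) when a fraction p of the runtime is sped up by a factor s. A minimal Python sketch (the function name is illustrative):

```python
def amdahl_speedup(accelerated_fraction: float, stage_speedup: float) -> float:
    """Overall speedup when only part of the runtime is accelerated (Amdahl's law)."""
    remaining = 1.0 - accelerated_fraction
    return 1.0 / (remaining + accelerated_fraction / stage_speedup)


if __name__ == "__main__":
    p = 0.8  # bi-spectrum share of serial runtime, from the profiling result
    for s in (2, 10, 100, 1e9):
        print(f"bi-spectrum {s:g}x faster -> overall {amdahl_speedup(p, s):.2f}x")
```

With p = 0.8, even an infinitely fast bi-spectrum stage yields at most a fivefold overall gain, so going from 1 frame/s to 60 frames/s also requires accelerating the remaining 20% of the work, such as the FFTs.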

To handle this dataset, pixel data are first converted from the spatial domain into the frequency domain by a hardware FFT. In the original microprocessor code, FFTs were computed tile by tile in single-precision floating-point format. In EM Photonics’ implementation, data are transferred in rows of pixels rather than tiles, and two separate hardware blocks calculate the horizontal and vertical FFTs, eliminating the need to buffer entire input frames.
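
Splitting the transform into horizontal and vertical blocks works because the 2-D discrete Fourier transform is separable: transforming every row and then every column of the intermediate result gives the same answer as the full 2-D transform. The Python sketch below checks this with naive DFTs on a small hypothetical array; it illustrates only the mathematics, not EM Photonics' streaming hardware design.

```python
import cmath
import math


def dft_1d(x):
    """Naive 1-D DFT."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def dft_2d_direct(img):
    """Full 2-D DFT evaluated directly from its definition."""
    rows, cols = len(img), len(img[0])
    return [[sum(img[r][c] * cmath.exp(-2j * math.pi * (u * r / rows + v * c / cols))
                 for r in range(rows) for c in range(cols))
             for v in range(cols)] for u in range(rows)]


def dft_2d_row_column(img):
    """2-D DFT as a horizontal pass over rows followed by a vertical pass over columns."""
    row_pass = [dft_1d(row) for row in img]            # horizontal transforms
    cols = len(row_pass[0])
    col_pass = [dft_1d([row[v] for row in row_pass])   # vertical transforms
                for v in range(cols)]
    # col_pass is indexed [v][u]; transpose back to [u][v].
    return [[col_pass[v][u] for v in range(cols)] for u in range(len(img))]


if __name__ == "__main__":
    img = [[1, 2, 0, 3], [0, 1, 4, 1], [2, 2, 1, 0]]
    a, b = dft_2d_direct(img), dft_2d_row_column(img)
    print(max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)))
```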

To perform these calculations in real time, EM Photonics’ embedded system uses a 64-bit PCI mezzanine board from Alpha Data that is built around a Xilinx Virtex-5 LX330T FPGA and was developed specifically for image processing. The FPGA board is hosted on a PMC carrier board that supports modular video daughter boards, which connect directly to the FPGA processing board.

Currently, the speckle processing engine supports daughter boards for analog and digital video interfaces, including NTSC, VGA, DVI, SDI, and HD-SDI. It also handles a variety of video formats, including NTSC 480p/60, 720p/30, and 720p/60, each with up to 10-bit grayscale quantization.