PRODUCT FOCUS - Vision-Guided Robots Find a Role in Food Production

Vision-guided robotic systems are automating every aspect of meat production, from slaughtering to packaging

Andrew Wilson, Editor

Meat production has traditionally been a manual process requiring skilled labor to perform repetitive, often hazardous, tasks. Because slaughtering, cutting, sizing, and packaging have required these specialized skills, the process of automation has lagged behind other commercial food automation processes such as bottling and canning beverages and vegetables.

According to the market research report "Vision for Service Robots" (A. Shafi and C. Holton, PennWell Corp., 2011), only 3% of total food-processing equipment employed robotic systems in 2009; it is only quite recently that, due to increased market competition, operational costs, and safety requirements, vision-guided robots have been used to automate meat production processes. Today, automation systems are being deployed at numerous stages in the meat production process such as evisceration, carcass splitting, deboning, trimming, slicing, weighing, and packing.

Internal affairs

One of the first processes to be automated is evisceration, the removal of the internal organs of animals such as cows or pigs. In the early stages of meat processing, hock or hoof cutting has typically been performed manually, with a worker snipping the hocks from the carcass using a hydraulically or pneumatically powered cutting tool.

To perform this task automatically, Jarvis Products has developed a vision-guided robotic system dubbed the JR-50 (see "Automated Meat Processing," Vision Systems Design, May 2008). The company mounted a Jarvis 30CL cutter on a six-axis robotic arm from the Motoman Robotics Division of Yaskawa America; it is guided by a DeepSea G2 embedded 3-D vision system from TYZX.

Beef carcasses hung from chain-driven suspended hooks enter the hock-cutting station. Once regions within the operating range of the cutter are determined, the system locates and tracks potential hocks based on their size and shape. The x, y, and z coordinates of each hock are sent to the robot controller, which updates the robot arm path as it moves the cutter to the hock (see Fig. 1).
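The closed-loop behavior described above, in which fresh hock coordinates keep updating the arm path while the carcass moves, can be illustrated with a minimal sketch. It is not the Jarvis or Motoman implementation: the function names, the constant chain velocity, the control period, and the straight-line motion model are all assumptions made for illustration.

```python
import math

def step_toward(tool, target, max_step):
    """Move the tool toward the target by at most max_step (same units as the coordinates)."""
    dist = math.dist(tool, target)
    if dist <= max_step:
        return tuple(target)
    scale = max_step / dist
    return tuple(p + (t - p) * scale for p, t in zip(tool, target))

def track_hock(tool, hock, chain_velocity, tool_speed, dt,
               tolerance=1.0, max_cycles=1000):
    """Re-aim at the moving hock on every control cycle.

    tool, hock      -- (x, y, z) positions in mm (hypothetical values)
    chain_velocity  -- (vx, vy, vz) of the carcass in mm/s (assumed constant)
    tool_speed      -- maximum tool speed in mm/s
    dt              -- control period in seconds
    Returns (cycles, final_tool_position), or None if the hock is never reached.
    """
    for cycle in range(1, max_cycles + 1):
        # Stand-in for the vision system reporting an updated hock position.
        hock = tuple(h + v * dt for h, v in zip(hock, chain_velocity))
        # The controller re-plans toward the latest reported coordinates.
        tool = step_toward(tool, hock, tool_speed * dt)
        if math.dist(tool, hock) <= tolerance:
            return cycle, tool
    return None

if __name__ == "__main__":
    result = track_hock((0.0, 0.0, 0.0), (1000.0, 0.0, 500.0),
                        (100.0, 0.0, 0.0), tool_speed=1000.0, dt=0.02)
    print(result)
```

Because the target is re-read on every cycle, the tool converges on the hock even though the carcass keeps moving, provided the tool is faster than the chain.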

After hock removal, the carcass is ready for further processing such as rectum removal, a task known as debunging. In 2010, MoviMED demonstrated a 3-D vision-guided robotic system for debunging pig carcasses (see "Pork Process," Vision Systems Design, March 2010). Pig carcasses are hung upside down and moved along a conveyor to a debunging station, where a laser-based 3-D camera from Automation Technology is mounted on an M-710i industrial robot from Fanuc Robotics. After the rear of the carcass is scanned, point-cloud data of the buttocks and rectum are used to locate the rectum. Once this location is established, a robot equipped with a pneumatic vacuum saw cuts and extracts the colon before the meat is further butchered.

Fully integrated systems have also been deployed at the Coesfeld plant of Westfleisch, where four robots from Kuka Robotics are used to remove the rectum and open the pelvic bone and belly of pig carcasses. Protected against moisture, contamination, and cleaning agents by hygienic protective suits, the two KR 30 and two KR 60 robots are integrated with a 3-D laser scanner and PC software package from Banss Schlacht und Fördertechnik.

After the laser scanner generates 3-D data of the carcass's surface, the PC-based software generates individual cutting data for each carcass. The first robot, a KR 30, then cuts the front hocks and a second suspended KR 30 removes the rectum. Following a second 3-D laser measurement, a KR 60 breaks the pelvic bone with a cutting tool and scores the abdominal wall. Then, a second KR 60 opens the belly and chest of the carcass with a circular cutter. After each cut, the tools are disinfected in 82°C water.

A separate piece

To separate parts of the carcass for further processing, consistent, accurate cuts must then be made to ensure that the maximum yield is achieved from each carcass. To accomplish this, Machinery Automation & Robotics (MAR), in collaboration with Meat and Livestock Australia, has developed a system known as BeefScriber that uses a tungsten-tip circular saw blade interfaced to an industrial robot. Integrated vision and 3-D laser profiling sensors in the system relay scanned information and set cutting profiles. After portions of meat are segregated, they can be further processed depending on the needs of the customer.

In some instances, portions of meat may need to be deboned and sliced before they can be packaged. Here, the Hamdas-R pork thigh-deboning system from Mayekawa Electric, which recognizes the size and shape of bones, has successfully automated the deboning process. By integrating an x-ray recognition system with four RX160 robots from Stäubli, the system is capable of deboning 500 hams per hour with only 10 workers. According to the company, this task previously required 20 workers to yield the same amount of product.

Mayekawa Electric is not the only company to have deployed x-ray-based robotic vision systems for this purpose. Scott Technology has also developed such a system for lamb deboning (see "X-ray Imaging Delivers a Better Cut," Vision Systems Design, July 2011). After carcasses are x-rayed using an NDI-225 fan-beam x-ray tube from Varian Medical Systems, images are detected using an x-ray detector from Sens-Tech interfaced to a PC. Because the carcass passes twice between the x-ray tube and detector at different angles, the two different images allow a 3-D map of the lamb's skeleton to be produced. After images are processed using the Halcon tool from MVTec Software, critical ribs and structures are identified and used to control a custom cutting machine that divides the meat into 10 different cuts.
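The idea that two views at different angles fix a position in space can be shown with a simplified sketch. It assumes idealized parallel-beam projections rather than the fan-beam geometry of the actual system, and the function names and angles are hypothetical. In each view a feature at (x, y) in the cross-section plane lands on the detector at u = x cos(a) + y sin(a), so two views give two linear equations in two unknowns. The third coordinate, along the direction of travel, is common to both views.

```python
import math

def project(x, y, angle_deg):
    """Detector coordinate of point (x, y) in an idealized parallel-beam view."""
    a = math.radians(angle_deg)
    return x * math.cos(a) + y * math.sin(a)

def locate_from_two_views(u1, angle1_deg, u2, angle2_deg):
    """Recover (x, y) from the detector coordinates seen in two views.

    Solves   cos(a1)*x + sin(a1)*y = u1
             cos(a2)*x + sin(a2)*y = u2
    by Cramer's rule. The views must not be parallel.
    """
    a1, a2 = math.radians(angle1_deg), math.radians(angle2_deg)
    c1, s1 = math.cos(a1), math.sin(a1)
    c2, s2 = math.cos(a2), math.sin(a2)
    det = c1 * s2 - s1 * c2  # equals sin(a2 - a1)
    if abs(det) < 1e-9:
        raise ValueError("the two views must be taken at different angles")
    x = (u1 * s2 - s1 * u2) / det
    y = (c1 * u2 - c2 * u1) / det
    return x, y

if __name__ == "__main__":
    # Hypothetical rib feature at (30, -12) mm, viewed at 0 and 45 degrees.
    u_a = project(30.0, -12.0, 0.0)
    u_b = project(30.0, -12.0, 45.0)
    print(locate_from_two_views(u_a, 0.0, u_b, 45.0))
```

A single view leaves the depth along the beam undetermined, which is why the second pass at a different angle is needed.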

The whole package

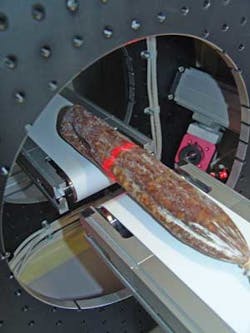

After meat is cut and deboned, it is then sliced, packaged, and shipped to the customer. Here again, vision-guided robotics are speeding up these processes. To ensure that portions of meat are accurately portioned and cut, manufacturers of food slicing and packaging equipment are incorporating volumetric scanning systems into their products. Two years ago, ImagingLab showed a prototype 3-D scanner that it had developed with Aqsense to perform just this task (see "Laser triangulation system measures food products," Vision Systems Design, January 2010).

To create a 3-D model, structured light is used to illuminate the product as it traverses the scanner's conveyor belt. By employing three ZM-18 laser-line emitters from Z-Laser Optoelektronik positioned at equal 120° angles around the gantry, the complete 360° surface of the meat is illuminated (see Fig. 2).

Light reflected from the surface of the meat is captured by three MV-D1024E-3D01-160-CL Camera Link cameras from Photonfocus, also positioned at equal 120° angles around the gantry. Digital output from these cameras is then transferred to a host PC using three NI-PCIe-1427 Camera Link frame grabbers from National Instruments. The point-cloud images from the three cameras are then merged to generate the 3-D image. The data are then used by the slicing system to cut portions of the food at a constant volume and, therefore, constant weight.
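How a 3-D scan translates into constant-volume portions can be sketched as follows. This is an illustrative approximation, not the ImagingLab or Aqsense algorithm: it assumes the merged scan has already been reduced to a cross-sectional area at each position along the conveyor, integrates those areas with the trapezoid rule, and places a cut wherever the running volume reaches the next multiple of the target portion. The function name, the units, and the linear interpolation inside each scan interval are assumptions. Constant volume implies constant weight only if the product's density is uniform.

```python
def constant_volume_cuts(positions, areas, portion_volume):
    """Return cut positions that yield portions of equal volume.

    positions       -- ascending scan positions along the conveyor (mm)
    areas           -- cross-sectional area measured at each position (mm^2)
    portion_volume  -- target volume of each portion (mm^3)
    """
    if len(positions) != len(areas):
        raise ValueError("positions and areas must have the same length")
    if portion_volume <= 0:
        raise ValueError("portion_volume must be positive")

    cuts = []
    cumulative = 0.0              # volume accumulated before the current interval
    next_target = portion_volume  # cumulative volume at which the next cut falls
    for i in range(1, len(positions)):
        x0, x1 = positions[i - 1], positions[i]
        # Trapezoid-rule volume of the slab between two adjacent profiles.
        segment = 0.5 * (areas[i - 1] + areas[i]) * (x1 - x0)
        while segment > 0 and cumulative + segment >= next_target:
            # Approximation: volume is treated as growing linearly inside the slab.
            fraction = (next_target - cumulative) / segment
            cuts.append(x0 + fraction * (x1 - x0))
            next_target += portion_volume
        cumulative += segment
    return cuts

if __name__ == "__main__":
    # Hypothetical product: uniform 100 mm^2 section, 100 mm long, scanned every 10 mm.
    xs = [10.0 * i for i in range(11)]
    print(constant_volume_cuts(xs, [100.0] * 11, 2500.0))
```

For a product that tapers, the same routine spaces the cuts farther apart where the cross-section is smaller, which is the behavior a fixed-thickness slicer cannot provide.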

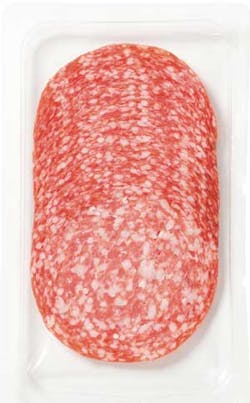

While such systems are being used to optimize the slicing of meat cuts, other vision-guided robots are used to pack the finished meat products for customer shipment. At Charkman Group (Borås, Sweden), for example, a fully automatic line is already in operation that slices and packs high volumes of salami, ham, turkey, rolled pork, and other cooked meats (see Fig. 3). At the heart of the line is an intelligent portion loading (IPL) system built around an IRB 340 FlexPicker robot from ABB that is guided by vision software from Cognex. Capable of handling up to 150 picks per minute, Charkman's system can slice a variety of products and be programmed to handle 26 pack variations and sizes.

Just as the adoption of vision-guided robots has nearly fully automated the production of automobiles, so too will the use of these systems have a significant impact on meat production processes. For meat producers this will reduce the skilled manual labor required, decrease costs, and increase throughput, which should positively affect their bottom line.

Company Info

ABB

Auburn Hills, MI, USA

www.abb.com

Aqsense

Girona, Spain

www.aqsense.com

Automation Technology

Bad Oldesloe, Germany

www.automationtechnology.de

Banss Schlacht und Fördertechnik

Biedenkopf, Germany

www.banss.de

Cognex

Natick, MA, USA

www.cognex.com

Fanuc Robotics

Rochester Hills, MI, USA

www.fanucrobotics.com

ImagingLab

Lodi, Italy

www.imaginglab.it

Jarvis Products

Middletown, CT, USA

www.jarvisproducts.com

Kuka Robotics

Augsburg, Germany

www.kuka-ag.de

Machinery Automation & Robotics

Silverwater, Australia

www.machineryautomation.com.au

Mayekawa Electric

Tokyo, Japan

www.mayekawa.com

Meat and Livestock Australia

North Sydney, Australia

www.mla.com.au

Motoman Robotics Division, Yaskawa America

Miamisburg, OH, USA

www.motoman.com

MoviMED

Irvine, CA, USA

www.movimed.com

MVTec Software

Munich, Germany

www.mvtec.com

National Instruments

Austin, TX, USA

www.ni.com

Photonfocus

Lachen, Switzerland

www.photonfocus.com

Scott Technology

Dunedin, New Zealand

www.scott.co.nz

Sens-Tech

Langley, UK

www.sens-tech.com

Stäubli

Pfäffikon, Switzerland

www.staubli.com

TYZX

Menlo Park, CA, USA

www.tyzx.com

Varian Medical Systems

Palo Alto, CA, USA

www.varian.com

Westfleisch

Münster, Germany

www.westfleisch.de

Z-Laser Optoelektronik

Freiburg, Germany

www.z-laser.com