IMAGING SOFTWARE: GPU toolkit speeds MATLAB development

For more than a decade, the MATLAB computing language from The MathWorks (Natick, MA, USA) has provided researchers with numerous tools for rapid prototyping of data analysis, numeric computation, and image-processing tasks. The company’s Image Processing Toolbox provides developers with a number of functions for tasks such as image enhancement, image deblurring, feature detection, noise reduction, image segmentation, spatial transformations, and image compression.

Many of these tasks are computationally intensive. Even a simple operation such as convolving a relatively large image with a small kernel can take seconds. To speed up such code without requiring programmers to leave MATLAB, AccelerEyes (Atlanta, GA, USA) has developed Jacket for MATLAB, a GPU toolkit that allows M-code developers to port their code to CUDA and run it on any Tesla, Quadro, or GeForce graphics card from Nvidia (Santa Clara, CA, USA).
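A naive 3 × 3 convolution shows why small-kernel filtering is slow in interpreted code but well suited to a GPU. The sketch below is Python, chosen only for illustration. It is not MATLAB's conv2 or Jacket code, and the function name `convolve3x3` and the zero-padded border handling are assumptions. Each output pixel depends only on its own 3 × 3 neighborhood, so every pixel can be computed independently. This is the kind of data parallelism that a GPU exploits.

```python
def convolve3x3(image, kernel):
    """Naive same-size 2-D convolution with a 3x3 kernel (illustrative sketch).

    image: list of rows of numbers; kernel: 3x3 list of lists.
    Pixels outside the image are treated as zero (an assumed border rule).
    Each output pixel is computed independently of all the others.
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i in range(-1, 2):
                for j in range(-1, 2):
                    rr, cc = r + i, c + j
                    if 0 <= rr < rows and 0 <= cc < cols:
                        # true convolution: the kernel is flipped in both axes
                        acc += image[rr][cc] * kernel[1 - i][1 - j]
            out[r][c] = acc
    return out


box = [[1 / 9] * 3 for _ in range(3)]  # 3x3 averaging (box) kernel
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
smoothed = convolve3x3(image, box)
```

For a 915 × 915-pixel image, the innermost statement runs 915 × 915 × 9 = 7,535,025 times. An interpreted loop handles these one after another, whereas a GPU can assign pixels to thousands of threads.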

Developers need no knowledge of Nvidia's Compute Unified Device Architecture (CUDA) parallel computing architecture or of programming languages such as C for CUDA. They can still run their MATLAB code efficiently on Nvidia's graphics processors.

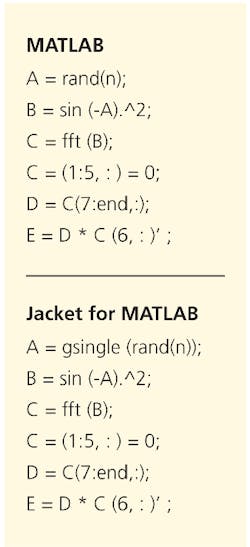

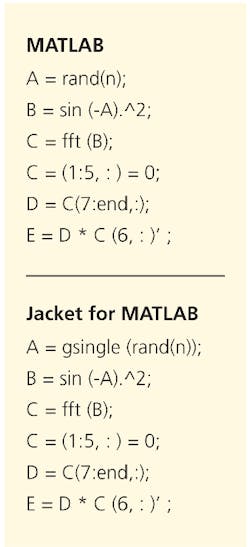

As a simple example, the top part of the figure shows a MATLAB program that generates random numbers and performs simple arithmetic, a Fourier transform, subscript assignment and referencing, and a matrix multiply. The bottom part shows the same program written for Jacket. The random-number data is first tagged as single-precision GPU data. Without any additional code, the complete program then runs on the GPU. According to James Malcolm, vice president of engineering at AccelerEyes, this program would take hundreds of lines of code if written in raw CUDA.
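Operator overloading can illustrate how data can be tagged once while the rest of the program stays unchanged. The Python class `DeviceArray` and the function `process` below are hypothetical and do not reproduce Jacket's implementation. The "device" arithmetic here is ordinary Python. Every operation on a tagged value returns another tagged value, so arithmetic, subscript assignment, and subscript referencing all remain on the same execution path.

```python
class DeviceArray:
    """Hypothetical 'tagged' 1-D array illustrating type-based dispatch.

    Arithmetic and slicing on a DeviceArray return DeviceArray results, so
    once the input is tagged, later operations stay on the same path.
    The work here is plain Python; a GPU toolkit would launch kernels instead.
    """

    def __init__(self, values):
        self.values = [float(v) for v in values]

    def _elementwise(self, other, op):
        if isinstance(other, DeviceArray):
            rhs = other.values
        else:  # broadcast a scalar operand
            rhs = [other] * len(self.values)
        return DeviceArray(op(a, b) for a, b in zip(self.values, rhs))

    def __add__(self, other):
        return self._elementwise(other, lambda a, b: a + b)

    __radd__ = __add__  # addition is commutative

    def __mul__(self, other):
        return self._elementwise(other, lambda a, b: a * b)

    __rmul__ = __mul__  # multiplication is commutative

    def __getitem__(self, index):
        # slices stay tagged; a single element comes back as a plain number
        if isinstance(index, slice):
            return DeviceArray(self.values[index])
        return self.values[index]

    def __setitem__(self, index, value):
        self.values[index] = float(value)


def process(x):
    """Unchanged 'user code': arithmetic, subscript assignment, referencing."""
    y = 2 * x + 1   # elementwise arithmetic
    y[0] = 0        # subscript assignment
    return y[1:3]   # subscript referencing (a slice)


result = process(DeviceArray([1, 2, 3, 4]))
print(type(result).__name__, result.values)  # DeviceArray [5.0, 7.0]
```

`process` contains no device-specific code. The type of its input alone determines where the work is done.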

Developers who want to integrate their own C or C++ code into such programs can use MATLAB MEX files to call C or C++ routines directly from MATLAB as if they were built-in functions. As with the example in the figure, the MEX files and MATLAB code can be quickly adapted to run on the GPU using AccelerEyes' libJacket for C/C++.

At the SPIE Defense, Security + Sensing show in Orlando, FL, in April 2011, Bill Stott, sales manager at AccelerEyes, demonstrated Jacket for MATLAB on an image-processing task. A 3 × 3 convolution of a 915 × 915-pixel, 8-bit image took approximately 1.5 s on a 3.16-GHz dual-core Intel processor. Run with Jacket for MATLAB on a Tesla C2050 GPU operating at 1.5 GHz, the same convolution took approximately 47 ms, roughly 32 times faster. According to Stott, the company has also benchmarked 3 × 3 convolutions on 640 × 480-pixel, 8-bit images at 30 frames/s.
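The quoted figures can be checked with a little arithmetic. The sketch below assumes one multiply-accumulate (MAC) per kernel tap per output pixel and ignores border handling and host-to-GPU transfer time. The resulting rates are therefore effective throughputs for this demonstration, not hardware limits. The helper names are illustrative.

```python
def speedup(cpu_seconds, gpu_seconds):
    """Ratio of CPU run time to GPU run time for the same operation."""
    return cpu_seconds / gpu_seconds


def macs_per_frame(width, height, kernel_size):
    """Multiply-accumulates for one dense 2-D convolution (borders ignored)."""
    return width * height * kernel_size ** 2


# Figures quoted in the demonstration: 915 x 915 image, 3 x 3 kernel.
frame = macs_per_frame(915, 915, 3)
print(f"speedup: {speedup(1.5, 0.047):.1f}x")       # speedup: 31.9x
print(f"{frame:,} MACs per frame")                   # 7,535,025 MACs per frame
print(f"CPU: {frame / 1.5 / 1e6:.1f} M MAC/s")       # CPU: 5.0 M MAC/s
print(f"GPU: {frame / 0.047 / 1e6:.1f} M MAC/s")     # GPU: 160.3 M MAC/s

# 640 x 480 frames at 30 frames/s
print(f"{macs_per_frame(640, 480, 3) * 30 / 1e6:.1f} M MAC/s")  # 82.9 M MAC/s
```

By this estimate, the 30-frames/s result on 640 × 480 images needs about half the effective rate achieved in the 915 × 915 demonstration.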

To date, a number of organizations have used Jacket for MATLAB in their projects. At the Indian Institute of Technology (Roorkee, India), for example, Jaideep Singh and his colleagues have shown how the two-dimensional discrete wavelet transform (DWT) can be used to compress medical images on CUDA-capable GPUs. They accelerated the image-compression algorithm by writing two functions, wavedec2GPU and wdencmpGPU, that mimic the corresponding MATLAB routines but execute on the GPU.
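The basic steps of DWT compression are: decompose the image, discard small detail coefficients, then reconstruct. The sketch below uses the 2-D Haar wavelet in its simple averaging/differencing form because it is the easiest to follow. The source does not state which wavelet the Roorkee group used, and this is not their wavedec2GPU or wdencmpGPU code. The function names and the hard-threshold rule are assumptions for illustration.

```python
def haar_dwt2(image):
    """One level of a 2-D Haar transform (averaging/differencing variant).

    image: list of rows with even height and width. Returns the approximation
    band and the horizontal, vertical and diagonal detail bands, each half
    the input size in both dimensions.
    """
    approx, horiz, vert, diag = [], [], [], []
    for r in range(0, len(image), 2):
        rows = ([], [], [], [])
        for c in range(0, len(image[0]), 2):
            a, b = image[r][c], image[r][c + 1]          # top-left, top-right
            d, e = image[r + 1][c], image[r + 1][c + 1]  # bottom-left, bottom-right
            rows[0].append((a + b + d + e) / 4)  # local average
            rows[1].append((a + b - d - e) / 4)  # top minus bottom: horizontal edges
            rows[2].append((a - b + d - e) / 4)  # left minus right: vertical edges
            rows[3].append((a - b - d + e) / 4)  # diagonal detail
        for band, row in zip((approx, horiz, vert, diag), rows):
            band.append(row)
    return approx, horiz, vert, diag


def haar_idwt2(approx, horiz, vert, diag):
    """Exact inverse of haar_dwt2."""
    out = []
    for r in range(len(approx)):
        top, bottom = [], []
        for c in range(len(approx[0])):
            A, H, V, D = approx[r][c], horiz[r][c], vert[r][c], diag[r][c]
            top += [A + H + V + D, A + H - V - D]
            bottom += [A - H + V - D, A - H - V + D]
        out += [top, bottom]
    return out


def hard_threshold(band, t):
    """Zero every coefficient whose magnitude is below t (the lossy step)."""
    return [[x if abs(x) >= t else 0.0 for x in row] for row in band]


def haar_decompose(image, levels):
    """Apply haar_dwt2 repeatedly to the approximation band (multi-level)."""
    details = []
    approx = image
    for _ in range(levels):
        approx, h, v, d = haar_dwt2(approx)
        details.append((h, v, d))
    return approx, details


A, H, V, D = haar_dwt2([[10, 12], [10, 12]])  # V holds the only nonzero detail
V = hard_threshold(V, 1.5)                    # the small detail is discarded
print(haar_idwt2(A, H, V, D))                 # [[11.0, 11.0], [11.0, 11.0]]
```

Compression comes from the zeroed coefficients, which can be stored far more compactly than the original pixels. Choosing more decomposition levels concentrates more of the image's energy in a smaller approximation band.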

Tests were performed on 512 × 512-pixel CT head-scan images at varying wavelet decomposition levels. The same algorithm was run on an Nvidia Tesla C1060 GPU and on a 2.5-GHz Intel Xeon CPU with 4 Gbytes of RAM, and Jacket increased processing speed by a factor of 38 over the CPU-based MATLAB code. Thus, says Singh, the 2-D DWT image-compression technique is suitable for real-time compression, transmission, and decompression, allowing the resulting data to be used for diagnostic purposes.
