Imager uses angle-sensitive pixels

Cornell University scientists (Ithaca, NY, USA) have developed a class of CMOS image sensor that, when combined with a standard camera lens, performs lightfield imaging without any additional off-chip optics.

Conventional digital cameras record light from three-dimensional scenes by capturing a 2-D map of light intensity on an imager. The new sensor, by contrast, directly records both the intensity of the light in a lightfield and its local incident angle.

The key to this new technique is the deployment of what the researchers term "angle-sensitive pixels" or ASPs. This approach uses local stacked diffraction gratings over each pixel to generate a strong, periodic sensitivity to incident angle. ASPs' gratings are built using only the metal layers already present in a standard CMOS process (normally designated as wires for carrying electrical signals between transistors), and so incur no added manufacturing cost over a standard CMOS image sensor.
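To make the idea of a periodic angle sensitivity concrete, the following is a minimal sketch rather than the researchers' actual model. It assumes an idealized sinusoidal pixel response and a group of four neighboring ASPs whose responses are offset in phase by a quarter period. The function names and the parameters `beta` (angular sensitivity), `m` (modulation depth), and `alpha` (phase offset) are hypothetical and chosen only for illustration.

```python
import math

def asp_response(theta, intensity, beta=12.0, alpha=0.0, m=0.5):
    """Idealized ASP output for light arriving at angle theta (radians).

    Assumed model: the output varies sinusoidally with incident angle.
    beta, alpha, and m are illustrative values, not measured ones.
    """
    return intensity * (1.0 + m * math.cos(beta * theta + alpha))

def estimate_angle_and_intensity(r0, r90, r180, r270, beta=12.0):
    """Recover angle and intensity from four ASPs with phase offsets
    of 0, pi/2, pi, and 3*pi/2 (an assumed pixel grouping)."""
    # The angle-dependent terms cancel when all four outputs are averaged.
    intensity = (r0 + r90 + r180 + r270) / 4.0
    # r0 - r180 is proportional to cos(beta*theta),
    # r270 - r90 is proportional to sin(beta*theta).
    theta = math.atan2(r270 - r90, r0 - r180) / beta
    return theta, intensity

# Simulate light of intensity 2.0 arriving at 0.1 rad, then recover both values.
phases = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
readings = [asp_response(0.1, 2.0, alpha=p) for p in phases]
theta_est, intensity_est = estimate_angle_and_intensity(*readings)
```

Because the assumed response is periodic, this recovery is unambiguous only for angles smaller in magnitude than `math.pi / beta`; larger angles wrap around to an equivalent value within that range.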

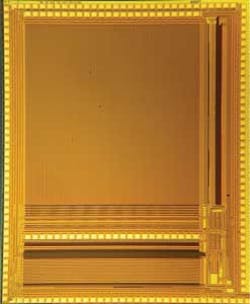

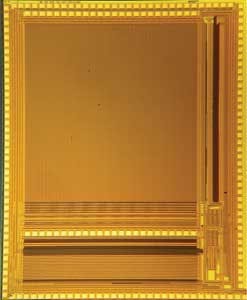

Fabricated in a 180-nm mixed-mode CMOS process, the sensor developed at Cornell sports a 400 × 384-pixel array that employs 7.5-μm ASPs, which use pairs of local diffraction gratings above a photodiode to detect incident angle.

To demonstrate the capabilities of the ASP-based image sensor, the researchers created a lightfield camera by placing the imager behind a commercial fixed-focus camera lens.

Compared to a traditional intensity-sensitive imager, the chip captures a much richer description of the light striking it. By post-processing the image data, the researchers determined the distance of objects from the imager and refocused out-of-focus parts of a scene. This approach may prove a useful alternative to time-of-flight imaging systems, structured light systems, or passive systems that use multiple cameras.
