3-D IMAGING: Multicamera system performs motion analysis

Analyzing human movement from image data has many applications ranging from motion picture production to medical analysis. In many systems used today, artificial markers are placed on the body and an image sequence captured. By interpreting the position of these markers as the subject moves, the kinematics of human motion can be captured, wire frames computed, and 3-D images reconstructed.

Since positioning these markers can be laborious and time-consuming, markerless approaches, though more computationally challenging, are more efficient and less expensive. To be effective, markerless motion-capture (MoCap) systems must determine the position, orientation, and joint angles of the human body from captured image data alone.

This is the approach taken by professor Bodo Rosenhahn and his colleagues at the Leibniz University of Hannover (Hannover, Germany). Rosenhahn enlisted the expertise of IMAGO Technologies (Friedberg, Germany) to develop a motion capture system that incorporates multiple visible and infrared (IR) cameras.

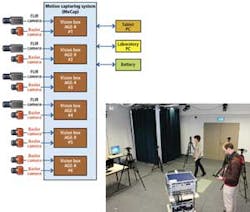

By capturing data in the visible and IR spectra, reconstructed 3-D image data can both model the human form and map the thermal energy emitted from the human body (see Fig. 1). In the system developed by IMAGO, eight Aviator avA1000-100gc Gigabit Ethernet cameras from Basler (Ahrensburg, Germany) running at 100 frames/s are used to capture visible information from the scene, while four VGA microbolometer-based A615 cameras from FLIR Systems (Wilsonville, OR, USA) running at 50 frames/s are used to capture the thermal data.

As images are captured, image data are transferred to six VisionBox AGE-X embedded machine-vision systems from IMAGO Technologies, each based on an Intel Atom processor and running Windows 7 Embedded. "In this application," says Carsten Strampe, president of IMAGO Technologies, "it was necessary to synchronize the cameras so that 3-D data construction could be accurately performed" (see Fig. 2).

The vertical sync signal from one IR camera was used as a master to synchronize the eight visible-light cameras. This synchronization was performed by a custom auxiliary board embedded into the cabinet of the final system. Motion analysis of the IR image data collected could then be used to map data from the three asynchronously free-running IR cameras onto the 3-D reconstructed images.
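The article does not detail how motion analysis was used to align the free-running IR cameras. One plausible reading is that the amount of frame-to-frame motion seen by each camera is compared to estimate a time offset. The following is a minimal sketch of that idea under stated assumptions: the function names `motion_energy` and `best_lag` are hypothetical, frames are flat lists of pixel values, and both sequences are assumed to share the same frame rate. It is not IMAGO's implementation.

```python
def motion_energy(frames):
    """Per-frame motion signal: sum of absolute pixel differences
    between each frame and the one before it.

    `frames` is a list of flat pixel lists (an assumption for brevity).
    """
    return [
        sum(abs(a - b) for a, b in zip(cur, prev))
        for prev, cur in zip(frames, frames[1:])
    ]


def best_lag(reference, other, max_lag):
    """Return the lag (in frames) for which other[i + lag] best matches
    reference[i], using the mean product over the overlapping samples."""

    def score(lag):
        pairs = [
            (reference[i], other[i + lag])
            for i in range(len(reference))
            if 0 <= i + lag < len(other)
        ]
        if not pairs:
            return float("-inf")
        return sum(r * o for r, o in pairs) / len(pairs)

    return max(range(-max_lag, max_lag + 1), key=score)


# Hypothetical motion signals: the second camera sees the same burst
# of movement two frames later than the synchronized reference camera.
reference_signal = [0, 0, 5, 9, 5, 0, 0, 0]
free_running_signal = [0, 0, 0, 0, 5, 9, 5, 0]
lag = best_lag(reference_signal, free_running_signal, max_lag=3)
```

Once a lag has been estimated, frames from the free-running camera could be shifted by that amount before their thermal data are mapped onto the reconstruction. A real system would also need to handle sub-frame offsets and clock drift, which this sketch ignores.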

In operation, the 0.5-Gbyte DDR memory in each of the embedded vision systems is capable of storing up to 15 s of images from each of the 12 cameras. As images are captured, Bayer interpolation is performed on the images from the visible-light cameras, and the results are transferred to the hard drive of each computer. 3-D image reconstruction can then be performed using the visible data.
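Bayer interpolation turns the single-channel mosaic from a color sensor into a full RGB image by estimating the two missing color values at every pixel from its neighbors. The sketch below shows a simple neighborhood-averaging (bilinear-style) version in plain Python. The RGGB filter layout and the function name `demosaic_bilinear` are assumptions for illustration; the article does not say which pattern or algorithm the system uses.

```python
def demosaic_bilinear(raw):
    """Demosaic a raw Bayer image given as a list of rows of ints.

    Assumes an RGGB layout (an assumption, not taken from the article):
        R G R G ...
        G B G B ...
    Returns a list of rows of (r, g, b) tuples.
    """
    height, width = len(raw), len(raw[0])

    def channel_at(y, x):
        # 0 = red, 1 = green, 2 = blue for an RGGB mosaic
        if y % 2 == 0:
            return 0 if x % 2 == 0 else 1
        return 1 if x % 2 == 0 else 2

    out = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = [0, 0, 0]
            counts = [0, 0, 0]
            # Collect samples of each color in the 3x3 neighborhood,
            # clipped at the image border.
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        c = channel_at(ny, nx)
                        sums[c] += raw[ny][nx]
                        counts[c] += 1
            pixel = [sums[c] // counts[c] if counts[c] else 0 for c in range(3)]
            # The color actually measured at this pixel is kept as-is.
            pixel[channel_at(y, x)] = raw[y][x]
            row.append(tuple(pixel))
        out.append(row)
    return out


# Tiny hypothetical 2x2 mosaic: R=10, G=20 / G=30, B=40
rgb = demosaic_bilinear([[10, 20], [30, 40]])
```

Production systems typically use edge-aware interpolation, often in camera firmware or on dedicated hardware, to reduce color fringing. This version only illustrates the principle.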

To configure the system, a user interface developed in Windows Presentation Foundation (WPF) by IMAGO was deployed on an Atom-based tablet computer from ads-tec (Leinfelden-Echterdingen, Germany). Interfaced to the system via Gigabit Ethernet, this tablet allows the user to configure the system, adjust camera parameters, display output from a single camera or multiple cameras, and start and replay captured scenes.

Because the system was required to operate in standalone mode in outdoor environments, it needed a portable power supply. The system is powered from a 41-Ah lead-acid battery from Exide Technologies (Büdingen, Germany). To ensure that power did not fail, the voltage from this unit was digitized using custom analog-to-digital converter (ADC) circuitry on the system's custom auxiliary board. This enabled dynamic monitoring of the power from the system's tablet-based interface.
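Monitoring a battery through an ADC usually means scaling the raw converter reading back to a voltage and comparing it with warning thresholds. The sketch below illustrates that conversion. Every number in it is an assumption chosen for illustration (a 12-bit converter, a 3.3-V reference, a 5:1 resistor divider, and thresholds suited to a nominal 12-V battery); none of these values, nor the function names, come from the article.

```python
def adc_to_battery_volts(counts, adc_bits=12, v_ref=3.3, divider_ratio=5.0):
    """Convert a raw ADC reading to the battery voltage.

    All defaults are hypothetical: a 12-bit ADC with a 3.3-V reference,
    fed through a divider that scales the battery voltage down by 5.
    """
    max_count = (1 << adc_bits) - 1
    if not 0 <= counts <= max_count:
        raise ValueError("ADC reading out of range")
    return counts / max_count * v_ref * divider_ratio


def battery_status(volts, warn_below=11.8, critical_below=11.0):
    """Classify the battery voltage (thresholds are assumed values)."""
    if volts < critical_below:
        return "critical"
    if volts < warn_below:
        return "low"
    return "ok"


# Hypothetical mid-scale reading from the auxiliary board's ADC
reading = 3100
volts = adc_to_battery_volts(reading)
status = battery_status(volts)
```

A user interface such as the tablet application described above could poll a value like `status` periodically and warn the operator before a recording session is interrupted.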
