HYPERSPECTRAL IMAGING: Spectral vision systems support the food industry

Computer vision is currently being used in a number of food-related applications, including inspection and grading of fruits and vegetables as well as assessing the quality of meat, fish, cheese, and bread.

As part of a collaborative project between AIDO, the Technological Institute of Optics, Color and Imaging (Valencia, Spain; www.aido.es), and AINIA (Paterna, Spain; www.ainia.es), a technological center with more than 1000 associated food-industry companies, researchers are studying different types of spectral vision systems. The aim of the project, known as Optifood-Spectral, is to explore how spectral image-analysis techniques can be applied to serve the needs of integrators that develop automated analysis systems.

To demonstrate the effectiveness of such systems when applied to food production, Emilio Ribes, PhD, Multi-Spectral Unit manager, and his colleagues at AIDO have developed two prototype systems using hyperspectral and infrared (IR) imaging techniques.

In the development of the hyperspectral imaging system, a Xeva-1.7-640 camera from Xenics (Leuven, Belgium) with a spectral response from 900–1700 nm was coupled to a liquid-crystal tunable filter from Cambridge Research & Instrumentation Inc. (Hopkinton, MA, USA). In this manner, spectral samples with bandwidths of 10 nm could be obtained across the 950–1650-nm spectral range.
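The article gives the filter's 10-nm bandwidth and its 950–1650-nm range, but it does not give the step between bands. The sketch below generates the tuning wavelengths under one hypothetical schedule: contiguous bands spaced one bandwidth apart. `band_centers` is an illustrative helper and is not part of any vendor software.

```python
def band_centers(start_nm, stop_nm, step_nm):
    """Return the filter tuning wavelengths from start_nm to stop_nm (inclusive)."""
    if step_nm <= 0:
        raise ValueError("step_nm must be positive")
    # Number of steps that fit in the range, plus one for the starting band
    n = int(round((stop_nm - start_nm) / step_nm)) + 1
    return [start_nm + i * step_nm for i in range(n)]

# Hypothetical schedule: one band every 10 nm across the 950-1650 nm range
centers = band_centers(950, 1650, 10)
print(len(centers), centers[0], centers[-1])  # 71 950 1650
```

With this spacing the range holds 71 bands. A coarser step or a narrower range would reduce the band count, and the acquisition time with it.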

Software developed in MATLAB from The MathWorks (Natick, MA, USA) is used to configure the system, including the number of spectral bands to acquire and the exposure time for each band. The total acquisition time therefore depends on both the exposure times and the number of bands. Acquiring 70 bands, for example, takes approximately 2.5 min.
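The bands are captured one after another, so total acquisition time is roughly the sum of the per-band exposures plus any fixed overhead each band adds. The sketch below assumes a simple additive model. Only the 70-band, 2.5-min figure comes from the article. The overhead value and the exposure times are hypothetical.

```python
def acquisition_time_s(exposures_s, overhead_s=0.0):
    """Total time to capture one spectral cube, in seconds.

    Each band costs its own exposure time plus a fixed per-band overhead
    (a hypothetical stand-in for filter tuning, settling and readout).
    """
    return sum(exposure + overhead_s for exposure in exposures_s)

# Average time per band implied by the article: 70 bands in about 2.5 min
avg_per_band_s = 2.5 * 60 / 70
print(f"{avg_per_band_s:.2f} s per band")  # 2.14 s per band

# Hypothetical configuration: 70 bands at 1.5 s each plus 0.5 s overhead per band
total_s = acquisition_time_s([1.5] * 70, overhead_s=0.5)
print(f"{total_s / 60:.2f} min")  # 2.33 min
```

Under this model, shortening exposures only in the bands that matter for a given product cuts the cycle time roughly in proportion.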

Ribes and his colleagues analyzed the spectral response of a piece of pork using ENVI 4.1 image-analysis software from Exelis Visual Information Solutions (Boulder, CO, USA). In the ranges from 1200 to approximately 1350 nm and from 1500 to 1650 nm, meat can be clearly distinguished from fat and bone (see Fig. 1). Fat and bone exhibit similar spectral profiles overall, but they differ somewhat in the 950–1100-nm range.
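The article does not say which classification method, if any, was applied in ENVI. As one illustration, the sketch below swaps in the spectral angle mapper (SAM), a common classifier for hyperspectral data. It restricts the comparison to the two windows where meat is reported to be clearly separable. The reference spectra are synthetic and hypothetical, and `spectral_angle_map` is an illustrative helper.

```python
import numpy as np

def spectral_angle_map(cube, references, wavelengths, windows):
    """Label each pixel with the reference spectrum at the smallest spectral angle.

    cube:        (rows, cols, bands) reflectance array
    references:  dict mapping class name -> (bands,) reference spectrum
    wavelengths: (bands,) band centers in nm
    windows:     list of (lo_nm, hi_nm) ranges used for the comparison
    """
    wl = np.asarray(wavelengths, dtype=float)
    # Keep only the bands that fall inside one of the discriminative windows
    mask = np.zeros(wl.shape, dtype=bool)
    for lo, hi in windows:
        mask |= (wl >= lo) & (wl <= hi)
    pixels = np.asarray(cube, dtype=float)[..., mask].reshape(-1, int(mask.sum()))
    names = list(references)
    refs = np.stack([np.asarray(references[n], dtype=float)[mask] for n in names])
    # Cosine of the angle between every pixel spectrum and every reference spectrum
    norms = np.linalg.norm(pixels, axis=1, keepdims=True) * np.linalg.norm(refs, axis=1)
    cos = (pixels @ refs.T) / np.maximum(norms, 1e-12)
    angles = np.arccos(np.clip(cos, -1.0, 1.0))
    labels = np.argmin(angles, axis=1).reshape(np.shape(cube)[:2])
    return labels, names

# Synthetic, hypothetical spectra; real references would come from measured pixels
wl = np.arange(950, 1651, 10, dtype=float)
meat = 0.5 - 0.0003 * (wl - 950)
fat = 0.2 + 0.0004 * (wl - 950)
cube = np.array([[meat, fat], [0.7 * fat, 1.3 * meat]])
labels, names = spectral_angle_map(cube, {"meat": meat, "fat": fat}, wl,
                                   windows=[(1200, 1350), (1500, 1650)])
print([[names[i] for i in row] for row in labels])  # [['meat', 'fat'], ['fat', 'meat']]
```

SAM compares spectral shape rather than overall brightness. That is why the scaled pixels in the example (0.7 × fat, 1.3 × meat) are still assigned to the correct class.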

Ribes and his colleagues have also developed systems that highlight the differences between near-IR (NIR) and thermal IR (TIR) cameras when they are used to classify food products.

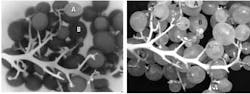

One system consists of a UI-6410SE uEye 640 × 480-pixel GigE camera from IDS Imaging (Obersulm, Germany) and a 384 × 288-pixel Miricle TIR camera from Thermoteknix (Cambridge, UK) mounted on a mechanical arm. Images of grapes acquired after they were removed from refrigeration are shown for both the TIR camera and the NIR camera (see Fig. 2).

In the 15 min after removal from refrigeration, the grapes warm toward the 25°C ambient room temperature. However, grapes with more water content change temperature more slowly than grapes with less water content. Grape size can also affect the warming rate, because smaller objects change temperature more quickly than larger ones.
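The article's explanation is consistent with the textbook lumped-capacitance model (Newton's law of heating). In that model, a body's time constant grows with its heat capacity and with its volume-to-surface ratio. The sketch below assumes spherical grapes, a uniform internal temperature (a reasonable approximation only when the Biot number is below about 0.1), and a fixed convective coefficient. The 4°C starting temperature, the radii, the specific heats, and `h` are hypothetical values chosen to show the trend. None of them are measurements from the study.

```python
import math

def grape_temperature(t_s, radius_m, specific_heat, t0_c=4.0, t_amb_c=25.0,
                      h=10.0, density=1050.0):
    """Temperature (°C) of a spherical grape t_s seconds after leaving the fridge.

    Lumped-capacitance model (Newton's law of heating):
        T(t) = T_amb + (T0 - T_amb) * exp(-t / tau),  tau = rho * c * V / (h * A)
    For a sphere V / A = r / 3, so tau grows with both radius and specific heat.
    All default parameter values are hypothetical.
    """
    tau = density * specific_heat * radius_m / (3.0 * h)
    return t_amb_c + (t0_c - t_amb_c) * math.exp(-t_s / tau)

# Hypothetical grapes: water content is modeled only through specific heat (J/kg/K)
small_dry = grape_temperature(15 * 60, radius_m=0.008, specific_heat=3600.0)
large_wet = grape_temperature(15 * 60, radius_m=0.011, specific_heat=3900.0)
print(f"small/drier: {small_dry:.1f} °C, large/wetter: {large_wet:.1f} °C")
```

Both effects act in the same direction here: a smaller radius and a lower specific heat each shorten the time constant. With these illustrative numbers, the smaller, drier grape ends up roughly 3°C warmer after 15 min.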

In the left (TIR) image, the grapes with less water content (i.e., riper grapes) and/or of smaller size (A) appear lighter than the grapes with higher water content and/or of larger size (B). This difference cannot be observed with the NIR camera (right image).
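The article describes the A/B difference visually and does not say how, or whether, it was quantified. As one possible way to automate the split, the sketch below swaps in Otsu's method, which is not taken from the study. Otsu's method picks a temperature threshold that separates grape pixels into warmer and cooler groups. The sketch assumes three things: the TIR frame has already been converted to per-pixel temperatures, grape pixels have already been separated from the background, and the data are synthetic. `otsu_threshold` is an illustrative helper.

```python
import numpy as np

def otsu_threshold(values, bins=256):
    """Return the threshold that maximizes between-class variance (Otsu's method).

    Values >= the returned threshold form the upper (warmer) class.
    """
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                    # pixel count at or below bin i
    w1 = w0[-1] - w0                        # pixel count above bin i
    s0 = np.cumsum(hist * centers)          # intensity sum at or below bin i
    mu0 = s0 / np.maximum(w0, 1)
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[np.argmax(between) + 1]    # upper edge of the best split bin

# Hypothetical per-pixel temperatures (°C) of grape pixels already separated
# from the background; two groups that warmed at different rates
rng = np.random.default_rng(0)
cooler = rng.normal(14.0, 0.3, size=500)   # e.g. larger / wetter grapes (B)
warmer = rng.normal(17.0, 0.3, size=300)   # e.g. smaller / drier grapes (A)
temps = np.concatenate([cooler, warmer])
threshold = otsu_threshold(temps)
is_warm = temps >= threshold
print(f"threshold {threshold:.2f} °C, warm pixels: {is_warm.sum()}")
```

Otsu's method works well when the two groups form distinct histogram peaks. When warming differences are small relative to sensor noise, averaging temperatures per grape before thresholding would likely be more robust than classifying individual pixels.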

Both the hyperspectral and the IR-based systems from AIDO are prototypes. They have not yet been field-tested or commercialized, a task that Ribes and his colleagues hope will be taken up by the many members of the AINIA alliance.

Note: The Optifood-Spectral project was funded in 2011 by the Valencia Regional Government, through its Institute of the Small and Medium Enterprises (IMPIVA), and by the European Regional Development Fund (ERDF).
