NOVEL IMAGERS: Gigapixel camera employs multiple image sensors

Military applications require optical imaging systems that can view objects at long distances with high resolution and large fields of view (FOV). For this reason, the Defense Advanced Research Projects Agency (DARPA; www.darpa.mil) initiated its Advanced Wide FOV Architectures for Image Reconstruction and Exploitation (AWARE) program to fund the development of new focal-plane arrays and camera designs.

Taking up this challenge, David Brady, PhD, and colleagues at Duke University (www.duke.edu) have developed a 1-Gpixel camera capable of imaging scenes with a 120° FOV. Its architecture is radically different from that of traditional high-resolution cameras, which pair a single imager with a single high-resolution lens.

"While it is feasible to build gigapixel imagers, it is challenging to build lenses with enough resolving power to match the resolution of such sensors," Brady says. To overcome this limitation, the multiscale camera developed at Duke divides the imaging task between an objective lens and a multitude of smaller optics rather than forming an image with a single monolithic lens.

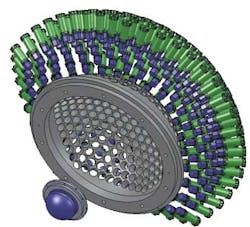

The camera employs a multiscale lens system composed of a primary 6-cm objective lens and a secondary micro-camera array (see Fig. 1). The 6-cm objective, manufactured by Photon Gear (www.photongear.com), focuses light onto a 30-cm hemispherical dome with 220 openings for micro-optic cameras (Fig. 1b). Light reaching the dome is captured by the micro-cameras mounted in these openings.

In each micro-camera, a relay lens demagnifies the 2.5° portion of the field that the camera views through the dome, and the resulting image is captured by a 14-Mpixel CMOS imager from Aptina Imaging (www.aptina.com). An FPGA within the camera housing corrects for chromatic and spherical aberrations introduced by the objective lens.
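To put the 2.5° micro-camera field in perspective, the short Python sketch below estimates the angle subtended by a single pixel and the minimum number of micro-camera fields needed to span the 120° system FOV in one direction. The 4384-pixel sensor width is an assumption (a typical width for a 14-Mpixel 4:3 sensor), not a figure from the article, and the tile count ignores the overlap that adjacent micro-cameras need for stitching.

```python
import math

# Figures from the article
MICROCAM_FOV_DEG = 2.5   # field of view seen by each micro-camera
SYSTEM_FOV_DEG = 120.0   # total field of view of the camera

# ASSUMPTION (not from the article): the 2.5-degree field spans the
# full width of a 14-Mpixel sensor that is 4384 pixels wide.
SENSOR_WIDTH_PX = 4384


def ifov_microradians(fov_deg: float, pixels: int) -> float:
    """Angle subtended by one pixel (instantaneous FOV), in microradians."""
    return math.radians(fov_deg) / pixels * 1e6


def tiles_across(system_fov_deg: float, tile_fov_deg: float) -> int:
    """Minimum number of non-overlapping fields needed to span a 1-D angle."""
    return math.ceil(system_fov_deg / tile_fov_deg)


print(f"Per-pixel IFOV: {ifov_microradians(MICROCAM_FOV_DEG, SENSOR_WIDTH_PX):.1f} urad")
print(f"Micro-camera fields across 120 deg: {tiles_across(SYSTEM_FOV_DEG, MICROCAM_FOV_DEG)}")
```

Under these assumptions each pixel subtends roughly 10 µrad, and at least 48 micro-camera fields are needed along a single 120° arc, before any allowance for overlap.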

Images captured by each pair of micro-cameras are transferred over an Ethernet interface to a micro-camera control module, which also sets the focus and exposure of its cameras. To focus, each camera is moved laterally by a motor mounted on its micro-camera module. The control modules in turn pass the images to a number of Ethernet switches, which are connected over Gigabit Ethernet to a Linux-based computer cluster.
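Because each control module serves a pair of micro-cameras, the number of modules follows directly from the number of cameras deployed. The Python sketch below models that grouping; the `ControlModule` class and `assign_pairs` function are hypothetical illustrations, not part of the Duke design, and the model ignores the switch and cluster layers.

```python
from dataclasses import dataclass, field


@dataclass
class ControlModule:
    """Hypothetical model of one micro-camera control module."""
    module_id: int
    camera_ids: list[int] = field(default_factory=list)


def assign_pairs(num_cameras: int, cams_per_module: int = 2) -> list[ControlModule]:
    """Group micro-cameras into control modules, two cameras per module by default."""
    modules: list[ControlModule] = []
    for start in range(0, num_cameras, cams_per_module):
        cams = list(range(start, min(start + cams_per_module, num_cameras)))
        modules.append(ControlModule(module_id=len(modules), camera_ids=cams))
    return modules


deployed = assign_pairs(98)    # current configuration
full_dome = assign_pairs(220)  # every opening in the dome populated
print(len(deployed), len(full_dome))  # prints "49 110"
```

With two cameras per module, the current 98-camera configuration implies 49 control modules, and a fully populated 220-opening dome would need 110.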

After these images are transferred, an image-stitching algorithm, developed at the University of Arizona (www.arizona.edu), is used to register the separate images and generate a composite image, a process that takes approximately 20 sec (see Fig. 2).
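The article does not describe how the University of Arizona algorithm registers the micro-camera images. As an illustration only, the Python sketch below uses phase correlation, a standard technique for estimating the translation between two overlapping image tiles; it is not the Arizona method, and a full stitching pipeline would also need to handle rotation, lens distortion, sub-pixel offsets, and blending.

```python
import numpy as np


def phase_correlation_shift(ref: np.ndarray, moving: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (row, col) shift such that moving ~= np.roll(ref, shift)."""
    f_ref = np.fft.fft2(ref)
    f_mov = np.fft.fft2(moving)
    cross_power = f_mov * np.conj(f_ref)
    cross_power /= np.abs(cross_power) + 1e-12  # keep phase only
    corr = np.fft.ifft2(cross_power).real       # sharp peak at the shift
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint correspond to negative shifts (FFT wrap-around)
    shifts = [p if p <= s // 2 else p - s for p, s in zip(peak, corr.shape)]
    return int(shifts[0]), int(shifts[1])


# Usage: recover a known offset between two synthetic tiles
rng = np.random.default_rng(0)
tile = rng.random((128, 128))
shifted = np.roll(tile, shift=(5, -12), axis=(0, 1))
print(phase_correlation_shift(tile, shifted))  # prints "(5, -12)"
```

Phase correlation is attractive for this kind of task because it relies on FFTs, which scale well to large tiles, and because normalizing the cross-power spectrum makes the peak relatively insensitive to brightness differences between neighboring cameras.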

In its current incarnation, the multiscale imaging system has 98 micro-cameras deployed. In this configuration, transferring images from all 98 cameras to the host computer cluster takes 13 sec.
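The 98-camera and 13-sec figures allow a rough estimate of the raw pixel count and the average data rate into the cluster. The Python sketch below assumes one 14-Mpixel frame per camera and 1 byte per pixel; neither assumption comes from the article, and the real payload depends on bit depth and any compression.

```python
# Figures from the article
NUM_MICROCAMS = 98
PIXELS_PER_SENSOR = 14e6  # 14-Mpixel Aptina CMOS imager
TRANSFER_TIME_S = 13.0

# ASSUMPTIONS (not from the article): one frame per camera, 8-bit pixels
FRAMES_PER_CAMERA = 1
BYTES_PER_PIXEL = 1

raw_pixels = NUM_MICROCAMS * PIXELS_PER_SENSOR * FRAMES_PER_CAMERA
payload_bytes = raw_pixels * BYTES_PER_PIXEL
throughput_mbit_s = payload_bytes * 8 / TRANSFER_TIME_S / 1e6

print(f"Raw pixels: {raw_pixels / 1e9:.2f} Gpixel")
print(f"Average throughput: {throughput_mbit_s:.0f} Mbit/s")
```

Under these assumptions the cameras deliver about 1.37 Gpixel of raw data, presumably more than the stitched 1-Gpixel image because neighboring fields overlap, at an average rate of roughly 844 Mbit/s, on the order of a single Gigabit Ethernet link's capacity.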

Although the system has already been shown to capture gigapixel images, Brady expects the next generation of multiscale imaging systems to use smaller electronics and optics while increasing the number of micro-cameras to between 500 and 1000.
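A naive extrapolation shows why the electronics must scale along with the optics. The Python sketch below assumes the same 14-Mpixel sensor and a transfer time that grows linearly with camera count at today's per-camera rate; both are illustrative assumptions, and the smaller, faster electronics Brady plans would presumably change the transfer figure.

```python
# Current system, from the article
CURRENT_CAMS = 98
CURRENT_TRANSFER_S = 13.0
PIXELS_PER_SENSOR = 14e6


def project(num_cams: int) -> tuple[float, float]:
    """Return (raw Gpixel, transfer seconds) ASSUMING linear scaling of today's data path."""
    raw_gpix = num_cams * PIXELS_PER_SENSOR / 1e9
    transfer_s = CURRENT_TRANSFER_S * num_cams / CURRENT_CAMS
    return raw_gpix, transfer_s


for n in (500, 1000):
    gpix, secs = project(n)
    print(f"{n} micro-cameras: {gpix:.0f} Gpixel raw, ~{secs:.0f} s to transfer")
```

Under these assumptions, 500 to 1000 micro-cameras would yield roughly 7 to 14 Gpixel of raw data, and with an unchanged data path a single transfer would take about 66 to 133 sec.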