Smart Software Secures Surveillance Systems

Wesley Cobb

Large-scale security systems comprise numerous hardware and software components that must be configured and maintained to ensure their effectiveness. In light of this, a major US transit agency has incorporated a software package capable of analyzing images captured by multiple cameras to identify anomalous events.

Many currently available surveillance systems are able to perform these functions, but they must be programmed to identify specific types of objects and their behaviors in certain scenes. As a result, they are expensive to implement and have limited capabilities. Software from Behavioral Recognition Systems (BRS) eliminates these restrictions by learning to autonomously identify and distinguish between normal and abnormal behavior within a scene, analyzing movements and activities on a frame-by-frame basis.

Using the AISight machine-learning-based video surveillance recognition software, the transit agency captures image sequences from cameras, detects and tracks objects, learns the patterns of behavior they exhibit, remembers those patterns, recognizes behaviors that deviate from them, and alerts operators to any unusual events.

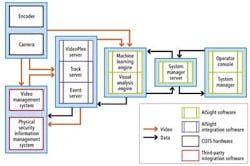

The BRS tool works in conjunction with a video management system (VMS) from Verint and a physical security interface management (PSIM) software package from CNL Software to provide a complete surveillance system for the agency (see Fig. 1).

Computer vision

Systems deployed by the transit agency at its rail and bus depots comprise a number of infrared cameras from FLIR Systems and daylight cameras from Axis Communications that acquire image data from a scene. The data are then passed to the BRS AISight system for analysis.

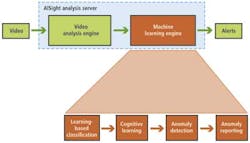

If the camera data are already in Real Time Streaming Protocol (RTSP) format, they are passed directly to the computer vision engine of the BRS system for further processing (see Fig. 2). This engine, also referred to as the video analysis engine, is the part of the AISight Analysis Server software that performs object detection, object tracking, and the characterization of objects and their movements in a scene.

If the data from the cameras are not in RTSP format, Videoplex middleware residing on a Windows-based server converts the data to the RTSP format prior to processing by the Linux-based AISight system.
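The routing decision described in the two paragraphs above amounts to a simple check on each camera's stream type. The Python sketch below assumes cameras are identified by URLs and that the converter re-publishes non-RTSP sources at a relay address; `CONVERTER_BASE`, `ingest_url`, and the `src` query parameter are hypothetical names, not part of Videoplex or AISight.

```python
from urllib.parse import quote, urlparse

# Hypothetical relay address for the conversion middleware; the host, port,
# path, and "src" query parameter are placeholders, not Videoplex's real API.
CONVERTER_BASE = "rtsp://converter.example.local:554/relay"


def ingest_url(camera_url: str) -> str:
    """Return the RTSP URL the analysis server should open for a camera."""
    scheme = urlparse(camera_url).scheme.lower()
    if scheme in ("rtsp", "rtsps"):
        return camera_url  # already RTSP: hand it straight to the vision engine
    # Otherwise assume the Windows-side converter re-publishes it as RTSP.
    return f"{CONVERTER_BASE}?src={quote(camera_url, safe='')}"
```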

To distinguish between the active elements in the foreground of a scene and the elements in the background, the computer vision engine captures several frames of the video images and presents them to an Adaptive Resonance Theory (ART) neural network.

Because background pixels tend to change less, and less erratically, than foreground pixels, over a period of time the neural network "learns" to identify which pixels represent part of the background image and which represent objects in the foreground.

One advantage of neural-network-based background subtraction is that the background can be removed consistently from each image even as weather and lighting conditions change the values of the background pixels; the neural network continuously adapts to such changes.
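To make the idea of learned, adaptive background pixels concrete, here is a heavily simplified, ART-inspired model for a single grayscale pixel. Each incoming value is compared against stored category prototypes; a category "resonates" only if the value falls within a vigilance tolerance, a matching prototype drifts toward the new value, and an unmatched value starts a new category. The most frequently matched category is treated as background. This is an illustrative sketch under those assumptions, not BRS's network; `ArtPixelModel` and its parameters are hypothetical.

```python
class ArtPixelModel:
    """Simplified ART-style clustering of one pixel's grayscale history."""

    def __init__(self, vigilance=20.0, rate=0.1, max_categories=4):
        self.vigilance = vigilance        # max distance for a category to resonate
        self.rate = rate                  # how fast a matched prototype adapts
        self.max_categories = max_categories
        self.categories = []              # list of [prototype, hit_count]

    def update(self, value):
        """Present one pixel value; return True if it matches the background."""
        best = None
        for cat in self.categories:
            d = abs(value - cat[0])
            if d <= self.vigilance and (best is None or d < abs(value - best[0])):
                best = cat
        if best is None:
            if len(self.categories) >= self.max_categories:
                # Forget the least-used category to make room for a new one.
                self.categories.remove(min(self.categories, key=lambda c: c[1]))
            best = [float(value), 0]
            self.categories.append(best)
        # Move the matched prototype toward the input (tracks slow lighting drift).
        best[0] += self.rate * (value - best[0])
        best[1] += 1
        background = max(self.categories, key=lambda c: c[1])
        return best is background
```

Because the matched prototype drifts with each update, a slow lighting change (a ramp of one gray level per frame, for example) stays classified as background, whereas an abrupt jump starts a new, rarely seen category and is reported as foreground.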

Having computed the background model of a scene, the computer vision engine then subtracts the background image from each new frame to identify groups of pixels that have changed.
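Subtracting the learned background and grouping the changed pixels can be sketched as follows. The sketch assumes grayscale frames stored as nested lists, a fixed difference threshold, and 4-connectivity for grouping; `changed_regions` and the threshold value are illustrative choices rather than AISight's actual parameters.

```python
from collections import deque


def changed_regions(frame, background, threshold=25):
    """Subtract the background and return 4-connected groups of changed pixels.

    Each group is a list of (y, x) coordinates.
    """
    h, w = len(frame), len(frame[0])
    changed = [[abs(frame[y][x] - background[y][x]) > threshold for x in range(w)]
               for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if changed[y][x] and not seen[y][x]:
                # Breadth-first flood fill collects one connected region.
                region, queue = [], deque([(y, x)])
                seen[y][x] = True
                while queue:
                    cy, cx = queue.popleft()
                    region.append((cy, cx))
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and changed[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions
```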

To decide whether groups of pixels represent an object that should be tracked by the system, the vision engine first identifies whether or not collections of pixels are moving in concert over several consecutive frames. If they are, then they are tentatively tagged as potential candidates (known as blobs) that may be worth tracking.
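One way to approximate the "moving in concert over several consecutive frames" test is to follow each pixel group's centroid and accept the group as a blob candidate only when it has been present for a minimum number of frames and its frame-to-frame displacement stays consistent. `is_blob_candidate`, `min_frames`, and `max_jitter` are hypothetical; the article does not specify how the coherence test is implemented.

```python
import math


def is_blob_candidate(centroids, min_frames=5, max_jitter=2.0):
    """Return True if a pixel group has moved coherently for min_frames frames.

    centroids: per-frame (x, y) centroid of the group, oldest first, with
    None for frames in which the group was not detected.
    """
    recent = centroids[-min_frames:]
    if len(recent) < min_frames or any(c is None for c in recent):
        return False
    steps = [(b[0] - a[0], b[1] - a[1]) for a, b in zip(recent, recent[1:])]
    mean_dx = sum(dx for dx, _ in steps) / len(steps)
    mean_dy = sum(dy for _, dy in steps) / len(steps)
    # "Moving in concert": each frame-to-frame step stays near the mean step.
    return all(math.hypot(dx - mean_dx, dy - mean_dy) <= max_jitter
               for dx, dy in steps)
```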

The video analysis engine creates a bounding box around each blob and extracts kinematic data for each one, including its position, current and projected direction, orientation, velocity, and acceleration. The system also acquires the optical properties of the foreground pixels inside each bounding box, such as the blob's shape, color patterns, and color diversity.
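The features listed above can be computed from a blob's pixels and its recent centroid history. This sketch assumes RGB frames stored as nested lists of tuples and uses finite differences over the last two frames for velocity and acceleration, second-order image moments for orientation, and a coarse color histogram as a stand-in for "color diversity". All of these are illustrative choices, and `BlobFeatures` and `blob_features` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class BlobFeatures:
    bbox: tuple             # (x_min, y_min, x_max, y_max) of the bounding box
    position: tuple         # centroid (x, y)
    velocity: tuple         # pixels per frame
    acceleration: tuple     # pixels per frame squared
    heading: float          # current direction of motion, radians
    projected: tuple        # next-frame centroid under constant velocity
    orientation: float      # principal-axis angle of the pixel cloud, radians
    mean_color: tuple       # average (r, g, b) inside the blob
    color_diversity: int    # number of occupied coarse color bins


def blob_features(pixels, image, prev_positions):
    """Kinematic and optical features for one blob.

    pixels: list of (y, x); image[y][x] is an (r, g, b) tuple;
    prev_positions: centroids from the two previous frames, oldest first.
    """
    xs = [x for _, x in pixels]
    ys = [y for y, _ in pixels]
    n = len(pixels)
    cx, cy = sum(xs) / n, sum(ys) / n
    (x2, y2), (x1, y1) = prev_positions
    vel = (cx - x1, cy - y1)
    acc = (vel[0] - (x1 - x2), vel[1] - (y1 - y2))
    # Second-order central moments give the blob's dominant axis.
    mu20 = sum((x - cx) ** 2 for x in xs) / n
    mu02 = sum((y - cy) ** 2 for y in ys) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in zip(xs, ys)) / n
    colors = [image[y][x] for y, x in pixels]
    mean_color = tuple(sum(c[i] for c in colors) / n for i in range(3))
    # Quantize each channel to 4 levels (64 bins total) and count bins in use.
    diversity = len({tuple(ch // 64 for ch in c) for c in colors})
    return BlobFeatures(
        bbox=(min(xs), min(ys), max(xs), max(ys)),
        position=(cx, cy),
        velocity=vel,
        acceleration=acc,
        heading=math.atan2(vel[1], vel[0]),
        projected=(cx + vel[0], cy + vel[1]),
        orientation=0.5 * math.atan2(2 * mu11, mu20 - mu02),
        mean_color=mean_color,
        color_diversity=diversity,
    )
```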

The system uses these features to track each blob across successive video frames. To do so, it matches the kinematic and optical properties of the blob at one location against those of neighboring regions in the image; the best fit then determines the most probable new location of the blob.
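The best-fit step can be sketched as a weighted distance between the blob's predicted position and reference color and those of each candidate region within a search radius. The weights, radius, and dictionary layout in `best_match` are assumptions for illustration; how AISight actually scores its matches is not described here.

```python
import math


def best_match(predicted_pos, ref_color, candidates, search_radius=30.0,
               w_pos=1.0, w_color=0.5):
    """Pick the candidate region most likely to be the tracked blob.

    candidates: list of dicts with 'position' (x, y) and 'mean_color' (r, g, b).
    Returns the index of the best candidate, or None if none is in range.
    """
    best_idx, best_cost = None, math.inf
    for i, c in enumerate(candidates):
        d_pos = math.dist(predicted_pos, c["position"])
        if d_pos > search_radius:
            continue  # only neighboring regions are considered
        d_col = math.dist(ref_color, c["mean_color"])
        # Combined kinematic + optical mismatch; lower is a better fit.
        cost = w_pos * d_pos + w_color * d_col
        if cost < best_cost:
            best_idx, best_cost = i, cost
    return best_idx
```

Weighting color below position means a nearby region of the right color wins over an even closer region of the wrong color, which is the behavior one would want when two people pass close to each other.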

Classifying the blobs

After identifying which of the blobs in the image are worth tracking and having captured both the kinematic and the optical properties associated with them, the AISight system then trains itself to classify the blobs.

The system assigns numerical values to the optical properties of each blob and combines them with the blob's kinematic data values. Together, these values form a multidimensional feature vector that positions the blob in the vector space of a self-organizing map -- a topographic organization of the data in which nearby locations in the map represent objects with similar properties.
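A self-organizing map of this kind can be trained in a few lines. The sketch below assumes feature vectors already scaled to comparable ranges, a small 3x3 grid, a Gaussian neighborhood, and a linearly decaying learning rate and radius. These are textbook SOM choices, not AISight's configuration, and `train_som` and `bmu` are hypothetical names.

```python
import math
import random


def train_som(data, rows=3, cols=3, epochs=50, lr0=0.5, seed=0):
    """Train a small 2-D self-organizing map on equal-length feature vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    sigma0 = max(rows, cols) / 2.0
    total = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for v in rng.sample(data, len(data)):  # shuffled copy each epoch
            frac = step / total
            lr = lr0 * (1.0 - frac) + 0.01        # learning rate decays
            sigma = sigma0 * (1.0 - frac) + 0.3   # neighborhood shrinks
            winner = bmu(weights, v)
            for node, w in weights.items():
                # Grid distance to the winner sets how strongly this node moves.
                g = (node[0] - winner[0]) ** 2 + (node[1] - winner[1]) ** 2
                h = math.exp(-g / (2.0 * sigma ** 2))
                for i in range(dim):
                    w[i] += lr * h * (v[i] - w[i])
            step += 1
    return weights


def bmu(weights, v):
    """Best-matching unit: grid coordinate of the node closest to v."""
    return min(weights, key=lambda node: math.dist(weights[node], v))
```

After training, neighboring grid nodes hold similar prototypes, so feature vectors from the same kind of subject land on the same or adjacent nodes; that is what produces the clusters of points that signal a recurring subject type.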

After the system has been presented with many such feature vectors, clusters of points emerge in specific regions of the map. Each cluster indicates that a particular type of subject with similar properties and movement, such as a bus or a train, has been observed repeatedly in the image sequences over the recording period.

One advantage of the self-organizing map is that the system can use it immediately to recognize an unusual subject type. Even if such a subject appears stable and repetitive frame after frame, the system will determine that it does not match any of the subject types it has learned and can alert an operator before any further processing.
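Given a trained map, one common way to implement this check is to measure how far a new feature vector lies from its nearest map node (its quantization error) and to flag it when that distance exceeds a threshold. The threshold value and the `is_novel` helper are hypothetical; the criterion AISight uses is not described here.

```python
import math


def is_novel(prototypes, feature_vector, threshold):
    """Flag a subject whose features lie far from every learned map node.

    prototypes: learned map-node weight vectors; threshold: largest distance
    still treated as a known subject type (a hypothetical tuning parameter).
    Returns (is_novel, distance_to_nearest_node).
    """
    nearest = min(math.dist(p, feature_vector) for p in prototypes)
    return nearest > threshold, nearest
```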

Learning behavior

Having classified object types in the image sequences, the machine learning engine in the AISight system then teaches itself the patterns of behavior that the objects exhibit, after which it can recognize any anomalous deviation from this behavior (see Fig. 3). The machine learning engine that performs this role comprises approximately 50 interlocking ART neural networks coordinated through a cognitive model that, in simplified fashion, replicates the perceptual processes of the human brain.

To reduce the number of variables presented to the neural networks, a numerical value is assigned to each type of object located in the map. This type classification (which could represent a bus, an individual, or baggage at a station) and its associated kinematic data are the only data presented to the neural networks for further processing.
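Concretely, the reduced input might be a short tuple: a numeric type code followed by kinematic values. The codes in `OBJECT_TYPES`, the `network_input` helper, and the choice of position and velocity as the kinematic fields are assumptions for illustration.

```python
# Hypothetical numeric codes for object types found as clusters in the map.
OBJECT_TYPES = {"bus": 0, "individual": 1, "baggage": 2}


def network_input(object_type, position, velocity):
    """Reduced input for the behavior networks: a type code plus kinematics only.

    Optical detail (shape, color) is deliberately dropped; the type code
    already summarizes it.
    """
    return (OBJECT_TYPES[object_type], *position, *velocity)
```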

Each neural network learns about a particular nuance of the behavior observed in the scene by analyzing the specific data presented to it, after which it can identify a set of regularly occurring behavioral patterns in the image sequences.

While one of the neural networks may be presented with data specifically to determine the behavioral relationships between the kinematic properties of the objects -- such as an individual and a train -- another may use a different set of data to learn about unusual pathways that an object, such as a bus, takes through a scene.

The behavioral patterns learned by the neural network engines are then stored as memories in an area of the system called the episodic model. Each of the neural network engines can create, retrieve, reinforce, or modify these memories, allowing them to continuously learn about patterns of events that occur within any scene.

Whenever the neural network engines observe object types and their behaviors, they compare those to their current memories. The less frequently they have observed an object type and its behavior in the past, the weaker their memory will be and the more unusual the system will consider the current activity to be.
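The create, retrieve, reinforce, and fade cycle described above can be sketched with a dictionary of memory strengths. Here a pattern is any hashable summary of an object type and its behavior, strength grows by one each time the pattern recurs and decays slightly with every observation, and unusualness is 1 / (1 + strength). `EpisodicMemory`, the decay factor, and the scoring formula are hypothetical, not BRS's episodic model.

```python
class EpisodicMemory:
    """Toy store of behavior patterns whose strength grows with repetition."""

    def __init__(self, decay=0.99):
        self.decay = decay      # per-observation fading of all memories
        self.strength = {}      # pattern key -> memory strength

    def observe(self, pattern):
        """Score how unusual a pattern is, then reinforce (or create) its memory."""
        s = self.strength.get(pattern, 0.0)   # retrieve; 0 if never seen
        unusualness = 1.0 / (1.0 + s)         # weak memory -> high score
        for key in self.strength:             # all memories fade slowly
            self.strength[key] *= self.decay
        self.strength[pattern] = self.strength.get(pattern, 0.0) + 1.0
        return unusualness
```

A pattern seen on every observation settles at a strength of about 1 / (1 - decay), so its score approaches a small floor, while a never-before-seen pattern always scores 1.0.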

After a learning period of approximately two weeks, the system has learned enough to distinguish unusual events from normal ones. Unusual events can then be flagged and an alert issued to an operator, who can take the appropriate action.
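Alert gating that respects the learning period could look like the following. The 14-day constant follows the article's "approximately two weeks"; the 0.9 threshold and the `should_alert` helper are assumptions, and the `unusualness` input is taken to be a score between 0 and 1.

```python
from datetime import datetime, timedelta

LEARNING_PERIOD = timedelta(days=14)  # "approximately two weeks"


def should_alert(unusualness, started, now, threshold=0.9):
    """Raise an alert only after the learning period and only for rare events."""
    if now - started < LEARNING_PERIOD:
        return False  # still learning what is normal for this scene
    return unusualness >= threshold  # hypothetical cut-off


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    print(should_alert(0.95, start, start + timedelta(days=15)))  # True
```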

Configuring the system

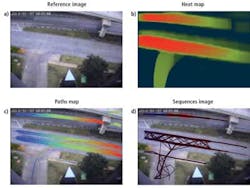

Users can configure the BRS AISight system software to modify alert criteria, manage individual access rights, and add cameras. The software also allows operators to view any of the cameras in the system, view alerts generated by the system, and review alerts generated in previous sessions (see Fig. 4).

To alert operators to unusual or suspicious behavior at each of its bus and rail depots, the transit agency's system was designed to operate seamlessly with a VMS from Verint. The VMS is responsible for recording image data from the cameras, storing them, and enabling operators to view images in real time.

To visualize the objects being tracked by the AISight software, its data are passed through "track server" middleware running on a Windows-based server, where they are converted into a format that the VMS can read and overlaid onto a live camera stream. This allows operators to see all the objects that the AISight system is tracking at any one time, whether they are suspicious or not.

In a similar fashion, "event server" middleware converts events that the AISight system flags as suspicious into a format that the VMS can read. In this way, the original camera images can be overlaid with color-coded graphics that highlight the behavior of objects in the scene learned by the neural networks (see Fig. 5).

The last element in the system deployed by the transit agency is a PSIM software package from CNL Software. Running on a remote server, this system integrates, connects, and correlates all the data from the VMS and AISight systems situated at the rail stations, rail yards, and bus depots.

This system correlates the information from the CCTV systems and other security subsystems such as intrusion detection and badge management systems. It allows operators to quickly visualize the entire environment and assess any alarm by reviewing related security information.
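The kind of cross-subsystem correlation a PSIM performs can be illustrated with a simple time-window grouping: alarms from the same site that follow one another within a set interval are bundled into one incident. The `Alarm` record, the source and site names, and the 60-second window are hypothetical; CNL Software's actual correlation rules are not described here.

```python
from dataclasses import dataclass


@dataclass
class Alarm:
    source: str   # e.g. "video-analytics", "intrusion", "badge" (hypothetical)
    site: str     # station, rail yard, or bus depot identifier
    time: float   # seconds since an arbitrary epoch


def correlate(alarms, window=60.0):
    """Group alarms at the same site that arrive within `window` seconds of
    the previous alarm in the group; returns incidents as lists of alarms."""
    incidents = []
    open_by_site = {}  # site -> most recent incident at that site
    for a in sorted(alarms, key=lambda a: a.time):
        group = open_by_site.get(a.site)
        if group and a.time - group[-1].time <= window:
            group.append(a)  # close enough in time: same incident
        else:
            group = [a]      # start a new incident for this site
            incidents.append(group)
            open_by_site[a.site] = group
    return incidents
```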

Although the BRS AISight system was developed to recognize patterns in video data, it could also be used to classify and compare nonvisual data together with the imaging data, allowing sound or sonar data, for example, to be acquired and analyzed simultaneously.

Wesley Cobb, PhD, is chief science officer at Behavioral Recognition Systems (Houston, TX, USA).

Company Info

Axis Communications

www.axis.com

Behavioral Recognition Systems

www.brslabs.com

CNL Software

www.cnlsoftware.com

FLIR Systems

www.flir.com

Verint

http://verint.com