VISION-GUIDED ROBOTICS: Mobile robots inspect rails, consumer goods

Coupling the latest technological advances in robotics with audio, visual, and tactile feedback systems and software is paving the way for the next generation of mobile robots. This was the theme of a presentation at this year's NIWeek by Luca Lattanzi, a machine-vision and robotics specialist with the Loccioni Group (www.loccioni.com). In his presentation, Lattanzi described the development of a mobile robot for railroad switch inspection and another for consumer goods inspection.

"While many rail inspection systems can assess the condition of the rail tracks," says Lattanzi, "these systems are not designed to measure the rail switches, the linked tapering rails that guide a train from one track to another." To inspect these, the Loccioni Group has developed Felix, an autonomous mobile robot that provides remote inspectors with visual profiles of these switches (see Fig. 1).

Mounted on the railway track, the Felix robot is equipped with two 3-D profilometers developed by Loccioni, based on a Z-Laser ZM18 red laser-line projector (www.z-laser.com) and an IDS UI-5490SE GigE camera. Dedicated diagnostic algorithms have also been developed to process and analyze the acquired data. The profilometer's 2-D camera captures the reflected laser light, a depth map is computed, and the contour and profile of the tracks and rail switches are determined from this depth map. From this information, the distance between the track and the switch point can also be determined. Image and measurement data are then transmitted wirelessly to an operator for further analysis.
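The article does not describe Loccioni's diagnostic algorithms, but the laser-line steps above can be illustrated with a minimal Python sketch. It assumes a grayscale frame stored as a list of rows, replaces a real triangulation model with a hypothetical linear calibration (`ref_row`, `mm_per_row`, `mm_per_col`), and treats any run of columns higher than a threshold as a rail head. The gap between the first two raised runs stands in for the track-to-switch-point distance; all function names are illustrative.

```python
from typing import List, Optional, Sequence, Tuple


def laser_line_rows(image: Sequence[Sequence[float]],
                    min_peak: float = 50.0) -> List[Optional[float]]:
    """Return the sub-pixel row of the laser line in each image column.

    Uses the intensity-weighted centroid of the brightest pixel and its two
    vertical neighbours; columns whose peak is below min_peak yield None.
    """
    n_rows, n_cols = len(image), len(image[0])
    rows: List[Optional[float]] = []
    for c in range(n_cols):
        column = [image[r][c] for r in range(n_rows)]
        peak = max(range(n_rows), key=lambda r: column[r])
        if column[peak] < min_peak:
            rows.append(None)  # laser line not visible in this column
            continue
        lo, hi = max(0, peak - 1), min(n_rows, peak + 2)
        weight = sum(column[lo:hi])
        rows.append(sum(r * column[r] for r in range(lo, hi)) / weight)
    return rows


def rows_to_heights(rows: Sequence[Optional[float]], ref_row: float,
                    mm_per_row: float) -> List[Optional[float]]:
    """Convert laser-line rows to heights with a hypothetical linear calibration.

    A real profilometer uses a calibrated triangulation model; here a line
    that appears higher in the image (smaller row index) means a taller surface.
    """
    return [None if r is None else (ref_row - r) * mm_per_row for r in rows]


def gap_between_raised_segments(heights: Sequence[Optional[float]],
                                threshold: float,
                                mm_per_col: float) -> Optional[float]:
    """Width of the low region separating the first two raised segments."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, h in enumerate(heights):
        raised = h is not None and h > threshold
        if raised and start is None:
            start = i
        elif not raised and start is not None:
            segments.append((start, i - 1))
            start = None
    if start is not None:
        segments.append((start, len(heights) - 1))
    if len(segments) < 2:
        return None  # fewer than two rail heads found in this profile
    end_first, start_second = segments[0][1], segments[1][0]
    return (start_second - end_first - 1) * mm_per_col


if __name__ == "__main__":
    # Synthetic 10 x 8 frame: the line sits on row 8 over the floor and on
    # row 3 over two raised "rail heads" (columns 2-3 and 6-7).
    frame = [[0.0] * 8 for _ in range(10)]
    for col in (0, 1, 4, 5):
        frame[8][col] = 200.0
    for col in (2, 3, 6, 7):
        frame[3][col] = 200.0
    heights = rows_to_heights(laser_line_rows(frame), ref_row=8.0, mm_per_row=2.0)
    print(heights)
    print(gap_between_raised_segments(heights, threshold=5.0, mm_per_col=0.5))
```

Running the demo prints the height profile `[0.0, 0.0, 10.0, 10.0, 0.0, 0.0, 10.0, 10.0]` and a gap of `1.0` (two columns at 0.5 mm each). The centroid step is what gives laser-line profilers sub-pixel depth resolution.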

"In many robotics applications," says Lattanzi, "it is not only necessary for such robots to take 3-D visual measurements but also to perform acoustic and vibration analysis." This is especially important where robots are expected to test consumer products.

Tasked with building a robot for quality-control testing of washing machines, Lattanzi and his colleagues developed Mo.Di.Bot, a mobile quality-control robot that emulates many of the functions of a human operator and can autonomously navigate a testing laboratory containing multiple machines (see Fig. 2).

Multiple sensory systems were integrated around a RobuLaB80 mobile platform from Robosoft (www.robosoft.com). To emulate the movement of the human hand, the robot is equipped with a dexterous three-fingered hand with tactile sensors from Schunk. The hand is in turn mounted on a MINI45 six-axis force/torque transducer from ATI Industrial Automation (www.ati-ia.com) and a Schunk lightweight arm with seven degrees of freedom.

For environmental perception and modeling, the robot is equipped with both a SICK S3000 collision-avoidance and safety laser scanner and a Microsoft (www.microsoft.com) Kinect 3-D sensor. Audio data are captured with an electret microphone, while visual data are acquired with a UI-1480SE 2560 × 1920-pixel color USB 2.0 camera from IDS.

Data captured from these devices are transferred over USB to host PC memory using a USB-9229/9239 interface from National Instruments (NI), while an NI PCI-4472 analog interface card captures the audio data. The same card also transfers data to the PC from a single-point PIVS 300 industrial vibrometer from Polytec, which measures the vibration of the unit under test.
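The article does not detail how the vibration and acoustic signals are analyzed. As a hedged illustration of a typical first step on a block of samples from a card such as the PCI-4472, the Python sketch below computes the RMS level and the dominant frequency using a plain discrete Fourier transform. The function names, sample rate, and synthetic test tone are assumptions; a production system would use an FFT library and apply windowing.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def dominant_frequency(samples: Sequence[float], sample_rate_hz: float) -> float:
    """Frequency (Hz) of the largest DFT magnitude bin, ignoring DC.

    A naive O(n^2) DFT keeps the sketch dependency-free; the frequency
    resolution is sample_rate_hz / len(samples).
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset first
    best_bin, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        re = sum(x[i] * math.cos(2 * math.pi * k * i / n) for i in range(n))
        im = -sum(x[i] * math.sin(2 * math.pi * k * i / n) for i in range(n))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_bin, best_mag = k, mag
    return best_bin * sample_rate_hz / n


if __name__ == "__main__":
    fs = 1000.0  # hypothetical sample rate in Hz
    tone = [math.sin(2 * math.pi * 50.0 * i / fs) for i in range(200)]
    print(round(rms(tone), 4), dominant_frequency(tone, fs))
```

With 200 samples at 1 kHz, the bins are 5 Hz apart, so the 50 Hz test tone lands exactly on bin 10 and the demo reports `0.7071 50.0`.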

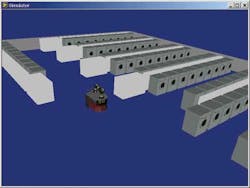

To allow the robot to perform tasks autonomously, the washing-machine quality-control inspection system was first modeled using Microsoft Robotics Studio and NI's Robotics Simulator (see Fig. 3). Modeling the location of each washing machine to be analyzed allows the robot's trajectory to be optimized.
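The article does not say how the trajectory was optimized. One simple interpretation, sketched here in Python under that assumption, is choosing the order in which to visit the modeled machine positions so that total straight-line travel is shortest. The brute-force search is only practical for a handful of machines, and it ignores obstacles, which a real planner working from the simulated lab model would have to handle. The machine coordinates are hypothetical.

```python
import itertools
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def best_visit_order(start: Point,
                     machines: Sequence[Point]) -> Tuple[List[int], float]:
    """Return the machine visiting order with the shortest open path from start.

    Exhaustive search over permutations: exact, but O(n!) in the number of
    machines, so only suitable for small labs. Distances are straight-line.
    """
    best_order: List[int] = []
    best_len = math.inf
    for perm in itertools.permutations(range(len(machines))):
        pos, length = start, 0.0
        for idx in perm:
            length += math.dist(pos, machines[idx])
            pos = machines[idx]
        if length < best_len:
            best_order, best_len = list(perm), length
    return best_order, best_len


if __name__ == "__main__":
    # Hypothetical machine positions (metres) along one side of a lab.
    order, total = best_visit_order((0.0, 0.0), [(3.0, 0.0), (1.0, 0.0), (2.0, 0.0)])
    print(order, total)
```

The demo visits the machines nearest-first and prints `[1, 2, 0] 3.0`. For larger labs, a heuristic such as nearest-neighbor ordering followed by 2-opt improvement would replace the exhaustive search.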

Once modeling is complete, the robot autonomously moves to each machine, performing tasks such as opening and closing the machine's front door and starting specific machine cycles by manipulating its switches and rotary controls. Diagnostic tasks are then performed by the onboard vision inspection, vibration analysis, and acoustic measurement software, written in NI's LabVIEW graphical programming language. A video of the robot in action can be found at http://research.loccioni.com/projects/interaid/.