Kinect API Makes Low-Cost 3-D Imaging Systems Attainable

Andrew Wilson, Editor

With a price of just over $100, it is no wonder that Microsoft's Kinect holds the Guinness World Record as the fastest-selling consumer electronics device. Of the more than 18 million units sold to date, most are deployed by consumers eager to explore new ways to interact with their favorite games. However, the unit has also attracted interest from software developers wishing to discover whether they can apply the system's 3-D imaging capabilities to industrial and scientific processes.

Microsoft itself has encouraged the interest by offering developers a software development kit (SDK) to build applications around the device. This, in turn, has spawned a number of web sites -- most notably www.kinecthacks.com and http://openkinect.org -- dedicated to providing the latest code, news, and applications related to Kinect.

Based on range camera technology developed by PrimeSense, the Kinect employs an infrared (IR) laser emitter, an IR camera, and an RGB camera. To compute a depth map, a speckle pattern is first projected onto the scene. The reflected pattern captured by the IR camera is then compared with a stored reference pattern, and 3-D image data are extracted from the differences between the two (http://bit.ly/PFo48J).

Measuring resolution

In their paper "Accuracy and Resolution of Kinect Depth Data for Indoor Mapping Applications," Kourosh Khoshelham and Sander Oude Elberink of the University of Twente (www.itc.nl) determined that the point spacing in the depth direction is as large as 7 cm at a range of 5 m (http://bit.ly/RtNqRO). This correlates well with data from PrimeSense that specify the depth resolution of 1 cm at a 2-m distance and a spatial (x/y) resolution of 3 mm at the same range, as Mikkel Viager of the Technical University of Denmark (www.dtu.dk) points out in "Analysis of Kinect for mobile robots."
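The agreement between the two figures can be checked with a little arithmetic. For a triangulation-based sensor, the depth step grows roughly with the square of the range, which is the behavior Khoshelham and Oude Elberink report. The sketch below assumes pure quadratic scaling and extrapolates the PrimeSense figure of 1 cm at 2 m; the function name and its parameters are illustrative and are not part of any Kinect API.

```python
def depth_step_cm(range_m, ref_step_cm=1.0, ref_range_m=2.0):
    """Extrapolate depth resolution, assuming it scales with range squared.

    ref_step_cm at ref_range_m is the PrimeSense figure quoted above;
    the pure quadratic law is a simplifying assumption.
    """
    return ref_step_cm * (range_m / ref_range_m) ** 2


for r in (1.0, 2.0, 5.0):
    print(f"{r:.0f} m -> {depth_step_cm(r):.2f} cm")
```

Under this assumption the step at 5 m comes out at 6.25 cm, the same order as the 7 cm point spacing measured at that range.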

While this spatial and depth resolution may remain unacceptable for many high-resolution, high-speed 3-D vision systems, Microsoft's Kinect has found numerous applications in robotic guidance, gesture recognition, and medical imaging systems. Part of the success of these projects lies in the availability of Microsoft's Kinect application programming interface (API) and other third-party image-processing toolkits from companies such as The MathWorks, Eye Vision Technology (EVT), MVTec Software, and National Instruments (NI).

Microsoft's Kinect for Windows SDK supports applications built with C++, C#, or Visual Basic using Microsoft Visual Studio 2010. The Kinect for Windows SDK version 1.5 offers skeletal and facial tracking, while the Kinect for Windows Developer Toolkit uses a natural user interface (NUI) to allow developers to access audio, color, and depth image data (see Fig. 1). In addition, Microsoft's Kinect software runtime converts depth information into a skeletal outline that can be used for motion tracking.

Machine-vision software vendors are also offering development tools to allow data from the Kinect to be incorporated with their existing software for developing machine-vision and robot guidance systems. MVTec has implemented a simple HALCON interface to transform depth image data into metric x, y, and z data. Using these data, the company's HALCON library can then be used to develop systems for applications such as bin picking, packaging, or palletizing.
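The conversion from a depth image to metric coordinates can be illustrated with a standard pinhole camera model. The following is a minimal sketch of that general idea, not the HALCON interface: the function name is hypothetical, and the default focal lengths and principal point are placeholder values assumed for a 640 x 480 depth image rather than calibrated Kinect parameters.

```python
def depth_to_xyz(depth_mm, fx=580.0, fy=580.0, cx=320.0, cy=240.0):
    """Back-project a depth image (rows of millimetre values) to 3-D points.

    fx, fy (focal lengths in pixels) and cx, cy (principal point) are
    assumed placeholders; real values come from camera calibration.
    Returns a list of (x, y, z) tuples in metres. Pixels with a depth
    of 0 (no measurement) are skipped.
    """
    points = []
    for v, row in enumerate(depth_mm):
        for u, d in enumerate(row):
            if d <= 0:
                continue
            z = d / 1000.0           # millimetres to metres
            x = (u - cx) * z / fx    # horizontal offset from the optical axis
            y = (v - cy) * z / fy    # vertical offset from the optical axis
            points.append((x, y, z))
    return points
```

A pixel on the optical axis maps to x = y = 0, and the lateral offsets scale linearly with depth, which is why a fixed pixel size covers more of the scene at longer range.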

Similarly, the Kinesthesia Toolkit for Microsoft Kinect developed by the University of Leeds (www.leeds.ac.uk) presents the developer with an API for use with NI's LabVIEW programming language. Initially developed for medical rehabilitation applications and packaged using JKI's VI Package Manager, the Kinesthesia Toolkit allows NI LabVIEW programmers to access RGB video, depth camera, and skeletal data (see "Therapy system gets physical with Kinect-based motion analysis").

Machine-vision software support for the Kinect is also available from EVT. Recently, the company demonstrated contour matching of 3-D image data using its EyeVision 3D software. Like MVTec, EVT expects the capability to be deployed in palletization, depalletization, and object-recognition systems in conjunction with KUKA, ABB, and Stäubli robots.

Open-source code

These commercially available packages offer developers an easy way to interface the Kinect to familiar machine-vision software, but open-source implementations that use OpenCV are also available. Willow Garage has a Kinect module called ROS Kinect that allows the sensor to be used with ROS, an open-source meta-operating system for robots. The Kinect ROS stack contains low-level drivers, visualization launch files, and OpenCV tutorials and demonstration software (http://bit.ly/Q2FpXC).

Willow Garage is also part of a consortium sponsoring the Point Cloud Library (PCL), a project aimed at developing algorithms for 2-D/3-D image and point-cloud processing (http://pointclouds.org). The PCL framework contains filtering, surface reconstruction, registration, and model-fitting algorithms used to filter outliers from noisy data, stitch 3-D point clouds together, segment relevant parts of a scene, recognize objects based on their geometric appearance, and visualize surfaces from point clouds.
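To give a sense of what filtering outliers from noisy data involves, the sketch below implements a simple statistical outlier filter in plain Python. It illustrates the general technique (reject points whose mean distance to their nearest neighbors is unusually large) and does not use or reproduce the PCL API; the function name, the parameters k and std_ratio, and the brute-force neighbor search are all assumptions made for brevity.

```python
import math
import statistics


def remove_outliers(points, k=3, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours is
    more than std_ratio standard deviations above the average.

    points is a list of (x, y, z) tuples and must hold more than k points.
    The neighbour search is brute force (O(n^2)), fine only for small clouds.
    """
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    threshold = (statistics.mean(mean_dists)
                 + std_ratio * statistics.pstdev(mean_dists))
    return [p for p, d in zip(points, mean_dists) if d <= threshold]
```

A production library would replace the brute-force search with a spatial index such as a k-d tree, but the acceptance rule stays the same.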

Pick and place

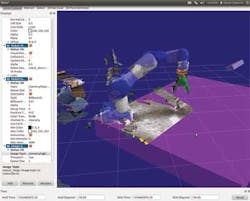

Gesture-recognition and 3-D modeling applications for the Kinect are commonplace, and now industrial and medical applications for the device are slowly emerging. At the Southwest Research Institute (www.swri.org), researchers have developed a system based around a robot from Yaskawa Motoman Robotics equipped with a three-fingered robotic gripper from Robotiq that is capable of identifying and picking randomly placed objects (see Fig. 2).

After acquiring data from the Kinect sensor, background points are removed to isolate the objects. The 3-D points representing the object are then analyzed, and a path-planning algorithm is used to position the robotic arm in the correct location. A video of the system in action can be found at http://bit.ly/zWRmzj.
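The article does not describe how the background points are removed, so the following is only one plausible approach: compare each depth frame with a depth image of the empty scene and keep the pixels that are noticeably closer to the camera. The function name, the reference background image, and the 15-mm margin are all hypothetical.

```python
def isolate_objects(depth_mm, background_mm, margin_mm=15):
    """Return a mask that is True where a pixel is closer to the camera
    than the empty-scene background by more than margin_mm.

    Both images are rows of millimetre depths of equal size. A value
    of 0 means no measurement, and such pixels are never marked.
    """
    mask = []
    for row, bg_row in zip(depth_mm, background_mm):
        mask.append([d > 0 and bg > 0 and (bg - d) > margin_mm
                     for d, bg in zip(row, bg_row)])
    return mask
```

The margin absorbs sensor noise, so it would need to grow with range given the depth resolution figures discussed earlier.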

Practical applications of robotic vision systems using the Kinect were also demonstrated at the 2011 Pack Expo show in Las Vegas by Universal Robotics. There the company demonstrated how its MotoSight 3D Spatial Vision system could be used in conjunction with Motoman robots for moving randomly placed boxes. Using 9000 Series webcams from Logitech and a Kinect sensor, the MotoSight 3D Spatial Vision located randomly positioned boxes, ranging in size from 6 to 24 in., at rates up to 12 boxes/min on pallets up to 48 in.² and 60 in. high.

Mobile robots

Kinect-based robotic guidance systems are also finding uses in mobile robotic applications. Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL; www.csail.mit.edu) have developed a robot that uses Microsoft's Kinect to navigate its surroundings (see Fig. 3). Using simultaneous localization and mapping (SLAM), the robot can build a map within an unknown environment using Kinect's video camera and IR sensor. By combining visual information with motion data, the robot can determine where within a building it is positioned (http://bit.ly/xAZQJK).

Such robots are also finding use in quality control systems, replacing the manual tasks once delegated to human operators. At NIWeek in August 2012, Luca Lattanzi of the Loccioni Group demonstrated a Kinect-enabled robot designed for quality control testing of washing machines (see October 2012 cover and "Mobile robots inspect rails, consumer goods"). In operation, the robot autonomously moves to each machine, opening and closing the front door of the machine and initiating machine cycles by manipulating switches and rotary controls.

Medical imaging

While the Kinect is gradually finding uses in bin-picking and mobile robotics, applications are also emerging in medical imaging systems, most notably in low-cost stroke rehabilitation equipment. At NIWeek, researchers at Leeds University showed how a system could be used to track a patient's skeleton, analyze gait in LabVIEW, and feed the data back to the system's operator.

Leeds University is not alone in developing healthcare and rehabilitation systems. Marjorie Skubic, professor of electrical and computer engineering in the MU College of Engineering at the University of Missouri (www.missouri.edu), is working with doctoral student Erik Stone to use the Kinect to monitor behavior and routine changes in patients. Stone's article, "Evaluation of an Inexpensive Depth Camera for Passive In-Home Fall Risk Assessment," won the best paper award at the Pervasive Health Conference held in Dublin, Ireland, last May (http://bit.ly/nIfely).

Similar efforts by collaborators from the University of Southampton (www.southampton.ac.uk) and Roke Manor Research (www.roke.co.uk) have resulted in a Kinect-based system that measures hand joint movement to help stroke patients recover manual agility at home. By creating an algorithm that tracks and measures hand joint angles and the fine dexterity of individual finger movements, the project aims to help people recovering from a stroke to perform regular and precise exercises so that they recover faster. Collected data can then be analyzed by therapists to continually monitor patient progress.
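Measuring a joint angle from tracked 3-D positions reduces to finding the angle between two bone segments that meet at the joint. The sketch below shows that calculation for three points; it is a generic geometric illustration, not the Southampton and Roke Manor algorithm, and the function and argument names are assumptions.

```python
import math


def joint_angle_deg(a, b, c):
    """Angle in degrees at joint b, formed by the 3-D points a-b-c.

    a and c are the neighbouring joints (for example, knuckle and
    fingertip). The points must be distinct so both segments have
    non-zero length.
    """
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))
```

A fully extended finger segment gives an angle near 180 degrees, and the value falls as the joint flexes, so logging it over an exercise session yields a simple range-of-motion trace for a therapist to review.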

Although the spatial and depth resolution of Kinect-based robotic and medical systems may be limited, the low cost of devices like this has engendered new interest in 3-D imaging. With this enticement, new 3-D algorithms, open-source code, and applications will surely emerge -- some of which may be applied to stereo-based, structured light, and time-of-flight systems.

3-D Imaging Software, Hardware, & System Vendors

ABB

www.abb.com

Eye Vision Technology (EVT)

www.evt-web.com

KUKA Robotics

www.kuka-robotics.com

Loccioni Group

www.loccioni.com

Logitech

www.logitech.com

The MathWorks

www.mathworks.com

Microsoft

www.microsoft.com

MVTec Software

www.mvtec.com

National Instruments (NI)

www.ni.com

PrimeSense

www.primesense.com

Robotiq

www.robotiq.com

Stäubli

www.staubli.us

Universal Robotics

www.universalrobotics.com

Willow Garage

www.willowgarage.com

Yaskawa Motoman Robotics

www.motoman.com

For more information about 3-D cameras, digitizers, structured lighting, and other components that can be combined for 3-D imaging systems, visit the Vision Systems Design Buyer's Guide (http://bit.ly/NNgN5v).