Machine vision: A look into the future

Andrew Wilson, Editor

Imagine a world where the laborious and repetitive tasks once performed by man are carried out by autonomous machines. A world in which crops are automatically harvested, sorted, processed, inspected, packed and delivered without human intervention. While this concept may appear to be the realm of science fiction, many manufacturers already employ automated inspection systems that incorporate OEM components such as lighting, cameras, frame grabbers, robots and machine vision software to increase the efficiency of their processes and the quality of their products.

While effective, these systems represent only a small fraction of a machine vision market that will expand to encompass every aspect of life, from harvesting crops and minerals to delivering the products manufactured from them directly to consumers' doors. In a future that demands increased efficiency, machine vision will be built into systems that automate entire production processes, effectively eliminating manual labor and the errors it entails.

Vision-guided robotic systems will play an increasingly important role as they become capable of tasks such as automated farming, animal husbandry, and crop monitoring and analysis. Autonomous vehicles incorporating vision, IR and ultrasound imagers will then be capable of delivering these products to be further processed. Automated sorting machines and meat-cutting systems will then prepare the product for further processing, after which many of the machines that are in use today will further process and package these products for delivery, once again by autonomous vehicles. In this future, customers at supermarkets will find no checkout personnel, as they will be replaced by automated vision systems that scan, weigh and check every product.

Unconstrained environments

Today's machine vision systems are often deployed in constrained environments where lighting can be carefully controlled. However, vision-guided robotic systems designed for agricultural tasks such as planting, tending and harvesting crops must operate in unconstrained environments where lighting and weather conditions may vary dramatically. Doing so requires systems that incorporate a number of different imaging techniques, depending on the application to be performed.

Currently the subject of many research projects, these systems divide agricultural tasks among automated systems that plant, tend and harvest. Since each task is application dependent, so too are the types of sensors used in each system. For crop picking, for example, it is necessary to measure the color of each fruit to determine its ripeness. For harvesting crops such as wheat, it may only be necessary to guide an autonomous system across the terrain.
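As a rough illustration of how color might serve as a ripeness cue, the following sketch counts the fraction of pixels in a fruit region whose hue lies close to red. The function names, the hue and saturation thresholds and the 80% decision level are all assumptions chosen for illustration; they are not taken from any of the systems described here, and a real system would need calibration for the crop and the lighting.

```python
import colorsys


def red_fraction(pixels, max_hue_deg=20.0, min_saturation=0.4):
    """Return the fraction of RGB pixels whose hue lies near red.

    pixels: iterable of (r, g, b) tuples with 0-255 components.
    The hue window and saturation floor are illustrative assumptions.
    """
    pixels = list(pixels)
    if not pixels:
        return 0.0
    red = 0
    for r, g, b in pixels:
        h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hue_deg = h * 360.0
        # Red wraps around 0 degrees on the hue circle.
        near_red = hue_deg <= max_hue_deg or hue_deg >= 360.0 - max_hue_deg
        if near_red and s >= min_saturation:
            red += 1
    return red / len(pixels)


def is_ripe(pixels, min_red_fraction=0.8):
    """Hypothetical decision rule: ripe if most of the fruit looks red."""
    return red_fraction(pixels) >= min_red_fraction
```

In use, the pixel list would come from a segmented fruit region; a fruit whose region is nine-tenths saturated red would pass the assumed 80% threshold, while a mostly green one would not.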

In such systems, stereo vision, LIDAR, INS and GPS systems and machine vision software will be used for path planning, mapping and classification of crops. Such systems are currently under development at many universities and research institutes around the world (see "Machine Vision in Agricultural Robotics – A short overview" by Emil Segerblad and Björn Delight of Mälardalen University (Västerås, Sweden; www.mdh.se); http://bit.ly/19MHn9M).

In many of these systems, 3D vision systems play a key role. In the development of a path planning system, for example, John Reid of Deere & Company (Moline, IL, USA; www.deere.com) has used a 22 cm baseline stereo camera from Tyzx (Menlo Park, CA, USA; www.tyzx.com) mounted on the front of a Deere Gator utility vehicle with automatic steering capabilities to calculate the position of potential obstacles and determine a path for the vehicle's on-board steering controller (http://bit.ly/1bRFQAX).
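To give a sense of how a stereo camera yields obstacle positions, the sketch below applies the standard pinhole stereo relation Z = f·B/d, where B is the baseline, f the focal length in pixels and d the disparity in pixels. Only the 22 cm baseline comes from the text; the focal length, principal point and disparity values are hypothetical, and the code is not a description of the Deere or Tyzx implementation.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m=0.22):
    """Range to a point from its stereo disparity (pinhole model).

    baseline_m defaults to the 22 cm baseline mentioned in the article;
    focal_length_px is an assumed calibration value.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def obstacle_position(u_px, disparity_px, focal_length_px, cx_px,
                      baseline_m=0.22):
    """Return (lateral offset, range) in metres for an image column u_px.

    cx_px is the assumed principal-point column of the reference camera.
    """
    z = depth_from_disparity(disparity_px, focal_length_px, baseline_m)
    x = (u_px - cx_px) * z / focal_length_px
    return x, z
```

With an assumed focal length of 800 pixels, a disparity of 16 pixels corresponds to a range of about 11 m. Because range varies inversely with disparity, a one-pixel matching error matters far more for distant obstacles than for near ones.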

Robotic guidance

While 3D systems can be used to aid the guidance of autonomous vehicles, 3D mapping can also aid crop spraying, perform yield analysis and detect crop damage or disease. To accomplish such mapping tasks, Michael Nielsen and his colleagues at the Danish Technological Institute (Odense, Denmark; www.dti.dk) used a Trimble GPS, a laser rangefinder from SICK (Minneapolis, MN, USA; www.sick.com), a stereo camera and a tilt sensor from VectorNav Technologies (Richardson, TX, USA; www.vectornav.com) mounted on a utility vehicle equipped with a halogen flood light and a custom-made xenon strobe. After scanning rows of peach trees, 3D reconstruction of an orchard was performed by using tilt-sensor-corrected GPS positions interpolated through encoder counts (http://bit.ly/1fxp36W).
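One simple way to picture interpolating GPS positions through encoder counts is shown below: each scan is tagged with a wheel-encoder count, and its position is placed proportionally between the two GPS fixes that bracket it. This is only a sketch under stated assumptions. The tuple layout, the function name and the use of straight-line interpolation are hypothetical, and the fixes are assumed to have been tilt-corrected already; the published system may differ.

```python
def interpolate_position(fix_a, fix_b, count):
    """Estimate (easting, northing) for a scan taken between two GPS fixes.

    Each fix is a hypothetical tuple (encoder_count, easting_m, northing_m)
    and is assumed to be tilt-corrected already. The scan position is found
    by linear interpolation on the encoder count.
    """
    count_a, east_a, north_a = fix_a
    count_b, east_b, north_b = fix_b
    if count_b == count_a:
        # Vehicle did not move between fixes; use the first fix.
        return east_a, north_a
    t = (count - count_a) / (count_b - count_a)
    return (east_a + t * (east_b - east_a),
            north_a + t * (north_b - north_a))
```

A scan recorded a quarter of the way (in encoder counts) between two fixes is placed a quarter of the way along the line joining them, which gives each laser or camera frame a position even though GPS updates arrive less often than scans.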

While 3D mapping can determine path trajectories and map fields and orchards, it can also be used to classify fruits and plants. Indeed, this is the aim of a system developed by Ulrich Weiss and Peter Biber at Robert Bosch (Schwieberdingen, Germany; www.bosch.de). To demonstrate that it is possible to distinguish multiple plants using a low-resolution 3D laser sensor, an FX6 sensor from Nippon Signal (Tokyo, Japan; www.signal.co.jp) was used to measure the distance and reflectance intensity of the plants using infrared pulsed laser light with a precision of 1 cm. After 3D reconstruction, supervised learning techniques were used to identify the plants (http://bit.ly/19ALVMM).
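The article does not say which supervised learning technique was used, so the following sketch substitutes a nearest-centroid classifier purely to illustrate the idea of learning from labeled examples. The two features (a plant height and a mean reflectance value) and the labels are hypothetical stand-ins for whatever descriptors are extracted from the 3D point cloud.

```python
def train_centroids(samples):
    """Compute one mean feature vector (centroid) per label.

    samples: list of (label, feature_vector) pairs, e.g. features derived
    from distance and reflectance measurements (assumed, not from the paper).
    """
    sums, counts = {}, {}
    for label, features in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc]
            for label, acc in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is closest in squared distance."""
    def squared_distance(label):
        return sum((c - f) ** 2 for c, f in zip(centroids[label], features))
    return min(centroids, key=squared_distance)
```

Training on a handful of labeled plants and then calling `classify` on a new feature vector returns the label of the nearest class mean. Stronger classifiers follow the same train-then-predict pattern.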

Automated harvesting

Once crops have been identified, robots and vision systems will be employed to harvest them automatically. Such a system for harvesting strawberries, for example, has been developed by Guo Feng and his colleagues at the Shanghai Jiao Tong University (Shanghai, China; www.sie.sjtu.edu.cn). In the design of the system, a two-camera imaging system was mounted onto a harvesting robot designed for strawberry picking. A 640 x 480 DXC-151A from Sony (Park Ridge, NJ, USA; www.sony.com) mounted on the top of the robot frame captured images of 8-10 strawberries. Another camera, a 640 x 480 EC-202 II from Elmo (Plainview, NY, USA; www.elmousa.com), was installed on the end effector of the robot to image one or two strawberries.

While the Sony camera localized the fruit, the Elmo camera captured images of strawberries at a higher resolution. Images from both cameras were then captured by an FDM-PCI MULTI frame grabber from Photron (San Diego, CA, USA; www.photron.com) interfaced to a PC. A ring-shaped fluorescent lamp installed around the local camera provided the stable lighting required for fruit location (http://bit.ly/16mWhmq).

Although many such robotic systems are still in early stages of development, companies such as Wall-Ye (Mâcon, France; http://wall-ye.com) have demonstrated the practical use of such systems. The company's solar powered vine pruning robot Wall-Ye incorporates four cameras and a GPS system to perform this task (Figure 1). A video of the robot in action can be viewed at http://bit.ly/QDFy1q.

Crop grading

Machine vision systems are also playing an increasingly important role in the grading of crops once harvested. In many systems, this requires the use of multispectral image analysis. Indeed, this is the approach taken by Olivier Kleynen and his colleagues at the Universitaire des Sciences Agronomiques de Gembloux (Gembloux, Belgium; www.gembloux.ulg.ac.be) in a system to detect defects on harvested apples.

To accomplish this, a MultiSpec Agro-Imager from Optical Insights (Santa Fe, NM, USA; www.optical-insights.com) that incorporated four interference band-pass filters from Melles Griot (Rochester, NY, USA; www.cvimellesgriot.com) was coupled to a CV-M4CL 1280 x 1024 pixel monochrome digital camera from JAI (San Jose, CA, USA; www.jai.com). In operation, the MultiSpec Agro-Imager projects onto a single CCD sensor array four images of the same object corresponding to four different spectral bands. Images from the camera, which was equipped with a Cinegon lens from Schneider Optics (Hauppauge, NY, USA; www.schneideroptics.com), were then acquired using a Grablink Value Camera Link frame grabber from Euresys (Angleur, Belgium; www.euresys.com). The multispectral images were then used to determine the quality of the fruit (http://bit.ly/19nGkdG).
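Because the four spectral images share one sensor, software must first separate them before any per-band analysis. The sketch below assumes, purely for illustration, that the four images occupy the four quadrants of the frame, so that a 1280 x 1024 frame yields four 640 x 512 band images. The actual layout produced by the optics is not stated in the article, and a real system would also need to register the sub-images to one another.

```python
def split_bands(frame):
    """Split a frame (list of pixel rows) into four quadrant images.

    Assumes a 2 x 2 quadrant layout of the four spectral sub-images,
    which is a hypothetical arrangement. Order of the result:
    top-left, top-right, bottom-left, bottom-right.
    """
    half_rows = len(frame) // 2
    half_cols = len(frame[0]) // 2
    top = frame[:half_rows]
    bottom = frame[half_rows:2 * half_rows]
    return [
        [row[:half_cols] for row in top],
        [row[half_cols:2 * half_cols] for row in top],
        [row[:half_cols] for row in bottom],
        [row[half_cols:2 * half_cols] for row in bottom],
    ]


def pixel_spectrum(bands, row, col):
    """Return the four band intensities seen at one object location."""
    return tuple(band[row][col] for band in bands)
```

Once the bands are separated, each object point is described by four intensities rather than one, and it is this short spectrum per pixel that a defect classifier would work from.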

Other methods such as the use of SWIR cameras have also been used for similar purposes. Renfu Lu of Michigan State University (East Lansing, MI, USA; www.msu.edu), for example, has shown how an InGaAs area array camera from UTC Aerospace Systems (Princeton, NJ, USA; www.sensorsinc.com) covering the spectral range from 900 to 1700 nm, mounted to an imaging spectrograph from Specim (Oulu, Finland; www.specim.fi), can detect bruises on apples (http://bit.ly/zF8jlN).

While crop picking robots have yet to be fully realized, those used for grading and sorting fruit are no longer the realm of scientific research papers. Indeed, numerous systems now grade and sort products ranging from potatoes and dates to carrots and oranges. While many of these systems use visible light-based products for these tasks, others incorporate multispectral image analysis.

Last year, for example, Com-N-Sense (Kfar Monash, Israel; www.com-n-sense.com) teamed with Lugo Engineering (Talme Yaffe, Israel; www.lugo-engineering.co.il) to develop a system capable of automatic sorting of dates (http://bit.ly/KN2ITf). Using diffuse dome lights from Metaphase (Bristol, PA, USA; www.metaphase-tech.com) and Prosilica 1290C GigE Vision color cameras from Allied Vision Technologies (Stadtroda, Germany; www.alliedvisiontec.com), the system is capable of sorting dates at speeds as fast as 1400 dates/min.

Multispectral image analysis is also being used to perform sorting tasks. At Insort (Feldbach, Austria; www.insort.at), for example, a multispectral camera from EVK DI Kerschhaggl (Raaba, Austria; www.evk.co.at) has been used in a system to sort potatoes, while Odenberg (Dublin, Ireland; www.odenberg.com) has developed a system that can sort fruit and vegetables using an NIR spectrometer (http://bit.ly/zqqr38).

Packing and wrapping

After grading and sorting, such products must be packed and wrapped for shipping. Often this requires that the crops are manually packed and wrapped by human operators. Alternatively, they can be transferred to other automated systems that can perform this task. Once packed, these goods may be finally inspected by vision-based systems. In either case, numerous steps are required before such products can be shipped to their final destination.

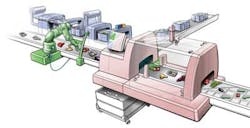

In an effort to automate the entire sorting, packaging and checking process, the European Union has announced a project known as PicknPack (www.picknpack.eu) that aims to unite the entire production chain (Figure 2). Initiated last November, the system will consist of three parts: a sensing system that assesses the quality of products before or after packaging; a vision-controlled robotic handling system that picks and separates the product from a harvest bin or transport system and places it in the right position in a package; and an adaptive packaging system that can accommodate various types of packaging. When the system is complete, human intervention will be reduced to a minimum.

Unmanned vehicles

After packing, of course, such goods must be transported to their final destination. Today, manned vehicles are used to perform this task. In future, however, such tasks will be relegated to autonomous vehicles. At present, says Professor William Covington of the University of Washington School of Law (Seattle, WA, USA; www.law.washington.edu), fully independent vehicles that operate without instructions from a server based on updated map data remain in the research prototype stage.

By 2020, however, Volvo expects accident-free cars and "road trains" guided by a lead vehicle to become available. Other automobile manufacturers such as GM, Audi, Nissan and BMW all expect fully autonomous, driverless cars to become available during this time frame (http://bit.ly/1ibdWxv). These will be equipped with sensors such as radar, lidar, cameras, IR and GPS systems to perform this task.

Automated retail

While today's supermarkets rely heavily on traditional bar-code readers to price individual objects, future check-out systems will employ sophisticated scanning, weighing and pattern recognition to relieve human operators of such tasks.

Already, Toshiba-TEC (Tokyo, Japan; www.toshibatec.co.jp) has developed a supermarket scanner that uses pattern recognition to recognize objects without the use of bar-codes (http://bit.ly/18KCRXp). Others such as Wincor Nixdorf (Paderborn, Germany; www.wincor-nixdorf.com) have developed a fully automated system known as the Scan Portal that the company claims is the world's first practicable fully automatic scanning system (Figure 3). A video of the scanner at work can be found at http://bit.ly/rSG5HB.

Whether it be crop grading, picking, sorting, packaging, or shipping, automated robotic systems will impact the production of every product made by man, effectively increasing the efficiency and quality of products and services along the way. Although developments currently underway can make this future a reality, some of the technologies required still need to be perfected for this vision to emerge.

Companies mentioned

Allied Vision Technologies

Stadtroda, Germany

www.alliedvisiontec.com

Com-N-Sense

Kfar Monash, Israel

www.com-n-sense.com

Danish Technological Institute

Odense, Denmark

www.dti.dk

Deere & Company

Moline, IL, USA

www.deere.com

Elmo

Plainview, NY, USA

www.elmousa.com

EVK DI Kerschhaggl

Raaba, Austria

www.evk.co.at

Euresys

Angleur, Belgium

www.euresys.com

Insort

Feldbach, Austria

www.insort.at

JAI

San Jose, CA, USA

www.jai.com

Lugo Engineering

Talme Yaffe, Israel

www.lugo-engineering.co.il

Mälardalen University

Västerås, Sweden

www.mdh.se

Melles Griot

Rochester, NY, USA

www.cvimellesgriot.com

Metaphase

Bristol, PA, USA

www.metaphase-tech.com

Michigan State University

East Lansing, MI, USA

www.msu.edu

Nippon Signal

Tokyo, Japan

www.signal.co.jp

Odenberg

Dublin, Ireland

www.odenberg.com

Optical Insights

Santa Fe, NM, USA

www.optical-insights.com

Photron

San Diego, CA, USA

www.photron.com

Robert Bosch

Schwieberdingen, Germany

www.bosch.de

Schneider Optics

Hauppauge, NY, USA

www.schneideroptics.com

Shanghai Jiao Tong University

Shanghai, China

www.sie.sjtu.edu.cn

SICK

Minneapolis, MN, USA

www.sick.com

Sony

Park Ridge, NJ, USA

www.sony.com

Specim

Oulu, Finland

www.specim.fi

Toshiba-TEC

Tokyo, Japan

www.toshibatec.co.jp

Tyzx

Menlo Park, CA, USA

www.tyzx.com

Universitaire des Sciences Agronomiques de Gembloux

Gembloux, Belgium

www.gembloux.ulg.ac.be

University of Washington School of Law

Seattle, WA, USA

www.law.washington.edu

UTC Aerospace Systems

Princeton, NJ, USA

www.sensorsinc.com

VectorNav Technologies

Richardson, TX, USA

www.vectornav.com

Wall-Ye

Mâcon, France

http://wall-ye.com

Wincor Nixdorf

Paderborn, Germany

www.wincor-nixdorf.com
