Vision makes light work of egg case palletizing

Cris Holmes

Hickman's Family Farms (Buckeye, AZ, USA; www.hickmanseggs.com) has deployed many automated systems at its egg laying and production facility to ensure that the eggs it produces, inspects and packages are of consistent quality.

Today, in a highly integrated process, eggs are gathered automatically from laying houses onto belts which transport them to a production facility. There, they are lifted onto moving tracks and passed through a variety of systems that wash, grade and sort them by size. Once sorted, the eggs are packed into plastic, Styrofoam or cardboard packages and stamped with a best-before date.

Cartons of eggs are then packed into boxes prior to shipment. To increase productivity at the plant, eight packing lanes are used, each one dedicated to packing cartons printed with the branding of a specific customer. Once packed, the boxes of eggs are conveyed through the plant, where bar code labels that identify the contents of each box are affixed to them. Lastly, the bar codes are read and the data is used by a robot to stack the boxes onto pallets, which are then shipped to customers.

In the past, the process of labeling the boxes to ensure that the products inside them could be identified was performed manually. Rolls of identification stickers were printed out at the start of the day and two to three times during a shift, depending on how many boxes were to be packaged. These were then affixed to the boxes after they had been filled with cartons of eggs.

This manual process had its limitations. Since the bar codes on the stickers contain a packing date, any surplus stickers that remained unused at the end of the day had to be destroyed. More importantly, if a sticker fell off or was not placed on the box, it was impossible to identify which customer the box had been packed for without opening it. Furthermore, if the stickers were not placed on the correct side of the box, the bar code reader was unable to read the code, and the automated system could not direct the box to the correct lane for palletizing.

Vision identifies egg boxes

To improve the production process, the owners of Hickman's Family Farms turned to engineers at Impact Vision Technologies (IVT; Phoenix, AZ, USA; www.go-ivt.com) to develop a system that identifies the type of egg cartons packed inside open boxes as they travel down a conveyor. An ink jet printer then prints the appropriate bar code on both sides of the box. Not only was the system required to identify the cartons, it was also essential that it could be retrained to recognize cartons of existing sizes when the artwork on the packaging was changed.

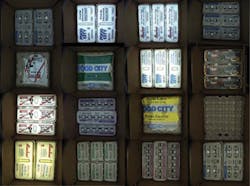

Compounding the inspection problem was the fact that the boxes of eggs travel down the conveyor in any orientation. Furthermore, a box can contain a variety of sizes of egg cartons - packs of six eggs, a dozen, or eighteen eggs. Each of the egg cartons within the boxes can also feature artwork and labels specific to an individual customer (Figure 1).

The vision system hardware developed to identify the boxes of eggs employs a 1296 x 966 pixel M150 color camera from Datalogic (Minneapolis, MN, USA; www.datalogic.com) fitted with a 28 mm lens. The camera is interfaced to a Datalogic MX40 embedded vision processor over a Power over Ethernet interface. It is mounted above an aperture in the center of a DLP-300 x 300 white flat diffused LED panel from Smart Vision Lights (Muskegon, MI, USA; www.smartvisionlights.com) that illuminates the open tops of the boxes of eggs as they move under the vision system at a rate of 30 boxes per minute (Figure 2). Images from the camera are then transferred to the MX40 embedded vision processor for processing.

To build the software for the system, Impact Vision Technologies used the Datalogic Impact Software Suite. The software suite comprises two software packages - the Vision Program Manager (VPM) and the Control Panel Manager (CPM). While specific functions in the VPM were drawn upon to process and analyze the images of the tops of the egg cartons in the boxes, the CPM was used to create operator interface panels for the system, enabling an operator to make adjustments to the system using a flat panel touch screen display.

Training the system

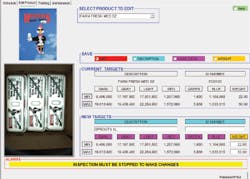

To identify specific types of cartons of eggs contained in the boxes, the vision system must be trained to create a unique signature for each one. To do so, an operator places the system into a teach mode using an interface panel on the touch screen display. The name of the product to be trained, together with a unique ID number that identifies the customer, the number of eggs in each case and their size, is then selected from a drop down menu.

Ten boxes of egg cartons are then passed in different orientations through the vision station and later through a weigh station on the conveyor. Unique visual and weight signatures are then stored in a database (Figure 3).

To identify the type of egg cartons in the box - whether they contain six, twelve, or eighteen eggs - the image captured by the M150 color camera is first filtered to remove the printed detail on the top of the cartons. Once filtered, a pattern-matching tool is used to determine the number of specific geometrical shapes formed by the egg cartons in the box. From the number of such geometrical shapes, the type of carton in the box can be derived.

Next, the RGB image captured by the camera is converted into a grayscale image. The shades of gray in the image are then divided into three ranges - dark, gray and light - after which a blob tool detects areas in the image in which the dark, gray and light values fall within prescribed ranges. Calculating the size of these areas then provides three unique identifiers for each package of eggs (Figure 4 a, b and c).
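The grayscale step can be illustrated with a minimal Python sketch. It is not the Datalogic blob tool: it simply counts the pixels that fall into each band rather than finding connected blobs, the band limits in `BANDS` are hypothetical, and the common ITU-R BT.601 luma weights are assumed for the color-to-gray conversion.

```python
# Hypothetical gray-level limits for the three bands (0-255 scale).
BANDS = {"dark": (0, 84), "gray": (85, 169), "light": (170, 255)}


def to_gray(pixel):
    """Convert an (R, G, B) pixel to a gray level using BT.601 luma weights."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def band_areas(image):
    """Return the pixel count (area) of each gray band in the image."""
    areas = {name: 0 for name in BANDS}
    for row in image:
        for pixel in row:
            level = to_gray(pixel)
            for name, (low, high) in BANDS.items():
                if low <= level <= high:
                    areas[name] += 1
                    break
    return areas


# Tiny 2 x 3 test image; each entry is an (R, G, B) tuple.
image = [
    [(0, 0, 0), (255, 255, 255), (128, 128, 128)],
    [(10, 10, 10), (250, 250, 250), (200, 30, 30)],
]
print(band_areas(image))  # {'dark': 3, 'gray': 1, 'light': 2}
```

The three counts play the role of the three grayscale identifiers; in a real system they would be measured on full-resolution images.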

While useful, the grayscale identifiers alone are insufficient to identify each package, since packages of differently sized eggs for a single customer may feature remarkably similar artwork. Hence, it was important to complement the grayscale information with identifiers that categorize how much of a given color is found in the image. To do so, a blob tool was employed to detect areas in the image with specific color characteristics. By using the tool to find green, red and blue blobs in the image (Figure 4 d, e and f) and then determining their size, it is possible to create three additional identifiers that characterize the contents of each box.
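A similar sketch shows how three color identifiers might be derived. Here a pixel is counted as red, green or blue when that channel exceeds both others by a margin; the `MARGIN` value and this dominance rule are assumptions made for illustration, not the criteria used by the actual blob tool.

```python
MARGIN = 40  # hypothetical minimum lead of the dominant channel


def dominant_color(pixel):
    """Return 'red', 'green' or 'blue' if one channel clearly dominates, else None."""
    channels = dict(zip(("red", "green", "blue"), pixel))
    name = max(channels, key=channels.get)
    runner_up = max(v for k, v in channels.items() if k != name)
    return name if channels[name] - runner_up >= MARGIN else None


def color_areas(image):
    """Return the pixel count (area) of clearly red, green and blue regions."""
    areas = {"red": 0, "green": 0, "blue": 0}
    for row in image:
        for pixel in row:
            name = dominant_color(pixel)
            if name is not None:
                areas[name] += 1
    return areas


# Tiny 2 x 3 test image; gray and white pixels have no dominant color.
image = [
    [(200, 30, 30), (20, 180, 40), (128, 128, 128)],
    [(10, 20, 220), (210, 40, 50), (255, 255, 255)],
]
print(color_areas(image))  # {'red': 2, 'green': 1, 'blue': 1}
```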

In addition to using the size of the area of the blobs, the location of the three largest blobs is used to generate additional signature points. One signature point is created if all three blobs are in an expected size range, while two additional signature points are created if the two minor blobs are within a specific distance and relative angle to the major blob. The location of the largest blob is also compared to five of the largest blobs in the image, and signature points are created according to its relative orientation to them.
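The first three geometric signature points can be sketched as follows. The `Blob` type, the tolerances and the expected distances and angles are all hypothetical, and angles are measured in the image frame for simplicity; how the real system copes with boxes arriving in any orientation is not described here.

```python
import math
from dataclasses import dataclass


@dataclass
class Blob:
    x: float      # centroid column, pixels
    y: float      # centroid row, pixels
    area: float   # blob area, pixels


def relative_pose(major, minor):
    """Distance and angle (degrees, 0-360) of a minor blob seen from the major blob."""
    dx, dy = minor.x - major.x, minor.y - major.y
    return math.hypot(dx, dy), math.degrees(math.atan2(dy, dx)) % 360


def signature_points(blobs, size_range, expected, dist_tol=10.0, angle_tol=15.0):
    """Count up to three signature points from the three largest blobs."""
    top3 = sorted(blobs, key=lambda b: b.area, reverse=True)[:3]
    if len(top3) < 3:
        return 0
    major, minors = top3[0], top3[1:]
    low, high = size_range
    # One point if all three blobs fall inside the expected size range.
    points = 1 if all(low <= b.area <= high for b in top3) else 0
    # One point per minor blob at the expected distance and angle.
    for minor, (exp_dist, exp_angle) in zip(minors, expected):
        dist, angle = relative_pose(major, minor)
        angle_err = abs((angle - exp_angle + 180) % 360 - 180)
        if abs(dist - exp_dist) <= dist_tol and angle_err <= angle_tol:
            points += 1
    return points


blobs = [Blob(100, 100, 5000), Blob(200, 100, 3000),
         Blob(100, 150, 2500), Blob(10, 10, 100)]
expected = [(100.0, 0.0), (50.0, 90.0)]  # (distance, angle) per minor blob
print(signature_points(blobs, (2000, 6000), expected))  # 3
```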

The unique signature data points associated with each type of box of cartons are complemented by data on the weight of the box. The box is weighed after the vision inspection process using a 4693i conveyor-based scale from the Thompson Scale Company (Houston, TX, USA; www.thompsonscale.com), and data from the scale is sent to the MX40 embedded vision processor via Ethernet. All the signature points are then recorded to create a unique signature for each type of box, which is stored in the system's database and used to identify the boxes during the production process (Figure 5).
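How a measured signature might be compared with the stored ones is not detailed, but one plausible approach is a per-feature tolerance check. In the sketch below, the product IDs, feature names, values and the 10% tolerance are all hypothetical; the example is chosen so that two products with near-identical artwork are separated only by weight.

```python
def match_signature(measured, database, tolerance=0.10):
    """Return the one product ID whose stored features all lie within
    the relative tolerance of the measured values, or None."""
    matches = [
        product_id
        for product_id, stored in database.items()
        if all(
            key in measured and abs(measured[key] - ref) <= tolerance * abs(ref)
            for key, ref in stored.items()
        )
    ]
    # No match or an ambiguous match both count as unidentified.
    return matches[0] if len(matches) == 1 else None


# Hypothetical trained signatures: similar artwork, different egg sizes.
database = {
    "1001": {"dark_area": 12000, "gray_area": 30000,
             "light_area": 8000, "weight_kg": 11.2},
    "1002": {"dark_area": 12100, "gray_area": 30500,
             "light_area": 7900, "weight_kg": 13.5},
}
measured = {"dark_area": 12300, "gray_area": 29500,
            "light_area": 8100, "weight_kg": 11.0}
print(match_signature(measured, database))  # 1001
```

Treating an ambiguous match as unidentified is a deliberately conservative choice: a box that cannot be identified with confidence is better left unmarked than mislabeled.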

Aside from identifying the type of cartons of eggs in the boxes, the system also enables an operator to download a daily production schedule from the company's Microsoft Dynamics (Redmond, WA, USA; www.microsoft.com/en-us/dynamics) NAV (Navision) enterprise network system. The production schedule determines the types of egg cartons to be packed on each of the eight packing lines. To enable an operator to visualize the packaging process, an operator interface panel provides a written description of each type of carton that is being packed in each lane. Using a pull down menu on the interface panel, an operator can stop the production run at any of the eight packing stations and reschedule the system to pack different cartons (Figure 6).

In production

In operation, boxes of eggs now pass through the inspection station and are weighed, and the type of packs within them is verified. If the box has been packaged correctly, the VPM software passes the identified product ID to a UBS Printing Group (Corona, CA, USA; www.ubsprint.com) printer over an Ethernet interface. The printer then generates a product identification label and GS1 and UPC bar codes that are printed on both sides of the box. If the box has been packed with the wrong cartons, however, no bar code is printed on it.

The bar codes printed on the boxes are read by a BL600 bar code reader from Keyence (Osaka, Japan; www.keyence.com) and each box is then assigned to a specific lane. An M410 robot from Fanuc (Rochester Hills, MI, USA; www.fanucamerica.com) removes the boxes from the line and stacks them onto a pallet. If no bar code is present, the box travels past the stacking robot into a storage area where it can be inspected and the egg cartons inside manually repacked.
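The print-and-route decision described above amounts to a simple rule, sketched here in Python. The function name, the product IDs and the product-to-lane table are hypothetical; in the plant, the decision is split between the vision software, the printer, the bar code reader and the robot.

```python
def route_box(product_id, lane_by_product):
    """Decide whether a bar code is printed and where the box ends up.

    product_id is None when the box could not be verified.
    """
    lane = lane_by_product.get(product_id) if product_id is not None else None
    if lane is None:
        # No bar code is printed, so the box passes the robot unstacked.
        return {"print_bar_code": False, "destination": "manual repack area"}
    return {"print_bar_code": True, "destination": f"pallet lane {lane}"}


# Hypothetical schedule mapping product IDs to palletizing lanes.
lane_by_product = {"1001": 1, "1002": 2}

print(route_box("1002", lane_by_product))
# {'print_bar_code': True, 'destination': 'pallet lane 2'}
print(route_box(None, lane_by_product))
# {'print_bar_code': False, 'destination': 'manual repack area'}
```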

Since the system was deployed at Hickman's Family Farms, the company has saved $150,000 per year because 27,000 hand-applied identification stickers costing 2.5 cents each no longer need to be affixed to the boxes. What is more, mismarked, improperly packed and damaged boxes have been eliminated, significantly reducing the number of products returned to the company by its customers.

Cris Holmes, President, Impact Vision Technologies, Phoenix, AZ (www.go-ivt.com)

Companies mentioned

Datalogic

Minneapolis, MN, USA

www.datalogic.com

Fanuc

Rochester Hills, MI, USA

www.fanucamerica.com

Hickman's Family Farms

Buckeye, AZ, USA

www.hickmanseggs.com

Impact Vision Technologies

Phoenix, AZ, USA

www.go-ivt.com

Keyence

Osaka, Japan

www.keyence.com

Microsoft Dynamics

Redmond, WA, USA

www.microsoft.com/en-us/dynamics

Smart Vision Lights

Muskegon, MI, USA

www.smartvisionlights.com

Thompson Scale Company

Houston, TX, USA

www.thompsonscale.com