Embedded imaging: FPGAs speed crowd monitoring analysis

Today, most airports, inner cities and shopping malls use security surveillance systems to monitor the people who pass through them. Even so, when a potentially threatening situation arises, a human operator must still spot it by watching feeds from multiple cameras. Because watching every feed live would require a large number of operators, it is often not until much later, when archived footage is replayed, that a person or group of people can be identified.

Recognizing this, Dr. Shoab Khan and Muhammad Bilal at the Center for Advanced Studies in Engineering (Islamabad, Pakistan; www.case.edu.pk) have developed an FPGA-based system designed for autonomous monitoring and surveillance of crowd behavior. In this way, should an unusual event occur, it can be immediately reported to an operator located at a central control station.

To build their crowd motion-classification and monitoring system, Khan and Bilal used an optical flow method to track specific features in an image across multiple frames.

Rather than use the popular Kanade-Lucas-Tomasi (KLT) feature tracker for this purpose, they chose a template-matching scheme since, according to Khan, it is better suited to motion estimation in images of low or varying contrast.

Developed in Microsoft Visual C++ with the OpenCV library, the template-matching algorithm divides each image frame into smaller rectangular patches. Motion is then computed between the current and previous images using a method based on the sum of weighted absolute differences (SWAD). Each patch yields a motion vector that describes the extent and direction of motion between the two sequential images.
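The patch-matching step can be illustrated with a minimal sketch. It is written in plain Python rather than the Visual C++/OpenCV code the researchers used, and the function names, patch size, search range and weight table are all assumptions made for illustration; the article does not say how the SWAD weights were chosen.

```python
# Illustrative sketch only: names, patch size, search range and weights
# are assumptions, not details taken from the researchers' implementation.

def swad(prev, curr, top, left, dy, dx, size, weights):
    """Sum of weighted absolute differences between a patch in `prev`
    and the same-sized patch shifted by (dy, dx) in `curr`."""
    total = 0
    for r in range(size):
        for c in range(size):
            a = prev[top + r][left + c]
            b = curr[top + dy + r][left + dx + c]
            total += weights[r][c] * abs(a - b)
    return total


def motion_vector(prev, curr, top, left, size, search, weights):
    """Return the (dy, dx) shift within +/-search pixels with the lowest SWAD.
    Ties keep the earlier candidate, starting from the zero shift."""
    height, width = len(curr), len(curr[0])
    best_shift = (0, 0)
    best_cost = swad(prev, curr, top, left, 0, 0, size, weights)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidate shifts that would fall outside the frame.
            if top + dy < 0 or top + dy + size > height:
                continue
            if left + dx < 0 or left + dx + size > width:
                continue
            cost = swad(prev, curr, top, left, dy, dx, size, weights)
            if cost < best_cost:
                best_cost, best_shift = cost, (dy, dx)
    return best_shift


def motion_grid(prev, curr, size, search, weights):
    """Compute one motion vector per non-overlapping size x size patch."""
    height, width = len(prev), len(prev[0])
    return [
        [motion_vector(prev, curr, top, left, size, search, weights)
         for left in range(0, width - size + 1, size)]
        for top in range(0, height - size + 1, size)
    ]
```

Frames here are lists of rows of grayscale values. With a uniform weight table the cost reduces to an ordinary sum of absolute differences; a non-uniform table, for example one that emphasizes the patch center, is one plausible reading of "weighted", but that is a guess.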

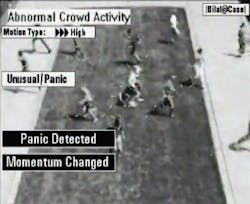

After multiple motion vectors are computed across the image, statistical properties such as average motion length and dominant direction are determined. These values are then used as the input to a weighted decision tree classifier to classify the motion into one of several categories.
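As a rough illustration of how such statistics might be derived, the sketch below computes the average vector length and a dominant direction from a list of motion vectors. The eight-way direction histogram and the function name are assumptions; the article does not describe how the researchers computed either quantity.

```python
import math
from collections import Counter


def motion_statistics(vectors, bins=8):
    """Return (mean_length, dominant_direction_bin) for (dy, dx) vectors.

    Direction bins are centered on multiples of 360/bins degrees, with
    bin 0 pointing along +x. The bin is None if nothing moved.
    """
    vectors = list(vectors)
    lengths = [math.hypot(dx, dy) for dy, dx in vectors]
    mean_length = sum(lengths) / len(lengths) if lengths else 0.0

    votes = Counter()
    bin_width = 2 * math.pi / bins
    for (dy, dx), length in zip(vectors, lengths):
        if length == 0:
            continue  # stationary patches carry no direction
        angle = math.atan2(dy, dx) % (2 * math.pi)
        # Shift by half a bin so each bin is centered on its direction.
        votes[int((angle + bin_width / 2) / bin_width) % bins] += 1

    dominant = votes.most_common(1)[0][0] if votes else None
    return mean_length, dominant
```

For instance, the vectors `(0, 3)`, `(0, 4)`, `(0, 5)` and `(3, 0)` give a mean length of 3.75 and a dominant bin of 0, since three of the four point along +x.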

"For example," says Khan, "if the motion is high and there is a sudden change in momentum in the scene, then it will be classified as a possible panic condition."
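The rule Khan describes can be sketched as a simple threshold cascade. This is not the weighted decision tree used in the system: the thresholds, the "high motion" and "normal" labels, and the use of a change in average motion length as a stand-in for a change in momentum are all hypothetical.

```python
def classify_motion(mean_length, previous_mean_length,
                    high_motion=4.0, sudden_change=2.0):
    """Return a coarse scene label from two successive mean motion lengths.

    Both thresholds are illustrative values, not figures from the article.
    """
    change = abs(mean_length - previous_mean_length)
    if mean_length >= high_motion and change >= sudden_change:
        return "possible panic"
    if mean_length >= high_motion:
        return "high motion"  # hypothetical category
    return "normal"           # hypothetical category
```

A real classifier of this kind would presumably weigh more features, such as the dominant direction, and would need thresholds tuned on recorded footage.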

Because the most computationally intensive part of the algorithm is calculating the motion vectors, this task was offloaded to a Spartan-6 FPGA from Xilinx (San Jose, CA, USA; www.xilinx.com).

Using the Xilinx Embedded Development Kit, a customized video pipeline was built to handle image data I/O under control of the FPGA's on-board MicroBlaze processor.

To speed the calculation of the motion vectors, Khan and Bilal developed an FPGA-based hardware accelerator that was synthesized to RTL using Xilinx's Vivado High-Level Synthesis (HLS) software. As a result, the FPGA is capable of computing four motion vectors in parallel.

Once computed for the entire image, these vectors are then copied as a grid of motion vectors into main memory by the MicroBlaze processor.

With the design operating at 200 MHz, data transfer and computation of all the vectors across the complete image take less than 10 ms. Statistical properties and classification of these vectors are then computed in software.
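To put these figures in perspective, a short calculation converts the 10 ms bound into a clock-cycle budget. Only the two input numbers come from the article; the variable names are illustrative, and the frame-rate figure ignores the software classification stage.

```python
CLOCK_HZ = 200_000_000     # 200 MHz clock, as quoted in the article
FRAME_BUDGET_US = 10_000   # 10 ms upper bound per frame, as quoted

# Clock cycles available for transfer plus motion-vector computation.
cycles_per_frame = CLOCK_HZ * FRAME_BUDGET_US // 1_000_000

# Frame rate this stage alone could sustain if it used the full 10 ms.
frames_per_second = 1_000_000 // FRAME_BUDGET_US

print(cycles_per_frame, frames_per_second)
```

In other words, the transfer and motion-vector stage fits within 2,000,000 clock cycles per frame, and a stage that finishes in under 10 ms could in principle keep pace with 100 frames per second.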

After the classification is complete, the results and motion vectors are displayed on the processed frame. A demonstration of the system can be viewed at http://bit.ly/1GJU5Ii. Further technical information regarding the design of the system can be found at: http://bit.ly/1Mfo5Mk.