March 2015 Snapshots: Robot and UAV fight fires, Vision-guided robot explores Antarctica ice, deep-space imaging

Humanoid robot and UAV fight fires

As part of an Office of Naval Research (ONR; Arlington, VA, USA; www.onr.navy.mil) project called Damage Control Technologies for the 21st Century (DC-21), a humanoid robot called the Shipboard Autonomous Firefighting Robot (SAFFiR) will work in tandem with a quad rotor unmanned aerial vehicle (UAV) to fight fires.

Developed by researchers at Virginia Tech, SAFFiR is a 143-pound robot that has two legs and what the ONR referred to as a "super-human range of motion to maneuver in complex spaces." On February 4, SAFFiR made its debut at the Naval Future Force Science & Technology EXPO. In November of last year, the robot was tested aboard the decommissioned Navy vessel USS Shadwell. In a series of experiments there, the robot used infrared imaging to identify overheated equipment and used a hose to extinguish a small fire.

"We set out to build and demonstrate a humanoid capable of mobility aboard a ship, manipulating doors and fire hoses, and equipped with sensors to see and navigate through smoke," said Dr. Thomas McKenna, ONR program manager for human-robot interaction and cognitive neuroscience. "The long-term goal is to keep sailors from the danger of direct exposure to fire."

SAFFiR uses a number of components for its imaging system, including infrared stereo cameras and a rotating LIDAR, enabling it to see through dense smoke on the ship. The robot is programmed to take measured steps and handle hoses on its own, but for now, takes its instruction from researchers.

In addition to the humanoid robot, a small quad rotor developed by researchers at Carnegie Mellon University's Robotics Institute (Pittsburgh, PA, USA; www.cmu.edu) and spin-off company Sensible Machines (Pittsburgh, PA, USA; www.sensiblemachines.com) was also tested aboard the USS Shadwell. In the demonstration, the UAV operated in confined spaces inside the ship to build situational awareness for guiding firefighting and rescue efforts. As part of the DC-21 concept, information gathered by the UAV would be relayed to the SAFFiR robot, which would work with human firefighters to suppress fires and evacuate casualties.

The UAV uses an RGB depth camera similar to that in the Kinect 3D vision sensor from Microsoft (Redmond, WA, USA; www.microsoft.com) to build a map of fire areas.

"It operates more effectively in the dark," Scherer noted, "because there is less ambient light to interfere with the infrared light the camera projects."

In addition to the depth sensor, the UAV has a forward-looking infrared camera to detect fires and a downward-facing optical flow camera to monitor the motion of the UAV as it navigates.

Vision-guided robot explores Antarctica ice

A team of researchers funded by the National Science Foundation (Arlington, VA, USA; www.nsf.gov) and NASA (Washington, DC, USA; www.nasa.gov) has developed and deployed a vision-guided robotic vehicle to study polar environments under the ice in Antarctica.

The robot was built by Bob Zook, an ROV engineer recruited by the University of Nebraska-Lincoln (UNL; Lincoln, NE, USA; www.unl.edu), and Justin Burnett, a UNL mechanical engineering graduate student and ANDRILL team member, working with Frank Rack, executive director of the ANDRILL (Antarctic Drilling Project; www.andrill.org) Science Management Office at UNL.

Known as "Deep SCINI" (submersible capable of under-ice navigation and imaging), the remotely operated robot was deployed after the team used a hot water drill to bore through the ice. Aboard Deep SCINI are three cameras (upward, downward, and forward-looking), a temperature sensor, LEDs from VideoRay (Pottstown, PA, USA; www.videoray.com), a gripper that can grasp objects, and a syringe sampler used for collecting water samples. A 300ft tether includes a power line that also carries Ethernet for data communications.

The cameras used on the robot are NC353L-369 open source hardware and software IP cameras from Elphel (West Valley City, UT, USA; www.elphel.com). Featuring a 5 MPixel CMOS image sensor, the cameras achieve a maximum frame rate of 5 fps and are housed inside a custom pressure housing, 2.5in in diameter x 7in long, that has been tested to 3000psi.

Images were stored as individual jpeg files rather than video, resulting in more than 700,000 jpegs at the end of the dive. With this method, the team can store metadata within the images as opposed to using time-stamped images.
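As an illustration of how metadata can travel inside a jpeg file, the sketch below writes a JSON record into a standard JPEG comment (COM) segment and reads it back. This is not the team's or Elphel's actual method, which the article does not describe; the function names and the metadata fields are hypothetical, and only the Python standard library is used.

```python
import json
import struct

SOI = b"\xff\xd8"   # JPEG start-of-image marker
COM = b"\xff\xfe"   # JPEG comment marker
SOS = 0xDA          # start-of-scan marker byte; no headers follow it


def embed_comment(jpeg_bytes: bytes, metadata: dict) -> bytes:
    """Return a copy of the JPEG with metadata stored in a COM segment."""
    if not jpeg_bytes.startswith(SOI):
        raise ValueError("not a JPEG stream")
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    if len(payload) > 65533:
        raise ValueError("metadata too large for one COM segment")
    # The 2-byte big-endian length field counts itself plus the payload.
    segment = COM + struct.pack(">H", len(payload) + 2) + payload
    # Simplification: place the segment directly after SOI.
    return jpeg_bytes[:2] + segment + jpeg_bytes[2:]


def read_comment(jpeg_bytes: bytes):
    """Return the first JSON comment found in the header, or None."""
    pos = 2
    while pos + 4 <= len(jpeg_bytes) and jpeg_bytes[pos] == 0xFF:
        marker = jpeg_bytes[pos + 1]
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        if marker == 0xFE:
            body = jpeg_bytes[pos + 4:pos + 2 + length]
            return json.loads(body.decode("utf-8"))
        if marker == SOS:
            break
        pos += 2 + length  # skip marker (2 bytes) plus segment
    return None


# Hypothetical per-frame record; field names are illustrative only.
frame = SOI + b"\xff\xd9"  # smallest possible stand-in for a real image
tagged = embed_comment(frame, {"camera": "forward", "temp_c": -1.9})
print(read_comment(tagged))
```

Because each frame carries its own record, a frame can be copied or analyzed on its own without a separate log file to keep in sync.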

The cameras perform on-board jpeg compression at a rate that enables the vehicle to be moved smoothly while keeping bandwidth low.

Custom viewing software is used to adjust settings such as exposure, color compression, and binning to increase frame rate. It also, according to Burnett, allows the team to take instantaneous full-resolution images with a keystroke.

In addition, the camera has an accessory board that provides on-board storage, configurable USB ports (one of which is used to control a focus motor), and built-in pulse synchronization control for capturing multiple images.

Besides revealing new information on how Antarctica's ice sheets are affected by rising temperatures, the project uncovered a unique ecosystem of fish and invertebrates living in an estuary deep beneath the ice off the coastline of West Antarctica.

Furthermore, the robot provided a first look at what's known as the "grounding zone," where the ice shelf meets the sea floor.

Telescope camera receives government funding

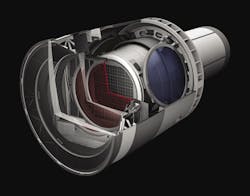

The U.S. Department of Energy (DOE) has granted "Critical Decision 2" funding approval for the plans to construct a large digital camera at the SLAC National Accelerator Laboratory (Menlo Park, CA, USA; www.slac.stanford.edu).

The 3,200 MPixel centerpiece of the Large Synoptic Survey Telescope (LSST) will help researchers study the formation of galaxies, track potentially hazardous asteroids, observe exploding stars, and understand dark matter and dark energy, which make up 95% of the universe but whose nature remains unknown.

"This important decision endorses the camera fabrication budget that we proposed," said LSST Director Steven Kahn. "Together with the construction funding we received from the National Science Foundation in August, it is now clear that LSST will have the support it needs to be completed on schedule."

The LSST camera comprises an array of 189 tiled 4k x 4k CCD image sensors, and the telescope has an 8.4m primary mirror. The camera will be cryogenically cooled to -100°C, will have a readout time of 2s or less, and will operate at wavelengths from 320 to 1060 nm.
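The sensor count and the 3,200 MPixel figure can be cross-checked with a little arithmetic. The sketch below assumes that "4k" means 4096 pixels per side, which the article does not state, and treats the 2s readout time as an upper bound; the function names are illustrative.

```python
SENSORS = 189            # tiled CCDs, from the article
PIXELS_PER_SIDE = 4096   # assumption: "4k" means 4096 pixels
READOUT_SECONDS = 2.0    # article: readout time of 2s or less


def total_pixels(sensors: int = SENSORS, side: int = PIXELS_PER_SIDE) -> int:
    """Pixel count of the full focal plane."""
    return sensors * side * side


def min_readout_rate(pixels: int, seconds: float = READOUT_SECONDS) -> float:
    """Lowest pixel rate (pixels/s) that reads the array in the given time."""
    return pixels / seconds


pixels = total_pixels()
print(f"{pixels / 1e6:,.0f} MPixel")                        # 3,171 MPixel
print(f"{min_readout_rate(pixels) / 1e9:.2f} Gpixel/s")     # 1.59 Gpixel/s
```

Under that assumption the array holds about 3,171 MPixel, in line with the rounded 3,200 MPixel figure, and a 2s readout implies moving roughly 1.6 billion pixels per second off the focal plane.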

The camera will be the size of a small car and weigh more than three tons. Its components will be built by an international collaboration of labs and universities, including DOE's Brookhaven National Laboratory (Upton, NY, USA; www.bnl.gov/world/), Lawrence Livermore National Laboratory (Livermore, CA, USA; www.llnl.gov), and SLAC, where the camera will be assembled and tested.

Science operations for the LSST are scheduled to start in 2022, with the camera capturing images of the entire visible southern sky every few nights from atop Cerro Pachón mountain in Chile. It will produce the widest, deepest and fastest views of the night sky ever observed, according to SLAC.

Over a 10-year period, the observatory will detect tens of billions of objects, marking the first time a telescope will catalog more objects in the universe than there are people on Earth.