Image Processing Software: Using neural networks to caption images

People can summarize a complex scene in a few words without thinking. However, it is more difficult for computers to perform this task. To do so, Alexander Toshev, a Research Scientist and his colleagues, Oriol Vinyals, Samy Bengio and Dumitru Erhan at Google (Mountain View, CA, USA; www.google.com) have developed machine learning software that can automatically produce captions to describe images as they are presented to the user. The software may eventually help visually impaired people understand pictures, provide cues to operators examining faulty parts and automatically tag such parts in machine vision systems.

The software that Toshev and his colleagues used to perform this task is a neural network that consists of two different networks-a convolutional neural network (CNN) and a language generating recurrent neural network (RNN). While the deep convolutional neural network (CNN) is used for image classification tasks, its output layer is used as the input to the RNN to generate a caption describing the image.

For a number of years, CNNs have been used to produce a representation of an input image as a fixed length vector (see "Integrated Recognition, Localization and Detection using Convolutional Networks," by Pierre Sermanet et.al.; www.bit.ly/1KHMGuH).

In the implementation developed at Google, images from Microsoft's image recognition, segmentation, and captioning dataset-MS COCO (http://mscoco.org) were first resized to a 220 x 220 x 3 (RGB) format an input to a CNN consisting of an input layer of 220x220 x3, 30 hidden layers and an output layer of 7x7 nodes. Image features from this output layer is then used as an input vector to the RNN.

"Normally, the output from the CNN's last layer is used to assign a probability that a specific object, for example, might be present in the image. However, if this final data layer is fed to an RNN designed to produce phrases, the complete CNN-RNN system can be trained on images and their captions to maximize the likelihood that the descriptions it produces best match the training descriptions for each image," says Toshev.

To generate descriptions of images, the last layer of the CNN is fed to the RNN that consists of a number of long-short term memory (LSTM) blocks that are used to remember an input value (such as a word) for an arbitrary length of time. Each LSTM block determines when the input is significant enough to remember, when it should continue to remember or forget the value, and when it should output the value. Each LSTM model is then used to predict one word of the sentence according to the data fed from the CNN.

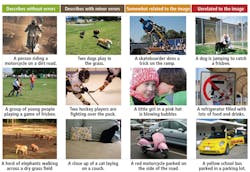

To train the system, Toshev and his colleagues used a number of image datasets including MS COCO where each image had previously been annotated manually. Individual images and descriptions were fed to the CNN and RNN, respectively. After the system was trained, random images were presented to the system and the results of the generated sentences presented for evaluation using an Amazon Turk experiment. "It is interesting to see in the second image of the first column, how the model identified the frisbee, given its size," says Toshev. Further details about how Google's CNN-RNN is used to generate descriptions from images can be found at: www.bit.ly/1PFtnyU.

To aid developers in creating such designs, the company has also introduced a programming language known as TensorFlow (www.tensorflow.org), an open-source software library for machine intelligence using data flow graphs that provides tools to assemble sub-graphs common in neural networks.