Understanding pattern recognition

MARK A. VOGEL

Vision-based pattern-recognition techniques can automate routine activities such as cell classification and counting in microscopy applications, sorting parts on an assembly line, tallying biological products, and scanning aerial imagery for objects of interest. Recent increases in the processing capabilities of low-cost PCs have made automated pattern recognition an attractive alternative to many tedious manual vision tasks. For example, some automated medical applications now deliver highly consistent results with high measurement accuracy.

Applied intelligently, vision-based pattern recognition is expected to yield many systems that can quickly and easily outperform humans in applications that require high precision. The field of pattern recognition covers a range of vision techniques and procedures for template-matching parts, discovering natural groupings in data, developing and training classifiers from collected data, and producing complex hybrid systems that can recognize objects from partial evidence.

For some vision applications, simple rules about extracted features can recognize objects. For others, complex probabilistic structures that are trained from large numbers of samples classified by experts are more appropriate. Careful task analysis at the start of a vision development effort is the key to successful application designs.

Preliminary analysis

The outputs of a preliminary analysis process consist of the requirements of the vision-system hardware, a top-level algorithm design, and, in many cases, a quickly prototyped demonstration. With a broad set of analysis and classification tools available, the system design team can produce a quick result with high confidence of success. Proceeding directly to development without first conducting a thorough analysis, by contrast, generally leads to longer development times and less optimized designs.

Evaluating the proposed hardware and the degree of difficulty of the task often comes down to a basic question: Can a test technician, after a short training period, recognize the objects of interest from the same imagery supplied to the recognition algorithms? If the answer is yes, then past studies indicate with reasonable confidence that an automated recognition process could be developed quickly. If the technician needs a long period of training to perform this task, then the recognition-algorithm development is also likely to take longer.

If a technician cannot reliably perform the task using the imagery produced from the hardware but can reliably perform the task in the real world, then the design team should consider using different hardware or a different image-collection strategy. For example, to perform the vision task, the technician might have to use both eyes to derive needed stereo information or might have to integrate information from multiple angles. This same sort of information might have to be provided to the recognition process.

Another basic task-analysis question needs to be investigated: Is an automated pattern recognizer ruled out if the image-collection options are limited to imagery from which a technician cannot reliably perform the recognition task? The answer depends on whether reliability can be gained through precise measurement of some quantity from the imagery (for example, the exact length or width of an object, its density, or its color). If so, the automated recognizer can easily outperform the technician. The automated recognition system will fail, however, if the key information needed for a definitive recognition is missing, for example because the resolution is too coarse or the monochrome image data lack vital color information.

A vision-based pattern-recognition system that relies on object features usually requires a consistent set of operations. First, the objects of interest must be located. Second, the objects must be separated from the background. Third, the characterizing features must be extracted. Proper system design will produce high-quality features as inputs to the recognition algorithm.
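The three operations can be illustrated with a minimal pure-Python sketch. It assumes bright objects on a dark background (the lighting setup recommended below for the nuts), so a single global threshold locates object pixels, a flood fill separates each connected object from the background, and a few simple size features are then extracted. The function names, the threshold value, and the feature set are hypothetical choices for illustration, not a production segmentation method.

```python
from collections import deque

def segment_objects(image, threshold):
    """Locate bright objects on a dark background and return one list of
    (row, col) pixel coordinates per 4-connected object."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            # Step 1: locate a foreground pixel not yet assigned to an object.
            if image[r][c] > threshold and not seen[r][c]:
                # Step 2: separate the whole object from the background
                # by flood-filling its connected foreground pixels.
                pixels = []
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx]
                                and image[ny][nx] > threshold):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                objects.append(pixels)
    return objects

def extract_features(pixels):
    """Step 3: compute simple size and extent features for one object."""
    ys = [p[0] for p in pixels]
    xs = [p[1] for p in pixels]
    height = max(ys) - min(ys) + 1
    width = max(xs) - min(xs) + 1
    area = len(pixels)
    # "fill" is the fraction of the bounding box covered by the object.
    return {"area": area, "height": height, "width": width,
            "fill": area / (height * width)}

# Usage: a 2x2 square and a 3-pixel vertical bar on a dark field.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0],
    [0, 9, 9, 0, 8, 0],
    [0, 0, 0, 0, 8, 0],
    [0, 0, 0, 0, 8, 0],
]
features = [extract_features(obj) for obj in segment_objects(image, threshold=5)]
```

Real images would normally be handled with an image-processing library, but the structure stays the same: the quality of the features depends directly on how cleanly steps 1 and 2 isolate each object.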

For example, consider the task of identifying different types of mechanical nuts (see Fig. 1). Note that one of the nuts differs in shape from the other two. Also note that the two hexagonal nuts differ in size. Any extraction process that allows shape determination and precise size measurement or matching should provide the means for recognizing these objects. A color camera is not required in this vision task. Note also that the nuts are all easily separated from the background, and no confusing shadows exist. By carefully choosing the lighting devices and using a dark background, the design team can easily develop a reliable object-location and segmentation process.
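A shape-then-size rule for the nuts might look like the following sketch. It assumes each segmented nut already has a pixel area and perimeter, uses circularity (4πA/P²) as the shape measure (about 0.91 for a regular hexagon and about 0.79 for a square), and converts area to millimetres with a calibration constant. The constant `MM_PER_PIXEL`, the circularity band, and the 12-mm size split are all hypothetical values; in practice they would be set from measured samples, particularly because perimeters estimated from digitized outlines are only approximate.

```python
import math

MM_PER_PIXEL = 0.1  # hypothetical calibration constant for this camera setup

def circularity(area_px, perimeter_px):
    """4*pi*A/P**2: 1.0 for a circle, about 0.91 for a regular hexagon."""
    return 4 * math.pi * area_px / perimeter_px ** 2

def classify_nut(area_px, perimeter_px, hex_band=(0.86, 0.95), size_split_mm=12.0):
    """Rule-based sketch: decide shape first, then calibrated size."""
    if not hex_band[0] <= circularity(area_px, perimeter_px) <= hex_band[1]:
        return "other shape"
    # Equivalent-circle diameter in millimetres from the calibrated area.
    area_mm2 = area_px * MM_PER_PIXEL ** 2
    diameter_mm = 2 * math.sqrt(area_mm2 / math.pi)
    return "large hex" if diameter_mm > size_split_mm else "small hex"
```

For an ideal hexagon with 50-pixel sides, the rule reports a small hex nut (about 9 mm equivalent diameter at the assumed calibration); with 100-pixel sides it reports a large one.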

Determining as early as possible whether key recognition information is available is essential to efficient vision-system design. The type of information matters as well: shape, size, texture, and color features can each be extracted and quantified in various ways.

In some recognition problems the choice appears obvious, and the recognition approach is straightforward. For example, consider the design of a vision system that must sort red Rome apples from ripe yellow bananas (see Fig. 2). One approach might be to locate the objects and make color measurements. A simple color-feature rule would be to assign all yellow objects to bananas and all red objects to apples.
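The simple color rule can be written as a hue test on an object's mean color. This sketch uses Python's standard `colorsys` module to convert RGB to hue; the hue bands for "red" and "yellow" are illustrative assumptions rather than measured values, and objects that fall in neither band are reported as unknown.

```python
import colorsys

def classify_by_color(r, g, b):
    """Assign an object from its mean color (0-255 per channel):
    red hues -> apple, yellow hues -> banana, anything else -> unknown.
    The hue bands are assumed for illustration, not measured."""
    h, _s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    hue_deg = h * 360
    if hue_deg < 20 or hue_deg >= 340:   # red wraps around 0 degrees
        return "apple"
    if 40 <= hue_deg <= 70:              # yellow
        return "banana"
    return "unknown"
```

A red Rome apple such as `classify_by_color(200, 30, 40)` lands in the red band and a ripe banana such as `classify_by_color(230, 210, 40)` in the yellow band, but a green object such as `(90, 180, 60)` matches neither rule, which is exactly the weakness that the varied fruit of Fig. 3 exposes.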

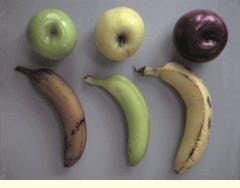

If the color-feature analysis reveals that some of the apples are green or yellow and some bananas are green or brown (see Fig. 3), the initial color features might have to be discarded in favor of a shape feature. Because all the bananas are elongated whereas the apples are round, a simple rule-based classifier could be incorporated that assigns round objects to apples and elongated objects to bananas.
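One common way to quantify elongation, offered here as an assumption rather than the column's prescribed measure, is to take the square root of the ratio of the largest to smallest eigenvalue of the covariance of an object's pixel coordinates (its second central moments). A round apple scores close to 1, and a banana scores well above it. The 1.8 threshold in this sketch is hypothetical.

```python
import math

def elongation(pixels):
    """Major/minor axis ratio from the second central moments of an
    object's (row, col) pixel coordinates: about 1 for round objects."""
    n = len(pixels)
    my = sum(p[0] for p in pixels) / n
    mx = sum(p[1] for p in pixels) / n
    syy = sum((p[0] - my) ** 2 for p in pixels) / n
    sxx = sum((p[1] - mx) ** 2 for p in pixels) / n
    sxy = sum((p[0] - my) * (p[1] - mx) for p in pixels) / n
    # Closed-form eigenvalues of the 2x2 covariance matrix.
    centre = (sxx + syy) / 2
    half_gap = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam_max, lam_min = centre + half_gap, centre - half_gap
    if lam_min <= 0:
        return math.inf  # a one-pixel-wide line is infinitely elongated
    return math.sqrt(lam_max / lam_min)

def classify_by_shape(pixels, threshold=1.8):
    """Round objects -> apple, elongated objects -> banana."""
    return "banana" if elongation(pixels) > threshold else "apple"
```

Because the measure uses moments rather than a bounding box, it does not change when the banana lies at an angle in the image.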

Still another analysis question needs to be resolved: How can the features to keep and the features to discard be selected in an automated way that works consistently across different recognition problems? First, a sample of imagery data that represents real-world variability must be collected. In the case of sorting apples from bananas, such a sample shows that color is not the best feature for the task; an elongation measure is better. Still, another, more reliable or accurate feature might exist. The vision-task analysis must highlight the feature or set of features with the highest potential to discriminate between the recognition classes of interest.

Image-processing routines that segment objects from the background, together with feature-extraction routines that measure object characteristics, generate a database of measurements for all object classes of interest. A straightforward way to quickly locate potentially discriminative features is to compute the mean and standard deviation of each feature in each class. Any feature whose mean values differ between classes is a candidate for use in a classifier. If the separation between the means is large relative to the standard deviations, the feature is likely to be highly useful in a classifier. More sophisticated methods can reveal features with high discriminative potential and provide a rank ordering of these features.
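The mean-and-standard-deviation screen for two classes can be sketched with Python's `statistics` module. The score used here, the distance between class means divided by the sum of the class standard deviations, is one simple way to express "separation relative to spread"; it is an illustrative choice, and the apple and banana measurements are hypothetical numbers, not collected data.

```python
import math
from statistics import mean, stdev

def rank_features(class_a, class_b):
    """Rank features by the gap between the two class means, measured in
    units of the classes' combined standard deviation.
    class_a, class_b: lists of {feature_name: value} measurement records."""
    scores = {}
    for feature in class_a[0]:
        a = [row[feature] for row in class_a]
        b = [row[feature] for row in class_b]
        gap = abs(mean(a) - mean(b))
        spread = stdev(a) + stdev(b)
        if spread > 0:
            scores[feature] = gap / spread
        else:
            # No spread at all: perfectly separating if the means differ.
            scores[feature] = math.inf if gap > 0 else 0.0
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical measurements (hue in degrees, elongation as a ratio).
apples = [{"hue": 10, "elongation": 1.1}, {"hue": 60, "elongation": 1.2},
          {"hue": 100, "elongation": 1.0}]
bananas = [{"hue": 55, "elongation": 3.0}, {"hue": 100, "elongation": 3.5},
           {"hue": 30, "elongation": 2.8}]
ranking = rank_features(apples, bananas)
```

With these illustrative values, elongation scores above 4 while hue scores well below 0.1, matching the conclusion that shape, not color, separates the mixed fruit.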

The Recognition Toolkit from Recognition Science Inc. (Lexington, MA) contains several different types of feature evaluation and ranking tools. These tools measure the discriminant information available from individual features and provide mechanisms for investigating how features interact in combination. In particular, developers can use the discriminant information operator to rank-order the features and then rapidly build classifiers using subsets of the most highly ranked features.
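The Recognition Toolkit's own operators are not shown here. As a generic stand-in for the "rank, then build on the top subset" workflow, the following sketch trains a nearest-class-mean classifier on the k highest-ranked features of an already ranked list. The function name, the choice of nearest-mean classification, the per-feature scaling, and the sample data are all assumptions made for illustration.

```python
from statistics import mean, pstdev

def train_nearest_mean(samples, ranked_features, k):
    """Nearest-class-mean classifier restricted to the k top-ranked features.
    samples: {class_name: [{feature_name: value}, ...]}
    ranked_features: feature names, most discriminative first."""
    chosen = ranked_features[:k]
    all_rows = [row for rows in samples.values() for row in rows]
    # Divide by each feature's overall spread so features measured in
    # different units (degrees, ratios, millimetres) are comparable.
    scale = {f: pstdev([row[f] for row in all_rows]) or 1.0 for f in chosen}
    centroids = {name: {f: mean([row[f] for row in rows]) for f in chosen}
                 for name, rows in samples.items()}

    def classify(measurements):
        def distance(name):
            c = centroids[name]
            return sum(((measurements[f] - c[f]) / scale[f]) ** 2 for f in chosen)
        # Assign the object to the class with the closest centroid.
        return min(centroids, key=distance)

    return classify

# Hypothetical training measurements.
samples = {
    "apple": [{"hue": 10, "elongation": 1.1}, {"hue": 60, "elongation": 1.2},
              {"hue": 100, "elongation": 1.0}],
    "banana": [{"hue": 55, "elongation": 3.0}, {"hue": 100, "elongation": 3.5},
               {"hue": 30, "elongation": 2.8}],
}
classify = train_nearest_mean(samples, ["elongation", "hue"], k=1)
```

Varying `k` and re-testing on held-out samples is a quick way to see how many of the top-ranked features are actually worth keeping.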

Next month's column will show how to select classifiers.

FIGURE 1. The vision process for recognizing three different mechanical nuts is easily achieved by extracting both shape and size information.

Precise size and distance measurements through segmentation or edge location are directly related to actual size in a calibrated vision system. Measurements from the segmented regions can be used to readily classify the nuts into three different categories.

FIGURE 2. For the design of a vision system that must sort red Rome apples from ripe yellow bananas, one approach might use an object recognition process to separate the round apples from the elongated bananas and assign all yellow objects to bananas and all red objects to apples.

FIGURE 3. Consider the vision design task of sorting green Granny Smith apples, yellow Golden Delicious apples, red Rome apples, and green and yellow bananas at varying stages of ripeness and color. Collecting data that contains real-world variables with which an object recognition system must cope is a major factor in achieving a proficient vision system design. Clearly, color is not a reliable characteristic for separating these apples from the bananas. However, color could play an important role if the system recognition requirements included subtypes for the apples or freshness for the bananas.

MARK A. VOGEL is president of Recognition Science Inc., Lexington, MA; e-mail: mvogel@RecognitionScience.com.