Software enriches 3-D medical imaging

By R. Winn Hardin, Contributing Editor

Computed tomography (CT), magnetic resonance imaging (MRI), microscopy, and other advanced imaging protocols used in medical-imaging acquisition systems have improved in speed and accuracy by at least tenfold in recent years. At the same time, imaging software for displaying large collections of medical images has become both smarter, through the addition of automated protocols, and more efficient. Moreover, imaging workstations are steadily moving from large, expensive machines to smaller, affordable dual-Pentium, Windows NT/2000-based machines (see Vision Systems Design, Feb. 2000, p. 17).

FIGURE 1. Researchers at Massachusetts General Hospital's Surgical Simulation Group are using 3D-Doctor software to collect 3-D data from computed-tomography axial image files for later prototyping of an exact replica of a human torso. The program will enable the US Army to train personnel in battlefield surgical operations. This early prototype consists of a torso mounted on a litter and equipped with a tracking-device transmitter, motion sensor connected to a decompression needle, and display of the virtual environment on a flat-panel display. (Photo courtesy of Able Software)

Recently, the cost of medical three-dimensional (3-D) modeling applications has dropped further, thanks to the continuing evolution of the microprocessor, lower-cost graphics boards, and open graphics standards. The results are improved 3-D algorithms and wider commercial graphics support on desktop platforms. For example, 3D-Doctor software from Able Software Corp. (Lexington, MA) builds on the benefits of the OpenGL standard and uses vector-based boundary determination rather than raster-based systems to markedly improve computational efficiency while manipulating 3-D images.

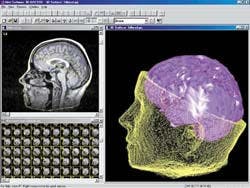

FIGURE 2. Windows-based 3D-Doctor does not require additional graphic accelerators to operate in nearly real time for 3-D visualization. Computed-tomographic images, magnetic-resonance images, or micrographs can either be scanned into a desktop computer or transferred digitally. The software does not get bogged down in interface concerns with image-acquisition devices other than standard and high-resolution scanners. Instead, it concentrates on accepting, converting, and exporting a range of image formats such as JPEG, DICOM, STL, and CAD files for 3-D prototyping.

Says Y. Ted Wu, president and chief executive officer of Able Software Corp., "A single CT or MRI slice of 512 x 512 x 16-bit resolution is about 0.5 Mbytes, and a typical CT/MRI data set can contain around 200 slices. Thanks to the optimized surface-rendering algorithm in 3D-Doctor, 3-D surface models can be created in a few seconds to a few minutes on a standard Pentium III PC with 128 Mbytes of memory, depending on the complexity of the object under study, without using additional hardware accelerators."
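The figures Wu quotes can be checked with a few lines of arithmetic. The sketch below assumes uncompressed storage and 1 Mbyte = 2^20 bytes; the function names are illustrative and are not part of 3D-Doctor.

```python
def slice_bytes(width, height, bits_per_pixel):
    """Uncompressed size of one image slice, in bytes."""
    return width * height * bits_per_pixel // 8


def dataset_mbytes(width, height, bits_per_pixel, n_slices):
    """Uncompressed size of a stack of slices, in Mbytes (1 Mbyte = 2**20 bytes)."""
    return slice_bytes(width, height, bits_per_pixel) * n_slices / 2**20


per_slice = slice_bytes(512, 512, 16) / 2**20     # Mbytes in one 512 x 512 x 16-bit slice
study = dataset_mbytes(512, 512, 16, 200)         # Mbytes in a 200-slice data set
print(per_slice, study)
```

Under these assumptions a 200-slice study comes to roughly 100 Mbytes of raw pixel data, which is close to the 128 Mbytes of memory Wu mentions.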

3-D PC modeling

For some researchers, making a digital medical representation is not enough. Dr. Stephane Cotin at the Massachusetts General Hospital (MGH) Surgical Simulation Group (Boston, MA) is using 3D-Doctor on a custom PC system with two Intel Corp. (Santa Clara, CA) Pentium III processors, 512 Mbytes of RAM, and the Windows 2000 operating system. To display the rendered images, Cotin has installed an Nvidia (Santa Clara, CA) GeForce256 video card with 128 Mbytes of on-board video memory. The goal is to make replicas of human internal organs.

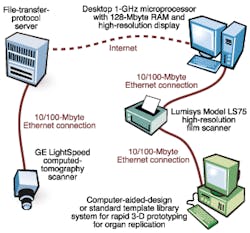

Under a US Army grant, Cotin's group is combining 3-D surgical visualization with a life-size mannequin of a human torso to create a "flight simulator for surgeons." The system will be used to train US Army medical personnel in the best ways to handle battle wounds, such as the insertion of tubing into the lungs to alleviate air pressure. Cotin says, "Radiologists help us extract the correct shape and contours from a CT scan. Then we can use 3D-Doctor to model 3-D structures." His program requires both MRI and CT scans of human subjects. Typically, the scans are done at a separate location across the MGH campus on a GE Lightspeed CT Scanner from General Electric Medical Systems (Waukesha, WI).

Cotin downloads the resulting DICOM (Digital Imaging and Communications in Medicine) files across a local Intranet using an EtherLink 10/100 PCI Network Interface Card from 3-COM (Santa Clara, CA) attached to his PC. From there, 3D-Doctor can access the DICOM files, create 3-D models from the axial images, and convert the 3-D models into any of several file types for later use. The software's computer-aided-design export feature helps create exact replicas of human organs for surgical teaching instructions (see Fig. 1).

Surface rendering

Rather than using conventional raster-based surface-modeling algorithms, 3D-Doctor uses a variation on tiling and adaptive Delaunay triangulation algorithms to create vector-based boundary lines that outline the 2-D image or user-selected regions of interest within the image. To create 3-D representations, the resulting point set is first filtered. The software then applies its variation of the Delaunay triangulation method to generate a set of polygons that allows surface rendering to take place (see Fig. 2). Because the images are located at preset spatial intervals, as determined during the imaging protocol, the system can create a full surface of the 3-D object.
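The idea of joining boundary lines on neighboring slices into a polygon surface can be illustrated with a short sketch. This is not 3D-Doctor's algorithm, which is described here only as a variation on tiling and adaptive Delaunay triangulation; it is a simpler greedy shortest-edge tiling, and the function names and the square test contours are assumptions made for illustration.

```python
import math


def tile_contours(lower, upper):
    """Connect two closed contours with a band of triangles.

    lower, upper: lists of (x, y, z) points, ordered the same way around
    the boundary, with lower[0] and upper[0] roughly aligned.
    Returns a list of triangles, each a tuple of three points.
    """
    m, n = len(lower), len(upper)
    i = j = 0
    triangles = []
    while i < m or j < n:
        a, b = lower[i % m], upper[j % n]
        next_a, next_b = lower[(i + 1) % m], upper[(j + 1) % n]
        # Greedy rule: step along whichever contour adds the shorter new edge,
        # unless one contour has already been walked all the way around.
        advance_lower = j >= n or (
            i < m and math.dist(next_a, b) <= math.dist(a, next_b)
        )
        if advance_lower:
            triangles.append((a, next_a, b))
            i += 1
        else:
            triangles.append((a, next_b, b))
            j += 1
    return triangles


def square(z, half=1.0):
    """Hypothetical test contour: a square outline on the slice at height z."""
    return [(-half, -half, z), (half, -half, z), (half, half, z), (-half, half, z)]


band = tile_contours(square(0.0), square(1.0))
print(len(band))  # one triangle per contour point: 4 + 4 = 8
```

Repeating this step for each pair of adjacent slices, spaced at the interval set by the imaging protocol, yields a closed band of triangles per pair and, stacked together, a full surface.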

To correct for patient movement during image acquisition, 3D-Doctor provides an auto-alignment function. In operation, the user defines a rectangular training area, and all pixels within the area are used to create a feature template. A correlation function then uses the template to match image slices. The template moves around in the target image until it finds the best correlation, and the target image slice is aligned accordingly.
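A minimal sketch of this kind of template matching follows. It assumes normalized cross-correlation as the correlation function and a small exhaustive search window; the article does not say which correlation measure or search strategy 3D-Doctor uses, and all names here are hypothetical.

```python
import math


def crop(image, top, left, height, width):
    """Cut a rectangular patch out of an image stored as a list of rows."""
    return [row[left:left + width] for row in image[top:top + height]]


def ncc(patch, template):
    """Normalized cross-correlation of two equal-size patches, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    return num / den if den else 0.0   # flat patches carry no information


def find_offset(reference, target, top, left, height, width, search=3):
    """Return the (dy, dx) shift at which the training area best matches target."""
    template = crop(reference, top, left, height, width)
    best_score, best_shift = -2.0, (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            t, l = top + dy, left + dx
            if t < 0 or l < 0 or t + height > len(target) or l + width > len(target[0]):
                continue               # window would fall outside the image
            score = ncc(crop(target, t, l, height, width), template)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


reference = [[0] * 12 for _ in range(12)]
reference[5][5], reference[5][6], reference[6][5] = 9, 5, 3   # small asymmetric feature

# Simulated patient movement: the same feature, 2 rows down and 1 column right.
target = [[0] * 12 for _ in range(12)]
target[7][6], target[7][7], target[8][6] = 9, 5, 3

shift = find_offset(reference, target, top=4, left=4, height=4, width=4)
print(shift)  # (2, 1)
```

Shifting the target slice by the negative of the returned offset brings it back into register with the reference slice.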

Selective rendering

Whereas vector space determines the surface map and creates the polygons for easy display by means of the OpenGL protocol, volumetric rendering still requires ray-tracing algorithms to give full 3-D perspective in space. Medical-imaging systems, such as that used by Cotin at the Surgical Simulation Group, use both surface- and volume-rendering methods to show 3-D objects. However, surface rendering and volume rendering display 3-D objects in different ways.

Surface rendering is mainly targeted at creating surface properties of an object and does not use voxels within the object. This makes it easier to handle the display of multiple objects and their spatial relationships because only surfaces are involved, each treated as either transparent or opaque. For Cotin's work, however, surgeons must be trained using all the data, including data representing tissues inside the heart or lungs. For this type of volume rendering, 3D-Doctor software returns to the original data set and combines pixels on subsequent 2-D images into 3-D voxels.

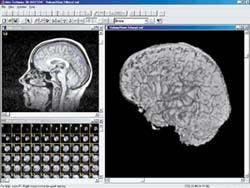

FIGURE 3. Using 3D-Doctor, physicians and researchers can view the surface of a 3-D object (left) or view the object in full 3-D volume-rendering mode (right). The latter technique reveals the 3-D voxels underneath the object's exterior. Triangular polygons are used for surface rendering. The first step is to identify the boundary lines that define the object in the 2-D image. The processing system depends on a user-defined template to automatically align subsequent images (from a CT system, for instance) and then connects the common boundaries from subsequent axial images to create 3-D surface rendering. According to system designers, working with vectors rather than raster-scanned pixels markedly decreases processing time and provides the accuracy necessary for presurgical planning.

To reduce the amount of 3-D data, or voxels, that must be created to form a volumetric rendering, 3D-Doctor allows the user to draw a simple cubic box around the area of interest. This step often reduces the number of voxels that have to be processed to 20% or less of the total volume, so the software achieves near-real-time rendering on a standard PC without the need for additional hardware. A key benefit provided by volume rendering is that voxels in 3-D perspective look similar to those in 2-D image slices or film.
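The saving from a bounding box is easy to see in a toy example. The sketch below assumes the volume is held as nested lists indexed [slice][row][column] and uses a 10 x 10 x 10 volume with a box chosen to cover 20% of it; the sizes and function names are illustrative only.

```python
def crop_volume(volume, z_range, y_range, x_range):
    """Keep only the voxels inside a user-drawn box (ranges are half-open)."""
    (z0, z1), (y0, y1), (x0, x1) = z_range, y_range, x_range
    return [[row[x0:x1] for row in slice_[y0:y1]] for slice_ in volume[z0:z1]]


def voxel_count(volume):
    """Total number of voxels in a nested-list volume."""
    return sum(len(row) for slice_ in volume for row in slice_)


# Toy volume: 10 slices of 10 x 10 pixels, every voxel set to zero.
volume = [[[0] * 10 for _ in range(10)] for _ in range(10)]

# Box around the area of interest: 8 slices deep, 5 rows high, 5 columns wide.
roi = crop_volume(volume, (1, 9), (2, 7), (3, 8))

fraction = voxel_count(roi) / voxel_count(volume)
print(voxel_count(roi), fraction)  # 200 voxels, 0.2 of the full volume
```

Only the voxels inside the box are handed to the renderer, so the rendering work falls roughly in proportion to the fraction kept.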

The volume rendering in 3D-Doctor uses a ray-casting-based algorithm. Ray-casting is similar to ray-tracing. However, instead of tracing a light ray from the eye to the voxels, it casts a light ray from behind the object through the voxels to the eye to create a 3-D perspective of the image. In volume rendering, all voxels along each light ray contribute a portion to the final image, whereas in surface rendering only the point nearest the eye is displayed.
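The difference between the two display modes can be sketched for a single ray. The example assumes standard back-to-front "over" compositing and a made-up opacity rule; the article does not describe 3D-Doctor's actual compositing or transfer function, so every name and value here is an illustration.

```python
def composite_ray(samples, opacity):
    """Volume-style result for one ray, accumulated back to front.

    samples: voxel intensities ordered from the far side of the object
             toward the eye.
    opacity: function mapping an intensity to an alpha value in [0, 1].
    """
    color = 0.0
    for value in samples:
        alpha = opacity(value)
        # Each voxel partly covers what lies behind it and adds its own share.
        color = alpha * value + (1.0 - alpha) * color
    return color


def first_hit(samples, threshold):
    """Surface-style result: only the qualifying sample nearest the eye counts."""
    for value in reversed(samples):      # walk from the eye into the object
        if value >= threshold:
            return value
    return 0.0


ray = [0.2, 0.8, 0.0, 0.5]               # far side ... eye


def half_opaque(value):
    """Hypothetical opacity rule: empty voxels are clear, others 50% opaque."""
    return 0.5 if value > 0 else 0.0


volume_pixel = composite_ray(ray, half_opaque)
surface_pixel = first_hit(ray, threshold=0.4)
print(volume_pixel, surface_pixel)
```

In the volume-style result every nonempty voxel on the ray leaves a trace in the pixel, while the surface-style result reports only the outermost sample that passes the threshold.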

Flexibility to switch back and forth quickly between volume and surface renderings was crucial to Cotin's project. "We chose this software so that we could have an easy way to get quick 2-D images and 3-D models. We started to design our own tools, but we were limited to how many measurements we could make and how we could segment the anatomy images. This program offers both basic- and expert-level tools," Cotin says. Using 3D-Doctor, physicians and researchers can view the surface of a 3-D object or, in full 3-D volume rendering mode, can reveal the 3-D voxels underneath the object's exterior (see Fig. 3).

Radiologists can use the program to help get the right shape and contours of human organs. Then, computer scientists can make 3-D structures and convert the 3-D information either into image files for the computer-display side of the simulator or into files for export to rapid-prototyping programs, which make tangible models of the organs for placement in the mannequin. Instead of limiting movements through the anatomy to a computer, plans call for the development of a system that uses both computers and mannequins to allow combat medical personnel to get full feedback from the anatomy data.

Company Information

Able Software Corp.

Lexington, MA 02420

Web: www.ablesw.com

GE Medical Systems

Waukesha, WI 53188

Web: www.gemedicalsystems.com

Nvidia

Santa Clara, CA 95050

Web: www.nvidia.com

3-COM

Santa Clara, CA 95052

Web: www.3com.com