How to Accelerate Image Processing Development and Validation with Camera Simulation

Developers of image processing algorithms often face obstacles when testing and validating their systems: they need to acquire image data from a camera via a frame grabber and then process that data, and every one of these steps must be validated against multiple use-case scenarios to ensure the system performs as required. However, testing under real-life conditions can be time-consuming, costly, and challenging, because identical imaging conditions are difficult to reproduce. Camera simulation tools speed up the validation process and make it more robust by providing standardized, comparable image data.

Time-Consuming Test Setups

Acquiring validation images under real-life conditions is time-consuming. Every use-case scenario must be set up individually and repeated after every bug fix. Sometimes the equipment even has to be moved to a remote location, such as a special lab or an outdoor site. This slows down the validation process considerably and adds cost.

Beyond the effort involved, system developers must install the cameras in the target system or at the target location to capture real images, which drives up the cost of validation testing further. Here are some examples:

- In a large automated optical inspection (AOI) system, where the vision system is integrated into a bigger automated machine, integrators may spend $50,000 or more to test the entire system under real-world conditions.

- Stadium installations are complex and costly. Engineers need to wait for real activity and real conditions, such as the actual lighting, to perform realistic testing.

- Aerial mapping systems require many flight hours to be tested adequately.

Non-Reproducible Test Data

Every frame captured by a camera is unique because of camera noise, movement, or changes in scene conditions such as lighting. As a result, a specific experiment can never be reproduced 100% identically. If the image processing algorithm does not perform as expected, it may therefore be difficult to determine whether this is due to a fault in the algorithm or to some unpredictable peculiarity of that particular image.

Even if the issue clearly lies in the algorithm, how can a fix be validated if the next test does not reproduce exactly the same input image data? These variations make debugging corner cases and other situations that occur only under rare circumstances extremely difficult and time-consuming, if not practically impossible.


Camera Simulation Shortens Time to Deployment and Cuts Costs

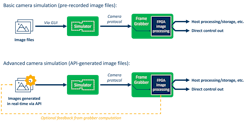

Camera simulation tools generate image data without a camera. They can either play back real-life images from previous experiments or feed virtual images created by the developer for testing. The simulation software may interface with CoaXPress or Camera Link frame grabbers, or with any other frame grabber the user has.

Some frame grabbers perform image pre-processing locally on their own FPGA. In this article, we will focus on the debugging, testing, and validation of systems with FPGA processing on the frame grabber, yet the principles also apply to systems without any FPGA processing.

Camera simulation is a simple and affordable alternative to investing in a full system or on-site testing: All you need is a host computer, frame grabber(s) and the camera simulation tool.

The camera simulation tool feeds image data into the frame grabber's FPGA through the camera protocol, as if it were streamed by a real camera. Because this image data does not come from a physical image sensor, however, it is controllable and reproducible. Engineers can use this data stream to test and debug their FPGA image processing, or even their downstream host processing, knowing exactly what the image data contains and how their algorithm is supposed to behave with it.
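
Because the simulated stream is known pixel for pixel, a test can state the expected result before it runs. In this minimal Python sketch, `make_frame` builds a synthetic 8-bit grayscale frame with one bright square at a known position, and `find_bright_spot` is a hypothetical stand-in for the FPGA or host algorithm under test (a threshold followed by a centroid). Both names are illustrative assumptions, not part of any simulation tool.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # 8-bit grayscale pixels, row-major

def make_frame(width: int, height: int, square: Tuple[int, int, int],
               background: int = 10, level: int = 250) -> Frame:
    """Synthetic frame with one bright square; square = (x0, y0, size) in pixels."""
    x0, y0, size = square
    frame = [[background] * width for _ in range(height)]
    for y in range(y0, y0 + size):
        for x in range(x0, x0 + size):
            frame[y][x] = level
    return frame

def find_bright_spot(frame: Frame, threshold: int = 128) -> Optional[Tuple[float, float]]:
    """Hypothetical algorithm under test: centroid of all pixels above threshold."""
    sx = sy = n = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > threshold:
                sx += x
                sy += y
                n += 1
    if n == 0:
        return None  # nothing detected
    return (sx / n, sy / n)

# Ground truth is known by construction: a 4x4 square whose top-left
# pixel is (20, 8) has its centroid at (21.5, 9.5).
frame = make_frame(64, 32, square=(20, 8, 4))
assert find_bright_spot(frame) == (21.5, 9.5)
```

With the real processing in place of `find_bright_spot`, the same pattern becomes a regression test that can be rerun unchanged after every bug fix.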


Tests can run at full speed, in slow motion, or even frame by frame, so that engineers can examine the results in detail. In addition to real images and video footage, system developers may generate dedicated images to better verify algorithm corner cases or to debug unexpected behavior.
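
Playback speed can be modeled as a scaling of the interval between frames. The Python sketch below is illustrative only and does not reflect any particular simulator's API: the hypothetical `playback_times` function computes when each frame would be sent, given the recorded frame rate and a speed factor (1.0 for full speed, 0.25 for four times slower). Frame-by-frame mode simply drops the timing and waits for the engineer before sending the next frame.

```python
from typing import List

def playback_times(num_frames: int, fps: float, speed: float = 1.0) -> List[float]:
    """Send time (seconds from start) of each frame in a replayed sequence.

    speed=1.0 replays at the recorded frame rate; speed < 1.0 is slow motion.
    """
    if fps <= 0 or speed <= 0:
        raise ValueError("fps and speed must be positive")
    interval = 1.0 / (fps * speed)  # slow motion stretches the gap between frames
    return [i * interval for i in range(num_frames)]

# A 100 fps recording replayed at quarter speed: one frame every 40 ms.
quarter_speed = playback_times(5, fps=100.0, speed=0.25)
```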

Camera simulation can be used in two ways:

- The basic mode feeds pre-recorded image files into the system. These may be actual images captured during a previous test under real-life conditions, or simulated or edited images created by the system developer.

- The advanced mode lets engineers generate images in real time via an API. This automates testing: new variations of the test images can be generated depending on each test result.
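
The closed loop of the advanced mode can be sketched as a search: generate a test image, run the algorithm, and choose the next variation from the result. In this minimal Python sketch, `generate_frame` and `algorithm_passes` are hypothetical stand-ins for a simulator's image-generation API and the system under test. A bisection on the noise amplitude narrows down the level at which the algorithm starts to fail, assuming failure is monotonic in the amount of noise.

```python
import random
from typing import Callable, List

Frame = List[List[int]]
SIZE = 16  # hypothetical test-image size in pixels

def in_square(x: int, y: int, size: int = SIZE) -> bool:
    """Ground truth: the bright target occupies the central half of the frame."""
    return size // 4 <= x < 3 * size // 4 and size // 4 <= y < 3 * size // 4

def generate_frame(noise: float, seed: int = 0, size: int = SIZE) -> Frame:
    """Hypothetical generator: dark frame, bright central square, uniform noise.

    The seed fixes the per-pixel noise pattern; `noise` only scales it.
    """
    rng = random.Random(seed)
    frame = []
    for y in range(size):
        row = []
        for x in range(size):
            base = 250 if in_square(x, y, size) else 10
            value = base + rng.uniform(-1.0, 1.0) * noise
            row.append(min(255, max(0, round(value))))
        frame.append(row)
    return frame

def algorithm_passes(frame: Frame, threshold: int = 128) -> bool:
    """Hypothetical check: thresholding must recover the square exactly."""
    size = len(frame)
    return all(
        (value > threshold) == in_square(x, y, size)
        for y, row in enumerate(frame)
        for x, value in enumerate(row)
    )

def find_failure_level(passes: Callable[[Frame], bool], lo: float = 0.0,
                       hi: float = 255.0, tol: float = 0.5) -> float:
    """Bisect on noise amplitude and return a level at which the algorithm fails.

    Assumes the algorithm passes at `lo`, fails at `hi`, and that failure is
    monotonic in the noise amplitude.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if passes(generate_frame(mid)):
            lo = mid  # still passing: the next variation uses more noise
        else:
            hi = mid  # failed: the next variation uses less noise
    return hi
```

Each iteration picks the next test image from the previous result, so the failure boundary is found in a handful of runs instead of an exhaustive sweep. The fixed seed keeps the per-pixel noise pattern identical across levels, which is what makes failure monotonic for this particular check; for a real algorithm, monotonicity is an assumption worth verifying.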

Use Case #1: Machine Vision System Validation

When validating a machine vision system, for example for quality inspection, engineers can use camera simulation to reliably debug the image processing algorithm with sample images of the object and its defects. In that case, the images have been captured under real-life conditions, but unlike with a real camera, each frame can be repeated with 100% accuracy. If a processing error is detected in a specific frame, that exact frame can be replayed as often as needed until the issue is solved. Engineers may even reuse the camera simulation tool with the same set of images later, to test their system in the field and compare its performance to the original design using exactly the same data.
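
Because replayed frames are identical on every run, one run can be fingerprinted and compared against a later one. A minimal Python sketch, assuming each frame is available as raw bytes and that `process` is a hypothetical stand-in for the processing under test: each output is hashed with SHA-256, and `first_divergence` locates the first frame whose result changed, which is the frame to replay while debugging. The `invert` function is only a placeholder algorithm for illustration.

```python
import hashlib
from typing import Callable, List, Optional, Sequence

def output_digests(frames: Sequence[bytes], process: Callable[[bytes], bytes]) -> List[str]:
    """Run the processing on every replayed frame and fingerprint each result."""
    return [hashlib.sha256(process(frame)).hexdigest() for frame in frames]

def first_divergence(baseline: Sequence[str], current: Sequence[str]) -> Optional[int]:
    """Index of the first frame whose output differs from the baseline, or None if all match."""
    for index, (expected, actual) in enumerate(zip(baseline, current)):
        if expected != actual:
            return index
    if len(baseline) != len(current):
        return min(len(baseline), len(current))  # one run produced fewer outputs
    return None

def invert(frame: bytes) -> bytes:
    """Placeholder for the processing under test: invert 8-bit pixels."""
    return bytes(255 - b for b in frame)
```

Storing the baseline digests from the original design makes a later field test directly comparable: any index reported by `first_divergence` points to the exact frame to replay.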

Use Case #2: Simulate Synchronized Multi-Camera Acquisition

In some applications, such as 3D reconstruction, engineers need to acquire multiple images simultaneously. Multiple simulated cameras can be synchronized just as if they were actual cameras, either via an external trigger or via the protocol's trigger sent by the frame grabbers. For example, one development team used this approach to test a 3D sports analytics broadcasting system intended for installation in stadiums: ~40 camera simulation units replayed a whole game as the synchronized footage of ~40 cameras placed at different locations around the stadium.
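
In a real setup the trigger is distributed in hardware, over a trigger line or the camera protocol; the minimal Python sketch below only models the bookkeeping that keeps simulated cameras in lock-step. Each hypothetical `SimulatedCamera` replays its own recording and releases exactly one frame per trigger, tagged with the trigger ID. Assuming the recordings were captured synchronously, frames with the same ID then belong to the same instant of the scene.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

@dataclass
class SimulatedCamera:
    """Hypothetical simulator channel that replays one camera's recording."""
    name: str
    frames: Sequence[bytes]
    position: int = 0  # index of the next recorded frame to release

    def on_trigger(self, trigger_id: int) -> Tuple[str, int, bytes]:
        # One trigger releases exactly one frame, tagged with the trigger ID.
        frame = self.frames[self.position]
        self.position += 1
        return (self.name, trigger_id, frame)

def fire_trigger(cameras: List[SimulatedCamera], trigger_id: int) -> List[Tuple[str, int, bytes]]:
    """Deliver one trigger to every camera, like a shared external trigger line."""
    return [camera.on_trigger(trigger_id) for camera in cameras]
```

Replaying a game from many recordings then becomes a loop over trigger IDs, with each burst handed to the reconstruction stage as one synchronized set.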

Use Case #3: Simulate Changing Environmental Conditions

Outdoor imaging is particularly challenging because the lighting conditions constantly change depending on the time of day, the season, or the weather. Mounting a camera on a mobile platform such as a drone adds even more variability, for example in the camera's viewing angle or its position relative to the sun.

When developing an image processing algorithm for a drone, these variations must be addressed, for example with an appropriate HDR (high dynamic range) IP. Testing under real-life conditions means performing actual flights in varying conditions (sunny, cloudy, high noon, or dusk) and over varying environments (dark surfaces, water, or buildings) to validate scenarios such as low light or strong reflections. Such tests must be repeated after each update, which is very time-consuming and costly. Moreover, the weather during the tests is always unpredictable.

With camera simulation, it is possible to feed images of such scenes exactly as needed. Engineers can also tune the speed of the stream to identify image processing errors more easily than with a live stream.
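
When recordings do not cover every condition, rough lighting variants can be derived from one scene. The Python sketch below makes a strong simplifying assumption, namely that the 8-bit pixel values are linear in scene brightness (no gamma, no prior clipping), and the illumination factors in `SCENARIOS` are hypothetical. It scales a frame as a fixed exposure would behave under different light and reports how much of it clips at 255, a quick indicator of the highlight detail an HDR stage would need to handle.

```python
from typing import Dict, List

Frame = List[List[int]]

# Hypothetical relative illumination factors; real values depend on the scene.
SCENARIOS: Dict[str, float] = {
    "dusk": 0.15,
    "cloudy": 0.5,
    "sunny": 1.0,
    "high_noon_glare": 3.0,
}

def relight(frame: Frame, factor: float) -> Frame:
    """Scale intensities and clip to 8 bits, like a fixed exposure under different light."""
    return [[min(255, max(0, round(v * factor))) for v in row] for row in frame]

def saturated_fraction(frame: Frame) -> float:
    """Share of pixels clipped at 255, i.e. highlight detail that was lost."""
    total = sum(len(row) for row in frame)
    return sum(v == 255 for row in frame for v in row) / total
```

Comparing, say, the `dusk` and `high_noon_glare` variants of the same frame exposes low-light and clipping behavior side by side, with the scene content held constant.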


Use Case #4: Simulate Corner Cases for Algorithm Development

Corner cases can be difficult to reproduce in real life, so why not simply simulate them? Create a test image file (for example, a BMP) that corresponds to the corner case you need to test, and run it with a camera simulation tool. The image will be fed into your system as if it were streamed by a real camera. You can then use debugging tools such as SignalTap from Intel (Santa Clara, CA, USA) or ChipScope from AMD (Santa Clara, CA, USA) to debug processing issues with problematic images.
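
BMP is a convenient format for such test images because it stores uncompressed pixels behind a short header. The standard-library-only Python sketch below encodes an 8-bit grayscale BMP (BITMAPINFOHEADER with a 256-entry gray palette). Whether a particular camera simulation tool accepts this exact variant, or expects 24-bit or another pixel format, is an assumption to check against its documentation. The hypothetical `border_pixel_case` builds one example corner case: a single saturated pixel in the top-right corner, which exercises how a filter kernel handles image borders.

```python
import struct
from typing import List

def encode_gray_bmp(pixels: List[List[int]]) -> bytes:
    """Encode rows of 8-bit gray values (top row first) as an uncompressed BMP."""
    height, width = len(pixels), len(pixels[0])
    stride = (width + 3) & ~3                 # rows are padded to a multiple of 4 bytes
    offset = 14 + 40 + 256 * 4                # file header + info header + palette
    image_size = stride * height
    file_header = struct.pack("<2sIHHI", b"BM", offset + image_size, 0, 0, offset)
    info_header = struct.pack(
        "<IiiHHIIiiII",
        40, width, height,                    # header size; positive height = bottom-up rows
        1, 8, 0,                              # planes, bits per pixel, BI_RGB (no compression)
        image_size, 2835, 2835,               # pixel data size, ~72 DPI in pixels per meter
        256, 0,                               # palette entries used, important colors (0 = all)
    )
    palette = b"".join(bytes((g, g, g, 0)) for g in range(256))  # B, G, R, reserved
    padding = b"\x00" * (stride - width)
    rows = b"".join(bytes(row) + padding for row in reversed(pixels))  # bottom row first
    return file_header + info_header + palette + rows

def write_gray_bmp(path: str, pixels: List[List[int]]) -> None:
    with open(path, "wb") as f:
        f.write(encode_gray_bmp(pixels))

def border_pixel_case(width: int, height: int) -> List[List[int]]:
    """Hypothetical corner case: dark frame, one saturated pixel in the top-right corner."""
    img = [[0] * width for _ in range(height)]
    img[0][width - 1] = 255
    return img
```

For example, `write_gray_bmp("corner_case.bmp", border_pixel_case(1024, 768))` writes a file that can then be loaded into the simulation tool.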

Creating their own test images gives engineers full flexibility to depict their corner cases by tuning specific image features, e.g., adding or removing noise or gain.
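
Starting from a clean synthetic image, noise can be added in controlled amounts or left out entirely, and the result stays reproducible as long as the random generator is seeded. In the minimal Python sketch below (the function name is hypothetical), zero-mean Gaussian noise with standard deviation `sigma` is added to an 8-bit frame and the result is clipped to 0..255. Gaussian noise is a simplification of real sensor noise; the same seed always yields the same noisy frame, and `sigma=0` returns the clean image unchanged.

```python
import random
from typing import List

Frame = List[List[int]]

def add_gaussian_noise(frame: Frame, sigma: float, seed: int) -> Frame:
    """Return a noisy copy of an 8-bit frame; the same seed reproduces the same noise."""
    rng = random.Random(seed)  # private generator: independent of global random state
    return [
        [min(255, max(0, round(v + rng.gauss(0.0, sigma)))) for v in row]
        for row in frame
    ]
```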

These few examples illustrate how camera simulation can speed up development, testing, and validation while cutting costs. Camera simulation tools do not completely eliminate the need for real-life testing, but they allow developers to minimize it and save precious time and money.

About the Author

Reuven Weintraub

Reuven Weintraub is the founder and CTO of Gidel (Or Akiva, Israel), which specializes in FPGA-based imaging and vision solutions.