Across the industrial, military, manufacturing, medical, social and commercial sectors, Augmented Reality (AR) is finding many applications and use cases that are contributing to its adoption. One excellent example is a large parcel delivery company that is currently using AR smart glasses which can read the bar code on a shipping label. Once the bar code has been scanned, the glasses communicate with the company’s servers over the Wi-Fi infrastructure to determine the package’s destination. With the destination known, the glasses can indicate to the user where the parcel should be stacked for its onward shipment.

Anatomy of an AR System

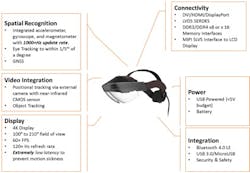

Deconstructing the anatomy of an AR system such as smart glasses shows that it can be quite complex (see Figure 1. Anatomy of an Augmented Reality System). These systems must interface to, and process data from, multiple camera sensors that enable the system to understand the surrounding environment. These camera sensors may also operate in different parts of the electromagnetic (EM) spectrum, such as the infrared or near-infrared bands. Additionally, sensors that are not cameras provide further inputs: MEMS accelerometers and gyroscopes detect movement and rotation, while Global Navigation Satellite System (GNSS) receivers provide location information. Embedded vision systems that fuse data from several different sensor types like this are commonly known as heterogeneous sensor fusion systems. AR systems also require high frame rates, along with the ability to perform real-time, frame-by-frame analysis to extract and process the information contained within each frame.

Figure 1. Anatomy of an Augmented Reality System
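To make heterogeneous sensor fusion more concrete, the Python sketch below fuses two of the non-camera sensors mentioned above, a gyroscope and an accelerometer, into a single pitch estimate using a complementary filter. This is a generic textbook technique chosen purely for illustration, not the fusion algorithm of any particular AR product; the blending factor `alpha`, the assumed 100 Hz sample rate and the sample data are all assumptions.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (radians) implied by the gravity vector the accelerometer measures (in g)."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> float:
    """Fuse gyro rate (rad/s) and accelerometer readings into one pitch estimate.

    The gyroscope is accurate over short intervals but drifts; the accelerometer
    is drift-free but noisy. Blending the integrated gyro angle (weight alpha)
    with the accelerometer angle (weight 1 - alpha) combines the strengths of both.
    """
    pitch = 0.0
    for gyro_rate, (ax, ay, az) in samples:
        gyro_estimate = pitch + gyro_rate * dt          # integrate angular rate
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, ay, az)
    return pitch


if __name__ == "__main__":
    # Hypothetical data: device held still at 10 degrees pitch, gyro has a small bias.
    tilt = math.radians(10.0)
    accel = (-math.sin(tilt), 0.0, math.cos(tilt))
    samples = [(0.001, accel)] * 500                     # 5 s at an assumed 100 Hz
    estimate = complementary_filter(samples, dt=0.01)
    print(f"fused pitch: {math.degrees(estimate):.2f} deg")
```

The gyroscope term follows fast head movements, while the small accelerometer weight slowly pulls the estimate back toward the gravity reference and cancels gyro drift. More capable estimators, such as Kalman filters, are a common choice when camera data must also be folded in.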

Considerations in Choosing the Right Components

As a designer of AR systems, you must consider the aspects that are unique to them. Not only must the system interface to the cameras and sensors that observe the user’s surrounding environment, and execute the algorithms required by the application and use case, but it must also track the user’s eyes to determine their gaze and hence where they are looking. This is normally performed using additional cameras that observe the user’s face, together with an eye-tracking algorithm. Tracking the user’s gaze allows the AR system to determine which content to deliver to the AR display, making efficient use of bandwidth and processing resources. However, the detection and tracking can itself be computationally intensive.
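One common way to exploit gaze information, often called foveated rendering, is to process a region around the gaze point at full resolution and the periphery at reduced resolution. The sketch below estimates the resulting pixel budget per frame; the 1920×1080 display, the 200-pixel half-size of the foveal region and the 4× peripheral downscale are illustrative assumptions, not figures from any Xilinx design.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive

    @property
    def area(self) -> int:
        return max(0, self.x1 - self.x0) * max(0, self.y1 - self.y0)


def foveal_region(gaze_x: float, gaze_y: float, width: int, height: int,
                  half_size: int) -> Rect:
    """Square region centred on the gaze point (normalised 0..1 coordinates),
    clamped so that it stays inside the display."""
    cx = round(gaze_x * (width - 1))
    cy = round(gaze_y * (height - 1))
    return Rect(max(0, cx - half_size), max(0, cy - half_size),
                min(width, cx + half_size), min(height, cy + half_size))


def pixels_to_process(width: int, height: int, fovea: Rect,
                      periphery_scale: int) -> int:
    """Full-resolution pixels inside the fovea, plus the periphery processed
    at 1/periphery_scale resolution along each axis."""
    periphery = width * height - fovea.area
    return fovea.area + periphery // (periphery_scale * periphery_scale)


if __name__ == "__main__":
    W, H = 1920, 1080                                   # assumed display resolution
    fovea = foveal_region(0.5, 0.5, W, H, half_size=200)
    total = pixels_to_process(W, H, fovea, periphery_scale=4)
    print(f"{total} of {W * H} pixels ({100 * total / (W * H):.1f} %)")
```

With the gaze at the centre of the display, the foveal region covers 160,000 pixels and the downscaled periphery contributes 119,600 more, so the pipeline handles roughly 13.5 % of the pixels a full-resolution frame would require.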

Providing the processing capability to meet all of the system requirements therefore becomes a driving factor in component selection. Independent of the application and use case, designers must weigh several competing requirements if they are to arrive at the optimal solution for the AR system:

- Performance

- Future proofing

- Power

- Security

The Optimal Solution: All Programmable SoCs

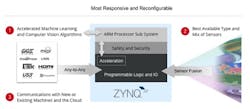

Xilinx® All Programmable Zynq®-7000 SoCs and Zynq® UltraScale+™ MPSoCs are used by AR solution providers to implement the processing core of the AR system. These devices are themselves heterogeneous processing systems that combine ARM® processors with high-performance programmable logic. Zynq UltraScale+ MPSoCs, the next generation of Zynq-7000 SoCs, additionally provide an ARM Mali-400 GPU (select family members also contain a hardened video encoder that supports the H.264 and H.265 (HEVC) standards).

These devices enable you to partition the system architecture optimally: the processors implement the real-time analytics and the traditional processor tasks, which benefit from the established processor software ecosystem, while the programmable logic implements the sensor interfaces and processing. Using the programmable logic in this way offers several benefits, namely:

- Parallel implementation of N image processing pipelines, as required by the application.

- Any-to-any connectivity: the ability to define and interface with any sensor, communication protocol or display standard, allowing flexibility and future upgrade paths.

Figure 2. All Programmable SoCs Enable Most Responsive and Reconfigurable Systems

Best Performance-Per-Watt

Most AR systems are also portable, untethered and, as is the case with smart glasses, often wearable. This brings a unique challenge: implementing the required processing within a power-constrained environment. Both the Zynq SoC and Zynq UltraScale+ MPSoC families offer the best performance per watt, and can further reduce power during operation using one of several options. These range from placing the processors into standby mode, from which they can be woken by one of several sources, to powering down the programmable logic half of the device. Both options can be used when the AR system detects that it is no longer in use, extending battery life. While the AR system is operating, elements of the processor that are not currently in use can be clock gated to reduce power consumption. Within the programmable logic, following simple design rules (for example, making efficient use of hard macros, carefully planning control signals and considering intelligent clock gating for device regions not currently required) lets you achieve a very efficient power solution.
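The decision of when to use each low-power option can be expressed as a simple idle-driven policy. The sketch below is hypothetical throughout: the state names, the 30 s and 120 s thresholds and the `PowerManager` class are assumptions for illustration, and on real hardware the transitions would be carried out by the platform's power-management firmware and drivers rather than by application code like this.

```python
from enum import Enum


class PowerState(Enum):
    ACTIVE = "active"              # processors and programmable logic running
    PL_OFF = "pl_powered_down"     # programmable logic powered down, processors running
    PS_STANDBY = "ps_standby"      # processors in standby as well, waiting for a wake source


class PowerManager:
    """Hypothetical idle-driven power policy for an AR headset."""

    def __init__(self, pl_off_after_s: float = 30.0, standby_after_s: float = 120.0):
        if pl_off_after_s >= standby_after_s:
            raise ValueError("programmable logic must power down before processor standby")
        self.pl_off_after_s = pl_off_after_s
        self.standby_after_s = standby_after_s
        self.idle_s = 0.0
        self.state = PowerState.ACTIVE

    def tick(self, dt_s: float, user_active: bool) -> PowerState:
        """Advance time by dt_s seconds; any user activity acts as a wake source."""
        if user_active:
            self.idle_s = 0.0
            self.state = PowerState.ACTIVE
        else:
            self.idle_s += dt_s
            if self.idle_s >= self.standby_after_s:
                self.state = PowerState.PS_STANDBY
            elif self.idle_s >= self.pl_off_after_s:
                self.state = PowerState.PL_OFF
        return self.state


if __name__ == "__main__":
    pm = PowerManager()
    for second in range(0, 150, 10):
        print(second, pm.tick(10.0, user_active=False).value)
```

Powering down the programmable logic first and only then placing the processors in standby keeps the fastest wake-up path available for short pauses, while long periods of inactivity reach the deepest saving.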

Information Security and Threat Protection

Several AR applications, for example sharing medical patient records or manufacturing data, call for a high level of security in both the Information Assurance (IA) and Threat Protection (TP) domains, especially as AR systems are highly mobile and could be misplaced. Information assurance requires that you can trust the information stored within the system, along with the information it receives and transmits. For comprehensive information assurance, you can make use of the Zynq secure boot capabilities, which support encrypted boot images that are decrypted using AES and verified using HMAC and RSA authentication. Once the device is correctly configured and running, you can use ARM TrustZone and hypervisors to implement orthogonal worlds, where the secure world cannot be accessed by the non-secure one.
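The principle behind authenticated boot, refusing to run any image whose authentication tag does not verify, can be illustrated in a few lines. The sketch below uses HMAC-SHA256 from Python's standard library; it is not the Zynq boot ROM flow, which is implemented in the device and also involves AES decryption and RSA signatures, and the image layout (payload followed by a 32-byte tag), the key and the function names are hypothetical.

```python
import hashlib
import hmac

TAG_LEN = hashlib.sha256().digest_size  # 32 bytes


def sign_image(key: bytes, image: bytes) -> bytes:
    """Append an HMAC-SHA256 tag to an image (done once, at build time)."""
    return image + hmac.new(key, image, hashlib.sha256).digest()


def verify_image(key: bytes, signed: bytes) -> bytes:
    """Return the image payload only if its tag is valid; otherwise refuse it."""
    if len(signed) < TAG_LEN:
        raise ValueError("image too short to carry an authentication tag")
    image, tag = signed[:-TAG_LEN], signed[-TAG_LEN:]
    expected = hmac.new(key, image, hashlib.sha256).digest()
    # compare_digest runs in constant time, so timing does not reveal how much of the tag matched
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed: image rejected")
    return image


if __name__ == "__main__":
    key = bytes(32)                      # hypothetical all-zero key, for demonstration only
    signed = sign_image(key, b"boot image payload")
    print(verify_image(key, signed))
```

Verifying before using the payload means a modified image is rejected outright rather than partially executed, which is the property secure boot relies on.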

When it comes to threat protection, you can use the built-in XADC to monitor supply voltages, currents and temperatures and detect any attempt to tamper with the AR system. Should such an event occur, the Zynq device has several response options, ranging from logging the attempt to erasing secure data and preventing the AR system from connecting to the supporting infrastructure again.
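The escalating responses described above can be sketched as a policy driven by periodic sensor readings. Everything in this sketch is an assumption for illustration: the channel names, the voltage and temperature windows, the three-strike threshold and the `TamperMonitor` class are hypothetical, and reading the XADC itself is outside its scope.

```python
from dataclasses import dataclass, field


@dataclass
class Limits:
    low: float
    high: float


# Hypothetical operating windows; real values come from the device data sheet and the design.
LIMITS = {
    "vccint_v": Limits(0.95, 1.05),
    "vccaux_v": Limits(1.71, 1.89),
    "temp_c": Limits(-20.0, 85.0),
}


@dataclass
class TamperMonitor:
    erase_after: int = 3            # hypothetical: violations tolerated before erasing secrets
    violations: int = 0
    log: list = field(default_factory=list)
    secrets_erased: bool = False
    locked_out: bool = False

    def check(self, readings: dict) -> None:
        """Compare one set of readings against LIMITS and escalate on violations."""
        out_of_range = [name for name, value in readings.items()
                        if name in LIMITS
                        and not (LIMITS[name].low <= value <= LIMITS[name].high)]
        if not out_of_range:
            return
        self.violations += 1
        self.log.append(out_of_range)        # response 1: log the attempt
        if self.violations >= self.erase_after:
            self.secrets_erased = True       # response 2: erase secure data such as keys
            self.locked_out = True           # response 3: refuse further connections
```

A single glitch is only logged, whereas repeated out-of-range readings, which are more likely to indicate deliberate tampering, trigger the irreversible responses.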

Software-Defined Development

Xilinx All Programmable SoCs and MPSoCs are the optimal choice for your next AR solution. The good news? You do not need deep hardware design expertise, thanks to the reVISION™ development stack, which features a familiar Eclipse-based environment using C, C++ and/or OpenCL with SDSoC™ and ready-to-use OpenCV libraries.

For more information about Xilinx All Programmable SoCs and MPSoCs and resources for your next AR solution, visit the Xilinx reVISION Developer Zone at: www.xilinx.com/revision