Progress update on integrating embedded vision into GenICam

Briefing on Embedded Vision Device Standardization

By Martin Cassel, Silicon Software

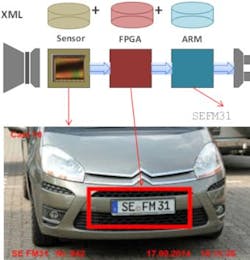

Embedded image processing devices have until recently consisted of cameras or vision sensors interfaced to heterogeneous processing units such as CPUs, GPUs, FPGAs and SoCs (systems on chip), which combine processors or processing modules. At its semi-annual sessions, the IVSM (International Vision Standards Meeting) standardization body focuses on harmonizing these various elements on the software side and on progressively moving solutions forward. To achieve standardized data exchange within image processing devices, the panel plans to further develop the GenICam camera standard, with the aim of ensuring rapid implementation and cost-effective operation of devices.

At the October 2016 meeting in Brussels, the IVSM panel agreed to tackle the two most important standardization aspects of connecting subcomponent software: first, the description of preprocessing and its results using so-called processing modules, and second, the linking of the various XML files that hold the cameras’ standardized parameter and function descriptions. The results of these discussions were presented at the following meeting in Boston in May 2017, where further implementation steps were specified. One challenge in standardization is posed by changing image data formats, as well as by the input and output formats of processing modules, which the application software accesses and must then interpret dynamically. Additionally, an interface structure is needed that links data from camera sensors and processors with data from the processing modules.

In the future, different image and pixel formats delivered as processing module output (such as raw, binary, RGB or blob images), together with additional metadata such as enlarged or reduced images and/or image details (ROI), would be uniformly described using XML and integrated into the new general streaming protocol GenSP (GenICam Streaming Protocol). This would make it possible to standardize operations such as turning processing on and off in order to retain or change the input image data. In a laser profiling image, for example, the laser line can be dynamically switched on or off. In addition, the parameter description of the image formats should be valid for all camera interfaces.
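To make the idea of switching processing on and off concrete, the following minimal Python sketch models a processing module whose output format either replaces or passes through the input format. The class names `ImageFormat` and `ProcessingModule` and all of their fields are hypothetical illustrations. They are not taken from GenICam or GenSP, whose actual descriptions are XML-based.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ImageFormat:
    """Hypothetical description of an image format (not a GenICam type)."""
    pixel_format: str  # e.g. "Raw", "Binary", "RGB", "Blob"
    width: int
    height: int


@dataclass
class ProcessingModule:
    """Hypothetical processing module with a switchable processing step."""
    name: str
    output_pixel_format: str
    roi: Optional[Tuple[int, int, int, int]] = None  # (x, y, width, height)
    enabled: bool = True

    def output_format(self, input_format: ImageFormat) -> ImageFormat:
        # With processing switched off, the input image data is retained.
        if not self.enabled:
            return input_format
        width, height = input_format.width, input_format.height
        # An ROI reduces the output to the selected image detail.
        if self.roi is not None:
            _, _, width, height = self.roi
        return ImageFormat(self.output_pixel_format, width, height)


sensor = ImageFormat("Raw", 1024, 768)
module = ProcessingModule("threshold", "Binary", roi=(0, 0, 512, 256))
print(module.output_format(sensor))  # processing on: format is changed
module.enabled = False
print(module.output_format(sensor))  # processing off: format is retained
```

Application software that reads such a description can determine the format it will receive before the first image arrives, which is the kind of dynamic interpretation the text calls for.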

Uniform XML Descriptions

Just as image formats must be flexibly described, so must the processing modules, camera sensors and processors, for a proper interconnection to occur. The application software will then be able to read the camera’s parameters in a standardized and automated fashion, and to determine which data the camera delivers with which parametrization. In this way, common changes made by various manufacturers can be implemented uniformly; for example, the XML of the camera parameters and that of its preprocessing would be merged. Flexible modules that present the preprocessing as a single processing module unit (consistent with GenICam SFNC) could replace highly optimized individual components. The parameter trees of the processing module and the camera are then merged into a single tree. In the future, the tool that merges the trees will be made available in the GenICam Repository, ensuring uniform readability of all cameras within sensor groups.
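The merging of two parameter trees can be sketched in a few lines of Python. This is an illustration only: the element names (`Device`, `Category`, `Parameter`), the sample parameters and the function `merge_trees` are assumptions made for this example. Real GenICam XML files follow the GenApi schema, and the merge tool announced for the GenICam Repository may work differently.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified descriptions (not valid GenApi XML).
CAMERA_XML = """
<Device name="Camera">
  <Category name="ImageFormatControl">
    <Parameter name="Width" value="1024"/>
    <Parameter name="PixelFormat" value="Mono8"/>
  </Category>
</Device>
"""

MODULE_XML = """
<Device name="Preprocessing">
  <Category name="ImageFormatControl">
    <Parameter name="OutputPixelFormat" value="Binary"/>
  </Category>
  <Category name="ProcessingControl">
    <Parameter name="ProcessingEnable" value="true"/>
  </Category>
</Device>
"""


def merge_trees(camera_xml, module_xml, merged_name="MergedDevice"):
    """Merge two parameter trees into one, category by category."""
    merged = ET.Element("Device", {"name": merged_name})
    categories = {}  # category name -> element in the merged tree
    for source in (ET.fromstring(camera_xml), ET.fromstring(module_xml)):
        for category in source.findall("Category"):
            cat_name = category.get("name")
            target = categories.get(cat_name)
            if target is None:
                target = ET.SubElement(merged, "Category", {"name": cat_name})
                categories[cat_name] = target
            existing = {p.get("name") for p in target.findall("Parameter")}
            for param in category.findall("Parameter"):
                # Refuse silent overwrites: a name clash needs a human decision.
                if param.get("name") in existing:
                    raise ValueError(f"duplicate parameter: {param.get('name')}")
                target.append(ET.Element("Parameter", dict(param.attrib)))
                existing.add(param.get("name"))
    return merged


def parameter_paths(root):
    """List all parameters as 'Category/Parameter' paths."""
    return [
        f"{c.get('name')}/{p.get('name')}"
        for c in root.findall("Category")
        for p in c.findall("Parameter")
    ]


merged = merge_trees(CAMERA_XML, MODULE_XML)
for path in parameter_paths(merged):
    print(path)
```

In this sketch, parameters from both sources that share a category end up side by side in the merged tree, so the application software sees a single device. Raising an error on duplicate names is a design choice for the example. A real tool would need a defined rule for resolving such conflicts.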

In the embedded world, the use of FPGAs is pervasive and is also described by the GenICam standard. ARM processors are too slow for the image processing tasks required and are instead better suited for post-processing. By contrast, FPGAs meet the demands on computational performance and heat build-up, are extremely well suited for preprocessing, and are built into practically all cameras. Combinations of FPGAs with GPUs and CPUs are possible. With the embedded GenICam standard that is being created, these processor modules will coalesce into one homogeneous unit with a consistent data structure.

Summary: In implementing a new embedded vision standard at the software level, the IVSM committee is placing particular emphasis on XML-based descriptions. XML files are widely used in the GenICam GenApi standard, where they define the semantics of camera descriptions and how they are interpreted. Equally well known in GenICam are the processing modules, which were first used for describing 3D line scan cameras. Further steps in implementing the standard will be covered in future briefings.

Pictured above: Processing units with different image input and output formats