Maximizing smart camera optical character recognition success

Font classification library experimentation leads to higher read rates

Alan L. Lockard

Smart cameras provide a substantial set of tools to meet the requirements of most applications. One of these is optical character recognition (OCR). The technology has been in use for more than 50 years¹ in postal letter-sorting machines and, more recently, in automated teller machines that read handwritten personal checks with high accuracy and in mobile banking apps that let users deposit a check by photographing it.

Today’s smart cameras read, or more accurately classify, the imaged text by first segmenting it into individual characters and then comparing each character against a set of lookup artifacts in a font library. The cameras can be taught the font set from the actual printed characters being read. Each character in the library can have multiple trained artifacts to accommodate slight variations in contrast and character formation.

The camera classifies each character by comparing it to the classification library. Classification yields two candidate matches: the first (and, ideally, correct) match has the higher score for its library character, and the second has a lower score for the next most likely character in the library. The larger the spread between these two scores, the more reliable the OCR result.
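The two-candidate scheme can be illustrated with a toy classifier. This is a minimal Python sketch under stated assumptions, not the camera's algorithm, which is proprietary and not described here: `match_score` is a hypothetical pixel-agreement score standing in for the camera's correlation score, the glyphs are tiny invented 5x3 binary images loosely shaped like 2, Z and 5, and the acceptance thresholds `min_score` and `min_margin` are hypothetical values.

```python
# Toy two-candidate OCR classifier (illustrative sketch only).
# Glyphs are binary images written as rows of '0'/'1' characters.

def match_score(a, b):
    """Fraction of pixels that agree between two equal-size glyphs (0.0 to 1.0).
    Hypothetical stand-in for the camera's proprietary match score."""
    pa, pb = "".join(a), "".join(b)
    if len(pa) != len(pb):
        raise ValueError("glyphs must be the same size")
    return sum(x == y for x, y in zip(pa, pb)) / len(pa)


def classify(glyph, library, min_score=0.8, min_margin=0.1):
    """Return (best_char, best_score, runner_up_char, runner_up_score, accepted).

    `library` maps each character to a list of trained artifacts; a character
    scores as its best-matching artifact. The read is accepted only when the
    top score and the spread to the runner-up both clear the (hypothetical)
    thresholds.
    """
    scores = {ch: max(match_score(glyph, art) for art in arts)
              for ch, arts in library.items()}
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    (c1, s1), (c2, s2) = ranked[0], ranked[1]
    accepted = s1 >= min_score and (s1 - s2) >= min_margin
    return c1, s1, c2, s2, accepted


# Hypothetical 5x3 glyphs loosely shaped like 2, Z and 5.
LIBRARY = {
    "2": [["111", "001", "111", "100", "111"]],
    "Z": [["111", "001", "010", "100", "111"]],
    "5": [["111", "100", "111", "001", "111"]],
}

if __name__ == "__main__":
    clean_two = ["111", "001", "111", "100", "111"]
    weak_two = ["111", "001", "110", "100", "111"]  # middle stroke partly missing
    print(classify(clean_two, LIBRARY))
    print(classify(weak_two, LIBRARY))
```

With these toy glyphs, the clean "2" wins with a comfortable margin over "Z" and is accepted, while the "2" with a weak middle stroke scores identically against "2" and "Z", so the margin collapses to zero and the read is rejected. That is the same failure mode the similar-looking characters cause in practice.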

One such OCR project involves reading production batch information laser-marked onto a white plastic diagnostic test card (Figure 1). The same camera also reads the lot and serial number data from a 1D barcode applied to the card. The combined information is then sent to a thermal transfer overprinting (TTO) printer, which encodes it into a 2D Data Matrix barcode printed on the outside of the foil pouch.

Figure 1: Three filtered, segmented, and classified text strings acquired by the smart camera.

Initially, setup was easy: characters were trained as production ran, building up the library over time. However, many read failures occurred because similar-looking characters reduced the score separation between the first and second classification candidates. For instance, the characters 2, Z, and 5 can resemble one another. The laser marking system had been in use for several years before OCR was attempted on the laser-marked cards, and no attention had been paid to the font used to mark them. Switching the laser marking equipment to the OCR-A font set helped mitigate this problem.

The benefit of the OCR-A font set is that its characters are specifically designed to minimize similarities between them. In OCR-A, 2, Z, and 5 have more distinctive shapes, allowing more robust discrimination between characters in the library. A font library trained with OCR-A laser-marked characters produced a much higher read success rate.

Over time, more characters were trained and added to the classification library. At first, read success continued to improve as the library expanded to cover the observed marking variations. However, once artifacts had been trained for every variation of character marking, OCR performance began to degrade: the 2s, Zs, and 5s began to look alike again, even in the OCR-A font.

A camera bundled with selectable, pre-built font libraries could have solved this problem. The lot-specific characters laser-marked onto the white plastic diagnostic test card form an alphanumeric batch number, a letter and number combination whose first and last letters identify the month and year of manufacture across a 120-month sequence. Building a complete, pre-loaded font library covering this 120-month sequence of laser-marked characters would have required marking custom artifacts on validated production equipment. In addition, it might not be possible to capture enough marking variations to assure OCR read success in future production.

Ideally, a set of pristine font characters would allow the most accurate character classification. As the application matured, it seemed that misclassifications caused by day-to-day variations in the laser marking were always going to be an inevitable part of the process. Cleaning the optics on the laser markers and OCR cameras more frequently improved accuracy somewhat, but the read rate always fell short of 100%.

Because the laser marking equipment took its OCR-A font from the Microsoft Windows operating system that controls the laser marking system, an experiment was run using the same font source. A Microsoft Word document was created in the OCR-A font, and a string of the alphanumeric characters used in batch marking was typed into it. The string was limited to only those characters used in the alphanumeric batch number.

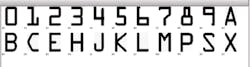

Figure 2: A subset of the MS Windows® OCR-A font library covering the characters of the internal batch number.

Including the entire alphabet, with characters that may never be encountered, increases the chance of a character being misclassified as one of those never-used library entries. Further experimentation identified the font size that produced 2 mm-high characters, matching those produced by the laser marking system. The Windows Snipping Tool was used to save the character string as a .jpeg file, which could then be opened in the smart camera environment to train the pristine, native OCR-A characters into the OCR classification library. The previously trained characters were deleted, and after the MS Word-generated characters were trained, the system had a working set consisting of a single trained artifact for each character (Figure 2). The resulting font classification library produced slightly lower classification scores, because no laser-marked character perfectly matched the pristine OCR-A characters imported from the MS Word document.
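Limiting the library to the characters that can actually appear helps because a character that is not in the library can never become the runner-up candidate. The Python sketch below illustrates this with the same kind of toy setup: the pixel-agreement `match_score`, the 5x3 glyphs, and especially the `ALLOWED` set are hypothetical. The batch-number character set is not listed here, so the sketch simply supposes, for illustration, that "Z" never occurs in it.

```python
# Toy sketch: restrict candidate characters to the batch-number character set.
# The score function, glyphs and ALLOWED set are hypothetical illustrations.

def match_score(a, b):
    """Fraction of agreeing pixels between two equal-size binary glyphs."""
    pa, pb = "".join(a), "".join(b)
    if len(pa) != len(pb):
        raise ValueError("glyphs must be the same size")
    return sum(x == y for x, y in zip(pa, pb)) / len(pa)


def top_two(glyph, library, allowed=None):
    """Return (best_char, runner_up_char, margin), optionally limited to `allowed`."""
    pool = {ch: arts for ch, arts in library.items()
            if allowed is None or ch in allowed}
    # Each character scores as its best artifact; ties fall back to the
    # character itself, which is arbitrary, as any tie-break would be.
    ranked = sorted(((max(match_score(glyph, a) for a in arts), ch)
                     for ch, arts in pool.items()), reverse=True)
    (s1, c1), (s2, c2) = ranked[0], ranked[1]
    return c1, c2, s1 - s2


LIBRARY = {
    "2": [["111", "001", "111", "100", "111"]],
    "Z": [["111", "001", "010", "100", "111"]],
    "5": [["111", "100", "111", "001", "111"]],
}
ALLOWED = set("25")  # hypothetical: suppose the batch format never uses "Z"

observed = ["111", "001", "110", "100", "111"]  # a "2" with a weak middle stroke
print(top_two(observed, LIBRARY))           # full library: "2" and "Z" tie
print(top_two(observed, LIBRARY, ALLOWED))  # restricted library
```

In the full library the weak "2" ties with "Z" (zero margin, and the arbitrary tie-break here even picks "Z"); once "Z" is excluded, the runner-up becomes "5" and the margin opens up to 4/15.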

Although the original OCR classification library contained multiple high-scoring trained artifacts for each character, the score separation between one character and the next had diminished, reducing the OCR read rate. In contrast, the single-artifact-per-character library produced slightly lower classification scores but maintained greater score separation, resulting in a much higher OCR read success rate.
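The trade-off between higher scores and wider separation follows from how a multi-artifact library is scored: if each character scores as its best-matching artifact, every extra artifact can only raise that character's score, including the runner-up's. The Python sketch below is a toy illustration of that mechanism under stated assumptions, not a reproduction of the camera's behavior: the pixel-agreement score is a hypothetical stand-in, and all glyphs, including the "production-trained" artifacts `TWO_MARKED` and `ZED_MARKED`, are invented.

```python
# Toy comparison: one pristine artifact per character vs. several
# production-trained artifacts. Glyphs and score are hypothetical.

def match_score(a, b):
    """Fraction of agreeing pixels between two equal-size binary glyphs."""
    pa, pb = "".join(a), "".join(b)
    if len(pa) != len(pb):
        raise ValueError("glyphs must be the same size")
    return sum(x == y for x, y in zip(pa, pb)) / len(pa)


def top_and_margin(glyph, library):
    """Return (top score, spread to runner-up); a character scores as its best artifact."""
    scores = sorted((max(match_score(glyph, art) for art in arts)
                     for arts in library.values()), reverse=True)
    return scores[0], scores[0] - scores[1]


TWO = ["111", "001", "111", "100", "111"]   # pristine "2"
ZED = ["111", "001", "010", "100", "111"]   # pristine "Z"
FIVE = ["111", "100", "111", "001", "111"]  # pristine "5"

# Hypothetical production-trained artifacts: a "2" captured exactly as marked,
# and a poorly marked "Z" whose middle stroke drifted toward a "2".
TWO_MARKED = ["111", "001", "111", "100", "110"]
ZED_MARKED = ["111", "001", "110", "100", "110"]

single = {"2": [TWO], "Z": [ZED], "5": [FIVE]}
multi = {"2": [TWO, TWO_MARKED], "Z": [ZED, ZED_MARKED], "5": [FIVE]}

observed = ["111", "001", "111", "100", "110"]  # a laser-marked "2"
for name, lib in (("single", single), ("multi", multi)):
    top, margin = top_and_margin(observed, lib)
    print(f"{name}: top={top:.3f} margin={margin:.3f}")
```

Here the multi-artifact library scores the observed "2" higher (1.000 versus 0.933), but the drifted "Z" artifact lifts the runner-up as well, so the margin halves (0.067 versus 0.133).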

¹ Source: http://bit.ly/VSD-SRC

This article does not reflect the views or position of the Company but those of the author.

Alan L. Lockard, Principal Engineer in Reagent Engineering at bioMérieux, Inc. (Hazelwood, MO, USA; www.biomerieux.com)