OPC Machine Vision part one officially adopted

By Martin Cassel

As of Q3 2019, the first part of the OPC UA Companion Specification for Machine Vision (OPC Machine Vision, for short) has been officially adopted. Developed under the umbrella of the VDMA Machine Vision Group and based on the OPC Foundation’s OPC UA (Open Platform Communications Unified Architecture), the standard aims to integrate anything from individual image processing components to entire image processing systems into industrial automation applications, so that machine vision technologies can communicate with the entire factory and beyond. With the standard, a generic interface for image processing systems is being developed at the user level, including a semantic description of image data.

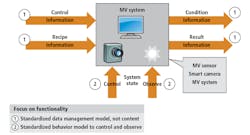

OPC Machine Vision describes an image processing system at the semantic level (Figure 1), both as an information model and as a state machine, in terms of its interaction with surrounding systems. Among these are production control and information technology (IT) systems: programmable logic controllers (PLC), robot controllers, human-machine interfaces (HMI), software systems (manufacturing execution systems, supervisory control and data acquisition, enterprise resource planning, cloud), and data analysis systems. Because Ethernet-based networks form the basis of the new OPC Machine Vision standard, proprietary interfaces give way to generic ones.

OPC Machine Vision describes and addresses the abstract structure of image processing systems. One important building block of the specification is the generic information model, which abstracts image processing systems ranging from a simple vision sensor to cameras and multi-camera systems, up to more complex inspection systems. On the input side is an image processing task, for example for a product type to be manufactured, described by a recipe and a configuration (recipe and configuration information). On the output side, the image processing system reports the result and its general state to the environment (result and condition information). When image processing is complete, the system transmits the result to the surrounding systems. Those systems can in turn retrieve data from the image processing system at any time, for example, to determine whether it is functioning.
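The input and output sides described above can be pictured with a minimal Python sketch. It is not the information model defined by the specification: every class, field, and method name here (`Recipe`, `Configuration`, `Result`, `VisionSystemModel`, `condition`, and so on) is a hypothetical illustration, and the recipe and configuration contents are kept as opaque byte strings.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class Recipe:
    """Input side: describes one image processing task (hypothetical fields)."""
    recipe_id: str
    product_type: str
    payload: bytes = b""  # manufacturer-specific content, kept opaque


@dataclass
class Configuration:
    """Input side: system configuration (hypothetical fields)."""
    config_id: str
    payload: bytes = b""  # manufacturer-specific content, kept opaque


@dataclass
class Result:
    """Output side: outcome of one image processing job."""
    recipe_id: str
    content: Dict[str, Any] = field(default_factory=dict)


class VisionSystemModel:
    """Toy stand-in for an image processing system seen from outside."""

    def __init__(self, configuration: Configuration) -> None:
        self.configuration = configuration
        self.recipes: Dict[str, Recipe] = {}
        self.last_result: Optional[Result] = None
        self.condition = "ok"  # general state reported to the environment

    def add_recipe(self, recipe: Recipe) -> None:
        self.recipes[recipe.recipe_id] = recipe

    def process(self, recipe_id: str) -> Result:
        """Run a job for a known recipe and keep the result retrievable."""
        if recipe_id not in self.recipes:
            raise KeyError(f"unknown recipe: {recipe_id}")
        # Placeholder for the actual, vendor-specific image processing.
        result = Result(recipe_id, {"status": "done"})
        self.last_result = result
        return result

    def get_condition(self) -> str:
        """Surrounding systems can poll this at any time."""
        return self.condition
```

A client would add a recipe, trigger `process`, and later read `last_result` or `get_condition()` on its own schedule, which mirrors the push and pull access the text describes.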

Every interaction with the image processing system and every access to the information model depends on the model’s state at that moment and, with it, on the capabilities the image processing system currently offers. For that reason, the state machine concept was defined as the second basic building block of the specification; it abstracts the possible states of an image processing system. With respect to its environment, an image processing system can assume various states, but exactly one at any given point in time, such as initial state, operational readiness, image processing, error, warning, stop, and result signaling. The system’s possible operational states and state transitions are represented by a fixed number of states. The state machine changes its state in response to external and internal inputs (method calls or events). It is the basis for controlling the system by means of general capabilities, such as communicating the state.
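The state machine idea can be sketched as a small transition table in Python. The states and input names below are simplified assumptions loosely based on the examples in the text (initial state, readiness, processing, error, stop); they are not the states or methods defined in the specification.

```python
from enum import Enum


class State(Enum):
    # Illustrative states only, not the specification's state set.
    INITIAL = "initial"
    READY = "ready"
    PROCESSING = "processing"
    ERROR = "error"
    STOPPED = "stopped"


# (current state, input name) -> next state. Inputs stand for method
# calls from outside ("start", "stop", "reset") or internal events
# ("result_ready", "fault"); all names are hypothetical.
TRANSITIONS = {
    (State.INITIAL, "initialize"): State.READY,
    (State.READY, "start"): State.PROCESSING,
    (State.READY, "fault"): State.ERROR,
    (State.READY, "stop"): State.STOPPED,
    (State.PROCESSING, "result_ready"): State.READY,
    (State.PROCESSING, "fault"): State.ERROR,
    (State.PROCESSING, "stop"): State.STOPPED,
    (State.ERROR, "reset"): State.INITIAL,
    (State.STOPPED, "reset"): State.INITIAL,
}


class VisionStateMachine:
    """Holds exactly one state at a time and a fixed set of transitions."""

    def __init__(self) -> None:
        self.state = State.INITIAL

    def allowed_inputs(self) -> list:
        """Inputs that are valid in the current state."""
        return sorted(name for (s, name) in TRANSITIONS if s is self.state)

    def fire(self, name: str) -> State:
        """Apply an input; reject it if the current state does not allow it."""
        key = (self.state, name)
        if key not in TRANSITIONS:
            raise ValueError(f"{name!r} not allowed in state {self.state.value}")
        self.state = TRANSITIONS[key]
        return self.state
```

Because `allowed_inputs` depends on the current state, the sketch also shows why each interaction with the system is state dependent: a `start` call is accepted only while the machine is ready.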

Part 2 targets specific services and capabilities

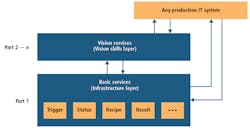

Because the contents of input and output are manufacturer specific, they are treated in the first part of the specification as a black box. The definitions described in part 1 target the basic system capabilities and not those of image processing, and thus not the application software which differentiates, for example, a code reading system from a surface inspection system or a vision-guided robotic solution.

Describing this level with a common terminology, and then representing it in capabilities-based information models, is one element of the planned next step. The manufacturer-specific black boxes will then be replaced by standardized information structures and semantics, such as configuration, recipe, and result information, for applications such as presence detection, completeness checking, and position sensing (Figure 2). Doing so ensures that different image processing systems using the same recipes, such as sensor correction or lens aberration, also provide the same results.

Furthermore, rules are planned for structuring the application-specific messages by which the image processing system communicates its state, and for how those messages interact with the state machine. The goal is that even a generic client without deep knowledge of a given application gets a basic picture of the image processing system’s current state, showing, for example, how long it has been productive, defective, or in maintenance.

To date, the state machine only provides information on the current controller state of the image processing system. Should an error occur, it switches to the “error” state, but offers no information as to why this has occurred. To address this, the schema of the six basic system states defined by the SEMI E10 norm (bit.ly/VSD-SEMI) is applied. Each error message then carries enough information for generic clients with no deeper application knowledge to understand possible causes of errors in principle.
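As a rough illustration of how a generic client could use such a classification, the following Python sketch totals the time spent in each of the six SEMI E10 basic states. The enum names follow the E10 state categories; the event format (a time-ordered list of timestamp and state pairs) and the function `time_per_state` are assumptions made for this example, not part of the specification.

```python
from collections import defaultdict
from enum import Enum


class E10State(Enum):
    # The six basic equipment states of SEMI E10.
    PRODUCTIVE = "productive"
    STANDBY = "standby"
    ENGINEERING = "engineering"
    SCHEDULED_DOWNTIME = "scheduled downtime"
    UNSCHEDULED_DOWNTIME = "unscheduled downtime"
    NON_SCHEDULED = "non-scheduled time"


def time_per_state(events, end_time):
    """Sum the seconds spent in each state.

    events: list of (timestamp_seconds, E10State), sorted by timestamp;
            each entry marks the moment the system entered that state.
    end_time: timestamp at which the observation window closes.
    """
    totals = defaultdict(float)
    # Pair each event with its successor; the last one runs to end_time.
    following = events[1:] + [(end_time, None)]
    for (start, state), (stop, _) in zip(events, following):
        totals[state] += stop - start
    return dict(totals)


# Hypothetical message log: productive, a 30 s fault, then productive again.
log = [
    (0, E10State.PRODUCTIVE),
    (100, E10State.UNSCHEDULED_DOWNTIME),
    (130, E10State.PRODUCTIVE),
]
summary = time_per_state(log, end_time=200)
```

A client that knows only these categories can report productive and downtime totals without understanding the underlying application-specific error.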

Integrating image processing and factory automation

OPC Machine Vision is designed for the demands of ever faster and constantly changing production processes and represents a milestone for the concept of Industry 4.0. In the future, the integration effort for image processing in manufacturing environments will be markedly reduced, and latencies can be lowered to meet hard real-time demands, which means faster production at lower cost. Previously, there was no software layer between the two worlds of image processing and factory automation: neither for identification and control of available components, nor for device communication or the exchange of measurement results.

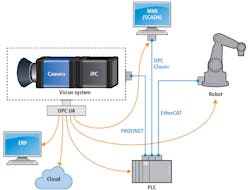

Integrating image processing into the machine controls (PLC) opens up new possibilities, such as self-optimizing production processes, individualized products, and a direct connection of image processing data with conventional sensor data such as temperature and pressure. Operational and information technologies will become closely intertwined. In this manner, for example, process controls will check image processing devices and modify them if the production line is converted to another product type. Or, if the lighting is exchanged and the image processing system is readjusted, the system reports this to the PLC. Using OPC Machine Vision, cameras and the PLC are concurrently programmable via OPC UA.

A common situation in manufacturing individual workpieces is that the PLC informs the image processing system of the arrival of a new part by sending a start signal. It then waits until the image processing system answers with a result, i.e., quality information (pass/no pass), a measurement value (size), or position information (x and y coordinates, rotation, possibly z coordinates or complete position in a 3D system), after which work on the piece continues. The interface described in the OPC Machine Vision specification can coexist with existing interfaces such as digital I/O, fieldbuses, and Industrial Ethernet systems, and offer an additional view of the image processing system (Figure 3). It can also be used as the single interface to the system, depending upon the requirements of the application in question.
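The start-signal and result handshake described above can be simulated in a few lines of Python using only the standard library. This is a sketch of the interaction pattern, not of any OPC UA call: the queues stand in for the communication channel, and `InspectionResult`, `vision_worker`, `plc_cycle`, and the even/odd pass rule are all hypothetical.

```python
import queue
import threading
from dataclasses import dataclass
from typing import Optional


@dataclass
class InspectionResult:
    part_id: int
    passed: bool                           # quality information
    size_mm: Optional[float] = None        # measurement value
    x: Optional[float] = None              # position information
    y: Optional[float] = None
    rotation_deg: Optional[float] = None


def vision_worker(start_q: queue.Queue, result_q: queue.Queue) -> None:
    """Stand-in for the image processing system."""
    while True:
        part_id = start_q.get()            # wait for a start signal
        if part_id is None:                # sentinel: shut down
            break
        # Placeholder inspection: even part ids pass, odd ones fail.
        result_q.put(InspectionResult(part_id, passed=(part_id % 2 == 0),
                                      size_mm=12.5, x=1.0, y=2.0,
                                      rotation_deg=0.0))


def plc_cycle(part_ids, timeout_s: float = 2.0):
    """Stand-in for the PLC: signal each part, then block for its result."""
    start_q: queue.Queue = queue.Queue()
    result_q: queue.Queue = queue.Queue()
    worker = threading.Thread(target=vision_worker,
                              args=(start_q, result_q), daemon=True)
    worker.start()
    results = []
    for part_id in part_ids:
        start_q.put(part_id)                             # start signal
        results.append(result_q.get(timeout=timeout_s))  # wait for answer
    start_q.put(None)
    worker.join(timeout=timeout_s)
    return results
```

Calling `plc_cycle([1, 2])` returns one result per part in arrival order; the timeout on `result_q.get` is where a real controller would decide how long it can afford to wait before treating the part as uninspected.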

If a transfer with deterministic real-time behavior is necessary, the optional TSN (Time-Sensitive Networking) can be enabled so that OPC UA is transported over TSN. In image processing applications, TSN should be adopted on Gigabit Ethernet (GigE). This allows, for example, a robot’s position to be set precisely in relation to the acquired image, giving direct access to the robot’s motion control.

Results

Thanks to the general information model and the state machine with defined and consistent semantics, integration of image processing systems into existing automation systems is now markedly simplified. OPC Machine Vision will enable accelerated development and configuration of machine vision systems by virtue of generic applicability and scalability. The specification eases their integration into devices, controls, human-machine interfaces, and the software environment. The bottom line is faster market availability and faster implementation of new applications.

Expert knowledge is no longer required for implementation or use. Hardware can be saved by using smart cameras with integrated sensors, optics, lighting, processors, and power supplies. System integrators are still required for the setup of the entire system and its integration with a variety of different sensors. Embedded image processing systems combined with OPC Machine Vision and embedded GenICam standards will be strongly integrated into the Industry 4.0 landscape, playing a key role in the future.

View more information on OPC Machine Vision here: bit.ly/VSD-OPCUA.

Martin Cassel is an Editor at Silicon Software (Mannheim, Germany; https://silicon.software)