IP cores boost machine vision system performance

Integrating IP cores directly into field programmable gate arrays (FPGAs) provides ready-made functionality for systems integrators and camera manufacturers. This includes image processing functions such as contrast enhancement and noise reduction, as well as implementations of machine vision interface standards for cameras and image acquisition devices. Using IP cores can save time and money because developers do not have to write that functionality from scratch in VHDL or Verilog, the complex hardware description languages used to program FPGAs.

IP cores for designing machine vision systems

JPEG compression is one example of why someone might use an IP core instead of programming an FPGA from scratch. To build JPEG compression in-house, a developer must understand the JPEG algorithm and how to implement it in the FPGA. Such an implementation also requires simulation, verification, and integration into a platform, says Ray Hoare, President and CEO of Concurrent EDA (Pittsburgh, PA, USA; www.concurrenteda.com), an FPGA design company.

“It’s also less expensive to buy the JPEG compression core from someone and integrate it into the frame grabber,” says Hoare.
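To give a sense of what "understanding the JPEG algorithm" involves, the sketch below shows the core transform step of baseline JPEG: a 2-D discrete cosine transform (DCT-II) of each 8x8 pixel block, followed by quantization. It is a minimal, unoptimized illustration, not Concurrent EDA's design. The uniform quantizer step of 16 is a hypothetical stand-in for the 8x8 quantization tables the standard actually uses, and zig-zag ordering, entropy coding, and color conversion are omitted. A hardware core would more likely use a fast, fixed-point DCT factorization than this direct quadruple loop.

```python
import math


def dct8x8(block):
    """Forward 2-D DCT-II of one 8x8 block of 8-bit samples.

    Applies JPEG's level shift (subtract 128) before transforming.
    x, y index rows and columns of the block; u, v index the matching frequencies.
    """
    def c(k):
        return 1 / math.sqrt(2) if k == 0 else 1.0

    coeffs = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            s = 0.0
            for x in range(8):
                for y in range(8):
                    s += ((block[x][y] - 128)
                          * math.cos((2 * x + 1) * u * math.pi / 16)
                          * math.cos((2 * y + 1) * v * math.pi / 16))
            coeffs[u][v] = 0.25 * c(u) * c(v) * s
    return coeffs


def quantize(coeffs, step=16):
    """Uniform quantizer with a hypothetical step; JPEG uses an 8x8 table of steps."""
    return [[round(f / step) for f in row] for row in coeffs]


# A flat block: all of its energy lands in the DC coefficient coeffs[0][0].
flat = [[136] * 8 for _ in range(8)]
q = quantize(dct8x8(flat))
print(q[0][0], sum(abs(val) for row in q for val in row))
```

For the flat block of value 136, the DC coefficient is 64 before quantization and 4 after, and every other coefficient rounds to zero, which is exactly the redundancy JPEG exploits on smooth image regions.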

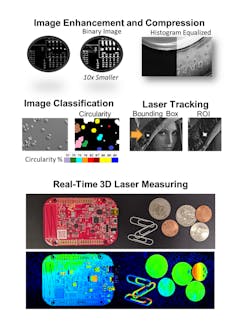

Common image processing IP cores improve image quality, reduce image noise, classify images, and adapt to lighting or motion (Figure 2). Another benefit for end users who deploy IP cores lies in handling high data rates, says Hoare.

“If you have four CoaXPress 2.0 lanes running at 12.5 Gbps per lane but the camera produces more data than this, the data must be processed,” he says. “If all this information is sent to the frame grabber and the PC is running software, it may not be able to keep up with the data rate. One option is waiting for the data or lowering the frame rate, but keeping the data in the frame grabber or camera enables the system to keep up with the data rate.”
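Hoare's example can be checked with simple arithmetic: four lanes at 12.5 Gbps give 50 Gbps of raw line rate. The sketch below compares a sensor's pixel payload with what such a link can carry. The sensor resolution, bit depth, and frame rate are hypothetical, and the 0.8 coding-efficiency factor is an assumption reflecting 8b/10b line coding; packet and protocol overhead are ignored, so real usable bandwidth is somewhat lower.

```python
def camera_data_rate_gbps(width, height, bits_per_pixel, fps):
    """Raw pixel payload generated by the sensor in Gbit/s (no protocol overhead)."""
    return width * height * bits_per_pixel * fps / 1e9


def link_payload_gbps(lanes, line_rate_gbps=12.5, coding_efficiency=0.8):
    """Approximate usable CoaXPress capacity.

    coding_efficiency=0.8 is an assumption (8b/10b line coding); headers and
    other protocol overhead are ignored, so the real figure is lower.
    """
    return lanes * line_rate_gbps * coding_efficiency


# Hypothetical 26-megapixel (5120 x 5120), 12-bit sensor at 200 frames/s.
needed = camera_data_rate_gbps(5120, 5120, 12, 200)
available = link_payload_gbps(4)
print(f"sensor produces {needed:.1f} Gbps, link carries about {available:.1f} Gbps")
if needed > available:
    # Either lower the frame rate or reduce the data before it reaches the
    # bottleneck, i.e. process it in the camera or frame grabber.
    print(f"data must shrink by a factor of at least {needed / available:.2f}")
```

Here the hypothetical sensor generates roughly 62.9 Gbps against about 40 Gbps of usable capacity, so either the frame rate drops or an IP core reduces the data before it reaches the bottleneck.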

Machine vision system examples

In a recent life sciences imaging application, a client needed to find all cells with a high degree of circularity, which indicated a specific abnormality. By implementing a custom IP core in the frame grabber, the machine vision system could identify the cells in the image and colorize them based on circularity, helping the client quickly spot these cells.
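The article does not say how circularity was measured in this project. A common definition is 4πA/P², where A is an object's area and P its perimeter; it equals 1 for a perfect circle and falls toward 0 for elongated or ragged shapes. The sketch below assumes each cell has already been segmented into a polygon outline (segmentation is not shown) and uses a hypothetical threshold of 0.9 and hypothetical overlay colors. On real pixel outlines the perimeter estimate is sensitive to jagged boundaries, so any threshold would need tuning on real data.

```python
import math


def polygon_area(points):
    """Shoelace formula; points are (x, y) vertices listed in order."""
    s = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]
        s += x0 * y1 - x1 * y0
    return abs(s) / 2.0


def polygon_perimeter(points):
    """Sum of edge lengths around the closed outline."""
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))


def circularity(points):
    """4*pi*A / P**2: 1.0 for a perfect circle, smaller for less round shapes."""
    p = polygon_perimeter(points)
    return 4 * math.pi * polygon_area(points) / (p * p)


def overlay_color(c, threshold=0.9):
    """Hypothetical color coding: highly circular cells in red, the rest in green."""
    return (255, 0, 0) if c >= threshold else (0, 255, 0)


square = [(0, 0), (1, 0), (1, 1), (0, 1)]
round_cell = [(10 * math.cos(2 * math.pi * k / 64), 10 * math.sin(2 * math.pi * k / 64))
              for k in range(64)]
for name, outline in (("square", square), ("round cell", round_cell)):
    c = circularity(outline)
    print(name, round(c, 3), overlay_color(c))
```

The square scores π/4 (about 0.785) and is drawn green, while the 64-sided near-circle scores about 0.999 and is drawn red.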

Laser tracking, whether for defense or manufacturing, is another example of when IP cores add value. In applications requiring laser tracking, immediate feedback is necessary. Processing must be done as quickly as possible, even before the next frame comes in, because adjustments are required immediately, says Hoare. In a recent additive manufacturing application, an IP core provides feedback for closed-loop laser power control. As the laser melts powdered metal into solid metal, the machine vision system counts the number of pixels above a certain brightness threshold. When the process is working correctly, the system sees a constant number of such pixels in the area the laser heats, says Hoare. If the laser heats too large an area, the metal starts to boil and the laser power must be reduced. If the number of pixels drops too low, the laser power is increased.
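The feedback loop described above can be sketched in a few lines: count the pixels above a brightness threshold in each frame, then nudge the laser power down if the hot area is too large and up if it is too small. Everything numeric here is hypothetical: the threshold, the target count, the deadband (which keeps the power from toggling on every frame), the step size, and the 12-bit power command range. A production controller might use a proportional or PID law rather than fixed steps; this is only the simplest version of the idea.

```python
def hot_pixel_count(frame, threshold):
    """Number of pixels brighter than threshold: a proxy for the heated area."""
    return sum(1 for row in frame for pixel in row if pixel > threshold)


def adjust_power(power, count, target, deadband, step, p_min=0, p_max=4095):
    """Fixed-step correction with a deadband around the target count.

    Too many hot pixels means too much area is heated (risk of boiling), so
    power goes down; too few means power goes up. The result is clamped to a
    hypothetical 12-bit command range [p_min, p_max].
    """
    if count > target + deadband:
        power -= step
    elif count < target - deadband:
        power += step
    return min(p_max, max(p_min, power))


frame = [[0, 200, 220],
         [210, 50, 230],
         [240, 250, 10]]
count = hot_pixel_count(frame, threshold=100)
power = adjust_power(2000, count, target=4, deadband=1, step=16)
print(count, power)
```

In hardware, the count could be accumulated as pixels stream in, so the correction is ready as soon as the last pixel of a frame arrives, before the next frame begins.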

Deep learning applications also require heavy lifting in terms of processing power. While deep learning training almost always occurs on a combination of GPUs and CPUs, trained deep learning networks can be deployed in IP cores, according to Hoare.

“IP cores are a good option for deep learning, especially when power is factored in. It isn’t really possible to put a 250-watt GPU into a camera,” he says. “But for image processing, when dealing with 10- or 12-bit data, using IP cores allows for a more power-efficient, faster network.”
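One reason narrow data helps: a network running on 10- or 12-bit pixels with small integer weights can use integer multiply-accumulate (MAC) units sized to the worst case, rather than 32-bit floating-point arithmetic. The sketch below computes that worst-case accumulator width for a single 3x3 convolution. The 8-bit signed weights and 3x3 kernel are assumptions chosen for illustration; the article does not describe how Concurrent EDA's cores quantize their networks.

```python
def signed_bits_needed(lo, hi):
    """Smallest two's-complement width that holds every integer in [lo, hi]."""
    bits = 1
    while not (-(1 << (bits - 1)) <= lo and hi <= (1 << (bits - 1)) - 1):
        bits += 1
    return bits


def accumulator_bits(pixel_bits, weight_bits, taps):
    """Worst-case accumulator width for unsigned pixels times signed weights."""
    pix_max = (1 << pixel_bits) - 1
    w_min = -(1 << (weight_bits - 1))
    w_max = (1 << (weight_bits - 1)) - 1
    lo = taps * pix_max * w_min  # every pixel at full scale, every weight most negative
    hi = taps * pix_max * w_max  # every pixel at full scale, every weight most positive
    return signed_bits_needed(lo, hi)


for pixel_bits in (10, 12):
    width = accumulator_bits(pixel_bits, weight_bits=8, taps=9)
    print(f"{pixel_bits}-bit pixels, 8-bit weights, 3x3 kernel: {width}-bit accumulator")
```

Under these assumptions, 12-bit pixels need a 24-bit accumulator and 10-bit pixels a 22-bit one. Integer datapaths of that width are generally much cheaper in FPGA logic and power than floating-point units.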

IP cores speed time to market

Camera manufacturers use IP cores to decrease time to market, shortening the time it takes to bring the latest camera functionality to customers. One such example involves implementing machine vision interface standards in cameras (Figure 1). Companies such as Euresys (Angleur, Belgium; www.euresys.com), FRAMOS (Taufkirchen, Germany; www.framos.com), Kaya Instruments (Nesher, Israel; www.kayainstruments.com), and Pleora Technologies (Kanata, ON, Canada; www.pleora.com) offer such IP cores.

Integrating standards such as GigE Vision, USB3 Vision, and CoaXPress into a camera's back end is a lot of work. Using IP cores allows camera manufacturers to focus on the primary design task of integrating and controlling the sensor to create the best possible image, suggests Mike Cyros, Vice President of Sales and Support, Americas, at Euresys.

“Manufacturers can plug IP cores into their design and save time in adhering to standards, as the IP core supplier takes care of the complexity of the implementation,” he says. “As a result, IP cores allow camera companies to introduce the latest cameras and sensors to market quickly, which puts the cameras into the hands of the end user.”

Kaya Instruments’ CoaXPress IP cores have been successfully implemented in a range of FPGA families and support link speeds from CXP-1 to CXP-12. The cores were developed to shorten the development cycle, according to Michael Yampolsky, Founder and CEO.

“In terms of choosing to develop the IP core or buy it, two considerations must be made: timeliness and budget,” he says. “Building IP cores from scratch can take months or even years, depending on the complexity of the project. If you don’t have in-house developers, the cost of custom development is difficult to estimate but is most definitely expensive.”
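The CXP-1 to CXP-12 range quoted for Kaya's cores corresponds to the CoaXPress speed grades from 1.25 Gbps to 12.5 Gbps per lane. When sizing a camera design, one might pick the slowest grade that still carries the sensor's data over the available lanes, as in the sketch below. The function name is hypothetical, and the 0.8 efficiency factor assumes 8b/10b line coding while ignoring packet overhead, so the result should be read as the minimum grade required, not a guarantee.

```python
# CoaXPress speed grades and their per-lane line rates in Gbit/s.
CXP_LINE_RATE_GBPS = {
    "CXP-1": 1.25,
    "CXP-2": 2.5,
    "CXP-3": 3.125,
    "CXP-5": 5.0,
    "CXP-6": 6.25,
    "CXP-10": 10.0,
    "CXP-12": 12.5,
}


def slowest_sufficient_grade(required_gbps, lanes, coding_efficiency=0.8):
    """Lowest speed grade whose aggregate payload covers required_gbps, or None.

    coding_efficiency=0.8 assumes 8b/10b line coding; protocol overhead is ignored.
    """
    for grade, rate in sorted(CXP_LINE_RATE_GBPS.items(), key=lambda item: item[1]):
        if lanes * rate * coding_efficiency >= required_gbps:
            return grade
    return None


for need in (15.0, 30.0, 50.0):
    print(need, "Gbps over 4 lanes ->", slowest_sufficient_grade(need, lanes=4))
```

With four lanes, 15 Gbps fits at CXP-5 and 30 Gbps needs CXP-10, while 50 Gbps exceeds even CXP-12 under these assumptions, which is where in-camera data reduction becomes necessary.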

About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.