Deep learning networks track up to 100 animals simultaneously

Simple models can offer insight into the collective movement of animals, but they may lack the detail needed to predict how individual animals move within a flock, herd, school, or swarm. Adding detail to such models can improve their ability to predict individual behavior within a collective, but the added complexity can make it difficult to draw simple insights from them.

To resolve this conundrum, researchers at the Champalimaud Center for the Unknown, a biomedical research center in Lisbon, Portugal (bit.ly/VSD-CCFT), developed two deep learning systems. The first, idtracker.ai, is described in the paper "idtracker.ai: tracking all individuals in small or large collectives of unmarked animals" [1] (bit.ly/VSD-IDTR). It is species-neutral and designed to track individual animals within large collectives.

The second system, described in the paper "Deep attention networks reveal the rules of collective motion in zebrafish" [2] (bit.ly/VSD-ZFSH), uses idtracker.ai to track fish in groups of 60 to 100 zebrafish, analyzes how pairs of fish interact, and uses that information to build a model that predicts how the fish will move. The results identify a simple set of parameters that describes the complex collective movement of zebrafish schools.

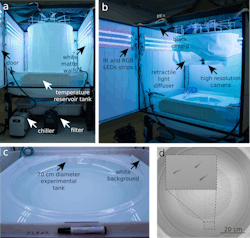

Two experimental setups captured video for this research. The first, designed for studying zebrafish, is built around a one-piece, circular, 70 cm-diameter water tank inside a box with matte white acrylic walls (Figure 1). Video was captured by an HT-20000M camera, a 20 MPixel monochrome 10 GigE model from Emergent Vision Technologies (Maple Ridge, BC, Canada; www.emergentvisiontec.com), fitted with a ZEISS (Oberkochen, Germany; www.zeiss.com) Distagon T* 28 mm lens and suspended 70 cm above the water surface.

Infrared and RGB light strips provide illumination. A custom-designed, retractable, cylindrical plastic light diffuser placed over the tank ensures homogeneous lighting without hotspots. Black fabric covering the top of the box prevents reflections from the ceiling of the room.

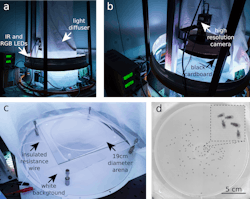

The second setup, built to study fruit flies, uses two arenas made of transparent acrylic (Figure 2). The first arena, 19 cm in diameter and 3 mm high, uses white insulated resistance wire from the Pelican Wire Company (Naples, FL, USA; www.pelicanwire.com/) to heat the walls and keep the flies from walking on them. Both top-view and bottom-view recordings were taken of this arena. The second arena, 19 cm in diameter and 3.4 mm high, has 18 mm-wide conical walls inclined at 11°.
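As a quick consistency check on the second arena's geometry, assume (the article does not say) that the 11° inclination is measured from the arena floor and that the 18 mm width runs along the sloped wall. The wall's vertical rise is then:

$$
h = w \sin\theta = 18\ \text{mm} \times \sin 11^\circ \approx 18\ \text{mm} \times 0.191 \approx 3.4\ \text{mm}
$$

That matches the arena's stated 3.4 mm height. If the 18 mm were instead the horizontal run, the rise would be $18\ \text{mm} \times \tan 11^\circ \approx 3.5\ \text{mm}$, so the figures are consistent under either reading.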

SMD5050-model RGB LEDs and SMD2835-model 745 nm infrared LEDs, placed in a ring around a cylindrical light diffuser, provided even lighting in the center of the setup. The fruit fly experiments used the same camera as the zebrafish experiments. Black cardboard placed around the camera reduced reflections from the arenas' glass ceilings, and Sigmacote SL2, a chemical solution from Sigma-Aldrich (St. Louis, MO, USA; www.sigmaaldrich.com), prevented the flies from walking on the ceilings.

Image data were processed on desktop computers running GNU/Linux Mint 18.1 64-bit, equipped with Intel Core i7-6800K or i7-7700K processors, 32 or 128 GB of RAM, NVIDIA (Santa Clara, CA, USA; www.nvidia.com) Titan X or GTX 1080 Ti GPUs, and 1 TB SSDs.

The idtracker.ai system builds on idTracker (www.idtracker.es), a system the researchers previously developed for identifying individual animals within groups. idTracker proved able to track individuals within small groups of 2 to 15 animals that did not often cross paths. It cannot, however, handle the more complex task of tracking 100 animals whose paths frequently intersect. idtracker.ai is designed to handle these larger groups.

When the researchers trained idtracker.ai with 3,000 images per animal, generated from 184 single-animal videos, the system identified up to 150 animals with >95% accuracy. In videos of large groups, however, the researchers did not have 3,000 images of each individual, so they developed three cascading protocols to gather the images needed to train the identification network.

Two convolutional neural networks (CNNs) make up idtracker.ai. Each video frame is first broken into blobs, collections of connected pixels that do not belong to the image background. The first CNN, called the crossing detector, then decides whether each blob represents an individual animal or a crossing of multiple animals. The entire video is then broken down into individual fragments, which are collections of images of the same animal, and crossing fragments, which are collections of images containing multiple animals. The training protocols use these fragments to train the second CNN, called the identification network.
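The blob step can be sketched with background subtraction and connected-component labeling. This is a minimal illustration, not idtracker.ai's code: the threshold value, the `detect_blobs` and `classify_blobs` names, and the area rule are hypothetical, and the area rule merely stands in for the crossing-detector CNN, which learns this decision from image data.

```python
import numpy as np
from scipy import ndimage


def detect_blobs(frame, background, threshold=30):
    """Return a label image and the pixel area of each blob.

    A blob is a set of connected pixels that differ from the background
    by more than `threshold` grey levels (a hypothetical default).
    """
    # Foreground mask: pixels that do not belong to the background.
    diff = np.abs(frame.astype(np.int32) - background.astype(np.int32))
    foreground = diff > threshold
    # 8-connectivity, so diagonal neighbours join the same blob.
    structure = np.ones((3, 3), dtype=bool)
    labels, n_blobs = ndimage.label(foreground, structure=structure)
    # Count pixels per label and drop label 0 (the background).
    areas = np.bincount(labels.ravel(), minlength=n_blobs + 1)[1:]
    return labels, areas


def classify_blobs(areas, single_animal_area, tolerance=1.5):
    """Hypothetical stand-in for the crossing detector: flag blobs much larger than one animal."""
    return ["crossing" if a > tolerance * single_animal_area else "individual"
            for a in areas]
```

For example, in a frame where one animal covers about 4 pixels, a 4-pixel blob and a 16-pixel blob would be classified as an individual and a crossing, respectively. A fixed area rule like this breaks down when animals vary in size or posture, which is one reason to learn the decision with a CNN instead.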

When tested on zebrafish, idtracker.ai identified individual fish with approximately 99.96% accuracy in a group of 60 fish and approximately 99.99% accuracy in groups of 100 fish. When tested on fruit flies, it achieved 99.997% identification accuracy in groups of up to 72 flies and >99.5% accuracy in groups of 80 to 100.

The system worked best at a resolution of around 300 pixels per animal. To test its robustness, the researchers intentionally degraded the images by reducing the resolution to 25 pixels per animal, blurring the images with Gaussian kernels, compressing the videos into MPEG-4 and H.264 formats, and degrading the lighting. Even under these conditions, idtracker.ai still performed well.
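The resolution and blur degradations can be approximated as below. This is a hedged sketch using SciPy, not the researchers' procedure; `degrade` and `scale_for_target_area` are hypothetical names, and the compression and lighting degradations are not shown. Because scaling each image axis by s changes the number of pixels covering an animal by roughly s², going from about 300 to 25 pixels per animal corresponds to a per-axis scale of √(25/300) ≈ 0.29.

```python
import numpy as np
from scipy import ndimage


def degrade(image, blur_sigma=0.0, scale=1.0):
    """Blur with a Gaussian kernel, then resample each axis by `scale`."""
    out = image.astype(np.float64)
    if blur_sigma > 0:
        # Gaussian blur; sigma is in pixels.
        out = ndimage.gaussian_filter(out, sigma=blur_sigma)
    if scale != 1.0:
        # Bilinear (order=1) resampling; "nearest" avoids dark borders at the edges.
        out = ndimage.zoom(out, scale, order=1, mode="nearest")
    return out


def scale_for_target_area(current_area_px, target_area_px):
    """Per-axis scale that shrinks an animal from current to target pixel area."""
    return (target_area_px / current_area_px) ** 0.5
```

A call such as `degrade(frame, blur_sigma=2.0, scale=scale_for_target_area(300, 25))` would produce one blurred, low-resolution test frame.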

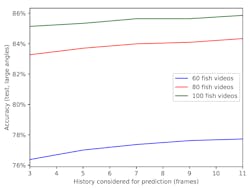

Using idtracker.ai and the same camera setup as in the first paper, the researchers recorded the position, velocity, and acceleration of individual fish within groups of 60, 80, or 100 juvenile zebrafish (Figure 3). The resulting trajectories were used to train a deep interaction network (bit.ly/VSD-DIN) to predict in which direction a fish would turn under a given set of conditions. A deep interaction network is a deep learning model that reasons about how objects in a complex system interact, supporting dynamic predictions and inferences about the system's abstract properties.
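Velocity, acceleration, and a turn label can be derived from tracked positions with finite differences, as in this sketch. The central-difference scheme and the use of the sign of the 2-D cross product of velocity and acceleration as a left/right label are assumptions for illustration; the paper may define turns differently. The function names are hypothetical.

```python
import numpy as np


def kinematics(positions, dt):
    """Velocity and acceleration of an (n_frames, 2) trajectory.

    np.gradient uses central differences in the interior and
    one-sided differences at the first and last frames.
    """
    velocity = np.gradient(positions, dt, axis=0)
    acceleration = np.gradient(velocity, dt, axis=0)
    return velocity, acceleration


def turn_direction(velocity, acceleration):
    """+1 for a left (counter-clockwise) turn, -1 for right, 0 for straight.

    Uses the sign of the 2-D cross product v x a. Assumes the y axis points
    up; in image coordinates (y pointing down) left and right swap.
    """
    cross = (velocity[..., 0] * acceleration[..., 1]
             - velocity[..., 1] * acceleration[..., 0])
    return np.sign(cross).astype(int)
```

The labels at the first and last two frames rely on one-sided differences and are less reliable, so they are usually dropped.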

The deep interaction network has two parts. The first part breaks the group's behavior down into interactions between pairs of fish to identify the variables that determine an individual fish's movement within the larger group. A fish's speed, its position relative to a neighboring fish, and the neighbor's speed were the most important variables in determining whether a pair of fish would turn toward one another.
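One way to encode the three variables named above is to express the neighbor's position in the focal fish's frame of reference, so the same relative configuration produces the same features wherever the pair is in the tank and whichever way it is heading. The function name, the feature order, and the choice to rotate the focal heading onto the +y axis are hypothetical; the article does not describe the network's exact inputs.

```python
import numpy as np


def pair_features(focal_pos, focal_vel, neigh_pos, neigh_vel):
    """Describe one neighbour in the focal fish's frame of reference.

    Returns [focal speed, neighbour x, neighbour y, neighbour speed]. The
    neighbour's relative position is rotated so the focal fish heads along +y;
    with a y-up coordinate system, negative x means the neighbour is on its left.
    """
    focal_speed = np.hypot(*focal_vel)
    heading = np.arctan2(focal_vel[1], focal_vel[0])
    # Rotate by (pi/2 - heading) so the focal velocity points along +y.
    angle = np.pi / 2 - heading
    c, s = np.cos(angle), np.sin(angle)
    rotation = np.array([[c, -s], [s, c]])
    rel = rotation @ (np.asarray(neigh_pos, dtype=float) - np.asarray(focal_pos, dtype=float))
    neigh_speed = np.hypot(*neigh_vel)
    return np.array([focal_speed, rel[0], rel[1], neigh_speed])
```

Computing these features for every neighbor of a focal fish gives the per-pair inputs that a pair-interaction model could consume.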

The faster a fish moved, the less likely a neighboring fish was to move toward it, and fish were more likely to move away from one another when one of them was traveling below the median speed of the group. The researchers also observed a tendency to move away from fish in the innermost part of the observation tank, align with fish in the middle area, or turn toward fish in the outermost area, and they determined how many neighboring fish meaningfully influenced an individual fish's movement.

The second part of the deep interaction network aggregated the results of the first part. With it, the researchers built a predictive model that determined, with up to 86% accuracy, which direction a fish would turn under different circumstances.
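The article does not detail how the two parts are combined. Given the paper's title, a minimal sketch assuming an attention-weighted average might look like this: each neighbor contributes a turning logit from the pair part and an importance score, the scores are normalized with a softmax, and the weighted sum is passed through a logistic function. In the actual model both inputs would come from trained networks; here they are plain arrays, and `aggregate_turn_probability` and the right-turn convention are hypothetical.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    z = np.exp(x - np.max(x))
    return z / z.sum()


def aggregate_turn_probability(pair_logits, attention_logits):
    """Combine per-neighbour turn votes into one probability of turning right.

    pair_logits: one turning logit per neighbour (output of the pair part).
    attention_logits: one unnormalised importance score per neighbour.
    """
    weights = softmax(np.asarray(attention_logits, dtype=float))
    combined = float(np.dot(weights, np.asarray(pair_logits, dtype=float)))
    # Logistic function maps the weighted logit to P(right turn).
    return 1.0 / (1.0 + np.exp(-combined))
```

Because the softmax weights sum to one, a neighbor with a much larger score dominates the decision, which is one way a fish could selectively attend to a few neighbors in the group.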

When the researchers built a simulation that included changes in all the other directions in which a fish could move, beyond turns to the left or right, the simulated fish generally behaved like the fish in the experiments. This demonstrated that the basic rules governing how an individual fish decides to move had been successfully extracted from the video footage.

These rules suggest that each fish dynamically selects information from the collective when deciding which direction to move, linking the movement of individual fish to the movement of the group.

1. Romero-Ferrero, F., Bergomi, M. G., Hinz, R. C., Heras, F. J., & de Polavieja, G. G. (2019). idtracker.ai: tracking all individuals in small or large collectives of unmarked animals. Nature Methods, 16(2), 179-182.

2. Heras, F. J., Romero-Ferrero, F., Hinz, R. C., & de Polavieja, G. G. (2019). Deep attention networks reveal the rules of collective motion in zebrafish. PLoS Computational Biology, 15(9), e1007354.

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.