Deep learning/AI is becoming more mainstream for machine vision and imaging applications. As this area matures, trends emerge. Some are very prevalent, while others are not as widespread. Regardless, more solutions employ deep learning/AI, and as they do, applications for vision/imaging extend farther and farther away from the factory floor.

Adoption Factors

There are different factors driving deep learning/AI adoption. Eli Davis, Lead Engineer at DeepView (Mt. Clemens, MI, USA; www.deepviewai.com), says, “We’re seeing the sales and installation process mature toward simpler installation at lower cost, which is what drives mainstream adoption. Five years ago, AI systems were high-cost and bespoke; in 2022 AI is low-cost and off the shelf.” He adds that there is demand to solve sorting applications with AI cameras because of the current labor shortage. “The more AI can sort parts, the more line workers can focus on higher value tasks,” he says.

Although deep learning/AI is allowing vision/imaging to be used away from traditional factory floor applications, it doesn’t mean that it isn’t used on the manufacturing floor. “While traditional machine vision systems operate reliably with consistent manufactured parts, it faces challenges as exceptional cases increase and types of defects vary,” says Eunseo Kim, Marketing Manager, Neurocle (Seoul, The Republic of Korea; www.neuro-cle.com). “Additionally, while they can tolerate slight variations in the appearance of objects due to magnification, rotation, arrangement, distortion, and others, it can very poorly operate in increasingly diverse and complex surface textures and image quality issues.” She explains that traditional machine vision systems also may not be suitable for inspecting variation and deviation between visually similar parts, so deep learning image analysis can be an alternative. “Deep learning-based image analysis combines the sophistication and flexibility of visual inspection with the reliability, consistency, and speed of computer systems. This solves the problem of demanding vision applications that could hardly be maintained by traditional machine approaches.”

“The latest trends in machine vision are bringing powerful deep learning and AI innovations to manufacturers worldwide for improving quality and efficiency by tailoring automated vision solutions to each production process or assembly,” adds Corey Merchant, VP-Americas, Kitov.ai (Petach Tikva, Israel; www.kitov.ai). “These systems ‘learn’ over time for constant improvement in performance, which eliminates unnecessary manpower for additional inspection and rework while reducing scrap rates.” He explains that AI enables powerful customized vision solutions to be implemented easier, faster, and with fewer resources than ever before. “AI not only improves machine vision performance but allows for more intuitive-based inspection system programming, implementation, and maintenance,” he says.

Luca Verre, the CEO and Co-founder of Prophesee (Paris, France; www.prophesee.ai), says, “The most prevalent trend we see is the movement of AI/ML-enabled intelligent sensing to the edge. This mandates a much more efficient and flexible approach to implementing AI and ML for all sensing applications—and especially vision. Of critical importance are the necessary improvements in power consumption and data processing required for that to happen, but other factors are also driving this shift.”

There are a variety of reasons behind this, he explains: the need for flexible installation and operation of devices in untethered and/or remote use cases; the demand for more “smart” sensing applications in the home, workplace, industry, and in public spaces; the evolution of more efficient AI algorithms (e.g., tinyML) that can process inputs locally; and data privacy, security and performance concerns related to dependency on the cloud.

Gil Abraham, Business Development Director for the Artificial Intelligence and Vision business unit at CEVA (Rockville, MD, USA; www.ceva-dsp.com), also comments on vision at the edge. “As machine vision continues its accelerated move to edge devices, we observe a trend of higher efficiency deep learning requirements, so deep learning computations are not just ‘brute force’ CNN based anymore. But rather, AI processors today are designed for increased efficiency using dedicated acceleration, network optimizations, and memory bottlenecks elimination.”

Simplification

Max Versace, Neurala (Boston, MA, USA; www.neurala.com) CEO and Co-founder, says that for deep learning/AI solutions to become widely available across enterprises, especially in manufacturing, they need to be easy to deploy. “While much progress has been made in making AI more understandable and easier to use, it is still an area where strong expertise is required,” he says. “In order to unleash an ‘AI revolution’ and really lower the barrier to AI adoption, it is critical to understand that we do not need a new coding language, but a true ‘no-AI-expertise-required’ tool that will be the basis for deep learning adoption across industries. This, and a reduction in the compute power needed to deploy AI in production lines from GPU to just CPU, will change the game for most manufacturers.” He compares what needs to happen with what occurred in the Internet arena when no-code platforms like WordPress unlocked Websites for everybody without HTML expertise. “Manufacturers need ‘WordPress for AI’ where applications can be developed, deployed, and maintained in minutes from anybody on the production floor and deploying on IPCs with just a CPU drastically reduces the cost of inspections, making it affordable to all,” he adds.

David Dechow, VP of Outreach and Vision Technology at Landing AI (Palo Alto, CA, USA; www.landing.ai), says, “Another critically important part of deep learning in automated inspection is the ease of use that comes from ‘democratizing’ the implementation of applications plant-wide and even enterprise-wide. Deep learning technology is accessible to everyone—from the machine learning engineer or system integrator to manufacturing experts on the shop floor—and is easy to configure and deploy without programming. Deep learning software provides a no- to low-code experience with a common workflow, standardized across all applications, that anyone on the team can use for every inspection project.”

Arnaud D. Lina, Director Engineering at Matrox® Imaging (Dorval, QC, Canada; www.matrox.com/imaging), which was recently acquired by Zebra Technologies (Lincolnshire, IL, USA; www.zebra.com), concurs that simplifying deep learning/AI is critically important. “Above all, simplifying the deep learning training process and experience is integral,” he says. “Key to deep learning is the training of a neural network model: the easier the data collection and preparation and the simpler the training configuration and interpretation, the more effective the outcomes.”

Bruno Ménard, Software Director, Teledyne DALSA, Vision Solutions, and Brandon Hunt, Product Manager, Teledyne DALSA, Vision Solutions (Waterloo, Ontario, Canada; www.teledynedalsa.com), discuss a recent technique called “Anomaly Detection” that allows training a model with very low effort. “Anomaly Detection models are trained on good samples only—which are often easy to obtain on the factory floor—and validated on just a few defective samples,” they comment. “Anomaly Detection does not require labeling the defects on the training images, saving users significant time.” Instead, defects are automatically located and output in a format called heatmaps at runtime.
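To make the idea concrete, the following minimal Python sketch trains on good samples only, uses a handful of known-defective samples just to pick a threshold, and produces a per-pixel heatmap at runtime. It is an illustration under stated assumptions, not Teledyne DALSA's algorithm: a simple per-pixel mean and standard deviation stands in for the neural network, and all function names, the `eps` parameter, and the tiny 3 × 3 "images" are hypothetical.

```python
from statistics import mean, pstdev


def fit_good_model(good_images):
    """Learn a per-pixel mean and standard deviation from defect-free images only."""
    rows, cols = len(good_images[0]), len(good_images[0][0])
    means = [[mean([img[r][c] for img in good_images]) for c in range(cols)]
             for r in range(rows)]
    stds = [[pstdev([img[r][c] for img in good_images]) for c in range(cols)]
            for r in range(rows)]
    return means, stds


def heatmap(image, model, eps=1.0):
    """Per-pixel deviation from the 'good' model; eps (assumed) avoids division by zero."""
    means, stds = model
    return [[abs(image[r][c] - means[r][c]) / (stds[r][c] + eps)
             for c in range(len(image[0]))]
            for r in range(len(image))]


def anomaly_score(hm):
    """Image-level score: the hottest pixel in the heatmap."""
    return max(max(row) for row in hm)


def pick_threshold(good_scores, defect_scores):
    """Validation step: place the threshold midway between the two groups."""
    return (max(good_scores) + min(defect_scores)) / 2


# Hypothetical 3x3 grayscale images: three good samples, one with a defect in the center.
GOOD_IMAGES = [
    [[100, 101, 99], [100, 100, 100], [99, 100, 101]],
    [[101, 100, 100], [99, 101, 100], [100, 99, 100]],
    [[99, 100, 101], [100, 99, 100], [101, 100, 99]],
]
DEFECTIVE = [[100, 100, 100], [100, 180, 100], [100, 100, 100]]

model = fit_good_model(GOOD_IMAGES)  # no defect labels are needed here
defect_map = heatmap(DEFECTIVE, model)
# For brevity the good scores reuse the training images; a held-out set would be better.
good_scores = [anomaly_score(heatmap(img, model)) for img in GOOD_IMAGES]
threshold = pick_threshold(good_scores, [anomaly_score(defect_map)])
```

In this sketch no one ever marks where the defect is. The defect location falls out of the heatmap, whose largest value sits at the center pixel of `DEFECTIVE`.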

“Many companies in the machine vision sector still have reservations about the new technology,” says Dr. Robert-Alexander Windberger, AI Specialist at IDS Imaging Development Systems (Obersulm, Germany; www.ids-imaging.com). “There is a lack of expertise and time to familiarize themselves in detail with the subject area. In contrast, the vision community is growing from the IoT sector and the startup scene. The new application areas and user groups inevitably result in different use cases and requirements. For the best possible support in the entire workflow from data set creation to training to running a neural network, the classic programming SDK is no longer sufficient. That is why we can see that for working with AI vision today, completely new tools are emerging that are used by very heterogeneous user groups without AI and programming knowledge. This improves the usability of the tools and lowers the barrier to entry, which is significantly accelerating the spread of AI-based image processing right now. Ultimately, AI is a tool for people and must therefore be intuitive and efficient to use.”

Data and Algorithms

Mateusz Barteczko, Lead Computer Vision Engineer, Zebra Technologies, explains that in recent years, there has been enough interest in deep learning that it is safe to say this technology has now become an integral part of machine vision. “Users have learned to trust it, they are aware of its capabilities and potential and thus, when choosing machine vision software, they look for a library containing a set of deep learning tools,” he says. In terms of current trends, Barteczko says that a few years ago, people were very concerned about the performance gap between traditional machine vision algorithms and deep learning solutions. “This is no longer the case,” he says. “The technology has matured, and its performance has improved greatly. Many deep learning projects nowadays do not require an expensive PC with a powerful GPU. Instead, they run on a CPU with execution times as low as 50 to 80 ms.”

Christian Eckstein, MVTec Software GmbH’s (Munich, Germany; www.mvtec.com) Product Manager, Deep Learning Tools, says, “A big and relevant trend is a focus on data as opposed to algorithms. The goal is to achieve better results with smaller, high-quality datasets to increase time to market or speed up deployment. This pragmatic approach shows that the field is maturing. Machine vision software manufacturers provide the tools to build data pipelines to achieve those datasets. Smaller datasets and faster deployment also mean a focus on unsupervised methods, like Global Context Anomaly Detection, that require little labeling and can yield impressive results very quickly.”

Svorad Stolc, CTO of the Sensors Division at Photoneo (Bratislava, Slovakia; www.photoneo.com) says, “There are two major areas of using AI in machine vision. On the one hand, machine learning (ML) can massively improve the quality of signals obtained from vision sensors compared to traditional machine vision algorithms. ML-driven methods enable better acquisition of 3D scans even in challenging light conditions or for materials that are normally difficult to scan, such as transparent or glossy surfaces or materials causing interreflections. The other area of using ML in machine vision is data comprehension that makes use of contextual information. However, AI is only as good as the quality of data used for its training. The higher quality of 3D data we have, the better results we can achieve with machine learning.”

Among several important trends for deep learning/AI, Milind Karnik, EVP of Engineering, Elementary ML (Los Angeles, CA, USA; www.elementaryml.com) cites the importance of appropriate data curation to optimize a model. “This comes from the fact that ‘more data’ is not always the right approach to generating DL/AI models in the world of machine vision,” he says. “It’s more important to have the ‘right data’ that best represents what will happen in the real world so as to generate the most accurate models with the highest efficacy and lowest false positive/false negative rates. This often involves curating the data to get to the right data from large available datasets.”
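For readers less familiar with the two rates Karnik mentions, the short Python sketch below shows how they are commonly computed on a labeled evaluation set. It assumes the usual convention that "positive" means "defective" (1) and "negative" means "good" (0); the function name and the eight example labels are hypothetical and not taken from Elementary.

```python
def error_rates(truth, predicted):
    """Return (false_positive_rate, false_negative_rate) for binary labels.

    Convention assumed here: 1 = defective (positive), 0 = good (negative).
    """
    pairs = list(zip(truth, predicted))
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # good part rejected
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # good part accepted
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # defect missed
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # defect caught
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return fpr, fnr


# Hypothetical evaluation set: three defective parts followed by five good parts.
truth = [1, 1, 1, 0, 0, 0, 0, 0]
predicted = [1, 1, 0, 0, 0, 0, 0, 1]
fpr, fnr = error_rates(truth, predicted)
```

Both rates are only as meaningful as the evaluation set. If that set does not reflect what the line actually produces, low numbers here say little about performance in production, which is the point of curating for the "right data."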

Cory Kress, Sr. Solution Architect, ADLINK Technology, Inc. (San Jose, CA, USA; www.adlinktech.com), comments, “Deep learning-based visual inspection has become really hot and popular across different industries. However, achieving accurate results on a computer vision task such as defect detection, requires fine-tuned, trained deep learning systems with labeled ground truth data. This entire process can be time-consuming and costly including finding a suitable deep learning acceleration platform for the job.”

Solutions

There are various solutions for deploying deep learning/AI that lend themselves toward the points listed above.

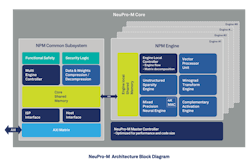

CEVA’s NeuPro-M™ (Figure 1) is a heterogeneous and secure AI/ML processor architecture for smart edge devices, available as a licensable IP core for SoC designs, and offers power efficiency of up to 24 tera ops per second per Watt (TOPS/Watt). Its CDNN software stack includes the NeuPro-M system architecture planning tool, neural network training optimizer tool, and CDNN compiler and runtime.

Neurocle provides three deep learning software packages (Figure 2): Neuro-T Auto Deep Learning Vision Trainer, Neuro-X Deep Learning Model Trainer for Experts, and Neuro-R Runtime API Engine. Neuro-T is deep learning vision software for people who have little understanding of coding and deep learning. Neuro-X is a deep learning model trainer for experts. Users can create optimized models by adjusting hyperparameters that give them greater control and flexibility. Neuro-R is runtime software that helps model deployment and supports optimized integration with various inference platforms including GPU and embedded processors.

Photoneo has released MotionCam-3D Color (Figure 3), a 3D sensor that enables area-based 3D scanning of scenes in motion in high resolution and submillimeter accuracy without motion artifacts and now also with color information. The camera combines 3D geometry, motion, and color, which opens up new possibilities, especially for AI applications and the recognition of products characterized not only by their 3D geometry but also by specific color features.

The current software release 2.6 for the AI vision system IDS NXT (Figure 4) focuses primarily on simplifying app creation. The initial phase in development is often one of the greatest challenges in the realization of a project. With the help of the new Application Assistant in IDS NXT lighthouse, users configure a complete vision app under guidance in just a few steps, which they can then run directly on an IDS NXT camera. With the block-based editor, which is also new, users can configure their own program sequences with AI image processing functions, such as object recognition or classification, without any programming knowledge.

Teledyne DALSA has announced that its Sapera™ Vision Software Edition 2022-05 is now available. Sapera Vision Software offers image acquisition, control, image processing, and AI functions to design, develop, and deploy high-performance machine vision applications. The new upgrades include enhancements to its AI training graphical tool, Astrocyte™ (Figure 5) and its image processing and AI libraries tool, Sapera Processing. As an example, for localization and classification of various types of knots in wood planks, Astrocyte can robustly locate and classify small knots 10 pixels wide in high-resolution images (2800 × 1024) using the tiling mechanism, which preserves native resolution. This update includes improved anomaly detection algorithms, live video acquisition from frame grabbers, and new functionality that delivers increased performance and a better user experience.
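The tiling idea can be sketched in a few lines of Python. Rather than shrinking a 2800 × 1024 image to fit a network input (which could reduce a 10-pixel knot to a couple of pixels), the image is cut into overlapping full-resolution tiles. This is only an illustration of the general technique: the tile size of 512, the overlap of 16 pixels, and the function names are assumptions, and the source does not describe how Astrocyte implements tiling internally.

```python
def tile_origins(length, tile, overlap):
    """Start offsets along one axis so tiles cover [0, length) with at least `overlap` shared pixels."""
    if tile >= length:
        return [0]
    stride = tile - overlap
    origins = list(range(0, length - tile, stride))
    origins.append(length - tile)  # last tile sits flush with the image edge
    return origins


def tile_boxes(width, height, tile=512, overlap=16):
    """Return (x0, y0, x1, y1) boxes at native resolution; no pixel is resampled.

    Assumes the image is at least `tile` pixels in each dimension.
    """
    return [(x, y, x + tile, y + tile)
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]


# Image size from the example above; the overlap is chosen to exceed the 10-pixel knot width
# so that a knot lying on a tile border still appears whole in at least one tile.
boxes = tile_boxes(2800, 1024)
```

Each box would be cropped and passed to the model separately, with detections mapped back to full-image coordinates by adding the tile's `x0` and `y0`.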

The Matrox Imaging MIL CoPilot application (Figure 6) features an interactive environment that provides the platform for training neural network models for use in machine vision applications. It delivers all the functionality needed for this task, so users can create and label the training image dataset; augment the image dataset, if necessary; and train, analyze, and test the neural network model.

Kitov.ai’s CorePlus (Figure 7) line of standalone vision systems combines deep learning, 2D and 3D imaging, novel and traditional machine vision algorithms, and intelligent robotic planning technologies and can scan larger part sizes in both offline and inline configurations. With Kitov.ai’s hybrid deep learning systems, operators set up a new inspection plan with a CAD file or by allowing the system to capture a full 3D scan of a reference part, from which the system generates a 3D digital twin model of the physical product. A CMOS camera with multiple brightfield and darkfield lighting elements in a photometric configuration then captures multiple 2D images to create the 3D model. The system can also work directly from a CAD file, which may contain manufacturing specifications as well; the system takes these tolerances into account automatically when programming the inspection routine.

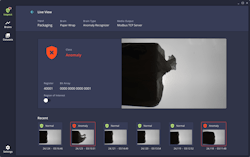

Landing AI launched LandingEdge (Figure 8), a deployment application within the company’s flagship platform, LandingLens. With LandingEdge, manufacturers can deploy deep learning visual inspection solutions to edge devices on the factory floor to better and more consistently detect product defects. LandingEdge extends the capability of LandingLens into even more manufacturing environments. With the new edge capabilities, LandingLens customers will more easily integrate with factory infrastructure to communicate with cameras, apply models to images, and make predictions to inform real-time decision-making on the factory floor.

Neurala recently added new product features to its vision inspection software suite VIA (Figure 9). They include the integration of Detector AI models into Neurala VIA Inspector, as well as the addition of the EtherNet/IP communication protocol to the solution. Neurala also announced the upcoming availability of support for PROFINET. In conjunction with the launch of Inspector’s Detector capability, which enables Neurala VIA to tackle use cases requiring part location, picking, and counting, the release of EtherNet/IP expands VIA’s ability to easily integrate into existing machinery, without the need for specialized knowledge.

ADLINK and Amazon recently announced the general availability of the Amazon Lookout for Vision Starter Kit (Figure 10). With this kit, system integrators can install, configure, and start training AI models within a matter of hours. Once the kit is set up, training data can be collected and self-labeled within the Lookout for Vision system, which simplifies model creation. The machine learning models can then be deployed to the starter kit at the edge, allowing defects to be detected and acted upon in real time. The kit also comes with a prevalidated deep learning acceleration platform based on NVIDIA Jetson, plus ADLINK Edge™ and AWS IoT Greengrass.

MVTec Software GmbH has released version 22.05 of its HALCON machine vision software (Figure 11), which offers “Global Context Anomaly Detection”. By detecting logical anomalies in images, HALCON 22.05 opens up new application areas and represents a further development of the deep learning technology of anomaly detection. Global Context Anomaly Detection can “understand” the logical content of the entire image. Like the existing anomaly detection in HALCON, Global Context Anomaly Detection requires only “good images” for training. The training data do not need to be labeled. The technology can thus detect completely new anomaly variants, such as missing, deformed, or incorrectly arranged components of an assembly.

Zebra Aurora™ Vision Studio 5.2 (Figure 12) features a new off-line mode and Filmstrip control, a brand-new Golden Template 3D tool, as well as support of multiple GPUs in Deep Learning inference and improved Deep Learning OCR. The 5.2 version of Zebra Aurora™ Vision Studio has entered the BETA stage and is available for download.

The DeepView X400 (Figure 13) features fully onboard artificial intelligence. The DeepView AI Camera enables production inspection, image storage, and new application training all from an intuitive Web browser user interface. It includes 6 CPUs, a 400-core GPU, 2 AI accelerators, and 1 TB storage. According to the manufacturer, this allows the camera to store eight jobs and up to one million images. Google Chrome connects to the camera’s IP address and grants access to the image capture and labeling, neural network training, and inspection job creation functions. This facilitates “train-on-the-fly” neural network improvement.

Prophesee has launched the HD Metavision® Evaluation Kit (Metavision® EVK4-HD), which allows developers of computer vision systems to evaluate the Sony IMX636ES HD stacked event-based vision sensor (Figure 14). The kit comes with the Metavision® Intelligence Suite, a set of software tools for application development, including 95 algorithms, 67 code samples, and 11 ready-to-use applications. The kit also includes a C-mount 1/2.5’’ lens, C-CS lens mount adapter, tripod, and USB-C to USB-A cable. It has a maximum system bandwidth of 1.6 Gbps and draws a maximum of 1.5 W, supplied via USB.

FabImage® Studio Professional (Figure 15), from Opto Engineering (Mantova, Italy; www.opto-e.com), is a software development environment equipped with an intuitive graphical interface for visual programming, more than 1,000 functions, and an HMI designer. It features a deep learning add-on that has a set of five ready-made tools that are trained with 20-50 sample images, and which then detect objects, defects, or features automatically. Internally, it uses large neural networks designed and optimized for use in industrial vision systems. Typical training time on a GPU is 5-15 minutes (training time can be different depending on the hardware choice and image size). Inference time varies depending on the tool and hardware between 5 and 100 ms per image.

Leveraging machine vision, edge computing, and secure cloud-based machine learning models, Elementary’s proprietary vision platform (Figure 16) can perform notoriously difficult or impossible quality inspections from any remote location with an Internet connection. The platform has been streamlined to allow anyone to quickly learn to train inspection models without typing a single line of code. Elementary machine learning offers multiple tools that can solve inspection needs ranging from foreign object detection, anomaly detection, label placement, and presence checks to part classification, code reading, and OCR text reading.

Companies Mentioned

ADLINK Technology

San Jose, CA, USA

www.adlinktech.com

CEVA

Rockville, MD, USA

www.ceva-dsp.com

DeepView

Mt. Clemens, MI, USA

www.deepviewai.com

Elementary ML

Los Angeles, CA, USA

www.elementaryml.com

IDS Imaging

Obersulm, Germany

www.ids-imaging.com

Kitov.ai

Petach Tikva, Israel

www.kitov.ai

Landing AI

Palo Alto, CA, USA

www.landing.ai

Matrox Imaging

Dorval, QC, Canada

www.matrox.com/imaging

MVTec

Munich, Germany

www.mvtec.com

Neurala

Boston, MA, USA

www.neurala.com

Neurocle

Seoul, The Republic of Korea

www.neuro-cle.com

Opto Engineering

Mantova, Italy

www.opto-e.com

Photoneo

Bratislava, Slovakia

www.photoneo.com

Prophesee

Paris, France

www.prophesee.ai

Teledyne DALSA

Waterloo, ON, Canada

www.teledynedalsa.com

Zebra Technologies

Lincolnshire, IL, USA

www.zebra.com

About the Author

Chris Mc Loone

Editor in Chief

Former Editor in Chief Chris Mc Loone joined the Vision Systems Design team as editor in chief in 2021. Chris has been in B2B media for over 25 years. During his tenure at VSD, he covered machine vision and imaging from numerous angles, including application stories, technology trends, industry news, market updates, and new products.

![Figure 14: Prophesee has launched the HD Metavision® Evaluation Kit (Metavision® EVK4-HD). [Photo courtesy of Prophesee.]](https://img.vision-systems.com/files/base/ebm/vsd/image/2022/06/2208VSD_pf_mcl_p13.62b22b27a8392.png?auto=format,compress&fit=max&q=45&w=250&width=250)