ATM networks transfer medical images at high speeds

By John H. Mayer, Contributing Editor

Replacing traditional film-based light boxes with computer images has been a goal of the medical industry for many years. Storing, distributing, and viewing radiological images in digital form offers a number of advantages, from reduced film and archiving costs to simpler data access from local and remote sites.

Over the past decade, developers building picture archiving and communications systems (PACSs) have faced many obstacles. While numerous high-speed networks support medical-imaging applications, most use proprietary hardware and protocols. To overcome the limitations of poor network performance, many systems have resorted to image prefetching or data-compression techniques.

In many cases, these systems constrained radiologists to particular workstations or application-specific hardware and software. In addition, the absence of standards for image acquisition and transmission presented problems when retrieving images from different digital imaging modalities.

New opportunities

The emergence of high-bandwidth asynchronous-transfer-mode (ATM) networking hardware and the evolution of the Digital Imaging and Communications in Medicine (DICOM) 3.0 standard allowed PACS networks to be built more cost-effectively (see "DICOM standard simplifies interoperability," p. 30). "Proprietary solutions lock you to a particular vendor and keep you from using the network for other things," notes Louis Humphrey, director of radiology informatics at the department of radiology at the Duke University Medical Center (Durham, NC). "We needed a system that could be integrated with other systems in the department, and so it was important that standardized, off-the-shelf components were used."

With ATM switches and network interface cards now available, Duke's network-development team decided that suitable hardware and networking equipment existed to build a standard, off-the-shelf network (see "ATM delivers performance and scalability," p. 31). The department needed to transmit images ranging from 0.25 to more than 10 Mbytes, and, regardless of size, images had to be transmitted and displayed quickly.

"Studies have shown that a radiologist is willing to wait for about 1.6 seconds to display an image," said Humphrey. "After that, they don't want to wait, so we developed a system that displays an 8-Mbyte image in less than 1.5 seconds." Early estimates placed average loads on the network between 500 and 1000 Kbytes/s during working hours, while burst rates might reach 5 Mbytes/s.

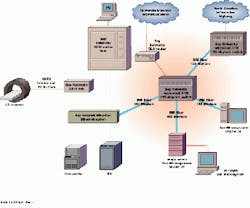

To form the heart of the ATM network, Duke chose a LattisCell ATM switch from Bay Networks (Billerica, MA) and spent six months ensuring it would meet performance requirements (see figure). Designed to serve as a high-speed desktop connectivity system, the LattisCell is a 16-port switch that supports data and imaging applications at speeds up to OC-3 (155 Mbit/s). The switch uses the Bay Networks Fast Matrix architecture to deliver 5-Gbit/s internal bandwidth for switching between input and output ports. Multiple parallel internal paths between each input and output port ensure fast data transmission, while embedded hardware-based multicast and broadcast capabilities support existing data protocols and applications.

For bursty communications streams, the LattisCell features output buffers on each port that can hold up to 1k cells. Each output buffer has dual queues, one for high-priority and one for normal-priority traffic, that enable each port to handle multiple traffic types, including real-time images, audio, and data. A priority buffer stream enables the LattisCell to support all five Quality of Service classes defined in the ATM Forum's User Network Interface (UNI) 3.0 specification.
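The dual-queue output buffer described above can be pictured with a minimal Python sketch. The article does not say how the LattisCell schedules its two queues or shares buffer space between them, so the strict-priority service order, the single shared 1024-cell limit, and the class name `OutputPort` are all illustrative assumptions rather than a description of the switch.

```python
from collections import deque


class OutputPort:
    """Hypothetical model of one switch output port with two cell queues."""

    def __init__(self, capacity_cells=1024):
        # Assumption: "1k cells" is read as 1024 cells shared by both queues.
        self.capacity = capacity_cells
        self.high = deque()    # high-priority cells (e.g., real-time images)
        self.normal = deque()  # normal-priority cells (e.g., bulk data)

    def enqueue(self, cell, high_priority=False):
        """Buffer a cell; return False if the port buffer is full (cell dropped)."""
        if len(self.high) + len(self.normal) >= self.capacity:
            return False
        (self.high if high_priority else self.normal).append(cell)
        return True

    def dequeue(self):
        """Send the next cell, always draining high priority first (assumed policy)."""
        if self.high:
            return self.high.popleft()
        if self.normal:
            return self.normal.popleft()
        return None


port = OutputPort()
port.enqueue("data-1")
port.enqueue("image-1", high_priority=True)
port.enqueue("data-2")
print([port.dequeue() for _ in range(3)])  # ['image-1', 'data-1', 'data-2']
```

In this sketch the image cell overtakes the data cell that arrived before it, which is the behavior a priority queue is meant to give time-sensitive traffic during a burst.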

Ethernet connectivity

The network also had to offer connectivity to existing Ethernet networks because the Duke University Medical Center linked its CT scanners over a 10Base-T Ethernet network. "Images are not produced as quickly as they need to be viewed," says Humphrey. "If you have an Ethernet network, images can be acquired at a slower rate than they need to be displayed. Because some systems are already connected to Ethernet, there was no need to integrate ATM into CT or MRI scanners."

To weave the Ethernet network into the medical center's ATM system, the team used an EtherCell Ethernet-to-ATM switch from Bay Networks. The device multiplexes twelve 10Base-T ports onto one 155-Mbit/s SONET/SDH ATM port and provides support for LAN emulation and UNI signaling.
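A quick calculation shows why twelve 10Base-T ports fit on one ATM uplink. The sketch below uses only the nominal rates quoted above; it ignores ATM cell and LAN-emulation overhead, and the function name `worst_case_load` is hypothetical.

```python
def worst_case_load(ports, port_mbit_s, uplink_mbit_s):
    """Return (aggregate offered load in Mbit/s, fraction of uplink used)
    if every Ethernet port transmits at its nominal rate at once."""
    aggregate = ports * port_mbit_s
    return aggregate, aggregate / uplink_mbit_s


aggregate, fraction = worst_case_load(ports=12, port_mbit_s=10, uplink_mbit_s=155)
print(f"{aggregate} Mbit/s offered, {fraction:.0%} of the uplink")
# 120 Mbit/s offered, 77% of the uplink
```

Even with every port saturated, the nominal Ethernet load stays below the nominal uplink rate, although protocol overhead would narrow that margin in practice.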

To interface to the rest of the network, Duke turned to Bay Networks' Connection Management System (CMS), a software application that coordinates network traffic within the LattisCell. Installed in a Model 5740 Switch Control Module running in a Bay Networks Model 5000AH hub, the CMS manages connections throughout the network and establishes permanent or switched virtual channels between stations on a point-to-point, point-to-multipoint, or true multicast basis. To allow shared-media clients to participate in the system's virtual networks and benefit from the bandwidth of the ATM switch without altering existing hardware or software, Duke relied on Bay Networks' Multicast Server (MCS) software. The MCS supports the network side of the ATM Forum's LAN Emulation (LANE) specifications, which allow existing LAN applications or protocols to run over the ATM network.

Standard platforms

For image servers, Duke opted for Sparc 20 and UltraSparc workstations from Sun Microsystems (Mountain View, CA). Each is supported by a Sun Disk array (one 30 Gbyte, the other 60 Gbyte). A 200-Mbyte/s Fibre Channel serial bus provides a link between each array and its workstation.

Reading images off the array using the standard UNIX file system presented a potential bottleneck. Humphrey and his colleagues developed a custom file system that allowed the server to read images faster than it could using the standard capabilities of Sun's Solaris operating system. "That helped us avoid disk fragmentation," explains Humphrey.

Sun Sparc20s and UltraSparcs were chosen to display the images. The workstations link to the network via 155-Mbit/s ATM network interface cards from either Interphase (Dallas, TX) or Efficient Networks (Dallas, TX). Each platform uses a monochrome 2000-line monitor from MegaScan Technology (Westford, MA).

Putting images on-screen

To deliver the 2k × 2k resolution required for diagnostic-quality images, the team turned to Dome Imaging Systems (Waltham, MA). Dome manufactures single-board display subsystems for imaging applications. The Duke team used Dome's Md5/Sun Sbus display board because it was capable of displaying 2k × 2.5k-resolution, diagnostic-quality images. Using a 10-bit digital-to-analog converter (DAC), Dome's board can display up to 256 shades of gray from a palette of 1024. An on-board custom accelerator application-specific integrated circuit supports copy functions to maximize X-window GUI performance.
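Displaying 256 shades chosen from a palette of 1024 is commonly done with a lookup table that maps each 8-bit pixel value to a 10-bit DAC level. The Python sketch below illustrates that idea only; the article does not describe how Dome's board is programmed, so the linear ramp, the function names, and the window limits are assumptions.

```python
def linear_lut(low=0, high=1023, entries=256):
    """Build a lookup table that spreads `entries` pixel values
    evenly across the 10-bit DAC levels from `low` to `high`."""
    span = high - low
    return [low + round(i * span / (entries - 1)) for i in range(entries)]


def apply_lut(pixels, lut):
    """Translate 8-bit pixel values into 10-bit DAC levels."""
    return [lut[p] for p in pixels]


full_range = linear_lut()        # 256 shades spanning all 1024 levels
window = linear_lut(256, 511)    # 256 shades from a narrower band of gray levels

print(apply_lut([0, 255], full_range))  # [0, 1023]
print(apply_lut([0, 255], window))      # [256, 511]
```

Swapping the table changes which 256 of the 1024 gray levels appear on screen without touching the stored pixel data.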

Dome's board, DICOM-compliant software, and the 155-Mbit/s ATM network together provide the capability to deliver diagnostic-quality images in less than 1.5 seconds. With sustained data throughputs from the image server to the display workstation of more than 100 Mbit/s, Duke University has met all its immediate performance goals.
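The figures quoted in the article can be checked with some rough arithmetic. The sketch below assumes 1 Mbyte = 10^6 bytes, counts only raw image bytes, and accounts for ATM's 5-byte header on each 53-byte cell; it ignores SONET framing, adaptation-layer overhead, disk reads, and display time, so the results are lower bounds on transfer time rather than measurements. The function names are hypothetical.

```python
CELL_BYTES = 53      # size of one ATM cell
PAYLOAD_BYTES = 48   # bytes of each cell that carry data


def required_mbit_per_s(image_bytes, deadline_s):
    """Throughput needed to move an image within a deadline."""
    return image_bytes * 8 / deadline_s / 1e6


def transfer_seconds(image_bytes, throughput_mbit_s):
    """Time to move an image at a given payload throughput."""
    return image_bytes * 8 / (throughput_mbit_s * 1e6)


image = 8 * 10**6  # the 8-Mbyte image, assuming decimal megabytes

print(f"{required_mbit_per_s(image, 1.5):.1f} Mbit/s needed for 1.5 s")  # 42.7
print(f"{transfer_seconds(image, 100):.2f} s at 100 Mbit/s sustained")   # 0.64

# Payload share of a nominal 155-Mbit/s link after the cell-header tax.
payload_rate = 155 * PAYLOAD_BYTES / CELL_BYTES
print(f"{transfer_seconds(image, payload_rate):.2f} s at cell-limited line rate")  # 0.46
```

Under these assumptions, a sustained 100 Mbit/s leaves well over half of the 1.5-second budget for reading the image from disk and painting it on screen.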

This network integrates a high-speed asynchronous-transfer-mode switch with imaging workstations, storage devices, and film printers. Images are transferred from CT scanners over 10-Mbit/s Ethernet to Sun workstations equipped with high-resolution monitors.

DICOM standard simplifies interoperability

Key to successful implementation of an image server by Duke University (Durham, NC) was use of image-storage, acquisition, and display components that comply with the Digital Imaging and Communications in Medicine (DICOM) 3.0 standard. DICOM simplifies digital communication between diagnostic and therapeutic equipment from various manufacturers by establishing a standard for the transfer of radiological images and other medical information.

Efforts to develop such a standard began in 1983 when members of the American College of Radiology (ACR) and the National Electrical Manufacturers Association (NEMA) formed a joint committee. The original effort focused on specifications of a hardware interconnect and a dictionary of data elements needed for proper image display and interpretation. A second effort in 1988 added new data elements but failed to include an interface between imaging devices and networks.

With DICOM 3.0, ACR and NEMA re-engineered the problem, turning to object-oriented design to develop an interface. Object-oriented techniques provide a way to describe not only the information, but what to do with the information or how programs can access the information about a collection of objects. Object-oriented design associates methods with defined objects, and DICOM 3.0 uses that capability to define services such as "store image" or "get patient information." These services are implemented in DICOM using constructs known as operations or notifications. The standard defines a set of generic operations and notifications and calls them DICOM message service elements. Together an information object and service elements comprise a service-object pair (SOP). SOPs represent elemental units of functionality under DICOM 3.0. By specifying a SOP class to which an implementation must conform and the role a conforming device must support, one can define a precise subset of DICOM functionality including the types of messages to exchange, the data that will be transferred in those messages, and the semantic context within which those data are understood.
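The relationship between information objects, service elements, SOP classes, and device roles can be sketched as a small data model. This is a simplified illustration, not the DICOM data model itself: the Python class names and the `can_exchange` helper are hypothetical, while C-STORE is one of the standard's message service elements and SCU/SCP (service class user/provider) are the two roles it defines.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    SCU = "service class user"      # the side that requests the service
    SCP = "service class provider"  # the side that performs the service


@dataclass(frozen=True)
class SOPClass:
    """A service-object pair: one information object plus its service elements."""
    information_object: str
    service_elements: frozenset


@dataclass
class Device:
    name: str
    supported: dict  # maps each SOPClass to the set of Roles the device supports


def can_exchange(sender, receiver, sop):
    """True if sender can act as user and receiver as provider of the SOP class."""
    return (Role.SCU in sender.supported.get(sop, set())
            and Role.SCP in receiver.supported.get(sop, set()))


ct_storage = SOPClass("CT image", frozenset({"C-STORE"}))
scanner = Device("CT scanner", {ct_storage: {Role.SCU}})
server = Device("image server", {ct_storage: {Role.SCP}})

print(can_exchange(scanner, server, ct_storage))  # True
print(can_exchange(server, scanner, ct_storage))  # False
```

Stating conformance per SOP class and role is what lets two devices from different vendors determine in advance whether they can exchange a given kind of image.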

ATM delivers performance and scalability

When network developers at the Duke University Medical Center's Department of Radiology (Durham, NC) began designing their network, they faced many network topology options and trade-offs. Collapsed backbone architectures that rely on a multiprotocol router to link Ethernet workgroups have been used to build imaging environments and offer excellent protocol control with little complexity. But this approach provides limited bandwidth for high-volume, time-sensitive data between workgroups. While router-based networks have delivered images to one specific workstation in a department, they constrained radiologists to work in predetermined locations. This conflicted with one of the primary goals at Duke University: to deliver images rapidly to any node in the radiology department.

Frame-switched 10/100-Mbit/s Ethernet networks offer significantly more bandwidth. But as the number of users grows, the network quickly runs out of bandwidth for nonimaging applications. FDDI presented a better option. A single FDDI network connecting FDDI-attached servers and Ethernet workgroups via routers offered both protocol control and firewall capabilities. The network could easily scale up with the addition of more routers and workgroups. Unfortunately, transfers between routers and across shared-access FDDI LANs limited application performance.

Duke University determined that a network for the transport of radiological images must not only supply high throughput but also carry video and voice and extend seamlessly to a wide-area network (WAN). Asynchronous transfer mode (ATM) clearly offered the best solution (see figure). While the technology is relatively new and standards are evolving, it offers the 155-Mbit/s backbone needed to transmit images in real time. At the same time it promises scalability, either through a mesh of switches to provide more links to servers or workstations, or by integrating 622-Mbit/s ATM switches. With virtual LANs, Duke could create logical workgroups to simplify management.

The relative response time of different network topologies is heavily dependent on the application. In medical-image networking applications, asynchronous-transfer-mode-based networks prove the clear winner for both bandwidth and number of users supported.