Explore the Fundamentals of Machine Vision: Part II

Numerous vendors offer image-processing software packages for PCs and smart cameras, many of which perform similar, if not identical, functions. Many commercial packages also provide graphical integrated development environments (IDEs) that let system integrators customize a vision algorithm by dragging and dropping canned functions from a library and interconnecting them.

Many of these commercial packages require a royalty to be paid for every vision system deployed. This cost can be eliminated by using open-source imaging code such as OpenCV, or by programming the application directly in C, C++, or a .NET language such as C#.

However, because OpenCV is open source, it is not backed by a single manufacturer, and no company guarantees support for it; help comes mainly from its user community. Users of commercial software, on the other hand, are guaranteed that their image-processing toolbox will be supported by its developer.

Before selecting the software, system builders should carefully evaluate their in-house capabilities. Although a royalty for a single implementation of a commercial PC-based machine-vision software package can cost between $800 and $2500, developers might easily spend the same amount in time and engineering resources writing a software program from scratch in languages such as C.

Machine builders should also be wary of believing that one development environment will be suitable for every machine-vision system. Technical and economic pressures dictate the need to take different approaches to software development according to the requirements of each application.

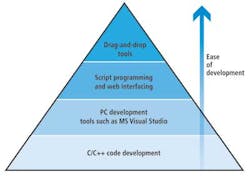

Once the algorithms required to perform a vision task have been determined (see Fig. 1), the choice of programming environment will depend on the number of systems to be produced, the cost of each system, and the skills of the software developer. For example, the more complex and lengthy task of writing image-processing code in a high-level language such as C or C++ may be the better solution for engineers building systems in larger numbers, because the development effort is paid for once, whereas a per-system royalty recurs with every unit deployed (see Fig. 2).
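The volume argument in the paragraph above can be made concrete with a back-of-the-envelope calculation. The Python sketch below is an illustration, not a costing method from the article: it assumes a purely hypothetical one-time in-house development cost, ignores maintenance, hardware, and licensing terms, and simply finds how many deployed systems it takes for per-system royalties to match that cost. The function name is illustrative.

```python
import math

# Illustrative sketch: royalty vs. in-house development break-even point.


def break_even_units(in_house_dev_cost: float, royalty_per_system: float) -> int:
    """Smallest number of deployed systems at which cumulative per-system
    royalties reach or exceed a one-time in-house development cost.

    Simplifying assumptions: the in-house code has no per-unit cost, and the
    commercial package has no cost other than its per-system royalty.
    """
    if royalty_per_system <= 0:
        raise ValueError("royalty_per_system must be positive")
    return math.ceil(in_house_dev_cost / royalty_per_system)


# Hypothetical in-house development cost of $40,000, checked against the
# low and high ends of the $800-$2500 royalty range quoted in the article.
for royalty in (800, 2500):
    print(f"royalty ${royalty}: break-even at {break_even_units(40_000, royalty)} systems")
```

With these assumed figures, in-house development pays off after 50 systems at an $800 royalty but after only 16 systems at $2500. A real decision would also weigh support, maintenance, and the developer's available time.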

Algorithm classes

Image-processing algorithms can be categorized into different classes that are applied to perform a variety of tasks.

Preprocessing the image data makes it easier to extract features from the image. Image thresholding, one of the simplest methods of image segmentation, generates a binary image from a grayscale image, which allows objects to be separated from the background.
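Global thresholding fits in a few lines. The Python sketch below makes three assumptions: the 8-bit grayscale image is stored as a list of rows, bright objects sit on a dark background, and the threshold is fixed and chosen by hand. In practice the threshold is often computed automatically, for example with Otsu's method. The function name and the value 128 are illustrative.

```python
# Illustrative sketch: fixed-threshold binary segmentation.


def threshold(image: list[list[int]], t: int) -> list[list[int]]:
    """Binary segmentation: 1 where the gray level is at least t, otherwise 0."""
    return [[1 if px >= t else 0 for px in row] for row in image]


# Small 8-bit grayscale image: bright object pixels on a dark background.
gray = [
    [12, 15, 200, 210],
    [10, 180, 220, 14],
    [11, 13, 16, 190],
]
binary = threshold(gray, 128)  # 128 is an arbitrary, hand-picked threshold
for row in binary:
    print(row)
```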

Other operators, such as image filters, can smooth an image to reduce noise or sharpen it to emphasize edges, whereas histogram equalization can increase image contrast. Preprocessing may also involve image segmentation, which locates objects, or the boundaries of objects, whose pixels share similar properties such as color, intensity, or texture.
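Histogram equalization can be written compactly. Each gray level is remapped through the image's cumulative histogram so that the output levels spread across the full range. The Python sketch below assumes an 8-bit grayscale image stored as a list of rows. It performs plain global equalization rather than any adaptive variant, and the function name is illustrative.

```python
# Illustrative sketch: global histogram equalization for an 8-bit grayscale image.


def equalize_histogram(image, levels=256):
    """Remap gray levels through the cumulative histogram to stretch contrast."""
    hist = [0] * levels
    for row in image:
        for px in row:
            hist[px] += 1
    # Cumulative distribution function (CDF) of the gray levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)  # CDF value at the darkest level present
    if total == cdf_min:
        return [row[:] for row in image]  # single gray level: nothing to stretch
    # Lookup table mapping each input level to its equalized output level.
    lut = [
        round((c - cdf_min) / (total - cdf_min) * (levels - 1)) if c > 0 else 0
        for c in cdf
    ]
    return [[lut[px] for px in row] for row in image]


low_contrast = [
    [100, 101, 102],
    [101, 102, 103],
    [102, 103, 103],
]
for row in equalize_histogram(low_contrast):
    print(row)
```

The four input levels 100 to 103, only three gray levels apart, are mapped to 0, 64, 159, and 255.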

More sophisticated algorithms extract features, detect the edges or corners of objects, and measure objects in the image. Connectivity tools, such as blob-analysis algorithms, group connected pixels into regions that correspond to discrete objects and measure the parameters of those regions, such as their area and position.
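Blob analysis is essentially connected-component labeling followed by measurement. The minimal Python sketch below assumes a binary image, such as the output of thresholding, stored as a list of rows. It uses 4-connectivity (many tools also offer 8-connectivity) and reports each blob's area, bounding box, and centroid. The function name and output format are illustrative.

```python
from collections import deque

# Illustrative sketch: 4-connected blob labeling and measurement.


def blob_analysis(binary):
    """Return a list of blobs, each with area, bounding box, and centroid."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r0 in range(rows):
        for c0 in range(cols):
            if binary[r0][c0] != 1 or seen[r0][c0]:
                continue
            # Breadth-first flood fill from an unvisited foreground pixel.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and binary[nr][nc] == 1 and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            rs = [p[0] for p in pixels]
            cs = [p[1] for p in pixels]
            blobs.append({
                "area": len(pixels),
                "bbox": (min(rs), min(cs), max(rs), max(cs)),  # (top, left, bottom, right)
                "centroid": (sum(rs) / len(pixels), sum(cs) / len(pixels)),  # (row, col)
            })
    return blobs


binary = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 1],
]
for blob in blob_analysis(binary):
    print(blob)
```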

Correlation and geometric search are two other powerful search algorithms. They take a feature found in one image and search a new image for that feature. They report the feature's location and, in some cases, its size or scale, as well as how it has been skewed or translated in the new image.
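Correlation search can be illustrated with normalized cross-correlation (NCC). NCC scores how well a template matches each image location and is insensitive to uniform changes in brightness and contrast. The Python sketch below is an exhaustive, translation-only search. It does not recover scale, rotation, or skew; geometric search tools handle those with edge-based models that are not shown here. Function names are illustrative.

```python
import math

# Illustrative sketch: exhaustive normalized cross-correlation (translation only).


def ncc(patch, template):
    """Normalized cross-correlation of two equally sized gray-level arrays, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b))
    return num / den if den else 0.0  # flat patches carry no pattern to correlate


def find_template(image, template):
    """Slide the template over every position; return (score, row, col) of the best match."""
    th, tw = len(template), len(template[0])
    best = (-2.0, -1, -1)
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[0]:
                best = (score, r, c)
    return best


template = [[0, 255], [255, 0]]
image = [
    [10, 10, 10, 10],
    [10, 10, 0, 255],
    [10, 10, 255, 0],
    [10, 10, 10, 10],
]
print(find_template(image, template))  # (1.0, 1, 2)
```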

Finally, some sets of algorithms perform classification and interpretation of images. In their simplest form, these algorithms perform template matching; more complex classifiers may use techniques such as neural networks and support vector machines (SVMs).
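In its simplest form, template-matching classification assigns an image to whichever stored reference it most resembles. The Python sketch below uses the mean absolute gray-level difference as the similarity measure and rejects images that match no template closely enough. The labels, templates, and rejection limit are hypothetical, and the images are assumed to be aligned and the same size as the templates.

```python
# Illustrative sketch: nearest-template classification; labels and limits are hypothetical.


def classify(image, templates, max_mean_diff=20.0):
    """Return the label of the closest stored template, or None if none is close enough.

    Closeness is the mean absolute gray-level difference between the image and
    each template; all arrays are assumed aligned and of identical size.
    """
    flat = [v for row in image for v in row]
    best_label, best_diff = None, float("inf")
    for label, tmpl in templates.items():
        ref = [v for row in tmpl for v in row]
        diff = sum(abs(x - y) for x, y in zip(flat, ref)) / len(flat)
        if diff < best_diff:
            best_label, best_diff = label, diff
    return best_label if best_diff <= max_mean_diff else None


templates = {
    "bolt": [[0, 255, 0], [0, 255, 0], [0, 255, 0]],
    "washer": [[255, 255, 255], [255, 0, 255], [255, 255, 255]],
}
sample = [[10, 250, 5], [0, 240, 0], [5, 255, 10]]
unknown = [[128] * 3 for _ in range(3)]
print(classify(sample, templates))   # bolt
print(classify(unknown, templates))  # None
```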

Applying the algorithms

In many vision systems, it is important to determine whether a part, or a specific feature of a part, is present. Properties such as size, shape, or color may be used to identify the part. Contrast analysis, blob analysis, template matching, or geometric search tools can identify the part in the image.
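A contrast-based presence check can be as simple as comparing the average brightness inside a region of interest (ROI) with a limit. The Python sketch below assumes a bright part against a dark fixture, a fixed ROI, and a hand-picked limit of 100 gray levels. All names and values are hypothetical.

```python
# Illustrative sketch: contrast-based presence check; ROI and limit are hypothetical.


def part_present(image, roi, min_mean=100.0):
    """Return True when the mean gray level inside the ROI reaches min_mean.

    roi is (top, left, bottom, right) with inclusive bounds.
    """
    top, left, bottom, right = roi
    values = [image[r][c] for r in range(top, bottom + 1) for c in range(left, right + 1)]
    return sum(values) / len(values) >= min_mean


empty_nest = [[20] * 6 for _ in range(4)]
loaded_nest = [row[:] for row in empty_nest]
for r in range(1, 3):
    for c in range(2, 5):
        loaded_nest[r][c] = 210  # bright part occupying the inspection window

roi = (1, 2, 2, 4)  # hypothetical inspection window
print(part_present(empty_nest, roi), part_present(loaded_nest, roi))  # False True
```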

To differentiate one component from another, it may be possible to use a simple function such as an edge detection operator. If its exact position needs to be determined, then a geometric search or blob analysis could be performed.
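One simple edge-detection approach to telling components apart is a caliper-style measurement. Gray levels are sampled along a line across the part, the steps where brightness changes sharply are located, and the distance between them is measured. The Python sketch below assumes a bright part on a dark background and a hypothetical minimum step of 50 gray levels. Comparing the measured width with each component's nominal width would then distinguish one component from another. Names are illustrative.

```python
# Illustrative sketch: caliper-style width measurement; names and thresholds are assumptions.


def edge_positions(profile, min_step=50):
    """Find steps of at least min_step between neighbouring pixels of a 1-D profile.

    Returns (i, polarity) pairs, where the edge lies between pixels i and i + 1;
    polarity is +1 for dark-to-light and -1 for light-to-dark.
    """
    edges = []
    for i in range(len(profile) - 1):
        step = profile[i + 1] - profile[i]
        if abs(step) >= min_step:
            edges.append((i, 1 if step > 0 else -1))
    return edges


def measure_width(profile, min_step=50):
    """Distance in pixels from the first rising edge to the last falling edge, or None."""
    edges = edge_positions(profile, min_step)
    rising = [i for i, p in edges if p > 0]
    falling = [i for i, p in edges if p < 0]
    if not rising or not falling or falling[-1] < rising[0]:
        return None  # no bright part found along this scan line
    return falling[-1] - rising[0]


# Gray levels sampled along a line across a bright part on a dark background.
scan = [10, 10, 10, 200, 200, 200, 200, 10, 10]
print(edge_positions(scan))  # [(2, 1), (6, -1)]
print(measure_width(scan))   # 4
```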

To detect defects on a part or on a web at high speed, contrast-analysis or template image-matching operators can be employed. If the defect must be both detected and classified, blob analysis or edge analysis can measure the defect's parameters, which can then be compared with known good parameters.
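One common form of template-based defect detection is golden-template comparison. An aligned image of the part under test is compared with an image of a known good part, and pixels that differ by more than a tolerance are flagged. The Python sketch below assumes the two images are already registered and identically lit. The tolerance and defect-pixel limit are hypothetical, and the names are illustrative.

```python
# Illustrative sketch: golden-template defect check; tolerance and limits are hypothetical.


def compare_to_golden(image, golden, tolerance=30, max_defect_pixels=0):
    """Compare two aligned, equally sized gray-level images.

    Returns (is_good, mask), where mask marks pixels whose gray level differs
    from the known-good image by more than tolerance.
    """
    mask = [
        [1 if abs(p - g) > tolerance else 0 for p, g in zip(row, good_row)]
        for row, good_row in zip(image, golden)
    ]
    defect_pixels = sum(sum(row) for row in mask)
    return defect_pixels <= max_defect_pixels, mask


golden = [[200] * 4 for _ in range(3)]
sample = [row[:] for row in golden]
sample[1][2] = 40  # simulated dark scratch
ok, mask = compare_to_golden(sample, golden)
print(ok)    # False
print(mask)  # [[0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0]]
```

The resulting mask could then be passed to a blob-analysis step so that each defect can be measured and classified.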

In some image-processing applications, sub-pixel accuracy can be obtained by measuring the position of a line, point, or edge in the image more precisely than the nominal pixel resolution of that image allows. One way to achieve this is to compare the gray level of a pixel on the edge of an object with the gray levels of the pixels on either side of it.
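The article does not name a specific sub-pixel method. One widely used approach that compares a pixel with its neighbours is parabolic interpolation. The Python sketch below computes gray-level differences along a 1-D profile and finds the strongest one. It then fits a parabola through that difference and its two neighbours; the parabola's vertex gives the edge position to a fraction of a pixel. Names are illustrative, and the profile is assumed to contain a single dominant edge.

```python
# Illustrative sketch: parabolic sub-pixel edge location along a 1-D gray-level profile.


def subpixel_edge(profile):
    """Return the position of the strongest edge, in pixels, with sub-pixel precision.

    Pixel i is centred at position i, so the difference between pixels i and
    i + 1 is located at i + 0.5. A parabola is fitted through the largest
    difference and its two neighbours, and its vertex gives the refined position.
    """
    if len(profile) < 2:
        raise ValueError("profile needs at least two pixels")
    g = [abs(profile[i + 1] - profile[i]) for i in range(len(profile) - 1)]
    k = max(range(len(g)), key=lambda i: g[i])
    if k == 0 or k == len(g) - 1:
        return k + 0.5  # peak at the end of the profile: no neighbour on one side
    left, centre, right = g[k - 1], g[k], g[k + 1]
    denom = left - 2 * centre + right
    offset = 0.5 * (left - right) / denom if denom else 0.0
    return k + 0.5 + offset


print(subpixel_edge([10, 10, 60, 160, 210, 210]))  # 2.5 (symmetric ramp)
print(subpixel_edge([10, 10, 40, 150, 210, 210]))  # about 2.615, shifted toward the stronger right neighbour
```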

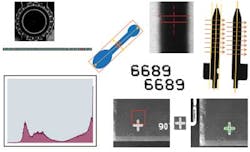

In some cases, a technique called polar extraction makes the image of a circular object easier to process by unwrapping it into a rectangular strip. In the middle of Fig. 3, blob analysis has been performed on the blue object to reveal details about its parameters. Such an operator can measure the object's size, bounding box, and centroid.
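Polar extraction can be sketched as resampling a ring of the image along circles of increasing radius. The Python sketch below assumes that the circle's centre is already known, for example from a blob centroid. It uses nearest-neighbour sampling for brevity, where a production tool would likely interpolate. It is an illustration rather than any vendor's implementation, and the names are illustrative.

```python
import math

# Illustrative sketch: nearest-neighbour polar unwrapping of an annulus.


def polar_unwrap(image, centre, r_min, r_max, n_angles=360):
    """Resample the annulus r_min..r_max around centre (row, col) into a rectangle.

    Output row = radius step, output column = angle step. Angles are measured
    from the +column axis; because row indices increase downward, increasing
    angle sweeps clockwise on screen. Samples outside the image are set to 0.
    """
    cy, cx = centre
    out = []
    for r in range(r_min, r_max + 1):
        row = []
        for k in range(n_angles):
            theta = 2 * math.pi * k / n_angles
            y = int(round(cy + r * math.sin(theta)))
            x = int(round(cx + r * math.cos(theta)))
            inside = 0 <= y < len(image) and 0 <= x < len(image[0])
            row.append(image[y][x] if inside else 0)
        out.append(row)
    return out


# Test image whose gray level encodes position: value = 10 * row + col.
image = [[10 * r + c for c in range(7)] for r in range(7)]
print(polar_unwrap(image, (3, 3), 2, 2, n_angles=4))  # [[35, 53, 31, 13]]
```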

In the upper-right portion of Fig. 3, an edge-detection operation has been performed on an object. Its edges are located by analyzing the grayscale values in the image, and from them the object's position, pose, and angle can be determined. The histogram at the lower left shows the distribution of grayscale pixel values in a specific image; these values might be analyzed further to enhance certain features in the image from which they were derived.
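The article does not say which edge detector is used in Fig. 3. The Sobel operator is one widely used way to locate edges from grayscale values. It estimates the horizontal and vertical intensity gradients at each pixel, and their magnitude and direction indicate edge strength and orientation. The Python sketch below leaves border pixels at zero for simplicity. The reported angle is the gradient direction, which is perpendicular to the edge itself. Names are illustrative.

```python
import math

# Illustrative sketch: Sobel gradient magnitude and direction.

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel(image):
    """Return (magnitude, angle_degrees) maps; border pixels are left at 0."""
    rows, cols = len(image), len(image[0])
    mag = [[0.0] * cols for _ in range(rows)]
    ang = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = sum(SOBEL_X[i][j] * image[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * image[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            mag[r][c] = math.hypot(gx, gy)
            ang[r][c] = math.degrees(math.atan2(gy, gx))  # gradient direction, rows increase downward
    return mag, ang


# A vertical dark-to-light step edge.
step = [[0, 0, 100, 100] for _ in range(3)]
mag, ang = sobel(step)
print(mag[1])  # [0.0, 400.0, 400.0, 0.0]
```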

There are two sets of optical characters in the middle of the image that might need to be identified, the upper set being slightly degraded. In this case, optical character verification (OCV) could determine how degraded the characters are. Alternatively, optical character recognition (OCR) software could be employed to read the degraded characters.

At the lower-right of Fig. 3, a search operation takes a target from one image—stored as a template or a geometric model—and subsequently finds the same target in another image. This is an accurate tool used to ensure specific features are present.

Editor's note: Refer to Part I of this series on fundamentals (http://bit.ly/Y3ZnRH) for an overview of several components (image sensors, cameras, illumination) used to build machine-vision and image-processing systems.

Plan, specify, and implement

Before embarking on the development of any machine-vision inspection system, whether internally or by outsourcing its development, the process to be automated should be evaluated carefully. This planning phase sometimes reveals that machine vision is not required, or is not viable.

If machine vision is viable, the specific nature of the inspection process should be thoroughly documented. The physical geometry of the parts to be inspected and their attributes, such as color, surface finish, and reflective properties, must be characterized. At the outset, the features that distinguish a good part from a bad one must be defined. Only by understanding the properties of the part or assembly can a system integrator decide which image-processing solution is most appropriate.

The overall production process should also be analyzed to enable the system builder to gain an understanding of how a part is manufactured and what variations in the process might occur. An analysis may even reveal that the vision inspection could take place at an earlier stage in the production process.

After the planning stage is complete, the machine-vision components, including cameras, software, lighting, optics, and lenses, must be specified. This stage may reveal that the achievable resolution, lighting, or optics are inadequate to produce the required results in the production environment. It is far better to discover this while the system specification is being written than when the system is being implemented.
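Resolution problems can often be caught with simple arithmetic at the specification stage. Dividing the field of view by the number of sensor pixels gives the distance each pixel covers. The second step is to check how many pixels span the smallest feature that must be detected. The Python sketch below does this for one axis. The requirement of at least three pixels across the smallest feature is an assumed design margin, not a figure from the article. The field of view, feature size, and sensor widths are hypothetical.

```python
# Illustrative sketch: single-axis resolution check; the 3-pixel rule is an assumed design margin.


def check_resolution(fov_mm, sensor_pixels, smallest_feature_mm, pixels_per_feature=3):
    """Return (mm_per_pixel, ok) for one image axis.

    ok is True when the smallest feature spans at least pixels_per_feature pixels.
    """
    mm_per_pixel = fov_mm / sensor_pixels
    ok = smallest_feature_mm / mm_per_pixel >= pixels_per_feature
    return mm_per_pixel, ok


# Hypothetical case: 100 mm field of view, 0.2 mm smallest feature.
for pixels in (1280, 2448):
    mm_per_px, ok = check_resolution(100.0, pixels, 0.2)
    print(f"{pixels} px: {mm_per_px:.4f} mm/pixel, adequate={ok}")
```

Under these assumptions, a 1280-pixel sensor puts only about 2.6 pixels across the 0.2-mm feature and fails the check. A 2448-pixel sensor puts nearly 4.9 pixels across it and passes.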

At the system specification stage, a report should be generated to detail the hardware and software used, the inspection functions that the system is required to perform, the tolerances that it is required to meet, and the system's throughput. How the system will interface to any other automation systems that may be on the plant floor should also be documented.

Before deciding to develop a system in-house or work with an external system supplier, several key business issues should also be considered. From the beginning, system integrators need to ascertain whether they have the technical skills in-house that the project requires.

Even if such skills are available, management should determine whether the personnel involved have sufficient time and resources to commit to the project, and how any unexpected challenges will be met. Equally important is whether the system can be maintained, supported, serviced, and upgraded.
