Image processing software: Software packages offer developers numerous options for machine vision design

Systems integrators can take a number of different approaches when configuring their machine vision systems.

When building machine vision systems, developers can choose from a number of commercially available software packages from well-known companies. When choosing such software, however, it is important to understand the functionality each package provides, the hardware it supports and how easily it can be configured to solve a particular machine vision task.

In the past, the choice of software was limited, and many companies merely offered callable libraries that performed relatively simple image processing operations. These included point operations such as image subtraction, neighborhood operations such as image filtering and global operations such as Fourier analysis.
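The difference between point and neighborhood operations can be shown in a minimal Python sketch. It assumes 8-bit grayscale images stored as lists of rows and is not taken from any vendor's library. Image subtraction is a point operation because each output pixel depends only on the corresponding input pixels. A 3 x 3 mean filter is a neighborhood operation because each output pixel depends on the pixels around it.

```python
# Images are lists of rows of 8-bit gray levels (0-255).
Image = list[list[int]]


def subtract(a: Image, b: Image) -> Image:
    """Point operation: each output pixel depends only on the same pixel in a and b.
    Negative differences are clamped to 0."""
    return [[max(pa - pb, 0) for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def mean3x3(img: Image) -> Image:
    """Neighborhood operation: each output pixel is the integer mean of its 3x3
    neighborhood. At the borders, only the neighbors that exist are averaged."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(y - 1, 0), min(y + 2, h))
                    for i in range(max(x - 1, 0), min(x + 2, w))]
            out[y][x] = sum(vals) // len(vals)
    return out


reference = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
live = [[10, 10, 10], [10, 100, 10], [10, 10, 10]]
diff = subtract(live, reference)  # only the changed center pixel survives: 90
print(mean3x3(diff)[1][1])        # 90 // 9 = 10
```

A global operation such as a Fourier transform differs from both, because every output value depends on every input pixel.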

These libraries were useful, but developers had to understand each function and how it could contribute to solving a machine vision task such as part measurement. Developers also often had to build software frameworks around these libraries, which made program development laborious and time-consuming.

Rapid development

While such libraries are still available from a number of open-source projects (see sidebar "Open-source code provides alternative options"), vision software manufacturers realized that systems integrators needed to develop applications for specific machine vision problems more rapidly, without having to understand the underlying complexity of image processing code. For this reason, many vendors now include tools in their software packages that provide higher-level functionality, such as image measurement, feature extraction, color analysis, 2D barcode recognition and image compression, all within an interactive environment.

Examples of these high-level tools include Matrox Imaging Library (MIL) from Matrox Imaging (Dorval, QC, Canada; www.matrox.com), Open eVision from Euresys (Angleur, Belgium; www.euresys.com), HALCON from MVTec Software GmbH (Munich, Germany; www.mvtec.com), VisionPro from Cognex (Natick, MA, USA; www.cognex.com), Vision Builder from National Instruments (NI; Austin, TX, USA; www.ni.com), Common Vision Blox (CVB) from Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.com) and NeuroCheck from NeuroCheck (Stuttgart, Germany; www.neurocheck.com). Such tools allow many commonly used machine vision functions to be configured without the need for extensive programming. In this way, developers are abstracted from the task of low-level code development, allowing them to build machine vision applications more easily.

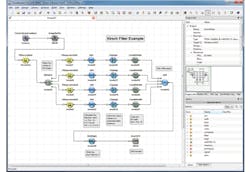

To further simplify this task, many software packages feature graphical interfaces that allow high-level image processing features to be combined within an integrated development environment (IDE). Matrox's Design Assistant, for example, is an IDE where vision applications are created by constructing a flowchart instead of writing traditional program code. In addition to building a flowchart, the IDE enables users to directly design a graphical operator interface for the application. Similarly, Vision Builder AI from NI allows developers to configure, benchmark, and deploy vision systems using functions such as pattern matching, barcode reading and image classification within an interactive menu-driven development environment (Figure 1).

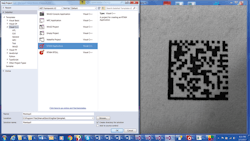

In many cases, vendors also use their own software to build end-user applications for specific tasks such as optical character recognition (OCR) or barcode reading. For example, Microscan (Renton, WA, USA; www.microscan.com) has used its Visionscape software to read and verify barcode labels on large panels made up of multiple PCBs. This ensures that each individual circuit board on a panel can be tracked through the entire production process (see "Modular vision system eases printed circuit board traceability," Vision Systems Design, April 2014, http://bit.ly/VSD-1404-1).

Some companies have even extended this graphical flowchart interface concept to allow developers to access the underlying power of field programmable gate arrays (FPGAs). VisualApplets from Silicon Software (Mannheim, Germany; https://silicon.software), for example, is a software programming environment that allows developers to program FPGAs using data flow models. The company's latest version provides functions for segmentation, classification and compression. It also includes a fast Fourier transform (FFT) operator that allows complex band-pass filters to be implemented more efficiently (Figure 2).
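The principle behind FFT-based band-pass filtering can be sketched in a few lines of Python. This is an illustrative one-dimensional example, not the VisualApplets operator or its API. The signal is transformed with a radix-2 FFT, frequency bins outside a chosen band are zeroed, and the result is transformed back. The band limits `lo` and `hi` are hypothetical parameters. A 2D image filter applies the same idea along rows and columns.

```python
import cmath
import math


def fft(x: list[complex]) -> list[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def ifft(spectrum: list[complex]) -> list[complex]:
    """Inverse FFT using the identity ifft(X) = conj(fft(conj(X))) / n."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in spectrum])]


def band_pass(signal: list[float], lo: int, hi: int) -> list[float]:
    """Keep only frequency bins whose frequency lies in [lo, hi] (and their
    negative-frequency mirrors); zero everything else."""
    n = len(signal)
    if n == 0 or n & (n - 1):
        raise ValueError("signal length must be a power of two")
    spectrum = fft([complex(s) for s in signal])
    for k in range(n):
        freq = min(k, n - k)  # bin n - k holds frequency -k
        if not lo <= freq <= hi:
            spectrum[k] = 0j
    return [v.real for v in ifft(spectrum)]


n = 64
mixed = [math.sin(2 * math.pi * 4 * t / n) + math.sin(2 * math.pi * 20 * t / n)
         for t in range(n)]
filtered = band_pass(mixed, 3, 6)  # keeps the 4-cycle component, removes the 20-cycle one
```

A direct DFT costs O(n^2) operations, while an FFT costs O(n log n). This is the general reason why a filter with a complex frequency response can be cheaper to apply in the frequency domain than as a long spatial convolution kernel.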

Like VisualApplets, NI's LabVIEW FPGA Module allows FPGA-efficient algorithms such as image filtering, Bayer decoding and color space conversion to be implemented without low-level hardware description languages such as VHDL. Many compute-intensive image processing functions can then be off-loaded to the FPGA, which speeds up machine vision applications.
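Bayer decoding and color space conversion suit FPGAs well because each output pixel is computed from a small, fixed set of neighboring inputs. The Python sketch below only illustrates the arithmetic. It assumes an RGGB sensor pattern and a simple half-resolution demosaic in which each 2 x 2 cell becomes one RGB pixel. Production demosaicing usually interpolates to full resolution instead. The grayscale conversion uses the ITU-R BT.601 luma weights.

```python
RGB = tuple[int, int, int]


def demosaic_rggb_2x2(raw: list[list[int]]) -> list[list[RGB]]:
    """Half-resolution demosaic of an RGGB Bayer mosaic (assumed pattern).
    Each 2x2 cell
        R G
        G B
    becomes one RGB pixel; the two green samples are averaged."""
    out = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[0]) - 1, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) // 2
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        out.append(row)
    return out


def to_gray(pixel: RGB) -> int:
    """Color space conversion from RGB to luma with ITU-R BT.601 weights."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


rgb = demosaic_rggb_2x2([[200, 100], [120, 50]])  # [[(200, 110, 50)]]
gray = to_gray(rgb[0][0])                          # 130
```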

For developers who want to build machine vision systems from a mix of open-source and commercially available software, development environments are now available that allow image processing algorithms from different companies to be combined graphically. These environments integrate open-source algorithms and commercial packages in a single environment, so that machine vision software can be built around the most effective algorithms for a given task.

VisionServer 7.2, a machine vision framework from ControlVision (Auckland, New Zealand; www.controlvision.co.nz), for example, allows open source image-processing libraries and commercially-available packages such as VisionPro Software from Cognex to be used together in a graphical IDE. Also supporting VisionPro, the VS-100P framework from CG Controls (Dublin, Ireland; www.cgcontrols.ie) uses Microsoft's .NET 4 framework and Windows Presentation Foundation (WPF) to enable developers to deploy single or multi-camera-based vision systems.
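One way such frameworks can mix algorithms from different sources is to wrap each algorithm behind a common step interface. The Python sketch below is hypothetical and does not reflect the internals of VisionServer or VS-100P. The `Pipeline` class and the two stand-in steps are illustrative names. One step plays the role of an open-source routine and the other plays the role of a commercial one.

```python
from typing import Any, Callable

# Hypothetical common interface: every step receives and returns a context dict
# holding the image plus any results produced by earlier steps.
Context = dict[str, Any]
Step = Callable[[Context], Context]


class Pipeline:
    """Runs steps in order, regardless of which library each step wraps."""

    def __init__(self) -> None:
        self.steps: list[tuple[str, Step]] = []

    def add(self, name: str, step: Step) -> "Pipeline":
        self.steps.append((name, step))
        return self

    def run(self, context: Context) -> Context:
        for name, step in self.steps:
            context = step(context)
            context.setdefault("trace", []).append(name)  # record execution order
        return context


def open_source_threshold(ctx: Context) -> Context:
    # Stand-in for a call into an open-source library.
    ctx["binary"] = [[1 if p > ctx["threshold"] else 0 for p in row] for row in ctx["image"]]
    return ctx


def vendor_area(ctx: Context) -> Context:
    # Stand-in for a call into a commercial package: counts foreground pixels.
    ctx["area"] = sum(sum(row) for row in ctx["binary"])
    return ctx


result = (Pipeline()
          .add("threshold", open_source_threshold)
          .add("area", vendor_area)
          .run({"image": [[0, 200], [150, 10]], "threshold": 100}))
print(result["area"])  # 2
```

Because each step only depends on the shared context, a step can be replaced by an equivalent routine from another library without changing the rest of the pipeline.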

Real-time options

Most commercially available machine vision software runs under operating systems such as Windows and Linux. However, some machine vision systems must perform tasks within specific time periods, and this requirement has led vendors to support real-time operating systems (RTOS). An RTOS allows developers to determine the time needed to capture and process images and perform I/O within a system, while still using Windows to develop graphical user interfaces (GUIs).
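The timing question that an RTOS helps answer can be expressed as simple arithmetic. At a given line rate, the worst-case capture, processing and I/O times must fit within the time available per part. The Python sketch below assumes that the stages run sequentially (no pipelining) and that their worst-case times have already been measured. The stage names and figures are hypothetical. The check is only meaningful if the operating system bounds those worst-case times, which is what a deterministic RTOS is intended to provide.

```python
from dataclasses import dataclass


@dataclass
class StageTiming:
    name: str
    worst_case_ms: float  # measured worst case, not the average


def cycle_budget_ms(parts_per_minute: float) -> float:
    """Time available per part at a given line rate."""
    return 60_000.0 / parts_per_minute


def check_budget(stages: list[StageTiming], parts_per_minute: float) -> tuple[bool, float]:
    """Return (fits, slack_ms), assuming the stages run one after another."""
    budget = cycle_budget_ms(parts_per_minute)
    total = sum(s.worst_case_ms for s in stages)
    return total <= budget, budget - total


stages = [StageTiming("capture", 8.0), StageTiming("process", 55.0), StageTiming("io", 2.0)]
fits, slack = check_budget(stages, 600)  # 100 ms budget, 65 ms used: fits, 35 ms slack
```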

Today, a number of companies offer RTOS support for machine vision software packages. MIL, for example, can now run under the RTX64 RTOS from IntervalZero (Waltham, MA, USA; www.intervalzero.com). Kingstar (Waltham, MA, USA; www.kingstar.com) has used this capability in its PC-based software for industrial motion control and machine vision applications. Kingstar's software is built on the EtherCAT standard, and its machine vision tasks use MIL running natively in IntervalZero's RTX64 RTOS. In operation, RTX64 runs on its own dedicated CPU cores alongside Windows to provide a deterministic environment. With this architecture, developers partition MIL-based applications between RTX64 and Windows (Figure 3).

Third-party RTOS support is also available for other machine vision software packages. The HALCON machine vision package from MVTec, for example, can run under the RealTime RTOS Suite from Kithara (Berlin, Germany; www.kithara.de). Like other RTOSs, the RealTime RTOS Suite uses a separate scheduler within the RTOS kernel to decide which image processing task should execute at any particular time. As with IntervalZero's RTX64, this kernel operates alongside Windows (see "Real-time operating systems target machine vision applications," Vision Systems Design, June 2015, http://bit.ly/VSD-1506-1).

For its part, Optel Vision (Quebec City, QC, Canada; www.optelvision.com) recently showed how it has developed a pharmaceutical tablet inspection machine using its own proprietary algorithms running under INtime from TenAsys (Beaverton, OR, USA; www.tenasys.com). According to Kim Hartman, VP of Sales and Marketing at TenAsys, INtime takes control of the response-time-critical I/O devices in the system, while allowing Windows to control the I/O that is not real-time critical (see "Packaging Line Vision System Gets Speed Boost," Control Engineering, May 2011; http://bit.ly/VSD-CON-ENG).

High-performance image processing has also become a focus of the embedded vision community. Dr. Ricardo Ribalda, Lead Firmware Engineer at Qtechnology (Valby, Denmark; www.qtec.com), recently showed how his company had created an application for high-speed scanning and validation of paper currency. The application uses processors from AMD (Sunnyvale, CA, USA; www.amd.com) and software tools from Mentor Graphics (Wilsonville, OR, USA; www.mentor.com) (see "Smart camera checks currency for counterfeits," page 15, this issue). A demonstration of the system in operation can be found at http://bit.ly/VSD-QTec.

Image classification

Today, tools for gauging, pattern matching, OCR, color analysis and morphological operations are common. Developers can use these tools to configure many types of machine vision systems that decide whether parts are acceptable or must be rejected. Where an object's features are variable, however, such tools are less useful. In fruit and vegetable sorting applications, for example, whether a particular product is good or bad can depend on a number of different factors.

Deciding whether such products are acceptable therefore requires presenting the system with many images, extracting specific features and classifying them. A number of classifiers can perform this task, including neural nets, support vector machines (SVMs), Gaussian mixture models (GMMs) and k-nearest neighbors (k-NN). MVTec's HALCON software package, for example, gives developers access to all of these classifiers.
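To illustrate one of these classifiers, here is a minimal k-nearest neighbor (k-NN) sketch in Python. It is not HALCON's implementation. The two features (a hypothetical hue measure and blemish-area fraction) and the training values are invented for illustration. The classifier assigns each new sample the majority label among its k closest training samples in feature space.

```python
import math
from collections import Counter

Sample = tuple[tuple[float, ...], str]


def knn_classify(train: list[Sample], query: tuple[float, ...], k: int = 3) -> str:
    """Label query by majority vote among its k nearest training samples
    (Euclidean distance in feature space)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical features: (normalized hue, blemish-area fraction).
train = [((0.20, 0.05), "good"), ((0.22, 0.04), "good"), ((0.18, 0.06), "good"),
         ((0.60, 0.40), "bad"), ((0.65, 0.35), "bad"), ((0.58, 0.45), "bad")]
print(knn_classify(train, (0.21, 0.05)))  # good
```

In practice, features are usually scaled to comparable ranges before distances are computed. Otherwise, a feature with a large numeric range would dominate the vote.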

Numerous companies have used such machine learning techniques, including deep learning, in commercial products. To classify or separate products based on acceptable or unacceptable defects, ViDi green software from ViDi Systems (Villaz-St-Pierre, Switzerland; www.vidi-systems.com) allows developers to assign and label images into different classes. New, previously unseen images can then be classified. Datalogic (Bologna, Italy; www.datalogic.com) recently demonstrated a bottle-sorting application that uses a k-d tree classifier to identify and sort bottles. Test bottles are first presented to the system, and key points in their images are extracted automatically (see "Image classification software goes on show at Automate," Vision Systems Design, May 2015; http://bit.ly/VSD-1505-1).
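Datalogic's implementation is not described in detail, but the k-d tree technique itself can be sketched. A k-d tree recursively splits stored feature vectors (for example, keypoint descriptors from reference bottles) along one axis at a time. A nearest-neighbor query can then skip whole branches that cannot contain a closer match. The Python sketch below performs an exact nearest-neighbor search, and its labels are hypothetical. For high-dimensional descriptors, libraries typically use approximate variants instead.

```python
import math
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, ...]


@dataclass
class Node:
    point: Point
    label: str
    axis: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def build(items: list[tuple[Point, str]], depth: int = 0) -> Optional[Node]:
    """Build a k-d tree, splitting at the median along axis depth mod k."""
    if not items:
        return None
    axis = depth % len(items[0][0])
    items = sorted(items, key=lambda it: it[0][axis])
    mid = len(items) // 2
    point, label = items[mid]
    return Node(point, label, axis,
                build(items[:mid], depth + 1),
                build(items[mid + 1:], depth + 1))


def nearest(node: Optional[Node], query: Point,
            best: Optional[tuple[float, str]] = None) -> Optional[tuple[float, str]]:
    """Exact nearest-neighbor search; returns (distance, label)."""
    if node is None:
        return best
    d = math.dist(node.point, query)
    if best is None or d < best[0]:
        best = (d, node.label)
    diff = query[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, query, best)
    # The far branch can only hold a closer point if the splitting plane
    # is nearer than the best match found so far.
    if abs(diff) < best[0]:
        best = nearest(far, query, best)
    return best


refs = [((0.1, 0.9), "bottle_A"), ((0.8, 0.2), "bottle_B"), ((0.5, 0.5), "bottle_C")]
tree = build(refs)
print(nearest(tree, (0.75, 0.25))[1])  # bottle_B
```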

Developers using CVB Manto from Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.de) also do not need to select the relevant features in an image before classification. Texture, geometry and color features are extracted from the captured data and presented to an SVM for classification. Similarly, the NeuralVision system from Cyth Systems (San Diego, CA, USA; www.cyth.com) is designed to let machine builders with no previous image processing experience add image classification to their systems (see "Machine learning leverages image classification techniques," Vision Systems Design, February 2015; http://bit.ly/VSD-1502-1).
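The internals of CVB Manto are not described here, so the following is only a generic sketch of a linear SVM in Python, trained with the Pegasos stochastic sub-gradient method. It assumes two classes labeled +1 and -1 and feature vectors made of hypothetical texture, geometry and color measurements. The features are standardized first so that no single measurement dominates. A constant input is appended to act as the bias, and in this simplified version the bias is regularized along with the weights.

```python
import random
import statistics


def standardize(rows):
    """Z-score each feature column; return scaled rows and (mean, stdev) per column
    so that new samples can be scaled the same way."""
    stats = [(statistics.fmean(col), statistics.pstdev(col) or 1.0) for col in zip(*rows)]
    scaled = [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]
    return scaled, stats


def train_linear_svm(xs, ys, lam=0.01, iters=5000, seed=0):
    """Pegasos: stochastic sub-gradient descent on the L2-regularized hinge loss.
    ys must be +1/-1; a constant 1.0 feature is appended to act as the bias."""
    rng = random.Random(seed)
    data = [(list(x) + [1.0], y) for x, y in zip(xs, ys)]
    w = [0.0] * len(data[0][0])
    for t in range(1, iters + 1):
        x, y = rng.choice(data)
        eta = 1.0 / (lam * t)
        margin = y * sum(wi * xi for wi, xi in zip(w, x))
        w = [(1 - eta * lam) * wi for wi in w]  # shrink (regularization)
        if margin < 1:                           # hinge loss is active
            w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical (texture contrast, aspect ratio, mean hue) features.
rows = [(0.20, 1.00, 30), (0.25, 1.10, 32), (0.22, 0.95, 29), (0.18, 1.05, 31),
        (0.70, 1.60, 60), (0.75, 1.50, 62), (0.68, 1.70, 58), (0.72, 1.55, 61)]
labels = [1, 1, 1, 1, -1, -1, -1, -1]
scaled, stats = standardize(rows)
w = train_linear_svm(scaled, labels)
```

New samples must be scaled with the same `stats` before calling `predict`.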

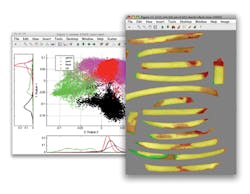

By applying multiple classifiers to the extracted data, developers can determine whether the extracted features discriminate well enough between good and bad examples of the product being analyzed. If they do not, different types of features may need to be extracted. For this reason, some companies offer software packages in which multiple classifiers can be developed and tested. One such toolkit, perClass from PR Sys Design (Delft, The Netherlands; www.perclass.com), offers multiple classifiers. Developers can work interactively with data, choose the best features within the data for image classification, train numerous types of classifiers and optimize their performance (Figure 4).
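One way to test whether a set of features is good enough is to score each candidate feature subset with leave-one-out cross-validation. The Python sketch below uses a 1-nearest-neighbor classifier as a simple stand-in. It does not use perClass or its API, and the sample data are hypothetical. Swapping in a different classifier function would compare classifiers in the same way.

```python
import math
from itertools import combinations


def loo_accuracy(samples, feature_idx):
    """Leave-one-out accuracy of a 1-nearest-neighbor classifier restricted
    to the features listed in feature_idx."""
    def project(v):
        return [v[i] for i in feature_idx]

    correct = 0
    for i, (x, label) in enumerate(samples):
        others = [s for j, s in enumerate(samples) if j != i]
        _, predicted = min(others, key=lambda s: math.dist(project(s[0]), project(x)))
        correct += predicted == label
    return correct / len(samples)


def best_feature_subset(samples, n_features, size):
    """Exhaustively score every subset of the given size and keep the best."""
    return max(combinations(range(n_features), size),
               key=lambda idx: loo_accuracy(samples, idx))


# Hypothetical data: feature 0 separates the classes, feature 1 is noise.
samples = [((0.10, 0.50), "good"), ((0.15, 0.90), "good"), ((0.12, 0.10), "good"),
           ((0.90, 0.45), "bad"), ((0.85, 0.95), "bad"), ((0.88, 0.15), "bad")]
print(loo_accuracy(samples, (0,)), loo_accuracy(samples, (1,)))  # 1.0 0.0
print(best_feature_subset(samples, 2, 1))                        # (0,)
```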

Many deep learning resources are now available on the Web. Two of the most interesting are Tombone's Computer Vision Blog (www.computervisionblog.com), a website dedicated to deep learning, computer vision and AI algorithms, and The Journal of Machine Learning Research (JMLR; www.jmlr.org), a forum for the publication of articles on machine learning.

However, although such learning-based approaches can be used for applications such as handwriting recognition, remote sensing and fruit sorting, their accuracy is inherently limited. Classifiers are therefore less suitable where, for example, parts must be measured with high accuracy, aligned for assembly or processing, or located for precision robotic guidance.

Open-source code provides alternative options

Many developers choose high-level, commercially available software packages for machine vision systems because they are easy to use and come with technical support. More ambitious developers may wish to investigate using open-source code in their projects. Little technical support may be offered, but no licensing or royalty fees are required.

Such open-source software ranges from C/C++ and Java libraries to frameworks, toolkits and end-user software packages. Many of these are listed on the website of RoboRealm (Aurora, CO, USA; www.roborealm.com) at http://bit.ly/VSD-1704-7. Although some of the links are outdated, the site does review many of the available open-source machine vision libraries.

Two of the most popular ways to develop applications with open-source code use the following software:

- AForge.NET (www.aforgenet.com), a C# framework designed for developers of computer vision and artificial intelligence applications.
- The Open Source Computer Vision Library (OpenCV; http://opencv.org), an open-source computer vision and machine learning software library. It offers C/C++, Python and Java interfaces and supports Windows, Linux, Mac OS, iOS and Android.

For those wishing to use OpenCV from C#, Elad Ben-Israel has created a small OpenCV wrapper for the .NET Framework. The code consists of a DLL written in Managed C++ that wraps the OpenCV library in .NET classes, so that they are available from C#, VB.NET or Managed C++. The wrapper can be downloaded at: http://bit.ly/VSD-1704-8. Other .NET wrappers include Emgu CV (www.emgu.com), a cross platform .NET wrapper to OpenCV that allows OpenCV functions to be called from .NET compatible languages such as C#, VB, VC++ and IronPython. The wrapper can be compiled by Visual Studio, Xamarin Studio and Unity and runs under Windows, Linux, Mac OS X and Android operating systems.

To build computer vision applications with OpenCV, developers can also use SimpleCV (http://simplecv.org). This open-source framework provides access to several computer vision libraries, such as OpenCV, without requiring developers to understand bit depth, file formats, color spaces or buffer management. OpenCV automatically integrates Intel's Integrated Performance Primitives (IPP), a library of over 3,000 proprietary, optimized image processing and computer vision functions, so many OpenCV operations are accelerated without additional coding. IPP can be freely downloaded from Intel's developer site at: http://bit.ly/VSD-1704-9.

To date, a number of companies support development with the OpenCV library. These include Willow Garage (Palo Alto, CA, USA; www.willowgarage.com), Kithara (Berlin, Germany; www.kithara.de), National Instruments (Austin, TX, USA; www.ni.com) and ControlVision (Auckland, New Zealand; www.controlvision.co.nz).

Companies mentioned

AMD

Sunnyvale, CA, USA

www.amd.com

CG Controls

Dublin, Ireland

www.cgcontrols.ie

Cognex

Natick, MA, USA

www.cognex.com

ControlVision

Auckland, New Zealand

www.controlvision.co.nz

Cyth Systems

San Diego, CA, USA

www.cyth.com

Datalogic

Bologna, Italy

www.datalogic.com

Euresys

Angleur, Belgium

www.euresys.com

IntervalZero

Waltham, MA, USA

www.intervalzero.com

Kingstar

Waltham, MA, USA

www.kingstar.com

Kithara

Berlin, Germany

www.kithara.de

Matrox

Dorval, QC, Canada

www.matrox.com

Mentor Graphics

Wilsonville, OR, USA

www.mentor.com

Microscan

Renton, WA, USA

www.microscan.com

MVTec Software

Munich, Germany

www.mvtec.com

National Instruments

Austin, TX, USA

www.ni.com

NeuroCheck

Stuttgart, Germany

www.neurocheck.com

Optel Vision

Quebec City, QC, Canada

www.optelvision.com

PR Sys Design

Delft, The Netherlands

www.perclass.com

Qtechnology

Valby, Denmark

www.qtec.com

RoboRealm

Aurora, CO, USA

www.roborealm.com

Silicon Software

Mannheim, Germany

https://silicon.software

Stemmer Imaging

Puchheim, Germany

www.stemmer-imaging.com

TenAsys

Beaverton, OR, USA

www.tenasys.com

ViDi Systems

Villaz-St-Pierre, Switzerland

www.vidi-systems.com

Willow Garage

Palo Alto, CA, USA

www.willowgarage.com

About the Author

Andy Wilson

Founding Editor

Founding editor of Vision Systems Design. Industry authority and author of thousands of technical articles on image processing, machine vision, and computer science.

B.Sc., Warwick University

Tel: 603-891-9115

Fax: 603-891-9297