PATTERN RECOGNITION: Neural networks ease complex pattern-recognition tasks

Pattern-recognition tasks in machine vision are performed by extracting data from images and comparing it with known-good data. To check whether the neck of a container is the correct shape, for example, algorithms may be used to locate the edges of the container, measure the distance between them, and report whether that measurement falls within a known tolerance.
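The edge-and-distance check described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a single scan line across the container neck is available as a list of grayscale intensities; the function names, the step threshold, and the pixel-based tolerance are hypothetical and not taken from any product.

```python
def find_edges(profile, threshold):
    """Return indices where intensity jumps by at least `threshold` from the previous pixel."""
    return [i for i in range(1, len(profile))
            if abs(profile[i] - profile[i - 1]) >= threshold]


def measure_width(profile, threshold):
    """Distance in pixels between the first and last detected edge, or None if fewer than two."""
    edges = find_edges(profile, threshold)
    if len(edges) < 2:
        return None
    return edges[-1] - edges[0]


def within_tolerance(width, nominal, tol):
    """True if a measured width lies within +/- tol of the nominal width."""
    return width is not None and abs(width - nominal) <= tol


# Hypothetical scan line: dark background, bright neck occupying pixels 3 to 7.
scan = [10, 10, 10, 200, 200, 200, 200, 200, 10, 10]
width = measure_width(scan, threshold=50)
print(width, within_tolerance(width, nominal=5, tol=1))  # prints: 5 True
```

A real system would convert pixels to physical units and use subpixel edge location; the sketch keeps only the logic of "find edges, measure, compare with tolerance."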

“While such instruction-based models are extremely useful,” says Tim Carruthers, chief executive officer of Neural ID (Redwood Shores, CA, USA), “in applications where products with very different profiles need to be examined and classified, such approaches can prove complex and time-consuming.”

Such a task was presented to Neural ID by ecoATM (San Diego, CA, USA) in the development of its eCycling Station, a system designed to allow consumers to recycle their cell phones (see “Smart kiosk helps consumers recycle cell phones,” Vision Systems Design, November 2010).

Neural ID’s Concurrent Universal Recognition Engine (CURE), neural-network-based software deployed in the system, allows extracted features to be rapidly compared with features from images of more than 4000 different cell phones stored in the system’s database. When a new cell phone model needs to be recognized, the system is trained to learn specific features of the phone and its display so that it can classify and inspect the device.

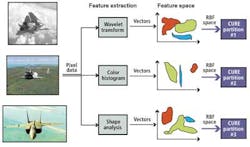

The CURE software is implemented as a single-layer artificial neural network called a radial basis function (RBF) network. Features such as edges, color, and shape are extracted from different regions of interest (ROIs) in the image using standard image-processing algorithms, and the resulting values are represented as vectors in RBF space (see Fig. 1).
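As a rough sketch of how per-ROI features might be turned into one vector, the Python below computes a mean color and an edge density for each ROI and concatenates them. The image representation (rows of RGB tuples), the ROI tuple format, the edge threshold, and the choice of features are all assumptions for illustration; the article does not specify which algorithms CURE uses.

```python
def roi_pixels(image, roi):
    """Crop an ROI given as (top, left, height, width) from rows of RGB tuples."""
    top, left, height, width = roi
    return [row[left:left + width] for row in image[top:top + height]]


def roi_features(image, roi, edge_thresh=50):
    """Mean R, G, B plus the fraction of horizontal intensity steps >= edge_thresh."""
    pixels = roi_pixels(image, roi)
    flat = [p for row in pixels for p in row]
    mean_rgb = [sum(p[c] for p in flat) / len(flat) for c in range(3)]
    gray = [[sum(p) / 3 for p in row] for row in pixels]
    steps = [abs(row[j] - row[j - 1]) for row in gray for j in range(1, len(row))]
    edge_density = sum(s >= edge_thresh for s in steps) / len(steps) if steps else 0.0
    return mean_rgb + [edge_density]


def feature_vector(image, rois):
    """Concatenate the per-ROI features into a single vector."""
    vector = []
    for roi in rois:
        vector.extend(roi_features(image, roi))
    return vector


K, W = (0, 0, 0), (255, 255, 255)
image = [[K, K, W, W],
         [K, K, W, W]]
print(feature_vector(image, [(0, 0, 2, 4), (0, 0, 2, 2)]))
# [127.5, 127.5, 127.5, 0.3333333333333333, 0.0, 0.0, 0.0, 0.0]
```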

During training, feature vectors are computed from images of each part to produce a map that represents that part. When an unknown part is presented to the system, the same image-processing operations are performed, producing another set of vectors in RBF space. If these vectors lie within the influence region of vectors known to define a specific part, the unknown part is classified as that part. If they produce a vector map that is radically different, the part is classified as unknown until the system is trained to recognize it.
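The influence-region behavior resembles the restricted Coulomb energy (RCE) style of RBF classifier, in which each stored prototype vector carries a radius that shrinks when it would cover a training example of another class. The sketch below assumes that scheme and an L1 (Manhattan) distance; the article does not describe CURE's actual training rule, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prototype:
    center: list   # stored feature vector
    label: str     # part or phone model it represents
    radius: float  # size of its influence region


def l1(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


class RCEClassifier:
    """Hypothetical RCE-style classifier: prototypes with shrinking influence regions."""

    def __init__(self, max_radius):
        self.max_radius = max_radius
        self.prototypes = []

    def train(self, x, label):
        covered = False
        for p in self.prototypes:
            d = l1(p.center, x)
            if d < p.radius:
                if p.label == label:
                    covered = True
                else:
                    p.radius = d  # shrink a conflicting region so it no longer covers x
        if not covered:
            # New prototype: radius limited by the nearest prototype of another class.
            others = [l1(p.center, x) for p in self.prototypes if p.label != label]
            radius = min([self.max_radius] + others)
            self.prototypes.append(Prototype(list(x), label, radius))

    def classify(self, x):
        labels = {p.label for p in self.prototypes if l1(p.center, x) < p.radius}
        if len(labels) == 1:
            return labels.pop()
        return "unknown" if not labels else "ambiguous"


clf = RCEClassifier(max_radius=10)
clf.train([0, 0], "phone_a")
clf.train([20, 20], "phone_b")
print(clf.classify([1, 1]), clf.classify([10, 10]))  # phone_a unknown
```

A vector that falls inside no influence region is reported as unknown, mirroring the article's description; retraining with that vector would add a new prototype for it.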

“Because each feature extraction method can be uncorrelated,” says Carruthers, “comparing multiple vectors in RBF space can result in a more complete match.” Performing each match in parallel can also increase the speed of the identification process.
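One way to picture matching several uncorrelated feature types at once is to run an independent matcher for each feature space concurrently and combine their answers. The sketch assumes a simple nearest-prototype matcher per feature space and a strict-majority vote to combine them; both rules, and all names and data, are illustrative assumptions rather than Neural ID's method.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def match(prototypes, radius, x):
    """Label of the nearest prototype within `radius` (L1 distance), else None."""
    best, best_d = None, radius
    for label, center in prototypes:
        d = sum(abs(a - b) for a, b in zip(center, x))
        if d < best_d:
            best, best_d = label, d
    return best


def combined_match(feature_spaces, query):
    """Match each feature space concurrently; return a label only on a strict majority.

    feature_spaces: {name: (list of (label, vector), radius)}
    query: {name: vector} with the same keys.
    """
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(match, protos, radius, query[name])
                   for name, (protos, radius) in feature_spaces.items()]
        votes = [f.result() for f in futures]
    counts = Counter(v for v in votes if v is not None)
    if counts:
        label, n = counts.most_common(1)[0]
        if n > len(votes) / 2:
            return label
    return "unknown"


# Hypothetical prototypes for two phone models in three feature spaces.
spaces = {
    "color": ([("phone_a", [200, 30, 30]), ("phone_b", [30, 30, 200])], 60),
    "edges": ([("phone_a", [0.2]), ("phone_b", [0.6])], 0.15),
    "shape": ([("phone_a", [1.0, 2.0]), ("phone_b", [3.0, 1.0])], 1.0),
}
print(combined_match(spaces, {"color": [190, 40, 35], "edges": [0.25], "shape": [2.9, 1.1]}))
```

In CPython, threads mainly show the structure of the concurrent match; pure-Python matching would need processes or native code to gain real speed.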

Neural ID has demonstrated its software in a system designed to inspect automotive tail-light assemblies (see Fig. 2).

“Depending on the model of automobile being manufactured,” says Carruthers, “different tail lights can be mounted on the automobile chassis. To ensure these are not scratched or damaged during the process, they are wrapped in a plastic film that scatters light from them, making it difficult for conventional machine-vision software to recognize whether the correct part has been mounted.”

To determine which tail light is present, the color histogram of a single ROI around the tail light is first analyzed and the saturation of the red component is increased. A shape profile of the pixels in each row and column of the image is then computed and stored as a single vector in RBF space. “Because each individual tail light will have a different shape profile, an analysis of these vectors allows the system to correctly classify each part,” says Carruthers.
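The tail-light steps can be approximated as follows: boost saturation for red-hued pixels, threshold the result into a red mask, and concatenate the per-row and per-column pixel counts into one profile vector. The hue window, gain, and saturation threshold are assumed values, the color-histogram analysis is omitted, and the function names are hypothetical.

```python
import colorsys


def boost_red_saturation(image, gain=1.5, hue_window=0.05):
    """Convert rows of RGB tuples to HSV, multiplying saturation by `gain` for red hues."""
    hsv = []
    for row in image:
        new_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            if h <= hue_window or h >= 1 - hue_window:  # red hue wraps around 0/1
                s = min(1.0, s * gain)
            new_row.append((h, s, v))
        hsv.append(new_row)
    return hsv


def red_mask(hsv_image, sat_thresh=0.5, hue_window=0.05):
    """1 for sufficiently saturated red pixels, 0 otherwise."""
    return [[1 if (h <= hue_window or h >= 1 - hue_window) and s >= sat_thresh else 0
             for h, s, _v in row] for row in hsv_image]


def shape_profile(mask):
    """Per-row counts followed by per-column counts, as a single vector."""
    row_counts = [sum(row) for row in mask]
    col_counts = [sum(col) for col in zip(*mask)]
    return row_counts + col_counts


def tail_light_vector(image, gain=1.5, sat_thresh=0.5):
    return shape_profile(red_mask(boost_red_saturation(image, gain), sat_thresh))


W = (255, 255, 255)  # white glare from the film
R = (200, 120, 120)  # washed-out red
roi = [[W, R, R, W],
       [W, R, R, W],
       [W, W, R, W]]
print(tail_light_vector(roi))            # [2, 2, 1, 0, 2, 3, 0]
print(tail_light_vector(roi, gain=1.0))  # [0, 0, 0, 0, 0, 0, 0]
```

The washed-out red has a saturation of about 0.4, so in this example it enters the mask only after the boost, which is the motivation for increasing red saturation before computing the profile.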
