Down to the Core

Andrew Wilson, Editor

As multicore processors are becoming commonplace, machine-vision software vendors are re-engineering their products, allowing their customers to leverage the power of multiprocessing. However, the task is not trivial since the programmer must optimize the power of image-processing functions across multiple processors. While the maximum expected performance increase of multiprocessing can be predicted to some extent by Amdahl’s law, the total performance increase will be limited by any sequential part of the program that cannot be optimized to run in parallel.

Many image-processing functions such as point processing or neighborhood operators such as convolution do lend themselves to optimization. However, other techniques—such as adaptive image processing—that require some type of decision-making process and rely heavily on branching conditions are not easily optimized.

“Typically, there are two ways to boost the performance of a machine-vision application at the system level,” says Kyle Voosen of National Instruments, “namely task-level parallelization and data-level parallelization.” Task-level parallelization is best suited for programs with independent functionality that have minimal data dependencies or shared resources—such as multicamera systems—but data-level parallelization is best suited for code that repeats several sequential stages with data dependencies between stages. Voosen adds, “Because image-processing applications tend to be serialized (acquire, filter, extract, classify, output), data-level parallelization is a popular strategy for boosting performance on multicore processors. This scenario works best when each stage of the pipeline takes a similar amount of time.”

Forking threads

Although individual vendors’ multiprocessing source code for tailoring machine-vision software remains proprietary, most split each individual task or program into threads. In developing a simple filter, for example, the programmer may have developed an application program that contains a function to perform a 3 × 3 kernel filter. This application program then forks or copies itself as a number of child processes depending on the number of processor cores available.

FIGURE 1. In a single-core implementation of a 3 × 3 filter, system performance as measured by the number of frames per second may be as high as 57 frames/s (left). Partitioning this filter across a quad-core processor can result in an increase of more than twice the speed (right).

In many software packages, the number of cores can be set by the system integrator or left as a function of the operating system. The benefits of partitioning the filter have been demonstrated by National Instruments, for instance, to show an increase of more than twice the speed when the algorithm is partitioned across multiple cores (see Fig. 1).

“In task-level parallelization, images are processed in parallel on a frame-by-frame basis by splitting the image data evenly between the available cores. In doing so, the programmer has to take into account the seams between these regions and the fact that their proper treatment will vary with the class of algorithm,” says Volker Gimple, image-processing group manager at Stemmer Imaging.

“Functions such as logical operations (to mask out image regions) or summing images to eliminate image noise just use pixels from one or more input images to calculate the pixel values of an output image,” he says. “Such functions do not require any special treatment and can easily be optimized in a data-level parallelization method, scaling almost perfectly linearly with the number of cores working on the image.”

“However,” says Stephane Maurice, software development director at Matrox Imaging, “if the image is of significant size and exceeds the cache this is often not the case. Those functions can still saturate the memory I/O bandwidth with only a few cores. In those cases, often the scaling is not linear and bandwidth is saturated with only a few cores (1–2). Add and copy functions are good examples of this. Convolution algorithms do not suffer from this I/O problem since many operations are performed on each source pixel before a result is obtained.”

Overlapping regions

Functions such as morphological filters, linear convolution filters, and dynamic thresholding operate by moving a window across the input image. When using multithreading techniques, the programmer must also be aware of any boundary effects that will occur. In performing a simple 3 × 3 convolution on a single CPU, for example, the application program may need to take into account any image artifacts that will be generated at the periphery of the image. In the simplest case, the artifacts can be eliminated by padding image pixels at the periphery with the average value of nearest neighbors.

Should the algorithm be relegated to run on a quad-core processor, where perhaps the image is split into four subsections, then there will also be overlapping regions between the pixel boundaries between the sub-images. Therefore, the original filter application may need to spawn threads for each processor and also threads that take care of any artifacts generated at the boundary conditions. However, this is not the only approach that can be taken. “The Matrox Imaging Library (MIL) does not spawn any threads for the overlap and transparently treats them in each tile,” says Maurice. “So there are means to achieve no overhead associated with this tiling overlap.”

Functions that search for the occurrence of a pattern in the image—such as geometric pattern matching, blob detection, or decision-tree-based pattern recognition (such as Stemmer’s Common Vision Blox Minos)—also suffer from this same problem. When partitioning the input image there will always be an overlapping region that must be processed more than once. As the size of the overlapping region tends to be bigger in a pattern-recognition approach than it is on a filter algorithm, the performance hit on pattern-recognition algorithms will be more noticeable.

“Like point-processing operations, geometric transformations do not suffer a systematic penalty from parallelization,” says Gimple. “When transforming an input image, the size of the resulting image is first calculated and then pixels in the original image are transformed on a per-pixel basis back into the source image using an inverse transformation.

“An interpolation algorithm is then used to accommodate the fractional coordinates generated after the inverse transformation,” he says. “Hence, there is no region in the target image that is being processed more often than necessary and the scalability is almost ideal.”

“While these operators lend themselves to multicore processing where data can be partitioned and split across multiprocessors,” says Wolfgang Eckstein, managing director of MVTec Software, “there are many important operations where this is not possible. Prominent examples are connected component analysis, various segmentation technologies, watershed algorithms, or matching based on cross-correlation, edges, or interest points.”

He continues, “For these operators a more sophisticated approach has to be applied. To allow multiprocessing to occur, multiple sub-tasks within each operator can be processed in sequence and thus the complete function parallelized.”

Big and small

Coding algorithms using transparent tiled multithreading allows them to operate equally as well on single CPUs and on multiple processors so that, once coded, the performance increase of software is to some extent dependent on the number of processor cores available.

By splitting a program into many threads, each thread acts as an individual program, operating in the same shared address space. “This allows the operating system to quickly switch between threads and makes it easy for them to share data when running in parallel,” says John Petry, business unit marketing manager of vision software at Cognex.

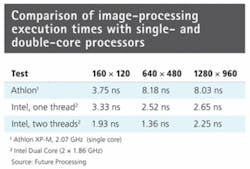

Michal Czardybon, product manager at Future Processing, has conducted tests comparing the execution times of image-processing algorithms on single- and dual-core processors. “Splitting an image for parallel processing using two threads for simple operations such as thresholding resulted in a 70–90% increase of performance. However, this increase in speed only worked on small and medium-sized images.

“When the images were larger (1280 × 960 pixels), the advantage of using two cores disappeared due to the limited size of the processor memory cache. For more complex spatial operations such as a box blur image filter, the speedup was the same for both small and large images,” says Czardybon (see table).

* All execution times normalized on a per-pixel basis

“Depending on the image size and the cache layout of the CPUs in the computer, memory bandwidth can become a limiting factor,” says Stemmer’s Gimple. “But often these situations can be defused before they occur by taking the machine architecture into account when assigning the CPU cores to their tasks: for example, by making sure that in a multicore system the memory-intense processing is evenly distributed among the available CPUs.”

Tasks in parallel

This model of multiprocessing is not the only way to increase performance. Rather than let the operating system switch between threads, it may be more effective for some image-processing algorithms to switch between processes. In this model, individual programs can operate on individual processors and address spaces.

While threads are more memory-constrained than processes because many threads can live in a process, it takes less time to start a thread and switch between them. However, by optimizing any given application into a series of processes, the system must be tailored for a specific number of processors. Should the number of processors increase, the software needs to be rewritten to accommodate them. Needless to say, this is not the approach taken by most machine-vision software vendors.

Amdahl’s law can be used to predict the theoretical maximum speed increase of a fixed problem using multiple processors. In machine-vision tasks, this is especially important since a number of images may need to be processed sequentially with multiple processors performing different operations on the image. Indeed, splitting an image for multiple cores is only one of many ways to take advantage of multicore processors and, says Future Processing’s Czardybon, may not the best one, since it complicates the implementation of individual image-processing functions.

“An alternative method,” he says, “may be to allow individual functions to operate on a single core and use pipelining instead.” In this manner, image-processing functions are split temporally instead of spatially across multicore processors. For example, in applications that require image acquisition, preprocessing, and analysis, simultaneous acquisition and preprocessing of an image may be occurring at the same time the previous image is being processed.

Pipelining and parallel execution of independent parts of an algorithm are not easy to implement using text-based imperative programming languages because they require manual thread management. However, with explicit FIFO queues for data exchange and data access synchronization, parallelization techniques can be made completely automatic and transparent for a user in dataflow-based programming environments such as National Instruments’ LabView. According to Czardybon, this type of programming environment will be implemented in the company’s Adaptive Vision Studio instead of low-level parallelization based on individual image splitting.

“However,” says Matrox’s Maurice, “one of the biggest disadvantages of pipelining with only one core for each stage is that the output speed of the pipeline is limited by the slowest member of the chain. With a parallel tiling approach, all the resources/cores can contribute at 100% to accelerate all the stages of the chain, thus reducing the whole pipeline latency.”

Pipelined approaches

“In a multithreaded environment, the system integrator needs precise control over which cores are being used for what processing tasks,” says Stemmer’s Gimple. “If an API provides parallelization in a ‘black box’ fashion, this is somewhat difficult to implement without re-engineering the existing programming interface. On the other hand, providing two versions of the API [with and without automatic parallelization] is not an attractive concept.”

Stemmer Imaging uses a round-robin approach, taking control over which thread does what and distributing the incoming images evenly and entirely (without partitioning them) over the CPU cores. In this implementation, all the image-processing cores can execute the same function, shifted by the offset of the images’ time of arrival. This round-robin approach in which a free core obtains the next image for processing is not a true pipeline because true pipelining involves partitioning a sequence of operations in which each core performs a specific operation.

“However,” says Gimple, “if the whole process of retrieving an image and extracting the desired results from it exists as one ‘work package,’ then this approach could be considered pipelining. The sheer size of the work packages may lead to less-than-ideal speed gain, especially when compared to a situation where the pipelined packages are more granular. However, if the work packages are made as granular as reasonably possible, then the work-stealing threading model may be easier to implement.”

In the approach taken by Stemmer, the assignment of cores and processing is not hidden from the programmer, and processing tasks can be optionally assigned to a specific core rather than relying on the operating system’s scheduler, a desirable feature when there are virtual HyperThreading cores in the system.

The round-robin approach may increase processing latency and delay the availability of results because each image is only processed by one core instead of being processed across multiple cores.

However, regardless of the type of operations involved, there are no redundancies in the computations. Since no partitions are being made, no seams are present in the image data that need special treatment—therefore, a higher speed gain due to parallelization can be expected.

While the throughput of a program parallelized in a pipelined fashion is at least equal to and sometimes even better than that of black box parallelization, the individual time to process an image may be slower but the overall system throughput can be higher, because four images can be processed on four cores in parallel. In most cases, this increase in overall throughput is what is required. Consequently, about 20% of Stemmer Imaging’s current customers have currently built applications in this fashion.

Combined approaches

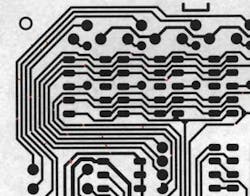

“When looking at any machine-vision application where a combined sequence of operators is used, it is clear that as many operators as possible must support automatic operator parallelization to achieve a significant overall speedup,” says MVTec’s Eckstein. To detect defects on PCB traces, for example, a gray opening and a gray closing have to be calculated. The resulting two images are used as input to a thresholding operator that determines the differences between them. These differences are the defects on the PCB (see Fig. 2).

To effectively increase the throughput of applications, vision libraries partition image-processing applications either by partitioning the image or series of image-processing algorithms or sub-algorithms or use a combination of the two approaches. At DALSA, the company plans to allow system developers to combine both of these approaches in its next-generation Sapera Essential machine-vision software. “Taking this approach,” says Bruno Menard, software team leader of the vision processors group, “will allow the system integrator to tailor the application to take full advantage of both methods of increasing system throughput.”

To take full advantage of machine-vision software, many vendors offer specific APIs that allow system developers to tailor their applications for multicore processors. What is missing, however, as DALSA’s Menard points out, is a simulation environment that effectively models processing power, number of cores, cache memory size, and I/O capability of the target system. While simulators like this remain the subject of academic research, it will be the task of the system developers to fully understand their applications and how to effectively optimize them.

“Although there is no single way to maximize the gains from multiple cores, there are ways to make it easier,” says Matrox’s Maurice. “Choosing an API that offers transparent improvement of existing programs is the best way to start optimizing for multicores. The API should also offer extensive options to let users control how the cores are used and the ability to coexist with other multiprocessing approaches like OS threads and GPUs.”

“Because of this,” says Petry at Cognex, “optimizing a machine-vision application for multicore processors can be a complex process with unpredictable results. Field testing is the only way to fully measure system throughput.”

Company Info

Cognex, Natick, MA, USA

www.cognex.com

DALSA, Waterloo, ON, Canada

www.dalsa.com

Future Processing, Gliwice, Poland

www.future-processing.com

Matrox Imaging, Dorval, QC, Canada

www.matrox.com/imaging

MVTec Software, Munich, Germany

www.mvtec.com

National Instruments, Austin, TX, USA

www.ni.com

Stemmer Imaging, Puchheim, Germany

www.stemmer-imaging.com