GPUs battle for imaging applications

Performing general-purpose computing on graphics processing units (GPUs) has generated much interest over the last few years. GPUs are now used by commercial vendors of machine-vision software such as Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.de; see Vision Systems Design, September 2007, p. 17). The General-Purpose Computation Using Graphics Hardware Web site (www.gpgpu.org) now lists more than 40 independent groups developing software for graphics processors.

Many observers believe that the rise of these processors in computationally intensive systems will matter more than the choice of host processor. For example, a recent article by David Strom in Information Week (www.informationweek.com) argues that graphics cards being developed by Nvidia (Santa Clara, CA, USA; www.nvidia.com) and ATI, now part of AMD (Sunnyvale, CA, USA; www.amd.com), will have a bigger impact on computational processing than the latest processors from either Intel or AMD.

As graphics processors become easier to program, they will perform more than graphics-specific computations. Indeed, the speed and architecture of these devices make them useful as general-purpose coprocessors for a variety of other applications, including image processing and machine vision.

However, the battle for dominance in this market is just beginning. To create code for these applications, developers can choose between two APIs. Microsoft's DirectX 10 builds on its Direct3D API, a COM-based approach that runs only on the Windows Vista operating system. OpenGL (www.opengl.org), originally developed by Silicon Graphics (Sunnyvale, CA, USA; www.sgi.com), is by contrast an open standard that is independent of the host operating system. Both OpenGL and DirectX are now supported across the AMD and Nvidia ranges of add-in graphics cards.

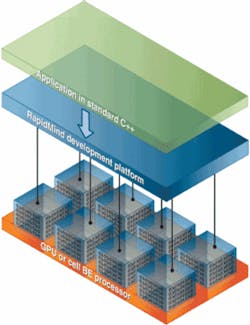

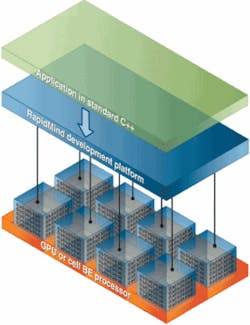

RapidMind provides a software development platform that allows developers to use standard C++ to create parallel applications that run on graphics processing units.

While vendors such as Stemmer Imaging prefer Microsoft's DirectX API, academic researchers such as those at the University of Toronto (Toronto, ON, Canada; www.eecg.toronto.edu) are developing vision systems based on OpenGL. For example, the OpenVIDIA project, part of Professor Steve Mann's EyeTap Personal Imaging Lab (www.eyetap.org), uses single or multiple graphics cards to accelerate image analysis and computer vision.

OpenVIDIA is both an open-source library and an API that provides graphics-hardware-accelerated image processing and computer vision. It runs on one or more GPUs in commodity PC hardware. The API implements commonly used computer-vision algorithms such as Canny edge detection, image filtering, and feature identification and matching. And because the project is open source, its code can serve as a template and example for mapping similar algorithms onto graphics hardware.

Many of those involved in image processing and machine vision program these graphics devices using either DirectX or OpenGL. However, these high-level APIs do not give developers access to the low-level functions of the graphics devices themselves. To put more of their hardware's capabilities into the hands of software developers, both Nvidia and AMD offer software tools that allow such development.

The Nvidia Compute Unified Device Architecture (CUDA) provides a set of extensions to the C language that allow programmers to mark portions of their source code for execution on the GPU. As part of CUDA, Nvidia provides a runtime with host, device, and common components that issues and manages computations on the GPU without the need to map them to a graphics API. When programmed through CUDA, the GPU is viewed as a compute device capable of executing a large number of threads in parallel. Compute-intensive portions of applications running on the host can therefore be offloaded onto the GPU.

This approach differs notably from AMD's. With its latest generation of devices, the company has introduced what it calls a "close to metal" (CTM) initiative that gives developers access to the device's native instruction set and memory. Thus, developers can program AMD's graphics products directly in assembly language.

“The idea is that a development community will create the sort of libraries and higher-level tools that Nvidia is providing in a prepackaged but closed form with CUDA,” says Jon Stokes at arstechnica.com. “People who want to do math coprocessing with Nvidia’s ICs will have to rely on the quality, stability, and performance of the company-provided driver, whereas CTM lets you roll your own interface to the hardware if you do not like what is offered by AMD.”

But the question of whether CUDA or CTM will win developers' hearts may be moot. Third-party developers are already creating high-level support for these and other processors. One company, RapidMind (Waterloo, ON, Canada; www.rapidmind.net), provides a dynamic compiler that lets developers use standard C++ to create parallel applications that run on processors including Nvidia's GeForce 8000 series, the ATI/AMD 2x00 series, and IBM's QS20 Blade server with the Cell BE processor.

"Users of the RapidMind development platform continue to program in C++ using their existing compilers," says Michael McCool, RapidMind's founder and chief scientist. "After identifying components of their application to accelerate, the developer replaces numerical types representing floating-point numbers and integers with the equivalent RapidMind types. While the application is running, sequences of numerical operations invoked by the user's application are captured, recorded, and dynamically compiled to a program object. The RapidMind platform runtime is then used to manage parallel execution of program objects on the target GPU."