Exploring image-processing-software techniques

Peter Eggleston

After consulting with our readers, the editors of Vision Systems Design have concluded that image-processing software deserves more comprehensive coverage. Our goal is to provide you with deeper insight into the software algorithms and tools used in machine-vision and imaging-system designs and applications. This month, we begin a series of articles that will describe, discuss, and analyze the capabilities of available image-processing techniques with practical applications. We welcome your comments.--Ed.

Often, various categories of image-processing software must be combined to create a machine-vision or imaging application. The design goals of a vision system will dictate the type and complexity of the actual techniques used. For instance, if the goal is to make the images "look better," that is, to enhance or suppress certain qualities of the data, then the software image processes involved are much simpler than those required for a system that must replicate a human-vision function. Such software can change the way the data look and might encompass uneven lighting compensation, contrast stretching, feature boosting or suppression, or noise removal.

Filtering

A fundamental image-processing-software technique is called filtering. This broad term encompasses software operators that act on an image pixel by pixel, applying the same function to every picture element. This type of software is concerned only with the values of the pixels rather than with other spatial information in the image.
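As an illustration of a pixel-by-pixel operator, the following minimal sketch performs a linear contrast stretch. It assumes a grayscale image stored as a list of rows of numbers; the function name `contrast_stretch` and the 0-to-255 output range are illustrative choices, not taken from any particular product.

```python
def contrast_stretch(image, out_min=0, out_max=255):
    """Linearly rescale pixel values so they span [out_min, out_max]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A perfectly flat image has no contrast to stretch.
        return [[out_min for _ in row] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    # The same mapping is applied to every pixel, independent of its neighbors.
    return [[round(out_min + (p - lo) * scale) for p in row] for row in image]


image = [[50, 60],
         [70, 100]]
stretched = contrast_stretch(image)
print(stretched)
```

The darkest input value maps to the bottom of the output range and the brightest to the top; every other pixel is placed proportionally between them.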

Accordingly, software functional operators that warp or transform the geometric information inherent in the image are not grouped into the enhancement class. These operators are more often referred to as geometric transforms. Likewise, software functions that convert images between color spaces are also thought of as transformations, rather than as enhancement.

Other filtering functions include frequency-selective operations that boost or suppress high-, low-, or band-selective frequency components in the image data. Fast-Fourier-transform operators are often used for this purpose because multiplying by a filter mask in the frequency domain can take fewer central-processing-unit cycles than convolution-based spatial filtering of the original image, particularly when the filter kernel is large.
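The idea of multiplying by a filter mask in the frequency domain can be sketched on a single scan line. For readability, this example uses a naive discrete Fourier transform written with the standard `cmath` module rather than a true fast Fourier transform, so it shows the masking step but not the speed advantage. The function names and the `cutoff` parameter are assumptions made for illustration.

```python
import cmath


def dft(signal):
    """Naive discrete Fourier transform (O(n^2)); an FFT gives the same result faster."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def low_pass(signal, cutoff):
    """Zero every frequency bin above `cutoff`, then transform back."""
    n = len(signal)
    spectrum = dft(signal)
    # Bins k and n - k carry the same frequency, so the mask keeps both.
    mask = [1.0 if min(k, n - k) <= cutoff else 0.0 for k in range(n)]
    filtered = [s * m for s, m in zip(spectrum, mask)]
    return [v.real for v in idft(filtered)]


# One scan line: a constant level of 12 plus a rapid alternation of +/-2.
row = [10, 14, 10, 14, 10, 14, 10, 14]
smoothed = low_pass(row, cutoff=1)
print([round(v, 6) for v in smoothed])
```

Because the alternation sits at the highest frequency the scan line can represent, the mask removes it and only the constant level remains.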

Image enhancement refers to a subset of filtering functions that are typically applied to images to enhance or suppress particular features of the data, usually related to the viewing quality of the image. However, there is no official standard for classifying image-processing operations, and manufacturers often use these terms loosely.

Moreover, the imaging application in which a software operator is being used might influence how that operation is categorized. For instance, a low-pass filter may be used to remove unwanted components of an image, thus enhancing the image quality. Or, it may be used to compensate for uneven lighting conditions, thus acting as a filter to remove unwanted artifacts of the data-acquisition process.
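One common way to use a low-pass filter for lighting compensation is to treat the low-pass result as an estimate of the slowly varying illumination and subtract it from the image. The sketch below assumes that approach, with a simple box (mean) filter as the low-pass stage; the function names and the `radius` parameter are hypothetical.

```python
def box_blur(image, radius):
    """Low-pass filter: replace each pixel with the mean of its neighborhood."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        out_row = []
        for x in range(w):
            # The window is clipped at the image border.
            window = [image[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out


def flatten_lighting(image, radius):
    """Subtract the low-pass background estimate from the image."""
    background = box_blur(image, radius)
    return [[p - b for p, b in zip(img_row, bg_row)]
            for img_row, bg_row in zip(image, background)]


# Illumination ramps from dark (left) to bright (right); one small feature at row 2, column 1.
image = [[10 * x for x in range(7)] for _ in range(5)]
image[2][1] += 15
flat = flatten_lighting(image, radius=1)
brightest = max((v, (y, x)) for y, r in enumerate(flat) for x, v in enumerate(r))
print(brightest[1])
```

In the raw image the brightest pixels lie along the right edge because of the lighting ramp; after the background is subtracted, the small feature stands out instead.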

Segmentation

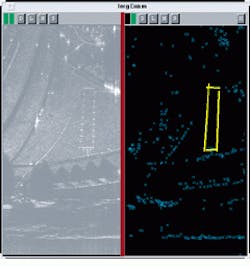

Filtering techniques are also used to facilitate further image processing, such as segmentation--the process of extracting or delineating areas of interest from an image. For example, consider an application that calls for automatically locating a landing strip in a radar image (see figure). The first step in locating the landing strip is to find the radar reflectors that border it. Here, local maximal segmentation is used to extract the bright dots that correspond to these reflectors.

This imaging process works as follows: for every pixel in the input image, the corresponding pixel in the output image is set to a value of 1 if the value of the pixel is greater than some user-defined threshold and also greater than the values of all of its surrounding pixels. Otherwise, the output pixel is set to zero. After extraction, features can be computed for each runway reflector, such as its location, brightness, and orientation to the other reflectors. For some applications, this will be the end result--a list of objects and their characteristics.
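The thresholded local-maximum rule described above can be sketched as follows. The text does not say how large the surrounding neighborhood is, so this example assumes the eight immediate neighbors of each pixel; the function name and the tiny test image are illustrative only.

```python
def local_max_segment(image, threshold):
    """Mark pixels that exceed `threshold` and every one of their 8 neighbors."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            value = image[y][x]
            if value <= threshold:
                continue
            # Collect the in-bounds neighbors, excluding the pixel itself.
            neighbors = [image[j][i]
                         for j in range(max(0, y - 1), min(h, y + 2))
                         for i in range(max(0, x - 1), min(w, x + 2))
                         if (j, i) != (y, x)]
            if all(value > n for n in neighbors):
                out[y][x] = 1
    return out


image = [[1, 1, 1, 1, 1],
         [1, 9, 1, 1, 1],
         [1, 1, 1, 4, 1],
         [1, 1, 1, 1, 8]]
mask = local_max_segment(image, threshold=5)

# A simple feature list: (row, column, brightness) for each extracted dot.
reflectors = [(y, x, image[y][x])
              for y, r in enumerate(mask) for x, v in enumerate(r) if v]
print(reflectors)
```

The pixel with value 4 is a local peak but falls below the threshold, so only the two brighter dots are reported.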

In other applications, the vision system might require further processing of the objects. In the landing-strip example, the extracted reflectors can be grouped to form geometric shapes such as lines, corners, and rectangles. To perform this grouping, links are first established between neighboring reflectors. This is done via morphological processes that define "neighborhoods" of pixels about each pixel. Neighborhoods that overlap get linked. Once linked, lines are fit to these groupings. Last, line-intersection operators are used to look for lines that intersect at nearly right angles to form corners. Pairs of corners that are facing in opposite directions are grouped to form rectangles. The resulting rectangle formed by grouping the individual reflectors allows the vision system to recognize the presence and location of the landing strip. In other instances, recognition may involve comparing features of the extracted objects to statistical samples, templates, or rules, which would then assign each object to a prototypical type or class.
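A simplified sketch of the linking, line-fitting, and right-angle steps appears below. It is not the morphological implementation the column describes: as a stand-in, each reflector is given a circular neighborhood of an assumed `radius`, and two reflectors are linked when their circles overlap. The line fit uses the principal axis of each group, and the corner test only compares orientations; locating the intersection point and assembling rectangles are left out. All names and parameter values are hypothetical.

```python
import math


def link_groups(points, radius):
    """Group points whose circular neighborhoods overlap, directly or through a chain."""
    groups, unvisited = [], set(range(len(points)))
    while unvisited:
        stack = [unvisited.pop()]
        group = []
        while stack:
            i = stack.pop()
            group.append(points[i])
            # Two circles of the same radius overlap when centers are within 2 * radius.
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= 2 * radius]
            for j in near:
                unvisited.remove(j)
                stack.append(j)
        groups.append(group)
    return groups


def fit_orientation(group):
    """Orientation (radians) of the principal axis of a group of (x, y) points."""
    n = len(group)
    mx = sum(p[0] for p in group) / n
    my = sum(p[1] for p in group) / n
    sxx = sum((p[0] - mx) ** 2 for p in group)
    syy = sum((p[1] - my) ** 2 for p in group)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in group)
    return 0.5 * math.atan2(2 * sxy, sxx - syy)


def is_corner(theta_a, theta_b, tolerance_deg=10):
    """True when two line orientations differ by nearly 90 degrees."""
    diff = abs(math.degrees(theta_a - theta_b)) % 180
    return abs(diff - 90) <= tolerance_deg


# Two rows of reflectors: one horizontal, one vertical, with a gap between them.
reflectors = [(0, 0), (1, 0), (2, 0), (3, 0), (5, 2), (5, 3), (5, 4), (5, 5)]
groups = link_groups(reflectors, radius=0.6)
angles = [fit_orientation(g) for g in groups]
corner_found = is_corner(angles[0], angles[1])
print(len(groups), corner_found)
```

The choice of radius controls how aggressively reflectors are chained together; too large a value would merge both sides of the corner into a single group before any line could be fit.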

In future columns, other image-processing operators, such as those that perform edge- and region-based segmentation, will be explored in more detail. Advanced segmentation techniques will also be covered. In some instances, it is not possible to set the segmentation-process parameters so that all the objects of interest are separated from the background without oversegmenting the data.

Oversegmentation is the process by which the objects being segmented from the background are themselves segmented or fractured into subcomponents. In this case, the objects can be reassembled using region-merging techniques. In other situations, preliminary segmentation of the image may result in undersegmentation, whereby some objects of interest are adjacent or overlapping and are represented as a single object, rather than discrete components. Region-splitting techniques, which are based on the shape or statistics of the objects, can be used to break apart the objects in these situations.

Other processing techniques that will be investigated include morphological operators. These "shape-based" functions, which alter the image, are based on local processing templates called structuring elements. Morphological operators can be used to perform a variety of useful functions such as segmentation, boundary elimination, and hole filling. Despite these varied uses, the operators are grouped into the morphology class because they share the common trait of processing based on geometric structure.
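To make the role of a structuring element concrete, the following sketch implements binary dilation and erosion with a 3 x 3 square element and combines them into a closing (dilation followed by erosion), one simple way to fill a small hole. It assumes a binary image stored as rows of 0s and 1s and treats everything outside the image as background; the names are illustrative.

```python
# Structuring element as a list of (row, column) offsets: a 3 x 3 square.
SQUARE_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def _get(image, y, x):
    """Pixel value, with everything outside the image treated as background (0)."""
    if 0 <= y < len(image) and 0 <= x < len(image[0]):
        return image[y][x]
    return 0


def dilate(image, element=SQUARE_3X3):
    """Output is 1 where the element, centered on the pixel, touches any 1."""
    return [[int(any(_get(image, y + dy, x + dx) for dy, dx in element))
             for x in range(len(image[0]))] for y in range(len(image))]


def erode(image, element=SQUARE_3X3):
    """Output is 1 only where the element, centered on the pixel, covers all 1s."""
    return [[int(all(_get(image, y + dy, x + dx) for dy, dx in element))
             for x in range(len(image[0]))] for y in range(len(image))]


def closing(image, element=SQUARE_3X3):
    """Dilation followed by erosion; fills gaps smaller than the element."""
    return erode(dilate(image, element), element)


# A 3 x 3 object with a one-pixel hole in its center.
ring = [[0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 0, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0]]
filled = closing(ring)
for row in filled:
    print(row)
```

The object keeps its outer boundary while the hole disappears; a differently shaped structuring element would fill or preserve different geometric details.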

This column will also deal with statistical and fuzzy-based classification techniques. These approaches will be contrasted with traditional template-matching and correlation techniques. For instance, the use of feature-based pattern recognition to provide faster, rotation- and scale-invariant recognition capabilities will be presented.

PETER EGGLESTON is North American sales director, Imaging Products Division, Amerinex Applied Imaging Inc., Amherst, MA; e-mail: [email protected].

The goal in processing a synthetic-aperture-radar image of a landing strip was to group the runway reflectors to form a geometric shape that would enable the recognition of the strip. Software morphological techniques were initially used to extract the strong reflector details. Software grouping techniques were then applied to form the lines, corners, and rectangles of the runway.