MIT chip cleans up images

In work funded by the Taiwan-based Foxconn Technology Group, researchers at MIT's Microsystems Technology Laboratory (Cambridge, MA, USA) have developed an image processing circuit that can help a smart phone produce more realistic images.

Existing computational photography systems tend to be software applications that are installed onto cameras and smart phones. However, such systems consume substantial power, take a considerable amount of time to run, and require a fair amount of knowledge on the part of the user, says Rahul Rithe, a graduate student in MIT's Department of Electrical Engineering and Computer Science.

"We wanted to build a single chip that could perform multiple operations, consume significantly less power compared to doing the same job in software, and do it all in real time," Rithe says.

He developed the chip with Anantha Chandrakasan, the Joseph F. and Nancy P. Keithley Professor of Electrical Engineering, fellow graduate student Priyanka Raina, research scientist Nathan Ickes and undergraduate Srikanth Tenneti.

One task the chip performs is high dynamic range (HDR) imaging. HDR compensates for the limited range of brightness that existing digital cameras can record, producing pictures that more accurately reflect the way our eyes perceive the same scenes.

To do this, the chip's processor automatically takes three separate "low dynamic range" images with the camera: a normally exposed image, an overexposed image capturing details in the dark areas of the scene, and an underexposed image capturing details in the bright areas. It then merges them to create one image capturing the entire range of brightness in the scene.
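The article does not describe how the chip merges the three exposures. As a rough illustration only, the Python sketch below shows one common textbook approach. Each exposure is divided by its relative exposure time to estimate scene radiance, and the estimates are averaged with a "hat" weight that distrusts near-black and clipped pixels. It assumes a linear camera response, perfectly aligned frames, known exposure ratios, and pixel values normalized to [0, 1]. The function names (`simulate_exposure`, `merge_exposures`, `tone_map`) are hypothetical, and the global curve r / (1 + r) is just one simple way to squeeze the merged result back into a displayable range.

```python
def simulate_exposure(radiance, exposure_time):
    """Hypothetical linear camera: value = radiance * time, clipped at white (1.0)."""
    return [min(1.0, r * exposure_time) for r in radiance]


def hat_weight(z):
    """Trust mid-tones most; pixels near black (0) or clipped white (1) get ~0 weight."""
    return max(0.0, 1.0 - abs(2.0 * z - 1.0))


def merge_exposures(images, exposure_times):
    """Merge aligned LDR frames (flat lists in [0, 1]) into relative scene radiance.

    Assumes a linear response, so value / exposure_time estimates radiance.
    """
    t_short = min(exposure_times)
    shortest = images[exposure_times.index(t_short)]
    merged = []
    for p in range(len(images[0])):
        num = 0.0
        den = 0.0
        for img, t in zip(images, exposure_times):
            w = hat_weight(img[p])
            num += w * img[p] / t
            den += w
        if den > 0.0:
            merged.append(num / den)
        else:
            # Every frame is black or clipped here; fall back to the shortest exposure.
            merged.append(shortest[p] / t_short)
    return merged


def tone_map(radiance):
    """Simple global curve r / (1 + r) that maps [0, inf) into [0, 1) for display."""
    return [r / (1.0 + r) for r in radiance]


# Under-, normally and over-exposed shots (relative exposure times are assumptions).
times = [0.25, 1.0, 4.0]
scene = [0.05, 0.4, 2.0]  # dark shadow, mid-tone, bright highlight
shots = [simulate_exposure(scene, t) for t in times]
print(tone_map(merge_exposures(shots, times)))
```

In this toy scene the bright highlight is clipped in both the normal and the overexposed frame, so its value comes almost entirely from the underexposed frame, while the shadow is recovered mainly from the longer exposures.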

Software-based systems typically take several seconds to perform this operation, while the MIT chip can do it in a few hundred milliseconds on a 10-Mpixel image.

Another task the chip can carry out is to enhance the lighting in a darkened scene. "Typically when taking pictures in a low-light situation, if we don't use flash on the camera we get images that are pretty dark and noisy, and if we do use the flash we get bright images but with harsh lighting, and the ambience created by the natural lighting in the room is lost," Rithe says.

In this instance, the processor takes two images, one with a flash and one without. It then merges the two images, preserving the natural ambience from the nonflash shot, while extracting the details from the picture taken with the flash.
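The article does not spell out how the two shots are combined. One approach from the computational photography literature, which this Python sketch loosely follows, is to denoise the no-flash (ambient) image with a joint, or cross, bilateral filter that takes its edge information from the cleaner flash image. A detail layer, computed as the ratio of the flash image to a smoothed copy of itself, is then multiplied in. Everything here is an assumption for illustration: short 1D lists stand in for aligned images, and `merge_flash_no_flash`, `eps`, and the filter parameters are hypothetical choices, not values from the MIT chip.

```python
import math


def joint_bilateral_1d(signal, guide, radius, sigma_s, sigma_r):
    """Smooth `signal`, but judge brightness similarity on `guide` (joint/cross bilateral)."""
    n = len(signal)
    out = []
    for i in range(n):
        num = den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2.0 * sigma_s ** 2))
            w_range = math.exp(-((guide[i] - guide[j]) ** 2) / (2.0 * sigma_r ** 2))
            num += w_space * w_range * signal[j]
            den += w_space * w_range
        out.append(num / den)  # den > 0 because j == i always has weight 1
    return out


def merge_flash_no_flash(ambient, flash, radius=2, sigma_s=1.0, sigma_r=0.1, eps=0.02):
    """Hypothetical merge: keep the ambient lighting, borrow edges and detail from the flash."""
    # 1. Denoise the dark ambient shot, using the flash shot to decide where edges are.
    ambient_base = joint_bilateral_1d(ambient, flash, radius, sigma_s, sigma_r)
    # 2. Detail layer: flash image divided by its own edge-preserving smoothing.
    flash_base = joint_bilateral_1d(flash, flash, radius, sigma_s, sigma_r)
    detail = [(f + eps) / (fb + eps) for f, fb in zip(flash, flash_base)]
    # 3. Put the flash detail back on top of the ambient lighting.
    return [a * d for a, d in zip(ambient_base, detail)]


# Illustrative data: a noisy, dim ambient shot and a bright, clean flash shot.
ambient = [0.05, 0.09, 0.04, 0.08, 0.06, 0.30, 0.26, 0.33, 0.27, 0.31]
flash = [0.6] * 5 + [0.9] * 5
print(merge_flash_no_flash(ambient, flash))
```

The output keeps the ambient shot's overall brightness in each region (the "natural ambience") while its noise is smoothed using edges located in the flash shot. Because the flash signal here is a clean step, its detail layer stays close to 1; a textured flash image would contribute visible fine detail.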

To remove unwanted features from the image, such as noise, the device blurs each pixel with its surrounding neighbors so that it more closely matches them.

In conventional filtering, even pixels at the edges of objects are blurred, which produces a less detailed image. By using a bilateral filter, however, the researchers were able to preserve these outlines, because a bilateral filter blurs a pixel only with neighbors that have a similar brightness value.
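To make the difference concrete, here is a minimal one-dimensional Python sketch, not the chip's implementation, comparing a conventional Gaussian blur with a bilateral filter on a noisy step edge. In the bilateral filter each neighbor's weight is the product of a spatial Gaussian (width `sigma_s`) and a brightness-similarity Gaussian (width `sigma_r`), so neighbors on the far side of an edge contribute almost nothing. The function names, parameter values, and sample data are illustrative assumptions; a real image filter operates on 2D neighborhoods.

```python
import math


def gaussian_blur_1d(signal, radius, sigma_s):
    """Conventional blur: weights depend only on distance, so edges get smeared."""
    n = len(signal)
    out = []
    for i in range(n):
        num = den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w = math.exp(-((i - j) ** 2) / (2.0 * sigma_s ** 2))
            num += w * signal[j]
            den += w
        out.append(num / den)
    return out


def bilateral_filter_1d(signal, radius, sigma_s, sigma_r):
    """Edge-preserving blur: a neighbor's weight also shrinks as its brightness differs."""
    n = len(signal)
    out = []
    for i in range(n):
        num = den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2.0 * sigma_s ** 2))
            w_range = math.exp(-((signal[i] - signal[j]) ** 2) / (2.0 * sigma_r ** 2))
            num += w_space * w_range * signal[j]
            den += w_space * w_range
        out.append(num / den)
    return out


# A noisy dark region next to a noisy bright region (illustrative values).
noisy = [0.10, 0.12, 0.08, 0.11, 0.09, 0.90, 0.88, 0.92, 0.89, 0.91]
print(gaussian_blur_1d(noisy, radius=2, sigma_s=1.0))
print(bilateral_filter_1d(noisy, radius=2, sigma_s=1.0, sigma_r=0.1))
```

With `sigma_r` much smaller than the height of the edge, the Gaussian blur lifts the last dark sample to roughly a third of full brightness, while the bilateral output there stays close to 0.1 and the noise inside each flat region is still smoothed.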

The algorithms implemented on the chip were inspired by the computational photography work of Fredo Durand, an associate professor of computer science and engineering, and Bill Freeman, a professor of computer science and engineering, in MIT's Computer Science and Artificial Intelligence Laboratory.

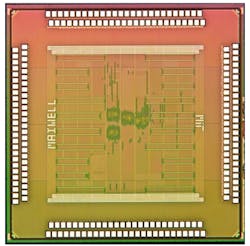

With the aid of Taiwanese semiconductor manufacturer TSMC's University Shuttle Program, the researchers have already built a working prototype of the chip using 40-nm CMOS technology, and integrated it into a camera and display. They will be presenting their chip at the International Solid-State Circuits Conference in San Francisco this month.

Related items from Vision Systems Design that you might also find of interest:

1. Embedded processor optimizes feature-extraction algorithms

With support from the National Science Foundation (NSF; Arlington, VA, USA) and the Gigascale Systems Research Center (GSRC; Princeton, NJ, USA), Silvio Savarese of the University of Michigan (Ann Arbor, MI, USA) and his colleagues have developed a multicore processor specifically designed to increase the speed of feature-extraction algorithms.

2. Guide helps programmers take advantage of multi-core processors

To help image processing software developers employ best practices when writing multicore-ready embedded software, the Multicore Association (El Dorado Hills, CA, USA) has produced the Multicore Programming Practices (MPP) guide.

3. GPU speeds up Generalized Hough Transform

Researchers at KPIT Cummins Infosystems (Pune, India) have created a parallel implementation of the Generalized Hough Transform (GHT) algorithm that runs on a GPU (graphics processing unit). The researchers claim that their implementation is 80 times faster than a CPU-based version.

-- Dave Wilson, Senior Editor, Vision Systems Design