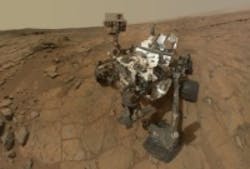

Image analysis algorithm will help NASA's Curiosity Rover analyze Mars soil

A multi-organizational research team has developed an image analysis and segmentation algorithm specifically designed to help NASA scientists analyze images of soil captured on the surface of Mars.

The team is made up of members from Louisiana State University, Rider University, Stony Brook University and the U.S. Geological Survey. The algorithm was developed and implemented in Mathematica, a computational software program. According to a RedOrbit press release, the algorithm uses a series of image processing steps to first segment the image into foreground and background grains, and then continues the process until nearly all coarser and finer grains are outlined.
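The press release does not detail the team's Mathematica pipeline, so the following is only a minimal Python sketch of the general idea under simplifying assumptions: a single global Otsu threshold separates bright grains from a darker background, and 4-connected component labeling outlines each grain and counts its pixels. The function names `otsu_threshold` and `label_grains` are hypothetical, and unlike a real soil-image pipeline this sketch would merge grains that touch one another.

```python
from collections import deque


def otsu_threshold(pixels):
    """Return the 0-255 threshold that maximizes between-class variance (Otsu)."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    sum_bg, weight_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue  # no background pixels yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every pixel is background; no further split possible
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        var_between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def label_grains(image, threshold):
    """Label 4-connected foreground regions (pixel > threshold); return labels and areas."""
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    areas = []  # areas[k] is the pixel count of grain label k + 1
    for r in range(rows):
        for c in range(cols):
            if image[r][c] > threshold and labels[r][c] == 0:
                label = len(areas) + 1
                labels[r][c] = label
                queue = deque([(r, c)])
                area = 0
                while queue:  # breadth-first flood fill of one grain
                    y, x = queue.popleft()
                    area += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and image[ny][nx] > threshold
                                and labels[ny][nx] == 0):
                            labels[ny][nx] = label
                            queue.append((ny, nx))
                areas.append(area)
    return labels, areas


if __name__ == "__main__":
    # Tiny synthetic 8-bit image: dark background (10) with three bright grains.
    image = [
        [10, 10, 200, 200, 10],
        [10, 10, 200, 200, 10],
        [10, 10, 10, 10, 10],
        [220, 10, 10, 180, 180],
        [220, 10, 10, 180, 10],
    ]
    t = otsu_threshold([p for row in image for p in row])
    _, grain_areas = label_grains(image, t)
    print("threshold:", t, "grain areas (pixels):", grain_areas)
```

A global threshold keeps the example short; real grain images with uneven lighting and touching particles generally need local thresholds and a separation step such as a watershed transform.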

"The code processes a single image within 1 to 5 minutes," LSU officials said in the press release. "The semi-automated algorithm, while comparing favorably with manual (human) segmentation, provides better consistency across multiple images than a human. The researchers are exploring the use of this algorithm to quantify grain sizes in the images from the Mars Exploration Rovers Microscopic Imager (MI) as well as Curiosity’s Mars Hand Lens Imager (MAHLI)."

The grain size distributions measured in the soil images could reveal trends, and the ability to identify most of the grains in each image will also enable more detailed, area-weighted sedimentology, they added.
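To illustrate how a simple per-grain count differs from an area-weighted view, here is a hypothetical Python sketch that converts per-grain pixel areas into equivalent-circle diameters and compares a number-weighted mean with an area-weighted mean. The pixel scale is an input; the example uses MAHLI's finest quoted resolution of 13.9 µm per pixel purely as an illustrative value, and the grain areas and function names are invented for this sketch, not taken from the research team's code.

```python
import math


def equivalent_diameters(areas_px, um_per_px):
    """Diameter (µm) of the circle whose area matches each grain's pixel area."""
    return [2.0 * math.sqrt(a / math.pi) * um_per_px for a in areas_px]


def weighted_mean(values, weights):
    """Mean of `values`, with each value counted in proportion to its weight."""
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total


def size_summary(areas_px, um_per_px):
    """Return (number-weighted, area-weighted) mean equivalent diameter in µm."""
    diameters = equivalent_diameters(areas_px, um_per_px)
    by_count = weighted_mean(diameters, [1.0] * len(diameters))
    by_area = weighted_mean(diameters, areas_px)  # big grains count for more
    return by_count, by_area


if __name__ == "__main__":
    # Hypothetical grain areas (pixels) from a segmented close-up image.
    areas = [12, 30, 45, 200, 850]
    n_mean, a_mean = size_summary(areas, um_per_px=13.9)
    print(f"number-weighted mean diameter: {n_mean:.0f} um")
    print(f"area-weighted mean diameter:   {a_mean:.0f} um")
```

Because larger grains carry more weight, the area-weighted mean exceeds the simple per-grain average whenever grain sizes vary, so the two measures can describe the same soil quite differently.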

According to NASA, the Curiosity Rover is equipped with 17 on-board cameras, including:

- The Mars Descent Imager, which captured photos as the rover landed on Mars, uses a Bayer pattern filter CCD array to obtain 1600 x 1200 pixel natural color images.

- The MAHLI instrument, a camera mounted on the end of the rover's robotic arm, acquires images of rocks and surface materials at the landing site. MAHLI images are 1600 x 1200 pixels, with a resolution as high as 13.9 µm per pixel.

- The HazCams (hazard avoidance cameras), of which there are four in the front and four in the back. HazCams capture black-and-white images of up to 1024 x 1024 pixels showing the terrain near the wheels and close to the rover. Because the cameras are positioned on both sides of the rover, simultaneous images taken by both front or both rear cameras can be used to produce a 3D map of the surroundings. Teledyne DALSA provided the vision technology in the HazCams.

- The mast cameras, which take color images and color video footage of the Martian terrain, are two separate cameras with different focal lengths and different science color filters. One camera has a ~34 mm focal length, f/8 lens that illuminates a 15° square field of view (FOV), 1200 × 1200 pixels on the 1600 × 1200 pixel detector; the other has a ~100 mm focal length, f/10 lens that illuminates a 5.1° square, 1200 × 1200 pixel FOV.
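The Mastcam field-of-view figures above can be sanity-checked with the pinhole-camera relation FOV = 2·atan(w / 2f), where w is the physical width of the illuminated 1200-pixel region and f is the focal length. The Python sketch below assumes a 7.4 µm detector pixel pitch, a value this article does not state, and treats the ~34 mm and ~100 mm focal lengths as exact; it also computes the angle covered by a single pixel and the footprint of a full 1600 x 1200 MAHLI frame at 13.9 µm per pixel.

```python
import math

# Assumption: 7.4 µm pixel pitch for the Mastcam CCD (not stated in the article).
PIXEL_PITCH_MM = 0.0074


def square_fov_deg(focal_length_mm, pixels=1200, pitch_mm=PIXEL_PITCH_MM):
    """Full angular field of view across `pixels` detector pixels (pinhole model)."""
    width_mm = pixels * pitch_mm
    return math.degrees(2.0 * math.atan(width_mm / (2.0 * focal_length_mm)))


def ifov_microrad(focal_length_mm, pitch_mm=PIXEL_PITCH_MM):
    """Angle subtended by one pixel in microradians (small-angle approximation)."""
    return pitch_mm / focal_length_mm * 1e6


def footprint_mm(width_px, height_px, um_per_px):
    """Area covered by an image at a given scale, as (width, height) in mm."""
    return width_px * um_per_px / 1000.0, height_px * um_per_px / 1000.0


if __name__ == "__main__":
    for f in (34.0, 100.0):
        print(f"f = {f:.0f} mm: FOV = {square_fov_deg(f):.1f} deg, "
              f"IFOV = {ifov_microrad(f):.0f} urad/pixel")
    w, h = footprint_mm(1600, 1200, 13.9)
    print(f"MAHLI frame at 13.9 um/pixel: {w:.2f} x {h:.2f} mm")
```

Under these assumptions the script gives about 14.9° and 5.1°, in line with the quoted 15° and 5.1° fields of view, and shows that MAHLI's highest-resolution frame covers only about 22 x 17 mm of surface.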


About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.