Fast and Faster

To improve speed, machine-vision software vendors are porting their products to a number of parallel processors

With the increase in data rates from linear and area-array cameras, developers of image-processing and machine-vision systems are looking to optimize the performance of their systems by using off-the-shelf processors such as FPGAs, multicore CPUs, graphics processing units (GPUs), and more specialized parallel processors. Before doing so, however, developers must fully understand the nature of the image-processing task to be performed and how it can best be partitioned among these disparate elements to achieve the best performance.

Today, for example, many cameras and PC-based frame grabbers incorporate FPGAs to speed the computation of algorithms such as Bayer interpolation and white balancing. “However,” says Pierantonio Boriero, product line manager at Matrox Imaging, “while such functions are inherently suitable for these implementations, their performance must be analyzed in the context of a complete image-processing system.”

At the recent launch of Version 9 of the Matrox MIL library, Boriero compared the performance of linear Bayer interpolation on an EP1S30 FPGA core from Altera with that of the same function running on a 3.73-GHz Pentium 4 Extreme Edition from Intel. While the FPGA performed Bayer interpolation on a 1k × 1k × 8-bit image in 5.4 ms, the single-core Pentium took 5.2 ms to complete the same task. Although one might (incorrectly) conclude that this task is therefore best performed on the Pentium, the host processor cannot perform other tasks such as image enhancement while it is busy with the interpolation, which slows overall image-processing throughput.

Multicore processors

To alleviate this bottleneck, many proponents are arguing for systems built with multicore processors. In these systems, imaging tasks can be partitioned between multiple cores. Here again, however, it is the imaging task to be performed that determines system performance. For algorithms that are sequentially executed, such as those that may use branching or conditioning, applying two processing cores will not result in a 2X speed increase. Indeed, according to Amdahl’s law (http://en.wikipedia.org/wiki/Amdahl’s_law), any increase will depend on how much parallelism can be eked from such seemingly sequential algorithms.
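The limit that Amdahl's law places on multicore scaling can be sketched numerically. The short Python example below is an illustration only: the parallel fractions are hypothetical values chosen for the example, not measurements from any of the benchmarks discussed here.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of the work runs in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time on any number of cores;
    # only the parallel part is divided among them.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical parallel fractions, chosen only for illustration.
    for fraction in (0.5, 0.9, 1.0):
        row = ", ".join(
            f"{n} cores: {amdahl_speedup(fraction, n):.2f}x" for n in (1, 2, 4, 8)
        )
        print(f"parallel fraction {fraction:.0%} -> {row}")
```

Note that the formula gives only an upper bound. It does not model overheads such as thread synchronization or contention for shared memory, so measured speedups can fall below it.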

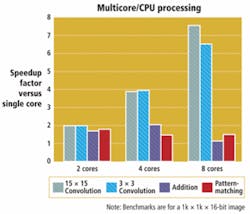

To highlight this, Matrox benchmarked a number of algorithms, including 3 × 3 convolution, 15 × 15 convolution, image addition, and pattern matching, on a 1k × 1k × 16-bit image using single, dual, quad, and dual quad-core Xeon CPUs (see Fig. 1). As can be seen, while dual-core processors improved the performance of both convolution algorithms, image-addition and pattern-matching functions did not exhibit a scalability improvement of 2X. More interestingly, pattern-matching algorithms that use alternate branching and conditioning ran more slowly on eight-core systems than on quad-core processors.

FIGURE 1. While dual-core processors improve the performance of convolution, image-addition and pattern-matching functions do not exhibit a scalability improvement of 2X. Pattern-matching algorithms that use alternate branching and conditioning are slower running on eight-core systems than quad-core processors.

One of the reasons for this is the architecture of the Xeon processor itself. In the design of the processor, Intel provides each processor core with its own L1 cache and an L2 cache shared by both cores. When running pattern-matching applications on these architectures, both cores compete for access to the shared L2 cache, causing memory-contention problems and slowing system performance. Realizing this, Intel is poised to announce its next generation of multicore products, code-named Nehalem and Tukwila, that will incorporate a scalable shared-memory architecture called QuickPath, which will integrate a memory controller into each microprocessor and connect processors and other system components using a high-speed interconnect.

Even as Intel prepares its next generation of processors, image-processing and machine-vision software suppliers are looking to leverage their products on other platforms, most notably GPUs. Such concepts are relatively new. Last year, for example, Stemmer Imaging announced a dedicated toolset of its Common Vision Blox (CVB) with 40 functions callable from within CVB applications that can be run on an NVIDIA 8800 graphics card (see Vision Systems Design, September 2007, p. 17).

In its MIL Version 9.0, Matrox also offers a number of image-processing functions such as binarization, erosion, and image transformation, which can be offloaded to GPUs such as the Radeon HD3870 from ATI Technologies, now part of AMD, and the GeForce 8800 from NVIDIA. “Once again,” Boriero cautions, “the complete image-processing application must be analyzed to achieve the highest performance gains.”

Benchmarking algorithms

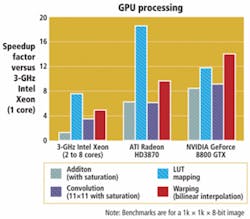

To demonstrate this, Matrox has also benchmarked a number of functions, including addition, LUT mapping, convolution, and bilinear interpolated warping, on these processors (see Fig. 2). As can be seen, the resultant increase in performance is noticeable across all of these algorithms when compared with a single-core Intel Xeon. “In the past,” says Philip Colet, vice president of sales and marketing at DALSA, “x32 PCI Express boards were optimized for graphics applications. However, with the introduction of PCI Express Version 2 products, the I/O instruction and data capability is more balanced, making such products more suitable for image-processing applications.”

FIGURE 2. When addition, LUT mapping, convolution, and bilinear interpolated warping algorithms are benchmarked across multicore and graphics processors, the increase in performance is noticeable across all of these algorithms when compared with a single-core Intel Xeon processor.

Interestingly, the architectures of the graphics processors from ATI and NVIDIA are somewhat different, making specific algorithms run at different speeds. While ATI’s Radeon performs better at 11 × 11 convolution, for example, the NVIDIA GeForce 8800 GTX performs better when used to perform a bilinearly interpolated warp.

Despite such advances, DALSA’s Colet remains wary of using graphics processors in machine-vision applications. “Since graphics boards are primarily targeted at gaming applications, vendors must continually upgrade their products to compete. In the past, this has resulted in many graphics processors. In other cases, graphics chip vendors’ products from ATI and NVIDIA may not be supported over the ten-year lifetime of our OEM customers.”

Despite DALSA’s reluctance to support these processors, Matrox MIL Version 9 does support both ATI and NVIDIA products under DirectX Version 10. With support for its own FPGA-based frame grabbers, multicore CPUs, and graphics processors, it seems that Matrox has set a standard that others are likely to follow. Indeed, at the upcoming VISION 2008 in Stuttgart, Germany, it is likely that other software vendors will also offer support for multicore processors. These will include NeuroCheck Version 6.0 from NeuroCheck and HALCON from MVTec Software.

For developers, however, implementing multicore approaches will add complexity. Just as tailoring specific algorithms across multiple hardware products is difficult, software designers will need to develop multithreaded systems to optimize multiple processors for specific vision tasks.
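As a rough illustration of what such multithreaded partitioning involves, the Python sketch below splits an image into horizontal strips and applies a 3 × 3 mean filter to each strip on its own worker thread. It is a minimal sketch under stated assumptions, not how any of the libraries mentioned here are implemented: the function names and the test image are hypothetical, and because standard CPython threads do not execute pure-Python code concurrently, the example shows the structure of the partitioning rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def box_filter_rows(image, row_start, row_stop):
    """Apply a 3 x 3 mean filter to rows [row_start, row_stop) of `image`.

    Coordinates outside the image are clamped to the nearest edge pixel.
    Neighbouring rows are read directly from the shared input image, so a
    strip needs no explicit copy of the rows just above and below it.
    """
    height, width = len(image), len(image[0])
    out = []
    for y in range(row_start, row_stop):
        out_row = []
        for x in range(width):
            total = 0
            for dy in (-1, 0, 1):
                yy = min(max(y + dy, 0), height - 1)
                for dx in (-1, 0, 1):
                    xx = min(max(x + dx, 0), width - 1)
                    total += image[yy][xx]
            out_row.append(total // 9)
        out.append(out_row)
    return out


def box_filter_parallel(image, workers=4):
    """Split the image into horizontal strips and filter each on its own thread."""
    height = len(image)
    workers = max(1, min(workers, height))
    # Strip boundaries, as even as integer division allows.
    bounds = [height * i // workers for i in range(workers + 1)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        strips = pool.map(
            lambda span: box_filter_rows(image, span[0], span[1]),
            zip(bounds[:-1], bounds[1:]),
        )
        # map() yields results in submission order, so the strips
        # reassemble from top to bottom.
        return [row for strip in strips for row in strip]


if __name__ == "__main__":
    # Small synthetic image (hypothetical data).
    image = [[(x * y) % 256 for x in range(64)] for y in range(48)]
    serial = box_filter_rows(image, 0, len(image))
    parallel = box_filter_parallel(image, workers=4)
    print("results match:", serial == parallel)
```

A neighbourhood operation such as this partitions cleanly because every output pixel depends only on a small, fixed region of the input. Algorithms with data-dependent branching are harder to divide in this way.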

Although this can be “automatically implemented” by companies such as Matrox and MVTec, optimizing these tasks will require software engineers with expert knowledge. Perhaps more important, many software vendors such as MVTec, NeuroCheck, and Stemmer, unlike DALSA, Matrox, and National Instruments, do not manufacture their own FPGA hardware, leaving their developers with multicore processors or graphics cards as the only current option to increase the speed of their image-processing systems.

Parallel processing speeds ultrasound analysis

Over the last four years, processor vendors have been unable to increase the performance of their processors by increasing the clock rate because power consumption increases dramatically with increased frequency. Because of this, these vendors have adopted multicore approaches to increase performance. Reducing the clock rate of a core by 20% yields a 50% power savings, so a two-core implementation still has 1.6 times the raw performance of a single higher-clocked core using the same total power. In addition, not all of these cores need to run the operating system, leading to further efficiencies.
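These figures can be checked with a short calculation. The Python sketch below assumes that power scales roughly with the cube of the clock rate, a common approximation when supply voltage is lowered along with frequency. That scaling law is an assumption made for this example and is not stated in the text above.

```python
def relative_power(clock_scale: float, exponent: float = 3.0) -> float:
    """Power of one core relative to full clock rate.

    Assumes power ~ clock_rate ** exponent. The cubic default is an
    approximation adopted for this example, not a measured value.
    """
    return clock_scale ** exponent


def multicore_tradeoff(cores: int, clock_scale: float, exponent: float = 3.0):
    """Return (raw throughput, power), both relative to one full-speed core."""
    throughput = cores * clock_scale
    power = cores * relative_power(clock_scale, exponent)
    return throughput, power


if __name__ == "__main__":
    saving = 1.0 - relative_power(0.8)
    throughput, power = multicore_tradeoff(cores=2, clock_scale=0.8)
    print(f"power saving per core at 80% clock: {saving:.1%}")
    print(f"two cores at 80% clock: {throughput:.2f}x throughput, {power:.3f}x power")
```

Under this assumption, a 20% lower clock rate cuts per-core power by about 49%, and two such cores deliver 1.6 times the raw throughput for about 1.02 times the power of the original core, which is in line with the figures quoted above.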

The Cell BE processor from Sony/IBM/Toshiba (www.research.ibm.com/cell), for example, has nine cores, but eight of these are specialized for computation, and only one runs the operating system. Similar specialized cores or graphics processing units are available on video-accelerator cards. These are general-purpose, massively parallel compute engines with up to 10 times the raw floating-point throughput of the system’s main CPU.

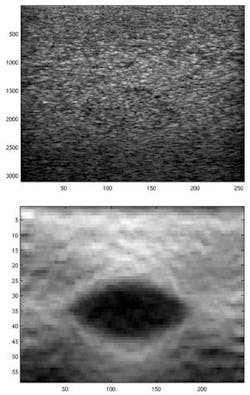

To achieve the additional performance of multicore processors, a parallel implementation of the desired image-processing algorithm is required. For example, elastography is a noninvasive technique used for detecting tumors, based on the fact that tumors are usually stiffer than surrounding soft tissue. During an ultrasound examination, compression or vibration can be applied to the soft tissue being imaged. Processing the deformations between multiple images then determines the location of a tumor. This can be used, for example, to improve breast-cancer screening, since breast-cancer tumors are usually less elastic than surrounding tissue.

Using raw ultrasound images, physicians are often unable to locate specific tumors (top). With a method known as elastography, pairs of images are correlated to determine the deformation between them, revealing finer details (bottom).

To implement elastography, Susan Schwarz of Dartmouth Medical School first captures a series of ultrasound images with increasing mechanical strain. Pairs of images are then correlated to determine the deformation between them. After computing deformations between multiple images, the elasticity of the tissue can be estimated and the result displayed as a new image (see figure). The original code, written in C++, took 10 s to execute.
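The correlation step can be illustrated in one dimension. The Python sketch below slides a window taken from a reference signal along a deformed copy and reports the shift with the highest normalized cross-correlation. It is a simplified illustration, not the Dartmouth implementation: the function names, window size, search range, and synthetic signal are all assumptions made for the example.

```python
import math


def normalized_correlation(a, b):
    """Zero-mean normalized cross-correlation of two equal-length sequences."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0.0:
        return 0.0  # flat window: correlation is undefined, treat as no match
    return sum(x * y for x, y in zip(da, db)) / denom


def estimate_shift(reference, deformed, start, window, max_shift):
    """Return the shift in samples that best aligns one window of
    `reference` with `deformed`, searching -max_shift..+max_shift."""
    template = reference[start:start + window]
    best_shift, best_score = 0, -2.0
    for shift in range(-max_shift, max_shift + 1):
        lo = start + shift
        if lo < 0 or lo + window > len(deformed):
            continue  # candidate window would fall outside the signal
        score = normalized_correlation(template, deformed[lo:lo + window])
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


if __name__ == "__main__":
    # Synthetic scan line (hypothetical data) and a copy displaced by 3 samples.
    reference = [math.sin(0.37 * i) + 0.5 * math.sin(1.3 * i) for i in range(200)]
    deformed = [0.0] * 3 + reference[:-3]
    shifts = [estimate_shift(reference, deformed, s, 32, 8) for s in range(16, 150, 32)]
    print("estimated shifts:", shifts)
```

Each window is processed independently of the others, which is why this kind of computation maps well onto many parallel cores. In a real elastography system the displacement would vary from window to window, and that variation would carry the information about tissue stiffness.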

To increase the throughput of this computation, Schwarz and her colleagues turned to RapidMind to implement this correlation on the PC’s GPU. Using existing C++ compilers and the RapidMind interface from inside C++, the parallel computation was specified using familiar concepts such as functions and arrays. The RapidMind platform then generates and optimizes code for the GPU and manages its execution. When image correlation was implemented using RapidMind to exploit parallel computation on a GPU accelerator, it took only 1 s. This was an order-of-magnitude performance improvement over the C++ implementation and a factor of 240 improvement over an earlier MATLAB implementation.

Michael McCool

Founder and Chief Scientist, RapidMind

Waterloo, ON, Canada; www.rapidmind.net