Robots aim to mimic humans

Over the last few years, research into the fields of vision, speech recognition, artificial intelligence, and robotics has become fragmented. This has limited the ability of researchers to develop cognitive robots that require the incorporation of many developments in each one of these disciplines.

Now a consortium of universities under the auspices of the EU-funded Cognitive Systems for Cognitive Assistants (CoSy; www.cognitivesystems.org) project aims to merge ideas from several relevant disciplines to develop a cognitive robot capable of emulating the abilities of a young child. The project has already led to the development of interactive robots that understand human speech, recognize objects, and react to their environment.

To do this, specific functions such as analyzing the color of an image or understanding speech must be performed. Further, the results often need to be combined at a high level so that a verbal question such as “Is the red pig to the right or left of the brown cow?” can be interpreted, the visual information used to understand the image, and an answer returned.
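As a rough illustration of how such a question might be answered once the vision and speech results have been combined, the sketch below compares the horizontal positions of two labeled objects. The `DetectedObject` type, the `relative_side` function, and the pixel coordinates are hypothetical and are not taken from the CoSy software.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """Hypothetical output of the vision processing: a color, a label, a position."""
    color: str
    label: str
    x: float  # horizontal image coordinate of the object's center, in pixels


def relative_side(objects, subject, reference):
    """Return 'left' or 'right' for subject relative to reference.

    subject and reference are (color, label) pairs taken from the parsed
    question. Returns None if either object was not found in the scene.
    """
    def find(color, label):
        for obj in objects:
            if obj.color == color and obj.label == label:
                return obj
        return None

    s = find(*subject)
    r = find(*reference)
    if s is None or r is None:
        return None
    return "left" if s.x < r.x else "right"


# Assumed scene: the pig is detected further left in the image than the cow.
scene = [
    DetectedObject("red", "pig", 120.0),
    DetectedObject("brown", "cow", 340.0),
]
answer = relative_side(scene, ("red", "pig"), ("brown", "cow"))
print(answer)
```

A real system would also have to decide whose point of view "left" and "right" refer to; this sketch simply uses image coordinates.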

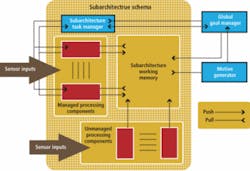

Researchers knew that they would require both a distributed computing architecture and a software toolkit to support it. What emerged was a computing subarchitecture that can take input from audio, visual, and other sensors, process the results using managed and unmanaged processing components, and place the results into system memory (see figure).

Unmanaged processing components monitor data external to the subarchitecture and can be linked to sensors such as cameras and thermistors. These processing components add information such as temperature to the system memory without proposing a local goal or further processing step. Managed processing components, by contrast, monitor the subarchitecture’s memory for information to process. When such information is present, the component generates a local goal for further action. This goal is posted to the subarchitecture task manager, and the component must wait for it to be adopted.
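The difference between the two kinds of component can be sketched in a few lines. All class and method names here are hypothetical and do not reflect the project's actual interfaces, and the overheating check is only an assumed example of something a managed component might look for.

```python
class WorkingMemory:
    """Shared store inside one subarchitecture (illustrative only)."""
    def __init__(self):
        self.entries = []

    def add(self, kind, value):
        self.entries.append((kind, value))


class TaskManager:
    """Collects local goals proposed by managed components."""
    def __init__(self):
        self.proposed = []

    def propose(self, component_name, goal):
        self.proposed.append((component_name, goal))


class UnmanagedThermometer:
    """Unmanaged: writes external sensor data to memory, proposes nothing."""
    def __init__(self, memory):
        self.memory = memory

    def on_reading(self, celsius):
        self.memory.add("temperature", celsius)


class ManagedOverheatChecker:
    """Managed: watches memory and proposes a local goal when it finds work."""
    def __init__(self, memory, task_manager, limit=60.0):
        self.memory = memory
        self.task_manager = task_manager
        self.limit = limit
        self.seen = 0  # number of memory entries already inspected

    def poll(self):
        for kind, value in self.memory.entries[self.seen:]:
            if kind == "temperature" and value > self.limit:
                # The component only proposes; it must wait for adoption.
                self.task_manager.propose("overheat_checker", f"cool down from {value}")
        self.seen = len(self.memory.entries)


memory = WorkingMemory()
task_manager = TaskManager()
sensor = UnmanagedThermometer(memory)
checker = ManagedOverheatChecker(memory, task_manager)

sensor.on_reading(25.0)
sensor.on_reading(75.0)
checker.poll()
print(task_manager.proposed)
```

Note that the managed component never acts on its own; it only hands a proposal to the task manager.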

For example, if a motion-control subarchitecture contains a description of a point in space that the robot must reach and a processing component within the subarchitecture can move the system to that point, then the processing component must propose a goal to that effect. These local goals can be automatically adopted, rejected, or stored until the necessary resources are available. This allows different components to process the same information in different ways, with an appropriate mechanism selecting whether one or all of them should be active. While such an approach appears to add processing overhead to the architecture, it allows the architecture to be scaled to address more complex problems.
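One way to picture the adopt, reject, or store decision is a task manager that tracks which resources are free. This is a minimal sketch under assumed rules: the resource names, the `LocalTaskManager` class, and the policy of rejecting goals that need a resource the subarchitecture does not have are all hypothetical.

```python
from collections import deque


class LocalTaskManager:
    """Illustrative task manager for one subarchitecture."""
    def __init__(self, available_resources):
        self.available = set(available_resources)  # resources that exist here
        self.in_use = set()                         # resources currently claimed
        self.pending = deque()                      # stored goals awaiting resources
        self.adopted = []

    def propose(self, goal, needs):
        needs = set(needs)
        if not needs <= self.available:
            return "rejected"          # needs something this subarchitecture lacks
        if needs & self.in_use:
            self.pending.append((goal, needs))
            return "stored"            # keep until the resources are released
        self.in_use |= needs
        self.adopted.append(goal)
        return "adopted"

    def release(self, resources):
        """Free resources, then adopt any stored goals that can now run."""
        self.in_use -= set(resources)
        still_waiting = deque()
        while self.pending:
            goal, needs = self.pending.popleft()
            if needs & self.in_use:
                still_waiting.append((goal, needs))
            else:
                self.in_use |= needs
                self.adopted.append(goal)
        self.pending = still_waiting


manager = LocalTaskManager({"arm", "base"})
first = manager.propose("move to point A", {"base"})
second = manager.propose("move to point B", {"base"})
third = manager.propose("fly to point C", {"rotor"})
manager.release({"base"})
print(first, second, third, manager.adopted)
```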

One of the most important concepts associated with this architecture is how multiple processes are integrated. This involves how results generated by processes in one subarchitecture can trigger processing in different subarchitectures and requires three additional components that connect all the subarchitectures: a motive generator, a global goal manager, and general memory. Connected to the working memory in every subarchitecture, the motive generator monitors working memories for information that could trigger processing in a different subarchitecture. When the motive generator notices such information, it generates a new global goal for the whole architecture that is sent to the global goal manager.

For example, the motive generator may notice that a speech-recognition subarchitecture has correctly interpreted a verbal command. The motive generator can then generate a global goal to follow this command. Because the motive generator must necessarily be ignorant of the state of the architecture, an additional component, the global goal manager, is used to accept or reject proposed global goals.
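The division of labor between the two components might look something like the following sketch. The class names, the dictionary-based memory entries, and the conflict rule are assumptions made for illustration; the source does not describe how the goal manager actually reaches its decision.

```python
class MotiveGenerator:
    """Watches every working memory but knows nothing about system state."""
    def __init__(self, working_memories):
        self.working_memories = working_memories  # subarchitecture name -> entries

    def scan(self):
        proposals = []
        for entries in self.working_memories.values():
            for entry in entries:
                if entry.get("type") == "verbal_command":
                    proposals.append(f"follow: {entry['text']}")
        return proposals


class GlobalGoalManager:
    """Accepts or rejects proposed global goals using state the generator lacks."""
    def __init__(self, active_local_goals=()):
        self.active_local_goals = set(active_local_goals)
        self.adopted = []  # goals adopted in the past

    def consider(self, goal, conflicts_with=()):
        if goal in self.adopted:
            return False   # already adopted earlier
        if self.active_local_goals & set(conflicts_with):
            return False   # would clash with a local goal being pursued
        self.adopted.append(goal)
        return True


memories = {
    "speech": [{"type": "verbal_command", "text": "pick up the red pig"}],
    "vision": [{"type": "color", "text": "red"}],
}
generator = MotiveGenerator(memories)
proposals = generator.scan()

goal_manager = GlobalGoalManager(active_local_goals={"recharge"})
accepted = goal_manager.consider(proposals[0])
repeated = goal_manager.consider(proposals[0])
blocked = goal_manager.consider("follow: go outside", conflicts_with={"recharge"})
print(proposals, accepted, repeated, blocked)
```

Keeping the generator stateless in this way means it can be attached to any number of working memories without needing to understand what the rest of the system is doing.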

To manage these goals, the global goal manager requires information about the architecture’s current state, the local goals currently being pursued by the subarchitectures, and the goals it has adopted in the past. While this architectural concept forms the basis for software development, it does not demand any specific hardware implementation. Instead, the CoSy Architecture Schema Toolkit (CAST) used to develop such systems provides a method to distribute a number of tasks across a network. Indeed, CAST’s primary purpose is to maintain a separation between a system’s architecture and its contents, allowing one to be varied independently of the other.