Novel sensors and lasers power 3D imaging cameras and applications

Obtaining 3D images requires specific technologies, whether systems integrators opt to build systems from off-the-shelf components or buy integrated 3D products. Novel image sensors and laser illumination are two such technologies, and this article describes some of the latest developments in each category designed specifically for 3D imaging.

Image sensors

At the heart of all imaging devices, image sensors detect and convert light into an electrical signal to create an image. In most implementations, deploying off-the-shelf sensors will suffice. In some cases, however, companies may develop image sensors specifically for 3D, whether for their own products or for third-party offerings.

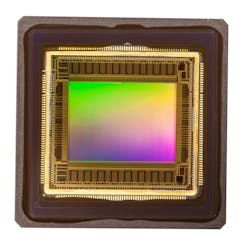

SICK (Waldkirch, Germany; www.sick.com) has a long tradition of using proprietary image sensors in its high-speed 3D cameras. Its latest generation, the Ranger3 series, is a compact 3D camera built around SICK’s newest CMOS image sensor, the M30 (Figure 1). The M30 offers on-chip data detection, called ROCC (rapid on-chip calculation) technology, and reaches 30 kHz at 2560 x 200 pixel resolution with an output bandwidth of less than 1 Gbps.
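
The value of on-chip processing is easiest to see with a rough bandwidth estimate. The sketch below compares shipping every pixel of a 2560 x 200 window at 30 kHz against shipping only one range value per image column. The 8-bit pixel depth and 12-bit range values are assumptions chosen for illustration, not published M30 specifications, and both helper functions are hypothetical.

```python
def raw_bandwidth_gbps(width: int, height: int, frame_rate_hz: float, bits_per_pixel: int) -> float:
    """Bandwidth needed to send every pixel of every frame off the chip."""
    return width * height * frame_rate_hz * bits_per_pixel / 1e9


def profile_bandwidth_gbps(width: int, profile_rate_hz: float, bits_per_value: int) -> float:
    """Bandwidth when the sensor outputs only one range value per column."""
    return width * profile_rate_hz * bits_per_value / 1e9


# Assumed bit depths (hypothetical): 8-bit raw pixels, 12-bit range values.
raw = raw_bandwidth_gbps(2560, 200, 30_000, bits_per_pixel=8)
prof = profile_bandwidth_gbps(2560, 30_000, bits_per_value=12)
print(f"raw readout: {raw:.2f} Gbps, profiles only: {prof:.3f} Gbps")
```

Under those assumptions, raw readout would need roughly 123 Gbps, while one 12-bit value per column needs about 0.92 Gbps, the same order of magnitude as the sub-1 Gbps output figure.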

The Ranger3-60 camera, for example, uses this proprietary CMOS image sensor and can process its full 2560 x 832 frame to create one 3D profile with 2560 values at 7 kHz. SICK’s previous-generation CMOS sensor, the M15, is found in the Ranger E and Ranger D camera families, as well as in the Ruler E and ScanningRuler devices.
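
The two SICK figures also hint at how profile rate trades against region-of-interest (ROI) height. Assuming both come from the same M30 sensor and that readout is limited by rows per second (a simplification that ignores fixed per-frame overhead), the following hypothetical helpers estimate the profile rate for other ROI heights.

```python
def row_rate(rows: int, profile_rate_hz: float) -> float:
    """Sensor rows read out per second for a given ROI height and profile rate."""
    return rows * profile_rate_hz


def estimated_profile_rate(rows: int, rows_per_second: float) -> float:
    """First-order estimate: profile rate scales inversely with ROI height.

    Ignores fixed per-frame overhead, so real rates at small ROIs will be lower.
    """
    return rows_per_second / rows


full = row_rate(832, 7_000)   # full 2560 x 832 frame at 7 kHz
roi = row_rate(200, 30_000)   # 2560 x 200 window at 30 kHz
print(full, roi, estimated_profile_rate(400, full))
```

Both operating points work out to roughly 5.8 to 6 million rows per second, so under this model a 400-row ROI would land near 14.6 kHz. Such numbers are first-order estimates, not datasheet values.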

“Several advantages exist for using proprietary sensor technology, one of which is living up to the company’s strategic objective of being independent,” says Markus Weinhofer, Strategic Product Manager, 3D Vision. “It also gives us a competitive advantage in having the core technology in-house, specifically with on-chip data reduction. Using this feature, adjustments and customization can be easily made.”

AIRY3D (Montréal, QC, Canada; www.airy3d.com) takes an economical approach to 3D image sensor design. Its DEPTHIQ platform uses an optical encoder (a transmissive diffraction mask) to encode the phase and direction of incoming light into the pixel intensity data, producing a single dataset that integrates 2D image and depth information. Proprietary algorithms then separate the two: the restored raw 2D image data is ready for a developer’s 2D image signal processing pipeline, while the extracted depth data feeds the DEPTHIQ depth processing software pipeline, which outputs a depth map (Figure 2).

According to the company, DEPTHIQ provides single-sensor 3D imaging and serves as a drop-in replacement for a 2D sensor, with no changes to the camera module or lensing. The design is also sensor agnostic and can be customized to any given CMOS image sensor specification.

“Traditional depth solutions are difficult and expensive to deploy as they draw significant power and substantially increase the overall footprint on a platform. All of these issues have impeded the broad adoption of depth within vision systems,” says Paul Gallagher, Vice President of Strategic Marketing. “The AIRY3D approach is simple to deploy as it fits within an existing footprint and does not need any special lighting. It is very power-efficient, and its lightweight processing requires only a few line buffers as it processes the depth in-stream.”

He adds, “AIRY3D’s technology will enable broad depth deployment to the numerous applications that want or need depth but cannot deploy depth solutions due to traditional depth’s cost, size, or power requirements.”
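
Processing with only a few line buffers means each output row is computed as soon as the handful of input rows it depends on have arrived, so the full frame never needs to be stored. AIRY3D’s depth algorithm is proprietary, so this sketch substitutes a generic operation, a vertical central-difference gradient, purely to illustrate the three-line-buffer streaming pattern; the function name is hypothetical.

```python
from collections import deque
from collections.abc import Iterable, Iterator


def stream_vertical_gradient(rows: Iterable[list[float]]) -> Iterator[list[float]]:
    """Central-difference vertical gradient computed with a 3-line buffer.

    Generic stand-in, not AIRY3D's algorithm: each output row needs only the
    row above and the row below, so at most three rows are held in memory.
    """
    buf = deque(maxlen=3)  # oldest row is dropped automatically
    for row in rows:
        buf.append(row)
        if len(buf) == 3:
            above, _, below = buf
            yield [(b - a) / 2.0 for a, b in zip(above, below)]


# Rows arrive one at a time, as from a sensor readout.
incoming = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
print(list(stream_vertical_gradient(incoming)))
```

Memory use is fixed at three rows regardless of image height, which is the property that keeps this style of processing light enough for in-stream use.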

Designed to enable fast, accurate 3D acquisition of moving objects, the mosaic CMOS image sensor from Photoneo (Bratislava, Slovakia; www.photoneo.com) (Figure 3) works similarly to a Bayer filter mosaic, in which each color-coded pixel has a unique role in the final, debayered output. Deployed in the company’s line of 3D products, the sensor can capture a moving object in a single shot. It consists of an undisclosed number of super-pixel blocks, which are further divided into sub-pixels. Each sub-pixel can be controlled by its own defined modulation signal or pattern. This electronic modulation determines when a pixel receives light and when it does not, hence the names mosaic shutter or matrix shutter CMOS sensor.

After exposure, the raw data is demosaiced into a set of uniquely coded virtual images; the number of virtual images corresponds to the number of sub-pixels per super-pixel. Conventional sequential structured light instead captures several separate images, each with a differently modulated projection. Here, the pixel modulation (turning pixels on or off) happens on the sensor rather than in the projector (transmitter). Because the pixel modulation functions are fully controllable, the same sequential structured light algorithms can be applied to similar information, but from a single frame and at slightly lower resolution.
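
The demosaicing step can be sketched in a few lines. Photoneo does not disclose its super-pixel layout, so this example assumes, hypothetically, square k x k super-pixels in which sub-pixel (dy, dx) of every super-pixel shares one modulation pattern. It then decodes the virtual images with a binary Gray code, one common sequential structured light scheme. Neither the layout nor the coding is Photoneo’s actual method, and the single fixed threshold is a simplification.

```python
from __future__ import annotations

import numpy as np


def demosaic_superpixels(raw: np.ndarray, k: int) -> list[np.ndarray]:
    """Split a raw frame into k * k virtual images, one per sub-pixel position.

    Hypothetical layout: square k x k super-pixels tile the frame, and
    sub-pixel (dy, dx) of every super-pixel shares one modulation pattern.
    """
    h, w = raw.shape
    if h % k or w % k:
        raise ValueError("frame size must be a multiple of the super-pixel size")
    # Strided slicing collects the same sub-pixel position from every super-pixel.
    return [raw[dy::k, dx::k] for dy in range(k) for dx in range(k)]


def decode_gray(virtual_images: list[np.ndarray], threshold: float) -> np.ndarray:
    """Per-super-pixel code from binarized, Gray-coded virtual images (MSB first)."""
    bits = [(img > threshold).astype(np.int64) for img in virtual_images]
    # Gray-to-binary conversion: b[0] = g[0], b[i] = b[i - 1] XOR g[i].
    b = bits[0]
    code = b.copy()
    for g in bits[1:]:
        b = b ^ g
        code = (code << 1) | b
    return code


# Usage: a 4 x 4 raw frame with 2 x 2 super-pixels gives four 2 x 2 virtual images.
raw = np.arange(16).reshape(4, 4)
virtual = demosaic_superpixels(raw, k=2)
codes = decode_gray(virtual, threshold=7.5)
print(codes)
```

With four sub-pixels per super-pixel, each super-pixel carries four coded bits and can distinguish 16 code values. Practical decoders usually compare against complementary patterns or per-pixel reference levels rather than one global threshold.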

“There are several techniques that use multiple frames from which they compute the final image. However, when capturing a moving object, they face the same problem as structured light technology: the individual images are captured at different instances, causing distortion of the final output,” says Tomas Kovacovsky, CTO, Photoneo. “Photoneo’s image sensor can capture a scene in one frame. There are sensors able to modulate pixels, but this method requires modulating them in a more delicate way.”

He adds, “What is most important here is each sub-pixel in a super-pixel being modulated independently.”

In its AR0430 sensor, ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com) offers a 1/3.2-in. backside illuminated CMOS image sensor capable of 120 fps at full resolution. Through the company’s Super Depth technology, the sensor captures a color image and a simultaneous depth map from a single device. Techniques applied in the sensor, color filter array, and micro-lenses create a data stream containing both image and depth data, which an algorithm combines to deliver a 30-fps video stream and a depth map of anything within 1 m of the camera, according to ON Semiconductor.

Structured line lasers

Acquiring 3D images with laser triangulation is not possible without structured line lasers. In triangulation, a laser projects a line onto the surface of an object, and a camera viewing from an angle captures the reflection of that line on the object’s surface. From the position of the line in the image, the distance to each surface point is computed to obtain a 3D profile or contour of the object’s shape. Because each image contains just one line across the object, laser-based 3D techniques require many individual images, captured as the object or sensor moves, to build a complete 3D image of the object or the features requiring inspection.
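
The two computational steps behind each profile, locating the laser line in every image column and converting its displacement into height, can be sketched as follows. The center-of-gravity peak estimator is one common choice among several, and the geometry (vertical laser plane, camera tilted by a known angle, orthographic imaging) is a simplifying assumption; production systems rely on a full calibration instead. Function names and parameters are hypothetical.

```python
import numpy as np


def line_peaks(frame: np.ndarray, min_intensity: float = 20.0) -> np.ndarray:
    """Sub-pixel row position of the laser line in each image column.

    Center-of-gravity estimate over pixels at or above min_intensity.
    Columns where the line is not visible come back as NaN.
    """
    img = frame.astype(np.float64)
    weights = np.where(img >= min_intensity, img, 0.0)
    rows = np.arange(img.shape[0], dtype=np.float64)[:, None]
    total = weights.sum(axis=0)
    with np.errstate(invalid="ignore", divide="ignore"):
        return (weights * rows).sum(axis=0) / total  # 0 / 0 -> NaN


def heights_mm(peaks: np.ndarray, ref_row: float, mm_per_px: float,
               view_angle_deg: float) -> np.ndarray:
    """Height above the reference plane from the line's shift in the image.

    Simplified geometry (an assumption): vertical laser plane, camera axis
    tilted view_angle_deg away from it, orthographic imaging, and taller
    points appearing at smaller row indices, so z = du * s / sin(theta).
    """
    theta = np.deg2rad(view_angle_deg)
    return (ref_row - peaks) * mm_per_px / np.sin(theta)


# Synthetic 10 x 3 frame: line at row 5, spread over rows 3-4, and missing.
frame = np.zeros((10, 3))
frame[5, 0] = 200
frame[3:5, 1] = 100
profile = heights_mm(line_peaks(frame), ref_row=5.0, mm_per_px=0.1, view_angle_deg=30.0)
print(profile)
```

Sub-pixel peak estimation matters because each pixel of line shift maps directly to a height step; at a 30 degree viewing angle in this model, one pixel of 0.1 mm corresponds to 0.2 mm of height.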

Deploying such technologies may mean using pre-packaged systems such as the products offered by AT – Automation Technology (Bad Oldesloe, Germany; www.automationtechnology.de); Cognex (Natick, MA, USA; www.cognex.com); Keyence (Osaka, Japan; www.keyence.com); LMI Technologies (Burnaby, BC, Canada; www.lmi3d.com); Micro-Epsilon (Ortenburg, Germany; www.micro-epsilon.com); or SICK.

Alternatively, integrators can build a 3D triangulation system from off-the-shelf components such as industrial area scan cameras and structured line lasers. With the right software, suggests David Dechow, Principal Vision Systems Architect, Integro Technologies (Salisbury, NC, USA; www.integro-tech.com), nearly any industrial camera can become a 3D camera that uses triangulation.

“Specialized 3D imaging components that use laser triangulation are extremely sophisticated machine vision systems with advanced sensors, illumination, electronics and firmware or software. However, in the most fundamental sense, structured laser line or sheet-of-light imaging can be implemented as a general-purpose application,” he says. “Of course, executing this successfully requires the right laser component, camera image sensor and appropriate supporting software.”

Numerous machine vision libraries support combining individual laser triangulation frames into a 3D image. These include HALCON from MVTec (Munich, Germany; www.mvtec.com); Easy3D from Euresys (Seraing, Belgium; www.euresys.com); Matrox Imaging Library from Matrox Imaging (Dorval, QC, Canada; www.matrox.com/imaging); Common Vision Blox from Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.com); and even OpenCV (www.opencv.org). According to Dechow, all of these products, and others, have algorithms that allow integrators to create 3D representations using separate cameras and lasers.
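
At its simplest, combining individual profiles into a 3D image means stacking them in acquisition order and assigning each one a position along the direction of motion. This library-independent sketch assumes a constant, encoder-triggered step between frames and a fixed lateral pixel scale; the function and its parameters are hypothetical and not part of any of the libraries named above.

```python
from __future__ import annotations

import numpy as np


def build_point_cloud(profiles: list[np.ndarray], x_mm_per_col: float,
                      y_step_mm: float) -> np.ndarray:
    """Stack height profiles (one per frame) into an N x 3 point cloud.

    Assumes (hypothetically) the part moves a constant y_step_mm between
    frames and each image column spans x_mm_per_col. Columns without a
    valid height (NaN) are dropped.
    """
    range_map = np.vstack(profiles)          # shape: (frames, columns)
    ys, xs = np.indices(range_map.shape)     # frame index, column index
    points = np.column_stack([
        xs.ravel() * x_mm_per_col,
        ys.ravel() * y_step_mm,
        range_map.ravel(),
    ])
    return points[~np.isnan(points[:, 2])]


# Two three-column profiles, each with one missing value.
profiles = [np.array([0.0, 1.0, np.nan]), np.array([2.0, np.nan, 3.0])]
cloud = build_point_cloud(profiles, x_mm_per_col=0.5, y_step_mm=2.0)
print(cloud)
```

The intermediate range_map is itself a useful output: many inspection tools operate on that 2D height image directly rather than on the point cloud.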

“Building your own 3D laser triangulation system starts with finding a suitable off-the-shelf laser, not a laser you’d find at a home improvement store, but a focusable laser that delivers a low-speckle line, that’s uniform across the field of view of the camera sensor,” says Dechow.

To that end, Coherent (Santa Clara, CA, USA; www.coherent.com) offers the Stingray configurable machine vision laser (Figure 4), which allows customers to choose laser output power, line fan angle, wavelength, cable and connector options such as RS-232, and custom focus working distances.
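
Fan angle and working distance together set the length of the projected line, which must cover the camera’s field of view. The following sketch applies the standard geometric relation L = 2d tan(fan / 2); the function names are hypothetical, and the relation ignores any intensity roll-off toward the ends of the line.

```python
import math


def line_length_mm(working_distance_mm: float, fan_angle_deg: float) -> float:
    """Length of the projected line at a given distance for a given fan angle."""
    return 2.0 * working_distance_mm * math.tan(math.radians(fan_angle_deg) / 2.0)


def fan_angle_for_fov_deg(working_distance_mm: float, fov_width_mm: float) -> float:
    """Smallest fan angle whose line spans the camera's field of view."""
    return math.degrees(2.0 * math.atan(fov_width_mm / (2.0 * working_distance_mm)))


# Hypothetical setup: 300 mm wide field of view at a 400 mm working distance.
angle = fan_angle_for_fov_deg(400.0, 300.0)
print(f"fan angle: {angle:.1f} deg, line length: {line_length_mm(400.0, angle):.1f} mm")
```

For this example the required fan angle is roughly 41 degrees; choosing a slightly wider fan leaves margin for the less uniform ends of the line.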

“The most important metrics that impact the 3D application success are the uniformity and straightness of the projected top-hat line,” says Daniel Callen, Product Manager, Coherent. “Stingray lasers incorporate a Powell lens, which is a cylinder lens with an aspheric profile. Compared to a conventional cylinder lens, a Powell lens delivers better performance in both these key parameters.”
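
Uniformity and straightness can both be quantified from an image of the projected line. The metrics below, a min/max intensity ratio and the peak-to-valley deviation of the line center from a least-squares straight-line fit, are one reasonable set of definitions assumed for illustration; they are not necessarily the definitions Coherent uses in its specifications, and the function names are hypothetical.

```python
import numpy as np


def line_uniformity(intensity: np.ndarray) -> float:
    """Min/max intensity ratio along the line (1.0 = perfectly flat top hat)."""
    return float(intensity.min() / intensity.max())


def line_straightness(x: np.ndarray, y: np.ndarray) -> float:
    """Peak-to-valley deviation of line-center positions from a best-fit line."""
    slope, intercept = np.polyfit(x, y, 1)  # least-squares first-order fit
    residuals = y - (slope * x + intercept)
    return float(residuals.max() - residuals.min())


# Hypothetical measurements sampled along the projected line.
positions = np.array([0.0, 1.0, 2.0, 3.0])
peak_intensity = np.array([95.0, 100.0, 98.0, 90.0])
center_row = np.array([10.0, 10.1, 10.0, 10.1])
print(line_uniformity(peak_intensity), line_straightness(positions, center_row))
```

Fitting a line before measuring deviation means a slightly tilted but perfectly straight laser scores as straight, which separates mounting alignment from optical quality.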

Wavelength is another important feature to consider when choosing a laser for triangulation. Different materials respond differently to different wavelengths, so a poorly matched wavelength degrades the laser line that the camera ultimately images. Laser power is a further consideration. Triangulation requires enough light on the object to largely overcome ambient light, as well as enough light to produce a usable reflection from surfaces that would otherwise absorb it. The laser must create a reflection that the sensor can image at the rates the application requires, explains Dechow.
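
The link between laser power and imaging rate follows from a simple model: if the exposure fills the frame period, doubling the profile rate halves the light collected per exposure, and a darker surface returns proportionally less. The hypothetical helper below applies that proportionality; it assumes a linear sensor response and ignores ambient light, so it is a starting estimate only.

```python
def required_power_mw(ref_power_mw: float, ref_rate_hz: float, new_rate_hz: float,
                      ref_reflectance: float = 1.0, new_reflectance: float = 1.0) -> float:
    """Laser power needed to keep the same signal per exposure at a new rate.

    Simplified model (an assumption): exposure time equals the frame period
    (1 / rate) and the sensor signal is proportional to power x exposure x
    surface reflectance, so power must scale with rate / reflectance.
    """
    return ref_power_mw * (new_rate_hz / ref_rate_hz) * (ref_reflectance / new_reflectance)


# Hypothetical: 10 mW suffices at 1 kHz on a surface with 0.8 reflectance;
# estimate the power for 5 kHz on a surface with 0.2 reflectance.
print(required_power_mw(10.0, 1_000, 5_000, ref_reflectance=0.8, new_reflectance=0.2))
```

In this example the estimate rises from 10 mW to 200 mW, a twentyfold increase, which shows how quickly faster profile rates and darker parts compound.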

ProPhotonix (Salem, NH, USA; www.prophotonix.com), for example, offers configurable lasers for 3D imaging such as its 3D PRO lasers (Figure 5), which are available in multiple form factors with adjustable focus options and in a wide range of wavelengths, powers, and uniformity levels, according to Jeremy Lane, Managing Director.

Numerous other laser companies develop structured light lasers designed specifically for 3D imaging, including Osela (Lachine, QC, Canada; www.osela.com), Laser Components USA (Bedford, NH, USA; www.lasercomponents.com), and Z-LASER (Freiburg, Germany; www.z-laser.com). These manufacturers offer lasers with a variety of features, such as different wavelengths and line widths.

Highly characterized laser structured light solutions optimized for specific applications are the key to meeting the ever-increasing demands placed on machine vision systems, says Nicolas Cadieux, CEO, Osela.

“Laser beam shaping technologies designed for uniform pixel illumination, depth of field optimization, as well as laser safety certification know-how have allowed drastic improvements in the performance of the 3D vision systems built by our customers.”

When it comes to deploying a 3D machine vision system, systems integrators have numerous options. Pre-packaged products provide ready-made 3D functionality, while components like novel image sensors designed for 3D, individual structured line lasers, and third-party software packages provide the capability of building a system from scratch. It is always good to have options.

Companies mentioned:

AIRY3D

Montréal, QC, Canada

AT – Automation Technology

Bad Oldesloe, Germany

Cognex

Natick, MA, USA

Coherent

Santa Clara, CA, USA

Euresys

Seraing, Belgium

Integro Technologies

Salisbury, NC, USA

Keyence

Osaka, Japan

Laser Components USA

Bedford, NH, USA

LMI Technologies

Burnaby, BC, Canada

Matrox Imaging

Dorval, QC, Canada

Micro-Epsilon

Ortenburg, Germany

MVTec

Munich, Germany

ON Semiconductor

Phoenix, AZ, USA

Osela

Lachine, QC, Canada

Photoneo

Bratislava, Slovakia

ProPhotonix

Salem, NH, USA

SICK

Waldkirch, Germany

Stemmer Imaging

Puchheim, Germany

Z-LASER

Freiburg, Germany

About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.