January 2014 snapshots: 3D imaging, UAVs, medical imaging, aerospace imaging

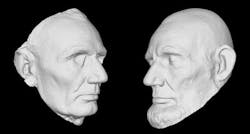

The Smithsonian (Washington, DC, USA; www.si.edu) has launched its X 3D Collection and X 3D Explorer program. Under the program, the museum will digitize historical artifacts from its collection with 3D scanners and make the resulting 3D data and models publicly available.

The 3D digital scans are captured with Geomagic Capture, a scanner and software package from 3D Systems (Rock Hill, SC, USA; www.3dsystems.com). The turnkey system has a scan speed of 0.3 s per scan, a depth of field of 180 mm, a clearance distance of 300 mm, and an accuracy of 60 µm to 118 µm. The accompanying software generates polygon and surface models from the scans, and the Smithsonian will make these available through its 3D Explorer program.

Through the Explorer program, the public will be able to view the Smithsonian's priceless objects as 3D models built from the captured scan data. To make this possible, the museum had Autodesk (San Rafael, CA, USA; www.autodesk.com) create an interactive, web-based 3D educational tool. Autodesk's Explorer includes tools that let users rotate objects, take measurements, and adjust color and lighting. It also has a storytelling feature that provides historical context for each item.

The program launched with 21 representative objects from the Smithsonian's collection.

Some of the items include the Wright Flyer, casts of Abraham Lincoln's face, a mammoth fossil from the Ice Age and Amelia Earhart's flight suit.

Multimodal imaging targets breast cancer patients

Scientists at the Fraunhofer Institute (Munich, Germany; www.fraunhofer.de/en.html) have combined magnetic resonance imaging (MRI) with ultrasound imaging to produce a more effective method of performing breast biopsies.

Fraunhofer's magnetic resonance imaging using ultrasound (MARIUS) project requires only one MRI scan of the patient's chest, taken at the start of the procedure. The subsequent biopsy is then guided by ultrasound. The system displays the corresponding MR image alongside the live ultrasound scan, so the physician can use both to guide the needle to the tumor.

The new process faces a challenge, however. The patient lies prone during the MRI but supine during the biopsy. This change of position alters the shape of the breast and shifts the position of the tumor.

To track these changes, ultrasound probes attached to the breast record volume data and follow how the breast's shape changes while the patient is in the MRI scanner. These changes are then analyzed, and the MR image is deformed accordingly. When the biopsy needle is inserted into the tissue, the physician views the adjusted MR image together with the live ultrasound image.
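The article does not describe how MARIUS computes the deformation. As a heavily simplified stand-in, the sketch below fits a 3D affine transform to corresponding landmarks by least squares. The landmarks are hypothetical, for example points tracked in the prone and supine positions. The fitted transform is then used to predict where a tumor marked on the MRI has moved. Real breast tissue deforms nonrigidly, so a clinical system would need a much richer model. The function names and data are illustrative assumptions, not Fraunhofer's method.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares 3D affine transform mapping src landmarks onto dst landmarks.

    src, dst: (N, 3) arrays of corresponding points (N >= 4, not all coplanar).
    Returns a (3, 4) matrix [A | t] such that dst ~= src @ A.T + t.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    # Homogeneous coordinates: a column of ones carries the translation term.
    src_h = np.hstack([src, np.ones((src.shape[0], 1))])
    # Solve src_h @ M = dst for the (4, 3) matrix M in the least-squares sense.
    M, *_ = np.linalg.lstsq(src_h, dst, rcond=None)
    return M.T

def apply_affine(T, pts):
    """Map (N, 3) points (e.g. a tumor position marked on the MRI) through T."""
    pts = np.atleast_2d(np.asarray(pts, dtype=float))
    return pts @ T[:, :3].T + T[:, 3]
```

In use, `src` would hold landmark positions in the MRI (prone) frame and `dst` the same landmarks as tracked for the biopsy (supine) frame. `apply_affine(T, tumor_xyz)` then gives the predicted tumor location.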

www.vision-systems.com | Vision Systems Design | January 2014

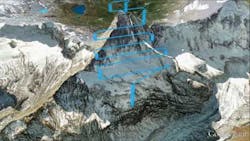

UAVs create 3D model of the Matterhorn

Sensefly (Écublens, Switzerland; www.sensefly.com), the creator of the vision-enabled eBee unmanned aerial vehicle (UAV), has teamed with Drone Adventures (Lausanne, Switzerland; www.droneadventures.org) to autonomously map the Matterhorn. Drone Adventures is a non-profit organization that promotes the use of drones in civilian applications.

eBee drones have a 37.8 in wingspan and weigh 1.5 lb. The foam-airframe drones are equipped with a rear-mounted propeller and a 16 MPixel camera. eBees can stay airborne for more than 45 min. They are supplied with Postflight Terra 3D-EB mapping software, which processes aerial imagery into 3D models.

To map the 14,692 ft mountain, members of Drone Adventures climbed the mountain and hand-launched one drone, while five other UAVs mapped its lower slopes.

Using Google Maps before launch, the eBees can perform their own 3D flight planning. Up to 10 drones can be controlled from a single base station during one mission.
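The article does not detail how the eBee planner lays out its flight lines. The sketch below covers one standard ingredient of aerial-mapping flight planning, under the assumption of a simple pinhole camera over flat ground. It computes the ground footprint and ground sampling distance (GSD) from sensor width, focal length, and altitude. It then spaces parallel "lawnmower" lines so that neighboring image strips overlap by a chosen fraction. The sensor, lens, and overlap values are hypothetical, not eBee specifications, and terrain following (essential on a mountain) is not modeled.

```python
import math

def footprint_width_m(sensor_width_mm, focal_length_mm, altitude_m):
    # Pinhole model: ground footprint = sensor width * altitude / focal length.
    return sensor_width_mm * altitude_m / focal_length_mm

def gsd_cm_per_px(sensor_width_mm, focal_length_mm, altitude_m, image_width_px):
    # Ground sampling distance: ground width covered by one pixel, in cm.
    return 100.0 * footprint_width_m(sensor_width_mm, focal_length_mm, altitude_m) / image_width_px

def plan_lines(area_width_m, sensor_width_mm, focal_length_mm, altitude_m, side_overlap):
    """Offsets (m) of parallel flight lines covering an area area_width_m wide.

    side_overlap is the fraction (0..1) by which adjacent image strips overlap.
    """
    spacing = footprint_width_m(sensor_width_mm, focal_length_mm, altitude_m) * (1.0 - side_overlap)
    n_lines = math.ceil(area_width_m / spacing) + 1  # include both edges of the area
    return [i * spacing for i in range(n_lines)]
```

With these assumed values (6.0 mm sensor width, 5.0 mm lens, 100 m altitude, 50% side overlap), the lines fall 60 m apart. A multi-drone mission could then split the resulting lines among the aircraft.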

Panoramic image shows multiple planets

NASA (Washington, DC, USA; www.nasa.gov) has produced a mosaic of images taken by the Cassini spacecraft. It is the first-ever natural-color image of Saturn, its moons and rings, Earth, Venus, and Mars. Cassini's Imaging Science Subsystem incorporates a CCD image sensor and two filter wheels, each with nine filters, which allow the camera to capture images at specific wavelengths of light.

The panorama was created by processing 141 wide-angle images. It spans 404,880 miles across Saturn and its inner rings, out to the outer E ring.

Because Earth appears so close to the sun as seen from Cassini, the spacecraft must wait for an opportune time to capture images of Earth. In this instance, the sun slipped behind Saturn from Cassini's point of view. This allowed the imaging system to capture Earth and its moon, along with Venus, Mars, and Saturn.

Linda Spilker, Cassini project scientist at NASA's Jet Propulsion Laboratory (Pasadena, CA, USA; www.jpl.nasa.gov) says that Cassini aims to study Saturn's system from as many angles as possible. "Beyond showing us the beauty of the Ringed Planet, data like these also improve our understanding of the history of the faint rings around Saturn and the way disks around planets form -- clues to how our own solar system formed around the sun," she says.

3D robotic vision system ices perfect cakes

A joint project between Scorpion Vision (Tordivel, Oslo, Norway; www.tordivel.com), Mitsubishi Electric (Tokyo, Japan; www.mitsubishielectric.com), and Quasar Automation (Ripon, UK; www.quasarautomation.com) has resulted in a 3D robotic vision system that adapts to the shape and surface profile of individual cakes to enable precise and consistent icing.

The system uses Tordivel's Scorpion Vision 3D Stinger Camera to scan the surface of the cake in less than 1 s. The 3D Stinger Camera housing contains two XCG-V60E cameras from Sony (Park Ridge, NJ, USA; www.sony.com), one 10 mW, 660 nm laser, and two 6 mm lenses. Once the camera system has scanned the cake's surface, the data is used to calculate the robot path. Along that path, the dispensing nozzle maintains the optimum distance from the surface, eliminating any potential for damage. The Quasar system uses a motor interfaced to the robot controller as an auxiliary axis to provide proportional control of the icing dispensing process.
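How the robot path is derived from the scan is not described beyond keeping the nozzle at an optimum distance. A minimal sketch of that idea, assuming the 3D scan has already been resampled into a regular height map, offsets each decoration waypoint vertically by a fixed standoff. The height-map layout, pixel pitch, waypoints, and standoff are hypothetical. A production system would also smooth the path and account for nozzle orientation.

```python
import numpy as np

def icing_path(height_map_mm, waypoints_px, pixel_pitch_mm, standoff_mm):
    """Turn a scanned height map into robot targets that hold a fixed nozzle standoff.

    height_map_mm: 2D array of surface heights (mm) from the 3D scan.
    waypoints_px: sequence of (row, col) pixel indices tracing the decoration.
    Returns an (N, 3) array of x, y, z targets in mm, in the height-map frame.
    """
    hm = np.asarray(height_map_mm, dtype=float)
    rows, cols = np.asarray(waypoints_px).T
    x = cols * pixel_pitch_mm
    y = rows * pixel_pitch_mm
    # Keep the nozzle a constant height above whatever the scan measured at each point.
    z = hm[rows, cols] + standoff_mm
    return np.column_stack([x, y, z])
```

Because z follows the measured surface point by point, a domed or uneven cake gets the same nozzle clearance everywhere. This is the property the text credits for preventing damage.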

The collaborative effort received the UK's 2013 Food Processing Award in the Robotics and Automation category. "With such accuracy the decorative icing is applied in a repeatable way. This allows the optimum time for the icing to set, which is often critical," says Jeremy Shinton of Mitsubishi.

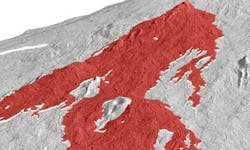

Laser scanning and 3D imaging shows lava flow patterns

Lava flows are difficult to study because of their dangerous nature. Satellite imagery, which is traditionally used to study the flows from above, often lacks the resolution and image quality needed to study them in detail, especially when clouds or trees obstruct the view.

To overcome these limitations, scientists from the University of Oregon (Eugene, OR, USA; www.uoregon.edu) have used airborne laser-scanning lidar to survey volcanoes and produce 3D models that recreate the internal structure of lava flows. With the lidar technique, the researchers can remove trees and other objects from the data, yielding high-resolution images for analysis.

Airplanes equipped with lidar scan the terrain, and the scans are then compiled into 3D models that recreate the structure of the flow. The data gathered will help hazard-mitigation teams prepare vulnerable communities for future lava flows. It may also add to the body of information these groups use to predict flow behavior, including the speed and direction of potentially damaging tributaries.
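The article does not say how the Oregon team separated vegetation from ground returns or measured changes in flow thickness. One common, crude approach is sketched here as an assumption, not as the team's workflow. The lidar points are gridded and the lowest return in each cell is kept, since canopy returns sit above the ground. A pre-flow elevation model is then subtracted from a post-flow one, so positive differences show where lava accumulated and their variation across the flow shows where it thickens or thins. The function names, grid parameters, and sample points are hypothetical.

```python
import numpy as np

def min_z_grid(points, cell_size, x0, y0, nx, ny):
    """Grid lidar returns, keeping the lowest elevation per cell as a crude bare-earth estimate.

    points: (N, 3) array of x, y, z returns. Cells with no returns are NaN.
    """
    pts = np.asarray(points, dtype=float)
    ix = np.floor((pts[:, 0] - x0) / cell_size).astype(int)
    iy = np.floor((pts[:, 1] - y0) / cell_size).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    grid = np.full((ny, nx), np.inf)
    # np.minimum.at is unbuffered, so several returns landing in one cell are all compared.
    np.minimum.at(grid, (iy[inside], ix[inside]), pts[inside, 2])
    grid[np.isinf(grid)] = np.nan
    return grid

def thickness_change(dem_after, dem_before):
    # Positive values: surface built up by the flow; negative: surface lowered.
    return np.asarray(dem_after, dtype=float) - np.asarray(dem_before, dtype=float)
```

Keeping the lowest return per cell fails under very dense canopy or on steep slopes. Dedicated ground-classification algorithms exist for those cases.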

"No one has ever tried to look at where the lava is going within the flow in terms of places where it is thickening versus thinning, so this is a more complete view," according to Hannah Dietterich, a graduate student at the University of Oregon. "It's not a cross section, and it's not an estimate on top of an old topographic map." The University of Oregon team's project will be published in an American Geophysical Union (Washington, DC, USA; www.agu.org) monograph on Hawaiian volcanoes this year.

Autonomous vehicles integrate machine vision

RoboCV (Moskovskaya Oblast, Russia; www.robocv.com) has developed X-MOTION, a machine vision system that enables warehouse vehicles to operate autonomously.

X-MOTION AUTOPILOT uses a video camera to capture shapes and features in the environment for vision-based navigation. In addition, laser scanners from SICK (Minneapolis, MN, USA; www.sickusa.com) and Omron (Schaumburg, IL, USA; www.omron247.com) acquire time-of-flight data. This data provides information on the layout of the environment and depth maps for navigation.
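The time-of-flight principle behind the laser scanners can be shown in a few lines. A light pulse travels to the target and back, so range is the speed of light times the round-trip time, divided by two. A planar scanner repeats this at stepped mirror angles, and each (angle, range) pair becomes a point in the scanner frame. This is a generic sketch. The function names are hypothetical, and it does not represent the SICK or Omron interfaces.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def tof_distance_m(round_trip_s):
    """Range from a pulse's round-trip time: it travels to the target and back."""
    return C * round_trip_s / 2.0

def sweep_to_points(ranges_m, start_angle_rad, step_rad):
    """Convert one planar scanner sweep (polar ranges) to (x, y) points in the scanner frame."""
    points = []
    for i, r in enumerate(ranges_m):
        a = start_angle_rad + i * step_rad
        points.append((r * math.cos(a), r * math.sin(a)))
    return points
```

A 20 ns round trip corresponds to about 3 m of range. This is why such scanners need sub-nanosecond timing to resolve centimeters.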

X-MOTION SERVER is integrated into the warehouse management system. It optimizes the distribution of work tasks among vehicles and reports system parameters as well as the location and work status of every vehicle. X-MOTION AUTOPILOT and SERVER run concurrently and exchange information about work tasks via Wi-Fi. This enables the autonomous vehicles to be integrated into an environment in which they operate alongside human operators.
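RoboCV does not say how X-MOTION SERVER optimizes task distribution. The simplest baseline for the problem is a greedy dispatcher that gives each task, in order, to the nearest idle vehicle. The sketch below implements that baseline under hypothetical inputs: vehicle positions and pickup points on a flat floor plan, with straight-line distance. It is not RoboCV's algorithm.

```python
import math

def assign_tasks(vehicles, tasks):
    """Greedy dispatch: each task, in order, goes to the nearest still-idle vehicle.

    vehicles: dict of vehicle name -> (x, y) position.
    tasks: list of (task_id, (x, y)) pickup points.
    Returns dict task_id -> vehicle name; tasks beyond the fleet size stay unassigned.
    """
    idle = dict(vehicles)
    plan = {}
    for task_id, pickup in tasks:
        if not idle:
            break
        best = min(idle, key=lambda v: math.dist(idle[v], pickup))
        plan[task_id] = best
        del idle[best]
    return plan
```

Greedy assignment can be far from optimal when tasks compete for the same vehicle. Minimizing total travel distance is a linear assignment problem, which the Hungarian algorithm solves exactly (for example, `scipy.optimize.linear_sum_assignment`).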

X-MOTION features an industrial computer based on an Intel Core i5/i7 processor. This runs RoboCV's proprietary 3D-PATH imaging software, which performs all the machine vision data processing for the X-MOTION AUTOPILOT system. It also features a guidance controller, which routes instructions from the software to the guidance system of the vehicle to control the vehicle's movements.

X-MOTION can be used on tow tractors, pallet lifters, high-rack stackers, and eventually, forklifts, reach trucks, and stackers. The first X-MOTION system was installed on six vehicles in a Samsung factory in Russia.

IR sounder images typhoon Haiyan

NASA's Atmospheric Infrared Sounder (AIRS) instrument aboard the Aqua spacecraft captured satellite images of Super Typhoon Haiyan as it approached the Philippines on November 7, 2013. The images offer a glimpse of one of the most powerful typhoons ever recorded. BAE Systems (London, UK; www.baesystems.com) designed the AIRS instrument to capture temperature profiles within the atmosphere. A scanning mirror rotates around an axis along the line of flight and directs infrared energy from the Earth into the instrument. As Aqua moves, the mirror sweeps the ground, creating a scan "swath" that extends roughly 500 miles (800 km) on either side of the ground track. The captured infrared data is transmitted from AIRS to the Aqua spacecraft, which relays it to the ground at a rate of 1.27 Mbps, according to NASA's Jet Propulsion Laboratory (Pasadena, CA, USA; www.jpl.nasa.gov).
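The swath and data-rate figures above support a quick back-of-the-envelope check. The swath extends about 500 miles on each side of the ground track, giving a total width of roughly 1,600 km. At 1.27 Mbps, and assuming the rate were sustained continuously for a full day, AIRS would produce about 13.7 GB of data per day in decimal units. The continuous-rate assumption is ours, and the function names are illustrative.

```python
MILES_TO_KM = 1.609344  # exact by definition of the international mile

def swath_width_km(half_width_miles):
    # The swath extends half_width_miles on BOTH sides of the ground track.
    return 2 * half_width_miles * MILES_TO_KM

def daily_volume_gbytes(rate_mbps, seconds=86_400):
    # Mbit/s * s = Mbit; divide by 8 for MB, then by 1000 for GB (decimal units).
    return rate_mbps * seconds / 8 / 1000
```

For 500 miles per side, `swath_width_km(500)` gives about 1,609 km. The per-side value of about 805 km matches the article's rounded 800 km.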
