Artificial Intelligence: Researchers add artificial intelligence to robotic systems

"Artificial Intelligence for Robotic Butlers and Surgeons" was the title of Dr. Pieter Abbeel's keynote presentation at this year's NI Week. In his presentation, Abbeel, an Assistant Professor at the University of California, Berkeley (Berkeley, CA, USA; www.cs.berkeley.edu), highlighted the current state of the art in machine learning techniques and how these could be deployed in semi-autonomous and autonomous systems.

In building such systems, developers may find that the software and software models needed to perform such autonomous learning prove more expensive to implement than the hardware itself. Hardware costs, in particular, have fallen sharply: in 2009, the PR2 Personal Robot cost $400,000, whereas such robots are now available from companies such as Rethink Robotics (Boston, MA, USA; www.rethinkrobotics.com) for approximately $30,000.

But how will such robots perform even the simplest tasks without the hand-coded vision and motor control algorithms used in today's automation systems? One approach is apprenticeship learning, in which a human demonstrates a task to the robotic system. If the task is demonstrated numerous times, a mathematical model describing it can be created, allowing the robotic system to perform the task autonomously.
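As a minimal sketch of the "mathematical model" idea, assuming each demonstration is recorded as a sequence of 3D gripper positions, the simplest possible model is a mean trajectory: every demonstration is resampled onto a common normalized time axis, the results are averaged, and the per-step standard deviation shows where the demonstrations disagree. The function names and sample data are hypothetical, and this is not the method presented in the keynote; real demonstrations vary in timing and are usually aligned with something like dynamic time warping rather than uniform resampling.

```python
import numpy as np


def resample(traj, num_points):
    """Linearly resample a (T, D) trajectory onto num_points samples
    spread evenly over a normalized time axis [0, 1]."""
    traj = np.asarray(traj, dtype=float)
    t_old = np.linspace(0.0, 1.0, len(traj))
    t_new = np.linspace(0.0, 1.0, num_points)
    return np.stack([np.interp(t_new, t_old, traj[:, d])
                     for d in range(traj.shape[1])], axis=1)


def learn_reference(demos, num_points=100):
    """Average several demonstrations into a mean trajectory and a
    per-step standard deviation (a deliberately crude task model)."""
    aligned = np.stack([resample(d, num_points) for d in demos])
    return aligned.mean(axis=0), aligned.std(axis=0)


# Two hypothetical demonstrations of the same reaching motion, recorded
# with different numbers of samples and a slight sideways offset.
demo_a = np.linspace([0.0, 0.0, 0.0], [1.0, 0.0, 0.0], 50)
demo_b = np.linspace([0.0, 0.2, 0.0], [1.0, 0.2, 0.0], 80)
mean, std = learn_reference([demo_a, demo_b])
```

Here the mean trajectory runs midway between the two demonstrations, and the standard deviation in the sideways direction (0.1) flags how much they disagree.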

One potential application is automated suturing in minimally invasive surgery. At present, tele-operated robotic systems such as the da Vinci system perform such tasks in master-slave mode, giving the surgeon complete control of the robot at all times. If such tasks could instead be performed autonomously, it would reduce the surgeon's fatigue while at the same time allowing the task to be performed faster and more precisely. In such applications, the vision-based robotic system must learn to tie a knot based on the data it has extracted from a similar demonstration.

However, the initial conditions of the task presented may not exactly match those of the demonstration. Because of this, the robot's grippers will have to follow a different trajectory from the one demonstrated. To accomplish this, a 3D warping function must be found that maps the features found in the demonstration scene onto the test scene. This warping function can then be applied to the demonstrated end-effector trajectory to generate the trajectory the robot follows in the test scene.
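The warping step can be sketched in a few lines, assuming the correspondences between demonstration and test features are already known and that only gripper positions (not orientations) are transferred. The sketch fits a 3D thin plate spline (TPS), a smooth non-rigid warp of the form f(x) = affine(x) + Σ w_i U(|x − x_i|) with the 3D kernel U(r) = −r, so that each demonstration feature lands exactly on its test-scene counterpart, and then applies f to the demonstrated waypoints. `fit_tps` and the sample points are hypothetical; a practical version would add a smoothing (regularization) term so that noisy features do not bend the warp excessively.

```python
import numpy as np


def fit_tps(src, dst):
    """Fit a 3D thin plate spline warp f with f(src[i]) == dst[i].

    src, dst: (n, 3) arrays of corresponding feature points in the
    demonstration and test scenes. Uses the 3D kernel U(r) = -r and needs
    at least four distinct, non-coplanar source points.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    # Kernel matrix built from pairwise distances between source points.
    K = -np.linalg.norm(src[:, None, :] - src[None, :, :], axis=2)
    P = np.hstack([np.ones((n, 1)), src])  # affine basis [1, x, y, z]
    # Block system [[K, P], [P^T, 0]] [w; a] = [dst; 0].
    A = np.zeros((n + 4, n + 4))
    A[:n, :n] = K
    A[:n, n:] = P
    A[n:, :n] = P.T
    b = np.zeros((n + 4, 3))
    b[:n] = dst
    coeffs = np.linalg.solve(A, b)
    w, a = coeffs[:n], coeffs[n:]

    def warp(points):
        """Map (m, 3) points from the demonstration into the test scene."""
        pts = np.atleast_2d(np.asarray(points, dtype=float))
        U = -np.linalg.norm(pts[:, None, :] - src[None, :, :], axis=2)
        return U @ w + np.hstack([np.ones((len(pts), 1)), pts]) @ a

    return warp


# Hypothetical features found in the demonstration scene ...
demo_pts = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0],
                     [0, 0, 1], [1, 1, 1], [0.5, 0.2, 0.8]], dtype=float)
# ... and the same features in the test scene, one of them displaced.
test_pts = demo_pts + [0.1, -0.05, 0.0]
test_pts[4] += [0.05, 0.0, -0.1]

# Demonstrated gripper waypoints, warped into the test scene.
demo_traj = np.linspace([0.0, 0.0, 0.5], [1.0, 1.0, 0.5], 20)
warp = fit_tps(demo_pts, test_pts)
test_traj = warp(demo_traj)
```

Because a TPS reproduces any affine map exactly, if the test features were only a rotated and translated copy of the demonstration features, the fitted warp would reduce to that rigid transform and move the trajectory rigidly; displacing individual features, as above, bends it non-rigidly.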

In his paper "A Case Study of Trajectory Transfer Through Non-Rigid Registration for a Simplified Suturing Scenario" (http://bit.ly/14gcfvD), Abbeel shows that Thin Plate Spline Robust Point Matching (TPS-RPM) can be used to recover such a warping function.
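Unlike a plain TPS fit, TPS-RPM does not assume the correspondences are known. As the method is generally described, it alternates between estimating soft correspondences between the warped demonstration points and the test-scene points and refitting the TPS to the resulting targets, while a temperature parameter is gradually lowered so that the matches sharpen. The minimal sketch below shows only a simplified soft-correspondence step (Gaussian match weights balanced by alternating row and column normalization); it omits the outlier handling of the full algorithm, the names and data are hypothetical, and it is not taken from the paper.

```python
import numpy as np


def soft_correspondences(warped_src, dst, temperature, sinkhorn_iters=50):
    """Simplified soft-assign step of robust point matching.

    warped_src: (n, 3) demonstration points after the current warp.
    dst: (m, 3) test-scene points.
    Returns an (n, m) matrix of soft match weights. Outlier handling of
    the full TPS-RPM algorithm is omitted, and the temperature is assumed
    not to be so small that a whole column underflows to zero.
    """
    d2 = ((warped_src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    # Subtracting each row's minimum only rescales rows, which the first
    # row normalization cancels exactly, but it avoids underflow in exp.
    M = np.exp(-(d2 - d2.min(axis=1, keepdims=True)) / temperature)
    for _ in range(sinkhorn_iters):
        M /= M.sum(axis=1, keepdims=True)  # make rows sum to 1
        M /= M.sum(axis=0, keepdims=True)  # make columns sum to 1
    return M


def virtual_targets(M, dst):
    """Match-weighted average of the test points for each source point;
    these serve as targets for the next TPS fit."""
    return (M @ dst) / M.sum(axis=1, keepdims=True)


# Hypothetical point sets: the test scene is the demonstration shifted slightly.
src = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
dst = src + 0.01
M = soft_correspondences(src, dst, temperature=0.05)
targets = virtual_targets(M, dst)
```

In a full loop, `targets` would be passed to a TPS fit, the demonstration points re-warped with the new fit, and the temperature lowered before the next iteration.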

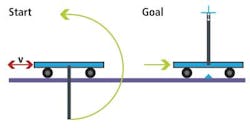

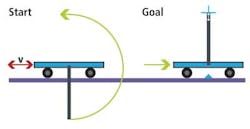

Apprenticeship learning techniques can bootstrap a robot's learning process, but ideally a robot would also be able to take it further on its own, exploring its environment and its own dynamics to improve its performance, or even learning to perform tasks it has never seen demonstrated. Such learning has proven rather challenging, yet some progress is being made, albeit for now on simpler tasks than those tackled with apprenticeship learning. One task within current reach is cart-pole swing-up, in which a cart on a rail can be accelerated forward or backward. A pendulum (the pole) attached to the cart swings passively back and forth as the cart moves, and the task is to move the cart in a way that swings the pole up into an upright position.
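To make the cart-pole task concrete, the sketch below simulates an idealized version, assuming a point-mass pendulum of length l on a frictionless rail, with the cart's acceleration a as the only control input, so that the pole angle θ (zero when upright) obeys θ'' = (g sin θ − a cos θ) / l. It pairs this with one of the simplest forms of self-directed learning: random-search hill climbing over the weights of a linear policy, keeping a random perturbation only if it earns more reward, where the reward is cos θ summed over time (largest when the pole is upright). Every constant and name is an assumption for illustration; this is not the method presented in the keynote, and a linear policy this simple is not guaranteed to achieve a full swing-up.

```python
import math
import random

G = 9.81      # gravity, m/s^2
L = 0.5       # pendulum length, m (assumed)
DT = 0.02     # integration step, s (assumed)
A_MAX = 10.0  # cart acceleration limit, m/s^2 (assumed)


def step(state, accel):
    """Advance (x, x_dot, theta, theta_dot) by one semi-implicit Euler step.

    theta = 0 is upright and theta = pi hangs straight down. The cart's
    acceleration is the control input; the pole swings passively.
    """
    x, x_dot, theta, theta_dot = state
    accel = max(-A_MAX, min(A_MAX, accel))
    theta_ddot = (G * math.sin(theta) - accel * math.cos(theta)) / L
    # Update velocities first, then positions with the new velocities.
    x_dot += accel * DT
    theta_dot += theta_ddot * DT
    x += x_dot * DT
    theta += theta_dot * DT
    return (x, x_dot, theta, theta_dot)


def policy(weights, state):
    """Hypothetical linear policy on simple features of the state."""
    x, x_dot, theta, theta_dot = state
    features = (math.sin(theta), math.cos(theta), theta_dot, x, x_dot)
    return sum(w * f for w, f in zip(weights, features))


def rollout(weights, steps=250):
    """Summed reward cos(theta) over one episode (+1 per step when upright)."""
    state = (0.0, 0.0, math.pi, 0.0)  # start hanging down, at rest
    total = 0.0
    for _ in range(steps):
        state = step(state, policy(weights, state))
        total += math.cos(state[2])
    return total


def hill_climb(iterations=200, sigma=0.5, seed=0):
    """Random-search hill climbing: keep a perturbation only if it helps."""
    rng = random.Random(seed)
    best_w = [0.0] * 5
    best_r = rollout(best_w)
    history = [best_r]
    for _ in range(iterations):
        candidate = [w + rng.gauss(0.0, sigma) for w in best_w]
        r = rollout(candidate)
        if r > best_r:
            best_w, best_r = candidate, r
        history.append(best_r)
    return best_w, best_r, history
```

With all weights at zero the cart never moves and the pole simply hangs, scoring about −250 per episode; `hill_climb()` then improves on that only through its own trial and error, with no demonstration involved.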

About the Author

Andy Wilson

Founding Editor

Founding editor of Vision Systems Design. Industry authority and author of thousands of technical articles on image processing, machine vision, and computer science.

B.Sc., Warwick University
