September 2017 snapshots: 3D microscopy, deep learning, thermal imaging, pattern matching

Microscope enables 3D viewing of objects

To allow team members working in different locations to view a 3D object simultaneously in virtual meeting rooms, Octonus (Moscow, Russia; www.octonus.com) developed the 3DDM, a flexible stereo 3D digital microscope.

Octonus' 3DDM is based on a Leica Microsystems (Wetzlar, Germany; www.leica-microsystems.com) M205a stereomicroscope, a standard Leica platform fitted with a few additions: an object holder mounted on a motorized stage, a custom LED illumination system, and a pair of industrial cameras from FLIR (formerly Point Grey; Richmond, BC, Canada; www.ptgrey.com). To create an image, an operator mounts a sample on the 3DDM's object holder and rotates it within the cameras' field of view using a standard input device such as a mouse, keyboard, or 3D joystick. According to FLIR, adjusting the cameras' focusing drive and the system's optical aperture enables the capture of 3D video images.

As the illuminated sample rotates, the cameras capture a live video stream. The microscope uses Grasshopper3 GS3-U3-23S6C-C color cameras, which feature the 2.3 MPixel Sony (Tokyo, Japan; www.sony.com) IMX174 global shutter CMOS image sensor with a 5.86 μm pixel size and frame rates of up to 163 fps. The cameras also feature a USB3 Vision interface and a C-mount lens thread.

A PC connected to the cameras stores the captured data in a compact video format, as either a 3D video stream or 2D/3D images, along with the complete set of data for every frame, including all the digital settings of the optical aperture, the lighting system, and the holder. Image analysis software running on the PC then measures object features with an accuracy of up to 10 μm. A combined 2D/3D mode also allows measurements to be made both in 3D space and along a projection plane through the object. The microscope's multi-functional LED light sources, which include NIR, UV, and darkfield illumination, provide several ways to illuminate a sample.
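A measurement accuracy quoted in micrometers ultimately rests on how large one sensor pixel appears on the object. As a rough sketch, assuming simple optical magnification and ignoring calibration, lens distortion, and subpixel interpolation, the object-space pixel size is the sensor pixel pitch divided by the total magnification. The function names and the magnification values are illustrative assumptions; the article does not describe how the 3DDM software actually performs its measurements.

```python
# IMX174 pixel pitch as quoted in the article.
IMX174_PIXEL_UM = 5.86


def object_space_pixel_um(sensor_pixel_um: float, magnification: float) -> float:
    """Width of one sensor pixel projected onto the object, in micrometers.

    Assumes a simple (hypothetical) total optical magnification and ignores
    lens distortion, calibration, and subpixel interpolation.
    """
    if magnification <= 0:
        raise ValueError("magnification must be positive")
    return sensor_pixel_um / magnification


def pixels_to_um(distance_px: float, sensor_pixel_um: float, magnification: float) -> float:
    """Convert a distance measured in image pixels to micrometers on the object."""
    return distance_px * object_space_pixel_um(sensor_pixel_um, magnification)
```

At a hypothetical total magnification of 2x, each 5.86 μm pixel would span about 2.93 μm on the sample, so a 10 μm feature would cover roughly 3.4 pixels.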

"By controlling the movement of the motorized object holder with a 3D joystick, it is possible to position any object with an accuracy of up to one micrometer. In addition, the details of the location of the object, movement trajectories, and the focusing and zooming parameters selected can all be saved on the PC for review later," said Dr. Andrey Lebedev, Octonus Project Leader.

He continued, "The ability of the Grasshopper3 cameras to output pixel data in a RAW16, mode 7 format at frame rates of 60 frames/sec coupled with their 1920 x 1200 resolution were important factors that led us to choose the cameras. The ability to automatically synchronize the capture of images from the two cameras using external hardware triggering from the PC was also a significant criterion that facilitated the development of the system."
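The combination of RAW16 output, 60 frames/sec, and 1920 x 1200 resolution has a direct bandwidth consequence. The back-of-the-envelope sketch below, which assumes 2 bytes per RAW16 pixel and ignores USB3 Vision protocol overhead, estimates the uncompressed payload each camera and the stereo pair must deliver to the PC. The function name is illustrative.

```python
def stream_bytes_per_second(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Uncompressed payload rate of one camera stream (ignores protocol overhead)."""
    return width * height * bytes_per_pixel * fps


# Figures quoted by Octonus: 1920 x 1200 pixels, RAW16 (assumed 2 bytes/pixel), 60 frames/sec.
per_camera = stream_bytes_per_second(1920, 1200, 2, 60)
stereo_pair = 2 * per_camera  # two cameras triggered together

print(f"per camera: {per_camera / 1e6:.2f} MB/s")   # per camera: 276.48 MB/s
print(f"stereo pair: {stereo_pair / 1e6:.2f} MB/s")  # stereo pair: 552.96 MB/s
```

Each stream works out to about 2.2 Gbit/s, below USB 3.0's 5 Gbit/s signaling rate, while the pair together delivers roughly 553 MB/s that the PC must receive and store.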

Octonus' 3DDM facilitates global team collaboration by using 3D TVs and stereo glasses to create a virtual meeting room, in which presenters can add annotated images or direct their colleagues' attention to important details by using the mouse cursor, shifting the image, zooming in and out, changing the frame rate, or adding and removing layers.

The 3DDM includes a C++ SDK that enables control of the system's lighting, optical unit, and holder, and allows existing image processing algorithms to be extended or supplemented. Octonus plans to increase the resolution of the microscope's 3D video images by upgrading from 2.3 MPixel cameras to cameras in the 5 to 12 MPixel range.

Computer vision and deep learning enhance drone capabilities

DJI's (Shenzhen, China; www.dji.com) latest mini drone, the Spark, which weighs less than a can of soda, features the Intel Movidius (San Mateo, CA, USA; www.movidius.com) Myriad 2 vision processing unit (VPU), which accelerates machine vision tasks such as object detection, 3D mapping, and contextual awareness through deep learning algorithms.

The Myriad 2 VPU, which the company calls the industry's first "always-on vision processor," features an architecture composed of a complete set of interfaces, a set of enhanced imaging/vision accelerators, a group of 12 specialized vector VLIW processors called SHAVEs, and an intelligent memory fabric that ties these processing resources together to enable power-efficient processing.

"To reach the impressive level of miniaturization seen in Spark, DJI looked to Intel Movidius chips for an innovative solution that reduces the size and weight of the drone," said Remi El-Ouazzane, vice president of New Technology Group and general manager of Movidius Group at Intel Corporation (Santa Clara, CA, USA; www.intel.com). "Our advanced Myriad 2 VPU combines processing of both traditional geometric vision algorithms and deep learning algorithms, meaning Spark not only has spatial awareness but contextual awareness as well."

DJI's Spark features a 1/2.3" CMOS image sensor that captures 12 MPixel images and shoots stabilized 1080p HD video. It uses the VPU to run computer vision and deep learning algorithms onboard at high speed, enabling sense-and-avoid, optical tracking, and gesture recognition capabilities.

The VPU enables several advanced features on the drone:

Face aware: When placed on the palm of the hand, Spark recognizes the user's face and knows when to take off, making the process of launching the drone seamless.

Gesture mode: Wave your hands to catch Spark's attention, move it with arm gestures, and let it know you're ready for the perfect shot by making a frame with your fingers.

Safe landing: Vision sensors placed on the underside detect and identify what is below to assist with a safe landing.

"Controlling a camera drone with hand movements alone is a major step towards making aerial technology an intuitive part of everyone's daily life, from work and adventure to moments with friends and family," said Paul Pan, Senior Product Manager at DJI. "Spark's revolutionary new interface lets you effortlessly extend your point of view to the air, making it easier than ever to capture and share the world from new perspectives."

Spark flies at speeds of up to 31 mph for up to 16 minutes at a time. It features quick-swap batteries and can also be charged via a micro-USB port. Its onboard processing includes obstacle avoidance and DJI's geofencing system, which keeps users from flying where they shouldn't. The Spark weighs just over the cutoff that requires registration with the Federal Aviation Administration, although a federal court has just ruled that registration does not apply to hobbyist drones.

Thermal imaging captures record-high temps in London tube

A thermal imaging camera has captured images showing temperatures exceeding 107°F (42°C) on the London tube during the hottest June day since 1976.

Two of the temperatures recorded on the tube, 95°F (35°C) at Bank station and 107°F (42°C) on the Central line, exceeded 86°F (30°C), which according to the EU is the temperature limit at which cattle can legally be transported. These temperatures came during a week-long heatwave that left commuters sweating on the tube.

Katie Panayi, 29, told the Evening Standard (London, UK; www.standard.co.uk): "I can't walk anywhere it's so hot. I'm about to go on a date and my make-up is literally melting off my face. I can tell you this heat is not healthy. It feels like I'm about to pass out and die. Last night I saw two elderly people completely collapse on the way home on the train because the air-con was broken. The poor lady was just lying on the floor but no one could move to help her."

Thermal images captured during the heatwave showed the temperatures in various parts of the train. The images were captured with a Caterpillar (Peoria, IL, USA; www.cat.com) S60 phone, a ruggedized smartphone that integrates a Lepton infrared camera from FLIR (Wilsonville, OR, USA; www.flir.com). The Lepton features an 80 x 60 uncooled VOx microbolometer array with a 17 μm pixel size. The 8.5 x 8.5 x 5.9 mm fixed-focus camera has a spectral range of 8 μm to 14 μm, a 50° horizontal field of view (FOV), and a thermal sensitivity of <50 mK. Lepton uses wafer-level detector packaging, wafer-level micro-optics, and a custom integrated circuit that supports all camera functions on a single low-power chip.
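The Lepton's 80 x 60 resolution limits how small a hot spot it can resolve from a distance. Assuming a flat target perpendicular to the camera and no lens distortion, the width covered by one pixel is the scene width, 2·d·tan(HFOV/2), divided by the 80 horizontal pixels. The sketch below uses the 50° figure quoted above; the function name is illustrative.

```python
import math


def pixel_footprint_m(distance_m: float, hfov_deg: float, h_pixels: int) -> float:
    """Approximate width of the scene seen by one pixel at a given distance.

    Assumes a flat target perpendicular to the optical axis and pixels spread
    evenly across the horizontal field of view (no lens distortion).
    """
    scene_width = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return scene_width / h_pixels


# Lepton figures from the article: 80 pixels across a 50 degree horizontal FOV.
for d in (0.5, 2.0, 5.0):
    print(f"{d} m -> {pixel_footprint_m(d, 50.0, 80) * 100:.1f} cm per pixel")
```

At 2 m each pixel covers roughly 2.3 cm of the scene, so a target only a few centimeters across at that distance is blended with its surroundings in the reading.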

Lepton can visualize heat that is invisible to the naked eye, as evidenced in the photos, and allows users to measure surface temperatures from a distance, detect heat loss around doors and windows, and spot moisture and missing insulation. The smartphone is waterproof to depths of up to 5 meters for one hour and also has a 13 MPixel underwater camera and a 5 MPixel front-facing camera. The camera also features FLIR's MSX (multispectral dynamic imaging) image processing enhancement, which adds visible-light detail to thermal images for extra perspective.
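FLIR's MSX internals are not described here, so the sketch below is a generic stand-in, not FLIR's algorithm: it extracts high-frequency detail from a visible image (the image minus a 3 x 3 box blur) and adds a scaled copy of that detail to the thermal image. It assumes both images are already registered and resampled to the same size, which a real two-camera phone must handle first; the function names and the `alpha` weight are hypothetical.

```python
from typing import List

Image = List[List[float]]


def box_blur3(img: Image) -> Image:
    """3x3 mean filter; edge pixels average only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def blend_edges(thermal: Image, visible: Image, alpha: float = 0.5) -> Image:
    """Add the high-frequency part of an aligned visible image to a thermal image.

    Both images must be the same size and already registered; output is unclamped.
    """
    blurred = box_blur3(visible)
    return [
        [t + alpha * (v - b) for t, v, b in zip(t_row, v_row, b_row)]
        for t_row, v_row, b_row in zip(thermal, visible, blurred)
    ]
```

Flat regions of the visible image contribute nothing, so the thermal values pass through unchanged; only edges and texture are overlaid.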

While cameras such as the Lepton can help identify potentially unsafe temperatures in places such as the London tube, they can't do much about broken air conditioning. For the sake of those who rely on the tube, let's hope it has since been fixed.

For more on 3D and non-visible imaging, embedded and mobile vision systems, and deep learning software, visit http://bit.ly/VSD-NEXT-GEN

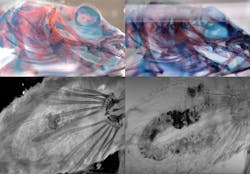

Computer vision aids prehistoric pottery project

Researchers at the University of South Carolina (Columbia, SC, USA; www.sc.edu) are developing software that automatically matches prehistoric pottery fragments with whole designs as part of a collaboration among the College of Arts and Sciences' Center for Digital Humanities, the S.C. Institute of Archaeology and Anthropology, the College of Engineering and Computing, and the Research Computing Center.

Funded by grants from the National Science Foundation and the National Park Service, the project aims to uncover how people interacted and traveled in the Southeast more than 1,500 years ago. According to the University of South Carolina, Native Americans carved designs into wooden paddles and stamped them onto pottery during what is known as the Woodland period, which ran from about 100 to 900 AD. Fragments of this "Swift Creek pottery," called sherds or potsherds, have been found throughout the southern United States, mainly in Georgia, northern Florida, parts of western South Carolina, and eastern Alabama.

"Pottery paddles are like fingerprints in a way," says archaeologist Karen Smith, director of the Institute of Archaeology and Anthropology's Applied Research Division. "At the most basic level, they are indicators of individual artists. Some sherds in middle Georgia were impressed with the same paddles as sherds in Florida. That's almost unprecedented in our field that we can see people, paddles and movement."

Swift Creek pottery has garnered interest from archaeologists because of its prevalence in the region and the opportunity it presents to track human interaction and migration. In the 1940s, it was discovered that complete paddle designs could be reconstructed from sherds and that certain designs were widely distributed across the region. Archaeologist Bettye Broyles and naturalist Frankie Snow reconstructed about 900 paddle designs from dozens of sites using pattern matching techniques, but the work proved tedious.

"We lack efficient methods for combing through the volumes of material from Swift Creek sites that reside in curation facilities across the Southeast," said Smith. "Finding sherds that match a particular known design is akin to finding a proverbial needle in a haystack."

To speed up the process, a collaboration was initiated with Colin Wilder of the Center for Digital Humanities; Song Wang, a professor in the College of Engineering and Computing; and Jun Zhou of the Research Computing Center. Using digital imaging and analysis technologies, the group is developing algorithms and software that will automatically match patterns between sherds and designs.

"We are speeding up a task and making it hundreds of times faster," Wilder says. "This is a task that is tedious and difficult to do, and there are large collections of unprocessed pottery. There is a huge mystery out there. What is the actual story of these people and their movements? It's within the realm of the possible to find out."

In many machine vision applications, one of the most important steps is locating an object of interest within the camera's field of view, a task that can be accomplished with pattern matching software. One of the earliest approaches to pattern matching is correlation, in which a template of a known good image is stepped over the captured image and a pixel-by-pixel comparison is made at each region.
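The correlation approach can be sketched in a few lines. This version uses normalized cross-correlation, a common variant that subtracts each window's mean and divides by its spread so that uniform brightness or contrast changes do not affect the score; the function names and the list-of-lists image format are assumptions for illustration. It is a brute-force search; practical tools commonly accelerate it, for example with image pyramids or FFT-based correlation.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def ncc(image: Image, template: Image, top: int, left: int) -> float:
    """Normalized cross-correlation between the template and one image region."""
    th, tw = len(template), len(template[0])
    region = [image[top + y][left + x] for y in range(th) for x in range(tw)]
    tmpl = [template[y][x] for y in range(th) for x in range(tw)]
    n = len(tmpl)
    mr, mt = sum(region) / n, sum(tmpl) / n
    num = sum((r - mr) * (t - mt) for r, t in zip(region, tmpl))
    den = math.sqrt(sum((r - mr) ** 2 for r in region) * sum((t - mt) ** 2 for t in tmpl))
    return num / den if den > 0 else 0.0  # a flat region carries no pattern


def match_template(image: Image, template: Image) -> Tuple[int, int, float]:
    """Step the template over every position; return (row, col, score) of the best match."""
    th, tw = len(template), len(template[0])
    best = (0, 0, -2.0)  # NCC lies in [-1, 1], so any real score beats this
    for top in range(len(image) - th + 1):
        for left in range(len(image[0]) - tw + 1):
            score = ncc(image, template, top, left)
            if score > best[2]:
                best = (top, left, score)
    return best
```

A score of 1.0 means the region is an exact (brightness- and contrast-scaled) copy of the template; scores fall toward 0 or below as the region diverges.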

Other methods include texture-based pattern matching and the use of multiple templates. The CVB Polimago pattern matching tool from Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.com), for example, first extracts characteristics from a template and saves them in a model. It then automatically generates artificial views of the template at different scales, rotations, and angles to reproduce the various positions of the scene that may be encountered.
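The idea of generating artificial views can be illustrated generically. The sketch below does not use or reproduce the CVB Polimago API; it simply renders rotated and scaled copies of a template by inverse mapping with nearest-neighbour sampling, producing a bank of views that a matcher, such as a correlation search, could test one by one. The function names are hypothetical, and only in-plane rotation and uniform scaling are covered, not tilted viewing angles.

```python
import math
from typing import Dict, Iterable, List, Tuple

Image = List[List[float]]


def transform_view(template: Image, angle_deg: float, scale: float, fill: float = 0.0) -> Image:
    """Render a rotated and scaled copy of the template on a same-size canvas.

    Each output pixel is mapped back into the source (inverse mapping) about the
    template centre and sampled with nearest neighbour; misses become `fill`.
    """
    h, w = len(template), len(template[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = (x - cx) / scale, (y - cy) / scale
            sx = cos_a * dx + sin_a * dy + cx
            sy = -sin_a * dx + cos_a * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= iy < h and 0 <= ix < w:
                out[y][x] = template[iy][ix]
    return out


def synthetic_views(
    template: Image, angles: Iterable[float], scales: Iterable[float]
) -> Dict[Tuple[float, float], Image]:
    """Build a bank of artificial views keyed by (angle, scale)."""
    scales = list(scales)
    return {(a, s): transform_view(template, a, s) for a in angles for s in scales}
```

A matcher would score each view against the scene and report the (angle, scale) key of the best one, trading extra computation for tolerance to rotated or resized objects.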

This summer, Smith and her graduate students will travel to various Swift Creek pottery collections in Georgia to scan pottery sherds and begin building a database of images. Along with data processing algorithms, the team will use a 3D scanner to make matches, said Wang, whose research expertise is in computer vision and image processing.

The algorithms will extract patterns from an individual sherd, identify the underlying design, and ultimately connect it with a corresponding reconstructed whole design. Because the paddles were applied to pottery multiple times to imprint designs, the task presents a challenge that Wang hopes to overcome by developing new algorithms.

"Solving this problem will advance and extend the basic computer vision research on shape and partial shape matching, which have been studied for a long time in the computer vision field," Wang says.
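The idea of partial matching can be illustrated with a toy example. A sherd carries only a fragment of a design, so a match should reward agreement where the sherd has evidence and ignore areas that are broken away or eroded. The sketch below assumes a hypothetical representation in which each sherd and reconstructed design is a small binary grid (1 for an impressed line, 0 for background, None for unknown on the sherd); the USC team works from 3D scans, and its actual algorithms are not described here. The sketch also ignores rotation, vessel curvature, and overlapping stamps.

```python
from typing import Dict, List, Optional, Tuple

Design = List[List[int]]
Sherd = List[List[Optional[int]]]  # None marks cells with no surviving evidence


def placement_score(design: Design, sherd: Sherd, top: int, left: int) -> float:
    """Fraction of the sherd's known cells that agree with the design at this offset."""
    agree = known = 0
    for y, row in enumerate(sherd):
        for x, cell in enumerate(row):
            if cell is None:
                continue  # broken or eroded area: neither supports nor contradicts
            known += 1
            agree += int(design[top + y][left + x] == cell)
    return agree / known if known else 0.0


def best_design(designs: Dict[str, Design], sherd: Sherd) -> Tuple[str, int, int, float]:
    """Try every design at every offset; return (name, row, col, score) of the best fit."""
    best = ("", -1, -1, -1.0)
    sh, sw = len(sherd), len(sherd[0])
    for name, design in designs.items():
        for top in range(len(design) - sh + 1):
            for left in range(len(design[0]) - sw + 1):
                score = placement_score(design, sherd, top, left)
                if score > best[3]:
                    best = (name, top, left, score)
    return best
```

Because unknown cells are skipped rather than counted as mismatches, a small, well-preserved fragment can still score a perfect match against its parent design.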

The team has also used high-performance computing clusters to bring processing time down from hours to minutes.

The team chose computer vision algorithms, it said, because the paddle designs are not random but follow certain regularities and rules.

"Through this project, we can begin to map out how creativity in this artistic system changed through time, as well as when, where and how designs were copied," Smith says.

Ultimately, the goal of the project is to create a website where archaeologists and other interested individuals can upload photos of sherds, access the pattern-matching technology and identify pieces right away, according to Wilder.